An effective campaign can help increase a company's revenue by boosting sales of its products, clearing out excess stock, bringing in more customers, and helping introduce new products. Campaigns can include sales promotions, coupons, rebates, and seasonal discounts through offline or online channels.

Therefore, it is essential to plan campaigns meticulously to ensure their effectiveness. It is equally important to analyze the impact of the campaigns on sales performance. A business can learn from past campaigns and improve its future sales promotions through efficient analysis.

Problem and motivation:

Analyzing historical data and making accurate machine learning predictions for marketing campaigns can be quite challenging. Each campaign has its own unique set of metrics, so analytical approaches must be adapted to each case. Moreover, campaigns may use different data sources and platforms, resulting in a wide variety of data formats and structures. Consolidating data from these diverse sources into a unified dataset can be daunting and requires thorough data integration and cleansing. It is essential to ensure data accuracy, align time frames, and engineer relevant features for prediction, all of which add to the complexity of the analytical task.

Solution:

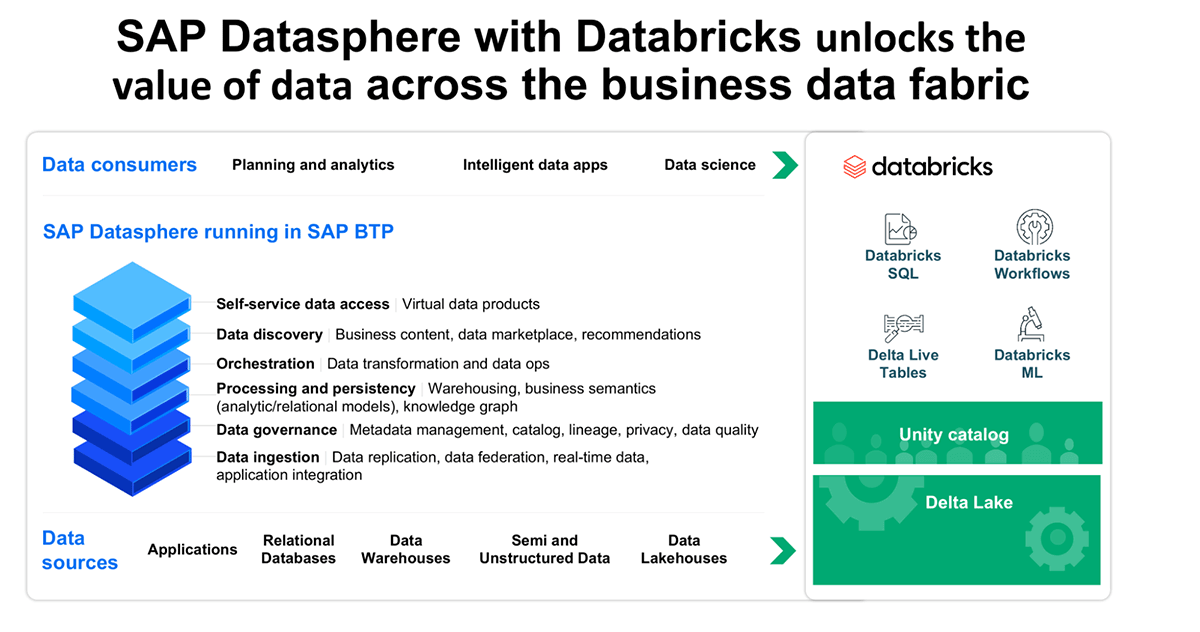

This is where a solution supporting the business data fabric architecture can make it all easier and more efficient.

SAP Datasphere is a comprehensive data service built on SAP BTP and is the foundation for a business data fabric architecture: an architecture that helps integrate business data from disjointed and siloed data sources with semantics and business logic preserved. With SAP Datasphere analytic models, data from these multiple sources can then be harmonized without the need for data duplication. Traffic metrics, which include clicks and impressions, are among the key measures of campaign effectiveness. It is important to have an efficient way to stream, collect, and consolidate all the metrics around campaigns.

The Databricks Data Intelligence Platform allows organizations to effectively manage, use, and access all their data and AI. The platform is built on the lakehouse architecture, with a unified governance layer across data and AI and a single unified query engine that spans ETL, SQL, machine learning, and BI. It combines the best elements of data lakes and data warehouses to help reduce costs and deliver data and AI initiatives faster. The cherry on top is Unity Catalog, which empowers organizations to efficiently manage their structured and unstructured data, machine learning models, notebooks, dashboards, and files across various clouds and platforms. Through Unity Catalog, data scientists, analysts, and engineers gain a secure platform for discovering, accessing, and collaborating on reliable data and AI assets. This integrated governance approach accelerates both data and AI initiatives while ensuring regulatory compliance in a streamlined manner.

Together, SAP Datasphere and the Databricks Platform help bring value to business data through advanced analytics, prediction, and comparison of sales against campaign spend for the use case of 360° campaign analysis.

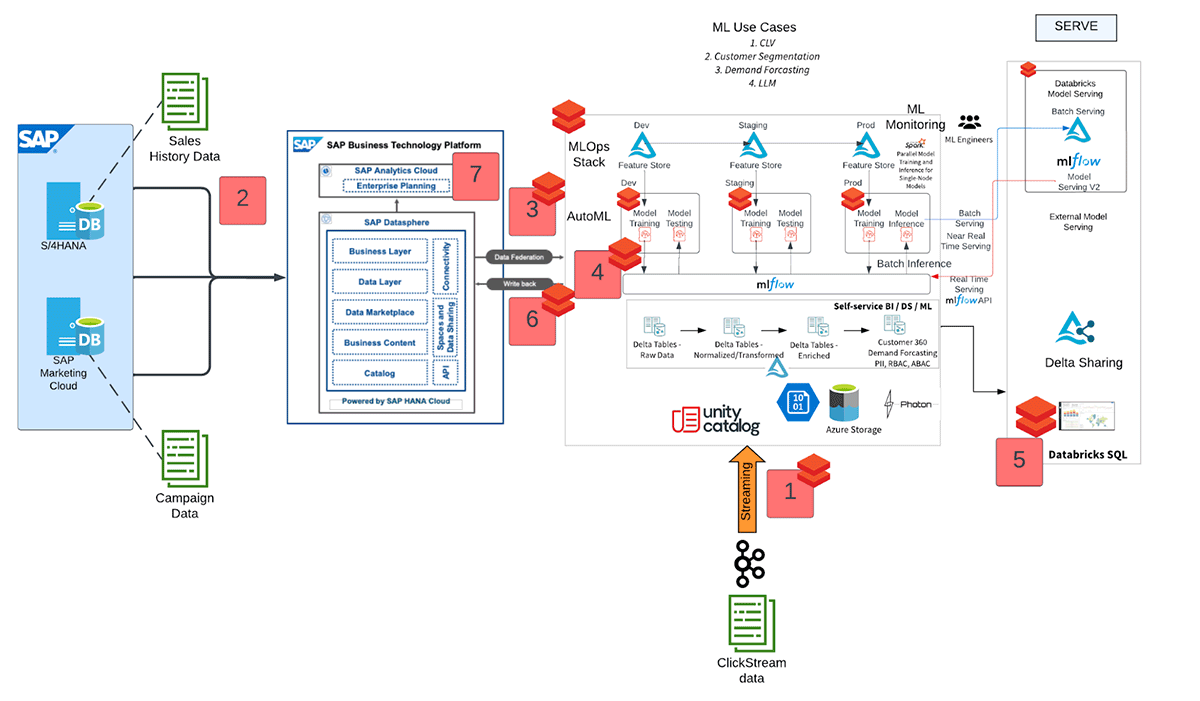

Three data sources fuel our campaign analysis:

a. Sales History Data: This data originates from SAP S/4HANA financials, providing a rich source of historical sales information.

b. Campaign Data: Sourced from the SAP Marketing Cloud system, this data offers insights into different marketing campaign strategies and outcomes.

c. Clickstream Data: Streaming in via Kafka into Databricks, clickstream data captures real-time user interactions, enhancing our analysis.

Let's explore the comprehensive analysis of campaign data spanning two distinct platforms, leveraging the strengths of each. SAP Datasphere unifies data from various SAP systems. Databricks, with its powerful machine learning capabilities, predicts campaign effectiveness, and Databricks SQL crafts insightful visualizations to enhance our understanding.

1. Stream and collect clickstream data

Structured Streaming, which is native to Databricks via Apache Spark™, integrates seamlessly with Apache Kafka. Using the Kafka connector, we can stream clickstream data into Databricks in real time for analysis. Here is a code snippet that connects to and reads from a Kafka topic; the detailed documentation covers the remaining options.

df = (spark.readStream

.format("kafka")

.possibility("kafka.bootstrap.servers", "<server:ip>")

.possibility("subscribe", "<subject>")

.possibility("startingOffsets", "newest")

.load()

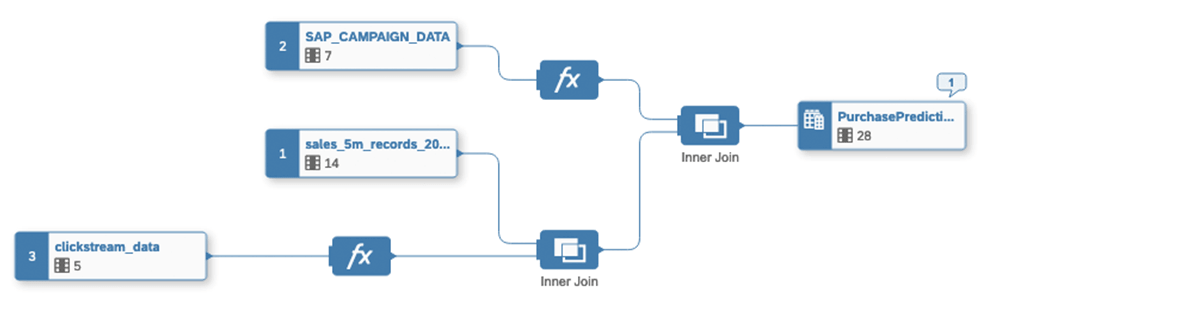

)2. Combine and harmonize information with SAP Datasphere analytic fashions

SAP Datasphere's unified multi-dimensional analytic model helps virtually combine sales and campaign data from SAP sources with clickstream data from Databricks, without duplication. For information on how to connect the various SAP sources to SAP Datasphere to access sales history and campaign data, please refer to the SAP Datasphere documentation.

SAP Datasphere can federate queries live against the source systems while retaining the flexibility to persist and snapshot the data in SAP Datasphere if needed. The analytic models are deployed to create the runtime artifacts in SAP Datasphere and can be consumed by SAP Analytics Cloud and by the Databricks Platform through SAP FedML.

3. Federate data using SAP FedML into Databricks Platform for sales predictions

Using the SAP FedML Python library for Databricks, data can be federated into Databricks from SAP Datasphere for machine learning scenarios. This Python library (FedML) is installed from the PyPI repository. The biggest advantage is that the SAP FedML package has a native implementation for Databricks, with methods like “execute_query_pyspark('query')” that execute SQL queries and return the fetched data as a PySpark DataFrame. Here is the code snippet; for detailed steps, see the attached notebook.

    from fedml_databricks import DbConnection

    # Create the DbConnection using config_json, which carries the SAP
    # Datasphere connection information.
    db = DbConnection(dict_obj=config_json)

    # Run the query in SAP Datasphere and fetch the result as a PySpark DataFrame.
    df_sales = db.execute_query_pyspark('SELECT * FROM "SALES"."SALES_ORDERS"')

4. AI on Databricks

AI Application Architecture

Databricks has several machine learning tools to help streamline and productionalize ML applications built on top of data sources such as SAP. The first tool used in our application is feature engineering in Unity Catalog, which provides feature discoverability and reuse, enables feature lineage, and mitigates online/offline skew when serving ML models in production.

Additionally, our application uses the Databricks-managed version of MLflow to track model iterations and model metrics through Experiments. The Feature Engineering client supports registering the best model to the Model Registry in a “feature-aware” fashion, enabling the application to look up features at runtime. The Feature Engineering client also contains a batch scoring method that invokes the same preprocessing steps used during training before performing inference. All of these features work together to create robust ML applications that are easy to put into production and maintain. Databricks also integrates with Git repositories for end-to-end code and model management through MLOps best practices.
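To make the “feature-aware” idea concrete, here is a minimal pure-Python sketch. It does not use the Databricks Feature Engineering client: `FeatureTable`, `FeatureAwareModel`, the column names, and the toy weights are all hypothetical stand-ins. It only illustrates how bundling the feature lookup with the model lets callers score with keys alone, so training and batch scoring share one preprocessing path.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, str]  # (date, region): hypothetical primary key of the feature table


@dataclass
class FeatureTable:
    """Hypothetical stand-in for a governed feature table keyed by (date, region)."""
    rows: Dict[Key, Dict[str, float]]

    def lookup(self, key: Key, names: List[str]) -> List[float]:
        row = self.rows[key]
        return [row[name] for name in names]


@dataclass
class FeatureAwareModel:
    """Bundles a predictor with the feature names it was trained on."""
    table: FeatureTable
    feature_names: List[str]
    predict_fn: Callable[[List[float]], float]

    def score_batch(self, keys: List[Key]) -> List[float]:
        # Features are fetched through the same lookup used at training time,
        # so there is no separate hand-written preprocessing that could drift.
        return [
            self.predict_fn(self.table.lookup(key, self.feature_names))
            for key in keys
        ]


table = FeatureTable(rows={
    ("2024-01-01", "EMEA"): {"clicks": 120.0, "campaign_active": 1.0},
    ("2024-01-02", "EMEA"): {"clicks": 80.0, "campaign_active": 0.0},
})

# Toy linear predictor with made-up weights, for illustration only.
model = FeatureAwareModel(
    table=table,
    feature_names=["clicks", "campaign_active"],
    predict_fn=lambda x: 10.0 + 0.5 * x[0] + 20.0 * x[1],
)

# Callers pass only keys; the model resolves its own features.
predictions = model.score_batch([("2024-01-01", "EMEA"), ("2024-01-02", "EMEA")])
print(predictions)
```

The design point is that the caller never assembles feature vectors by hand, which is the property the managed client is described as providing.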

Feature and Model Details

The goal of our model is to predict unit sales based on currently running marketing campaigns and website traffic, and to analyze the impact of the campaigns and traffic on sales. The initial data is derived from orders, clickstream website interactions, and marketing campaign data. All data was aggregated by day per item type and region so that forecasts could be performed at the daily level. The joined data is stored as a feature table, and a linear regression model is trained. This model was logged and tracked using MLflow and the Feature Engineering client. The model coefficients were extracted to analyze the impact of website traffic and marketing campaigns on sales.
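The aggregation step described above could look roughly like the following pure-Python sketch. The event fields (`ts`, `item_type`, `region`, `event`), the sample records, and the `build_feature_rows` helper are assumptions made for illustration; the notebooks themselves work on PySpark DataFrames rather than Python lists.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw records; field names are assumptions, not the real schema.
clickstream = [
    {"ts": "2024-01-01T09:15:00", "item_type": "shoes", "region": "EMEA", "event": "click"},
    {"ts": "2024-01-01T09:16:00", "item_type": "shoes", "region": "EMEA", "event": "impression"},
    {"ts": "2024-01-01T18:40:00", "item_type": "shoes", "region": "EMEA", "event": "click"},
    {"ts": "2024-01-02T11:05:00", "item_type": "shoes", "region": "EMEA", "event": "impression"},
]
orders = [
    {"ts": "2024-01-01T10:00:00", "item_type": "shoes", "region": "EMEA", "units": 3},
    {"ts": "2024-01-01T19:30:00", "item_type": "shoes", "region": "EMEA", "units": 2},
    {"ts": "2024-01-02T12:00:00", "item_type": "shoes", "region": "EMEA", "units": 1},
]
# Days on which a campaign ran for a given (day, item_type, region).
campaign_days = {("2024-01-01", "shoes", "EMEA")}

EVENT_COLUMNS = {"click": "clicks", "impression": "impressions"}


def day_key(record):
    """Truncate the timestamp to a calendar day and build the grouping key."""
    day = datetime.fromisoformat(record["ts"]).date().isoformat()
    return (day, record["item_type"], record["region"])


def build_feature_rows(clickstream, orders, campaign_days):
    """Aggregate all three sources to one row per (day, item_type, region)."""
    rows = defaultdict(lambda: {"clicks": 0, "impressions": 0, "units": 0})
    for event in clickstream:
        column = EVENT_COLUMNS.get(event["event"])
        if column is not None:  # ignore event types we do not model
            rows[day_key(event)][column] += 1
    for order in orders:
        rows[day_key(order)]["units"] += order["units"]
    for key, row in rows.items():
        row["campaign_active"] = 1 if key in campaign_days else 0
    return dict(rows)


feature_rows = build_feature_rows(clickstream, orders, campaign_days)
for key in sorted(feature_rows):
    print(key, feature_rows[key])
```

Grouping every source by the same (day, item type, region) key is what aligns the time frames, so the three datasets can be joined into a single feature table.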

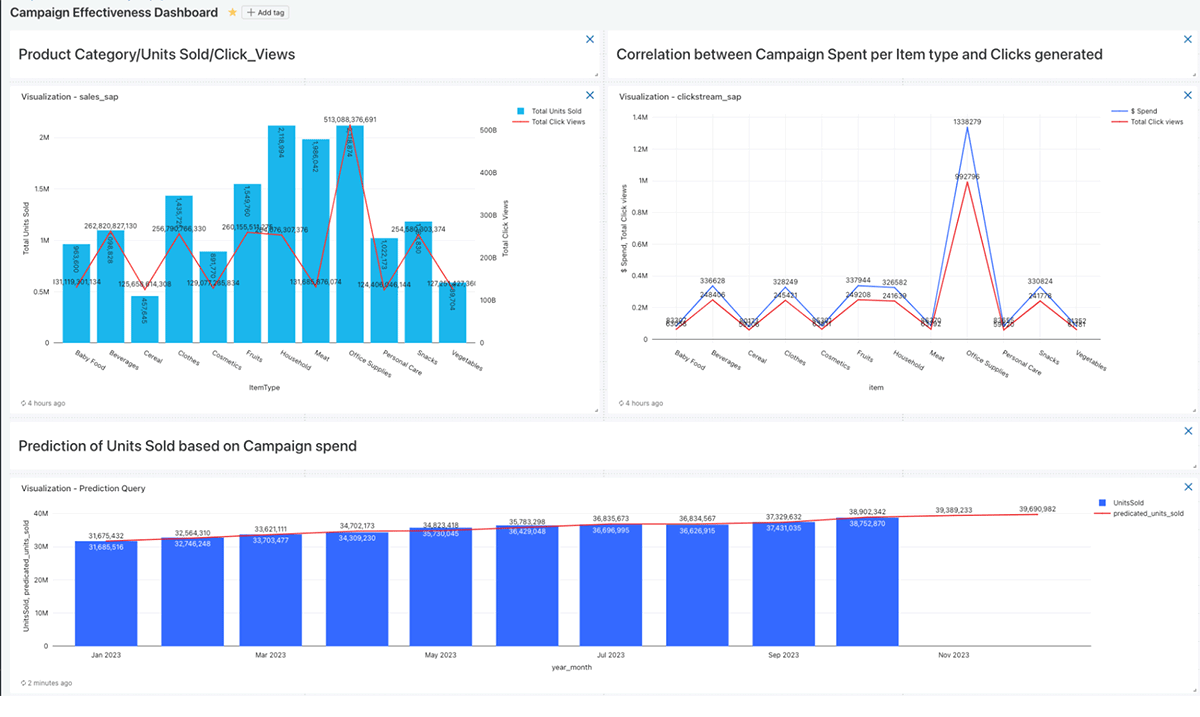

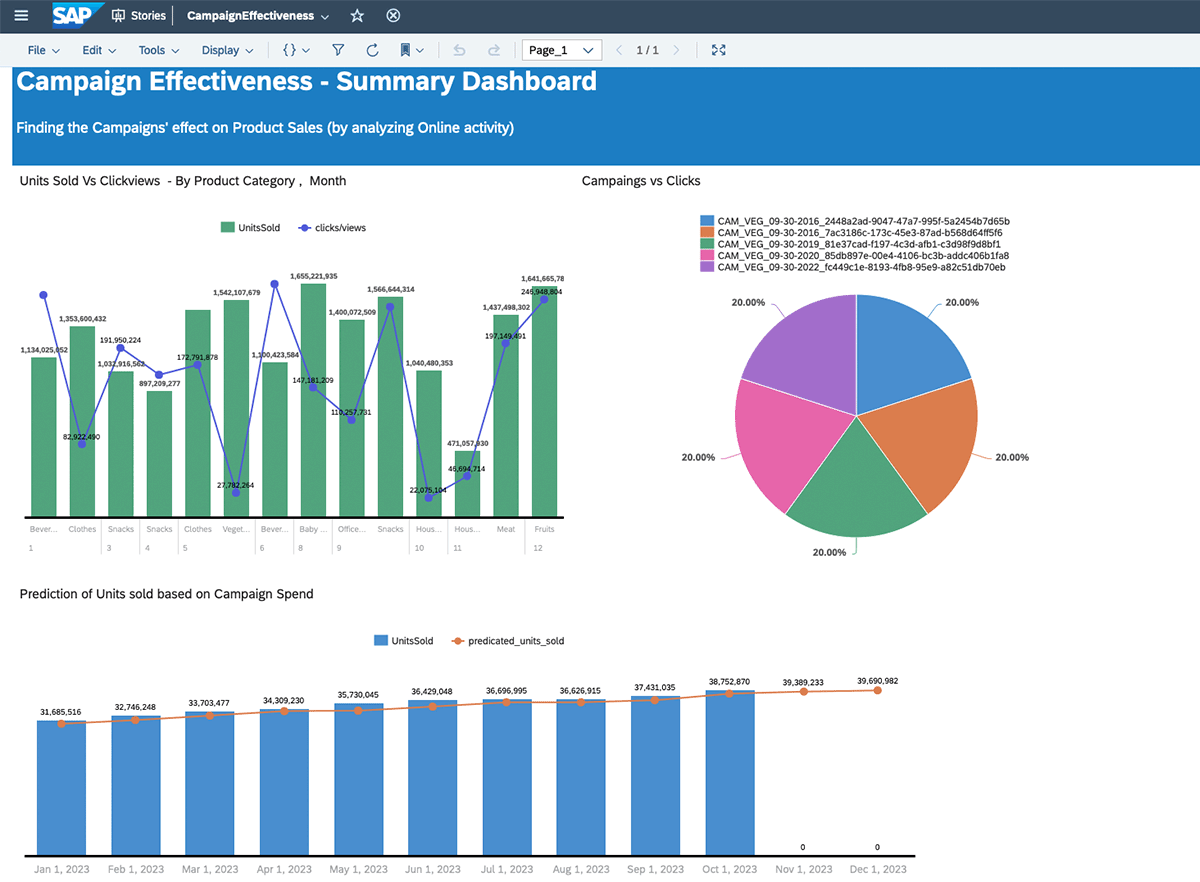
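As a rough illustration of how regression coefficients expose the impact of traffic and campaigns, the sketch below fits ordinary least squares on a tiny synthetic dataset using only the standard library. The data, the two feature columns, and the helper names are assumptions for this example; the article does not say which library trained the actual model, and a production pipeline would normally use an ML library rather than a hand-written solver.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def fit_linear_regression(features, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    design = [[1.0] + list(row) for row in features]  # leading 1.0 is the intercept
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(design, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic daily rows: (clicks, campaign_active) -> units sold.
# Generated from units = 5 + 0.1 * clicks + 20 * campaign_active, with no noise.
features = [(100, 0), (200, 0), (150, 1), (300, 1), (250, 0)]
targets = [15.0, 25.0, 40.0, 55.0, 30.0]

intercept, per_click, campaign_lift = fit_linear_regression(features, targets)
print(f"intercept={intercept:.2f} per_click={per_click:.3f} campaign_lift={campaign_lift:.2f}")
```

Read this way, `per_click` estimates the additional units sold per extra click, and `campaign_lift` estimates the additional daily units on days when a campaign is active, holding traffic constant.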

5. Visualization in Databricks SQL:

Databricks SQL stands out as a rapidly evolving data warehouse, featuring robust dashboard capabilities for versatile visualizations. You can customize and create impressive dashboards, moving seamlessly from historical data analysis to predictive insights once the ML analysis in the previous step is done. We recently announced Lakeview Dashboards (blog), which offer major advantages over their previous-generation counterparts. They boast improved visualizations and render up to 10 times faster. Lakeview is optimized for sharing and distribution, including with users in the organization who may not have direct access to Databricks. It simplifies design with a user-friendly interface and built-in datasets. Moreover, it is unified with the platform, which provides built-in lineage information for enhanced governance and transparency.

6. Bring results back to SAP Datasphere and compare sales against campaign metrics and sales predictions

SAP FedML also provides the ability to write the prediction results back to SAP Datasphere, where the results can be laid against the SAP historical sales data and SAP campaign master data seamlessly in the unified semantic model.

After the EDA and ML analysis has been performed on the DataFrames, the inference result can be stored in SAP Datasphere.

Using the create_table API call, we can create a table in SAP Datasphere.

    # Adjacent string literals are concatenated, so the DDL stays one valid Python string.
    db.create_table(
        "CREATE TABLE DS_SALES_PRED (CUSTOMERNAME Varchar(20), "
        "PRODUCTLINE Varchar(20), STATUS Varchar(20), PRODUCTCODE Varchar(20), "
        "SALES FLOAT, Predicted_SALES FLOAT)"
    )

Write the prediction results to the DS_SALES_PRED table in SAP Datasphere using the insert_into_table method. Here, ml_output_dataframe is the output of the ML model predictions. Check the detailed machine learning notebook for more information.

    db.insert_into_table('DS_SALES_PRED', ml_output_dataframe)

7. Visualization in SAP Analytics Cloud

This unified model is exposed to SAP Analytics Cloud, which helps business users perform efficient analytics through its powerful self-service tools and visualization capabilities, infused with AI-driven smart insights.

Summary:

The Databricks Data Intelligence Platform and SAP Datasphere complement each other and can work together to solve complex business problems. For instance, predicting and analyzing marketing campaign effectiveness is essential for businesses, and both platforms can help accomplish it. SAP Datasphere can aggregate data from SAP's critical systems, such as SAP S/4HANA and SAP Marketing Cloud, while the Databricks Platform can integrate with SAP Datasphere and ingest data from many different sources. Additionally, Databricks provides powerful tools for AI, which can help with predictive analytics for marketing campaign data. The in-depth analysis and visualization can be done using Databricks SQL Lakeview dashboards.

Check the detailed notebooks:

- Data engineering notebook: This notebook covers using SAP FedML to bring in data from SAP Datasphere and modeling all the datasets (Campaign + Sales + Clickstream).

- Machine learning notebook: This notebook covers machine learning on Databricks, including MLflow, Feature Store, and model training and prediction.