As companies develop, information volumes scale from GBs to TBs (or extra), and latency calls for go from hours to minutes (or much less), making it more and more costlier to supply contemporary insights again to the enterprise. Traditionally, Python and Scala information engineers have turned to streaming to fulfill these calls for, effectively processing new information in real-time, however analytics engineers who wanted to scale SQL-based dbt pipelines did not have this feature.

Not! This weblog seeks as an example how we are able to use the brand new Streaming Tables and Materialized Views on Databricks to ship contemporary, real-time insights to companies with the simplicity of SQL and dbt.

Background

On the 2023 Information + AI Summit, we launched Streaming Tables and Materialized Views into Databricks SQL. This superior functionality gave Databricks SQL customers easy accessibility to highly effective new desk materializations first launched inside Delta Stay Tables, permitting them to incrementalize massive queries, stream straight from occasion information sources and extra.

Along with natively utilizing Streaming Tables and Materialized Views inside a Databricks atmosphere, additionally they work for dbt customers on Databricks. dbt-databricks has turn into some of the in style methods to construct information fashions on Databricks, leveraging the entire highly effective capabilities of Databricks SQL, together with the Photon compute engine, immediately scaling Serverless SQL Warehouses and the Unity Catalog governance mannequin, with the ubiquity of dbt’s transformation framework.

What’s modified in dbt-databricks?

As of dbt v1.6+, dbt-databricks has developed in three key aspects:

- New materializations: “streaming_table” and “materialized_view”

- New syntax to learn straight from cloud information storage with out staging your sources as a desk

- Entry to superior streaming ideas resembling window aggregations, watermarking and stream-stream joins

Notice: Preserve an eye fixed out for the upcoming dbt v1.7.3 launch which can additional refine the above capabilities!

Let’s check out how we are able to use these new options with the Airline Journeys demo.

The Airline Journeys demo

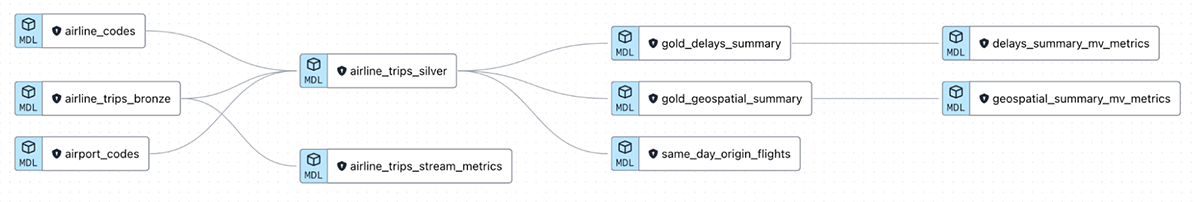

The Airline Journeys demo was created to display learn how to incrementally ingest and remodel reside occasion information for up-to-date enterprise insights on Databricks, be it a dashboard or an AI mannequin. The dataset represents all airline journeys being taken in america over time, capturing the delays to departures and arrivals for every journey.

An included helper pocket book establishes a simulated stream from this dataset whereas the dbt mission showcases an information mannequin that takes these uncooked json occasions and transforms them through streaming ETL right into a layer of Materialized Views, function tables and extra.

The repository is publicly obtainable right here, and leverages pattern information packaged in all Databricks workspaces out-of-the-box. Be happy to observe alongside!

Ingesting information from cloud information storage

One of many easiest methods to start out leveraging Streaming Tables is for information ingestion from cloud information storage, like S3 for AWS or ADLS for Azure. You will have an upstream information supply producing occasion information at a excessive quantity, and a course of to land these as uncooked recordsdata right into a storage location, sometimes json, csv, parquet or avro.

In our demo, think about we obtain a reside feed of each airline journey taken in america from an exterior social gathering, and we need to ingest this incrementally because it comes.

As a substitute of staging the recordsdata as an exterior desk, or utilizing a third social gathering instrument to materialize a Delta Desk for the info supply, we are able to merely use Streaming Tables to unravel this. Take the mannequin beneath for our bronze airline journeys feed:

{{

config(

materialized='streaming_table'

)

}}

choose

*

,_metadata.file_modification_time as file_modification_time

from stream read_files('{{var("input_path")}}/airways', format=>'json')The 2 key factors to notice are:

- The materialization technique is ready to ‘streaming_table’

- This may run a CREATE OR REFRESH STREAMING TABLE command in Databricks

- The syntax to learn from cloud storage leverages Auto Loader underneath the hood

- read_files() will checklist out new json recordsdata within the specified folder and begin processing them. Since we’re utilizing dbt, we have taken benefit of the var() operate in dbt to cross an s3 folder path dynamically (of the shape “s3://…”)

- The STREAM key phrase signifies to stream from this location. Alternatively, with out it we are able to nonetheless use read_files() with materialized=’desk’ to do a batch learn straight from the desired folder

As an apart, whereas Auto Loader requires the least setup, you can too stream straight from an occasion streaming platform like Kafka, Kinesis or Occasion Hubs for even decrease latency utilizing very related syntax. See right here for extra particulars.

Incrementally enriching information for the silver layer

Streaming doesn’t must cease on the ingestion step. If we need to carry out some joins downstream or add a surrogate key, however need to limit it to new information solely to avoid wasting on compute, we are able to proceed to make use of the Streaming Desk materialization. For instance, take the snippet from our subsequent mannequin for the silver, enriched airways journeys feed, the place we be part of mapping tables for airport codes into the uncooked information set:

{{

config(

materialized='streaming_table'

)

}}

...

SELECT

{{ dbt_utils.generate_surrogate_key([

'ArrTimestamp'

])

}} as delay_id

,...

FROM STREAM({{ref("airline_trips_bronze")}}) uncooked

INNER JOIN {{ref("airport_codes")}} ac

ON uncooked.Origin = ac.iata_code

...As soon as once more, we have made use of the Streaming Desk materialization, and have been in a position to leverage customary dbt performance for all of our logic. This contains:

- Leveraging the dbt_utils bundle for helpful shortcuts like producing a surrogate key

- Utilizing the ref() assertion to take care of full lineage

The one actual change to our SQL was the addition of the STREAM() key phrase across the ref() assertion for airline_trips_bronze, to point that this desk is being learn incrementally, whereas the airport_codes desk being joined is a mapping desk that’s learn in full. That is known as a stream-static be part of.

Crafting a compute-efficient gold layer with Materialized Views

With our enriched silver tables prepared, we are able to now take into consideration how we need to serve up aggregated insights to our finish enterprise shoppers. Sometimes if we use a desk materialization, we must recompute all historic outcomes each time.

To benefit from the Streaming Tables upstream that solely course of new information in every run, we flip as an alternative to Materialized Views for the duty!

The excellent news in Databricks is {that a} mannequin that builds a Materialized View seems to be no totally different than a mannequin that builds a desk! Take our instance for a gold layer Materialized View to calculate the share of delayed flights every day:

{{

config(

materialized='materialized_view'

)

}}

SELECT

airline_name

,ArrDate

,COUNT(*) AS no_flights

,SUM(IF(IsArrDelayed = TRUE,1,0)) AS tot_delayed

,ROUND(tot_delayed*100/no_flights,2) AS perc_delayed

FROM {{ ref('airline_trips_silver') }}

WHERE airline_name IS NOT NULL

GROUP BY 1,2All we modified was the materialization config!

Keep in mind, Materialized Views could be incrementally refreshed when there are modifications to the bottom tables. Within the above situation, as we stream new information, the Materialized View determines which teams require re-calculation and solely computes these, leaving unchanged aggregations as-is and decreasing total compute prices. That is simpler to visualise within the instance as we mixture over ArrDate, the arrival date of flights, that means new days of knowledge will naturally fall into new teams and present teams will stay unchanged.

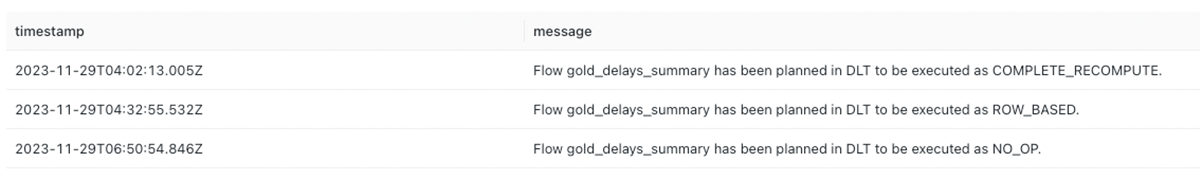

Analyzing the occasion logs of the Materialized View (pictured beneath) after a number of runs of the mannequin, we are able to see the incrementalization at work. The primary run is a full computation like all desk, however a second run to replace the aggregations with new information leverages a row-wise incremental refresh. A closing run of the mannequin recognised that no new information had been ingested upstream and easily did nothing.

What else can I count on within the demo repository?

We have coated the fundamentals of getting information straight from the occasion supply all the way in which to a BI-ready Materialized View, however the demo repository accommodates a lot extra.

Included within the repository are examples of learn how to monitor logs for Streaming Tables and Materialized Views to know how information is being processed, in addition to a sophisticated instance not coated on this weblog of learn how to be part of two streams collectively in a stream-stream be part of simply with SQL!

Clone within the repo to your Databricks atmosphere to get began, or join up dbt Cloud to Databricks at no further price with associate join. You too can be taught extra with the documentation for Materialized Views and Streaming Tables.