Streaming data adoption continues to accelerate, with over 80% of Fortune 100 companies already using Apache Kafka to put data to use in real time. Streaming data often sinks to real-time search and analytics databases, which act as a serving layer for use cases including fraud detection in fintech, real-time statistics in esports, personalization in eCommerce and more. These use cases are latency sensitive: even milliseconds of data delay can result in lost revenue or risk to the business.

As a result, customers ask about the end-to-end latency they can achieve on Rockset: the time from when data is generated to when it is made available for queries. Today, Rockset is releasing a benchmark that achieves 70 ms of data latency on 20 MB/s of streaming data throughput.

Rockset’s ability to ingest and index data within 70 ms is a major achievement, and one that many large enterprise customers have struggled to reach for their mission-critical applications. With this benchmark, Rockset gives confidence to enterprises building next-generation applications on real-time streaming data from Apache Kafka, Confluent Cloud, Amazon Kinesis and more.

Several recent product improvements enabled Rockset to achieve millisecond-latency streaming ingestion:

- Compute-compute separation: Rockset separates streaming ingest compute, query compute and storage for efficiency in the cloud. The new architecture also reduces the CPU overhead of writes by eliminating duplicative ingestion tasks.

- RocksDB: Rockset is built on RocksDB, a high-performance embedded storage engine. Rockset recently upgraded to RocksDB 7.8.0+, which offers several improvements that minimize write amplification.

- Data parsing: Rockset has schemaless ingest and supports open data formats and deeply nested data in JSON, Parquet, Avro and more. To run complex analytics over this data, Rockset converts it at ingest time into a standard proprietary format using efficient, custom-built data parsers.

In this blog, we describe the testing configuration, results and performance improvements that led to Rockset achieving 70 ms data latency on 20 MB/s of throughput.

Performance Benchmarking for Real-Time Search and Analytics

Two characteristics define real-time search and analytics databases: data latency and query latency.

Data latency measures the time from when data is generated to when it is queryable in the database. In real-time scenarios every millisecond matters: it can make the difference between catching fraudsters in their tracks, keeping gamers engaged with adaptive gameplay, surfacing personalized products based on online activity and more.

Query latency measures the time to execute a query and return a result. Applications aim to minimize query latency to create snappy, responsive experiences that keep users engaged. Rockset has benchmarked query latency on the Star Schema Benchmark, an industry-standard benchmark for analytical applications, and beat both ClickHouse and Druid, delivering query latencies as low as 17 ms.

In this blog, we benchmarked data latency at different ingestion rates using RockBench. Data latency has increasingly become a production requirement as more and more enterprises build applications on real-time streaming data. We’ve learned from customer conversations that many other data systems struggle under the weight of high throughput and cannot deliver predictable, performant data ingestion for their applications. The issue is a lack of (a) purpose-built systems for streaming ingest and (b) systems that can scale ingestion to keep processing data even as throughput from event streams increases rapidly.

The goal of this benchmark is to show that it is possible to build low-latency search and analytical applications on streaming data.

Using RockBench to Measure Throughput and Latency

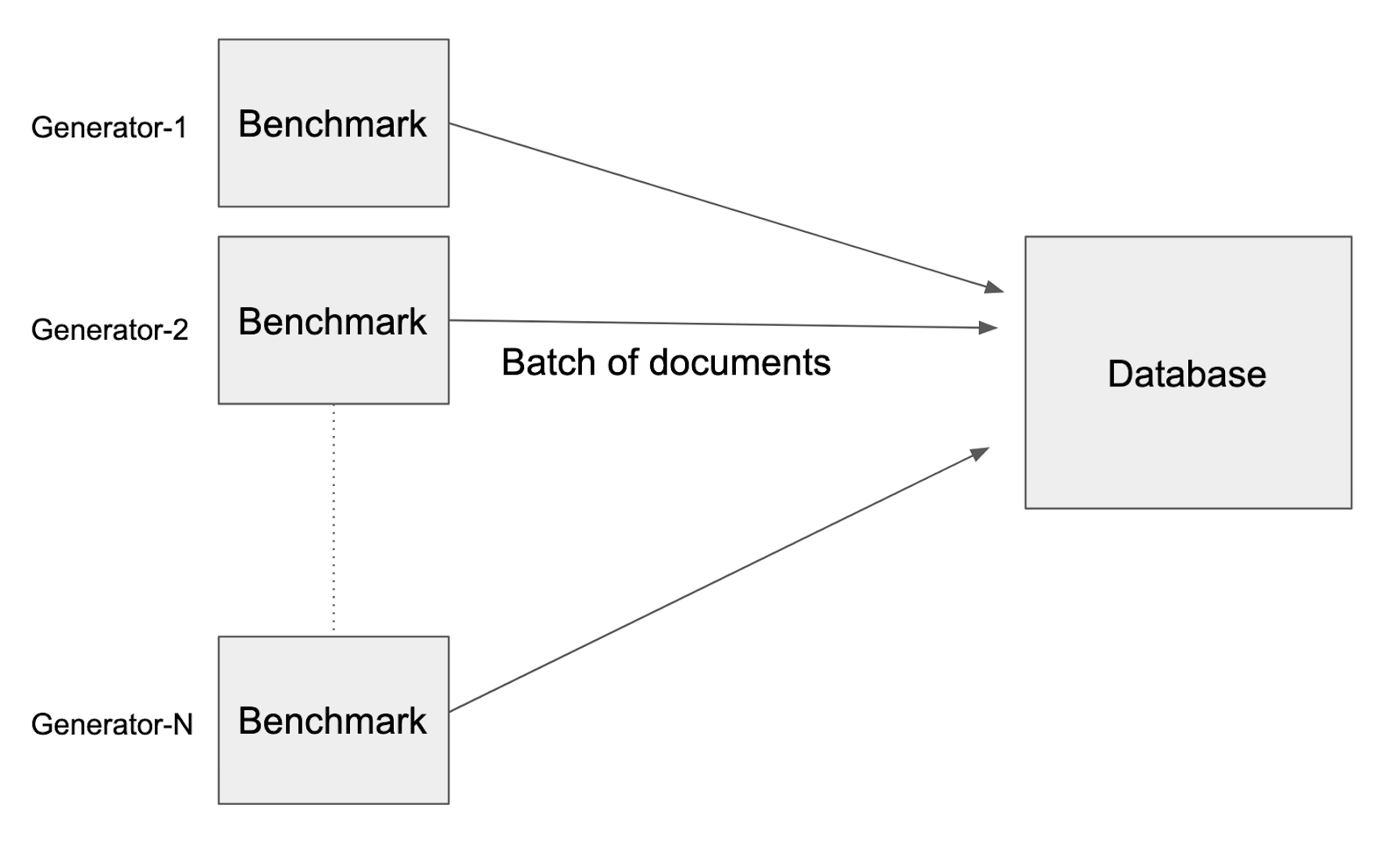

We evaluated Rockset’s streaming ingest performance using RockBench, a benchmark that measures the throughput and end-to-end latency of databases.

RockBench has two components: a data generator and a metrics evaluator. The data generator writes events to the database every second; the metrics evaluator measures the throughput and end-to-end latency.

The data generator creates 1.25 KB documents, each representing a single event. At that size, 8,000 writes per second is the equivalent of 10 MB/s.
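The sizing arithmetic is easy to check. The short Python sketch below assumes decimal units (1 MB = 1,000 KB), which is what makes 8,000 documents of 1.25 KB add up to 10 MB; the function name is ours for illustration, not part of RockBench.

```python
# Sketch of the RockBench sizing arithmetic.
# Assumption: decimal units, 1 MB = 1,000 KB.
DOC_SIZE_KB = 1.25  # size of one generated event document


def docs_per_second(throughput_mb_s: float, doc_size_kb: float = DOC_SIZE_KB) -> float:
    """Documents per second needed to sustain a given ingest throughput."""
    return throughput_mb_s * 1000 / doc_size_kb


for mb_s in (10, 20):
    print(f"{mb_s} MB/s -> {docs_per_second(mb_s):,.0f} docs/s")
```

Under these assumptions the 20 MB/s runs correspond to 16,000 documents per second, twice the 10 MB/s rate.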

To mirror semi-structured events in realistic scenarios, each document has 60 fields with nested objects and arrays. Each document also contains several fields used to calculate the end-to-end latency:

- _id: The unique identifier of the document
- _event_time: Reflects the clock time of the generator machine
- generator_identifier: 64-bit random number
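A generator for documents of this shape can be sketched in Python. The filler field names, their contents and the millisecond unit for _event_time are assumptions for illustration; only the 60-field count and the three latency fields come from the description above, and the sketch makes no attempt to hit the 1.25 KB size exactly.

```python
import json
import random
import time
import uuid

NUM_FIELDS = 60  # total top-level fields per document


def make_event() -> dict:
    """Build one synthetic event document (hypothetical layout, not RockBench's)."""
    doc = {
        "_id": str(uuid.uuid4()),                        # unique identifier
        "_event_time": int(time.time() * 1000),          # generator clock, ms (assumed unit)
        "generator_identifier": random.getrandbits(64),  # 64-bit random number
    }
    # Fill the remaining fields with a mix of nested objects, arrays and strings.
    for i in range(NUM_FIELDS - len(doc)):
        if i % 3 == 0:
            doc[f"obj_{i}"] = {"name": f"n{i}", "score": random.random(), "tags": ["a", "b"]}
        elif i % 3 == 1:
            doc[f"arr_{i}"] = [random.randint(0, 1000) for _ in range(4)]
        else:
            doc[f"str_{i}"] = uuid.uuid4().hex
    return doc


event = make_event()
print(len(event), len(json.dumps(event)))  # field count, serialized size in characters
```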

The _event_time of each document is then subtracted from the current time of the machine to arrive at the data latency for each document. This measurement also includes round-trip latency, the time required to run the query and get results from the database. The metric is published to a Prometheus server, and the p50, p95 and p99 latencies are calculated across all evaluators.
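The evaluation step can be sketched in a few lines of Python. This is a simplification under stated assumptions: it takes _event_time values in seconds (the unit is not specified above), measures latency against the local clock rather than timing a database query round trip, and computes percentiles in-process with the standard library instead of publishing to Prometheus.

```python
import statistics
import time


def data_latencies_ms(event_times_s, now_s=None):
    """Per-document data latency: current time minus the document's _event_time."""
    now_s = time.time() if now_s is None else now_s
    return [(now_s - t) * 1000.0 for t in event_times_s]


def summarize(latencies_ms):
    """p50/p95/p99 via statistics.quantiles (default 'exclusive' method)."""
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 cut points; cuts[k-1] is the k-th percentile
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


# Example: documents observed 1 ms, 2 ms, ..., 1000 ms after they were generated.
now = 1_000.0
event_times = [now - ms / 1000.0 for ms in range(1, 1001)]
print(summarize(data_latencies_ms(event_times, now_s=now)))
```

Reporting p95 and p99 alongside p50, as the benchmark does, exposes tail behavior that an average would hide.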

In this performance evaluation, the data generator inserts new documents into the database and does not update any existing documents.

Rockset Configuration and Results

All databases make tradeoffs between throughput and latency when ingesting streaming data: higher throughput incurs latency penalties, and vice versa.

We recently benchmarked Rockset’s performance against Elasticsearch at maximum throughput, and Rockset achieved up to 4x faster streaming data ingestion. For this benchmark, we minimized data latency instead, to show how Rockset performs for use cases that demand the freshest data possible.

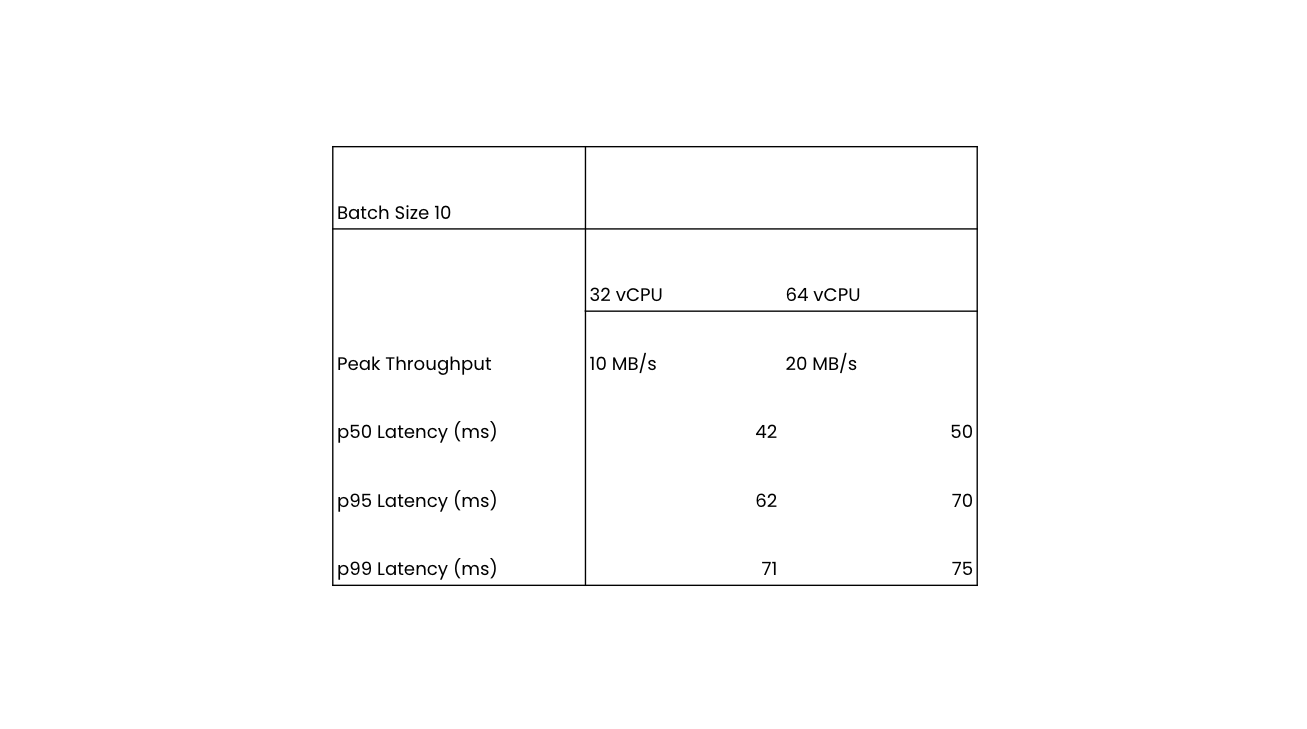

We ran the benchmark using a batch size of 10 documents per write request on a starting Rockset collection size of 300 GB. The benchmark held ingestion throughput constant at 10 MB/s and 20 MB/s and recorded the p50, p95 and p99 data latencies.
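To put the batch size in perspective, here is a back-of-the-envelope sketch that reuses the 1.25 KB document size from RockBench. It assumes decimal units and that documents arrive evenly across each second, which the benchmark description does not state; the helper names are hypothetical.

```python
DOC_SIZE_KB = 1.25  # RockBench document size
BATCH_SIZE = 10     # documents per write request in this benchmark


def docs_per_second(throughput_mb_s):
    return throughput_mb_s * 1000 / DOC_SIZE_KB  # assumes 1 MB = 1,000 KB


def write_requests_per_second(throughput_mb_s):
    return docs_per_second(throughput_mb_s) / BATCH_SIZE


def batch_fill_time_ms(throughput_mb_s):
    """Time to accumulate one batch, assuming documents arrive evenly over each second."""
    return BATCH_SIZE / docs_per_second(throughput_mb_s) * 1000


def to_batches(docs):
    """Split documents into write requests of BATCH_SIZE documents each."""
    return [docs[i:i + BATCH_SIZE] for i in range(0, len(docs), BATCH_SIZE)]


for mb_s in (10, 20):
    print(mb_s, write_requests_per_second(mb_s), round(batch_fill_time_ms(mb_s), 3))
```

Under those assumptions, 10 MB/s works out to 800 write requests per second and 20 MB/s to 1,600, and a 10-document batch fills in about 1.25 ms and 0.625 ms respectively, a small fraction of the 70 ms p95 figure. Small batches keep that waiting time low at the cost of more requests per second.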

The benchmark was run on XL and 2XL virtual instances, which are dedicated allocations of compute and memory resources. The XL virtual instance has 32 vCPU and 256 GB of memory, and the 2XL has 64 vCPU and 512 GB of memory.

Here are the summary results of the benchmark at p50, p95 and p99 latencies on Rockset:

At p95 data latency, Rockset achieved 70 ms on 20 MB/s of throughput. The results show that as throughput scales and the virtual instance size increases, Rockset maintains similar data latencies. Additionally, the p95 and p99 data latencies are clustered close together, showing predictable performance.

Rockset Performance Improvements

Several performance improvements enable Rockset to achieve millisecond data latency:

Compute-Compute Separation

Rockset recently unveiled a new cloud architecture for real-time analytics: compute-compute separation. The architecture lets users spin up multiple, isolated virtual instances on the same shared data. With it, users can isolate the compute used for streaming ingestion from the compute used for queries, ensuring not just high performance but predictable, efficient high performance. Users no longer need to overprovision compute or add replicas to overcome compute contention.

One benefit of this new architecture is that we were able to eliminate duplicate tasks in the ingestion process, so that all data parsing, data transformation, data indexing and compaction happen only once. This significantly reduces the CPU overhead required for ingestion while maintaining reliability and enabling users to achieve even better price-performance.
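A toy model can make the "happen only once" point concrete. The sketch below is not Rockset’s implementation: the classes are hypothetical, and a "parse call" stands in for all of the per-document ingest work (parsing, transformation, indexing, compaction). With replicas, every copy repeats that work; with a single ingest instance writing to shared storage, query instances read the results without redoing it.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStorage:
    """Hypothetical shared data layer visible to every virtual instance."""
    rows: list = field(default_factory=list)


@dataclass
class IngestInstance:
    """Hypothetical ingest-only compute: does the per-document work exactly once."""
    storage: SharedStorage
    parse_calls: int = 0

    def ingest(self, raw_docs):
        for raw in raw_docs:
            self.parse_calls += 1  # stand-in for parse/transform/index work
            self.storage.rows.append({"doc": raw})


@dataclass
class QueryInstance:
    """Hypothetical query-only compute: reads shared data, never re-ingests it."""
    storage: SharedStorage

    def count(self):
        return len(self.storage.rows)


def replicated_parse_calls(num_docs, num_replicas):
    """Per-document work when each replica ingests its own copy of the stream."""
    return num_docs * num_replicas


storage = SharedStorage()
ingest = IngestInstance(storage)
queries = [QueryInstance(storage) for _ in range(3)]
ingest.ingest(["e1", "e2", "e3", "e4"])

print([q.count() for q in queries])                     # [4, 4, 4]
print(ingest.parse_calls, replicated_parse_calls(4, 3))  # 4 12
```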

RocksDB Upgrade

Rockset uses RocksDB as its embedded storage engine under the hood. The team at Rockset created and open-sourced RocksDB while at Facebook, and it is currently used in production at LinkedIn, Netflix, Pinterest and other web-scale companies. Rockset chose RocksDB for its performance and its ability to handle frequently mutating data efficiently. Rockset uses the latest version of RocksDB, version 7.8.0+, to reduce write amplification by more than 10%.

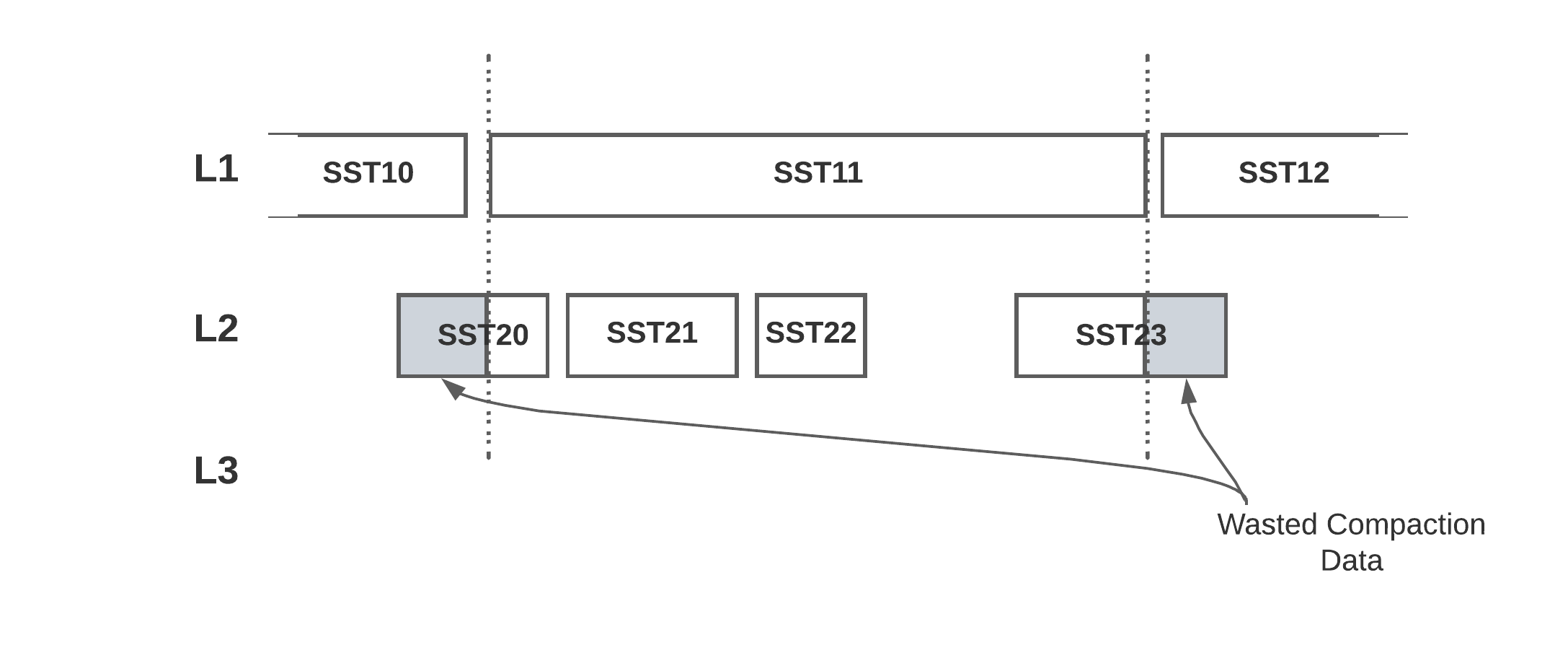

Previous versions of RocksDB used a partial merge compaction algorithm, which picks one file from the source level and compacts it into the next level. Compared to a full merge compaction, this produces a smaller compaction size and better parallelism. However, it also results in write amplification.

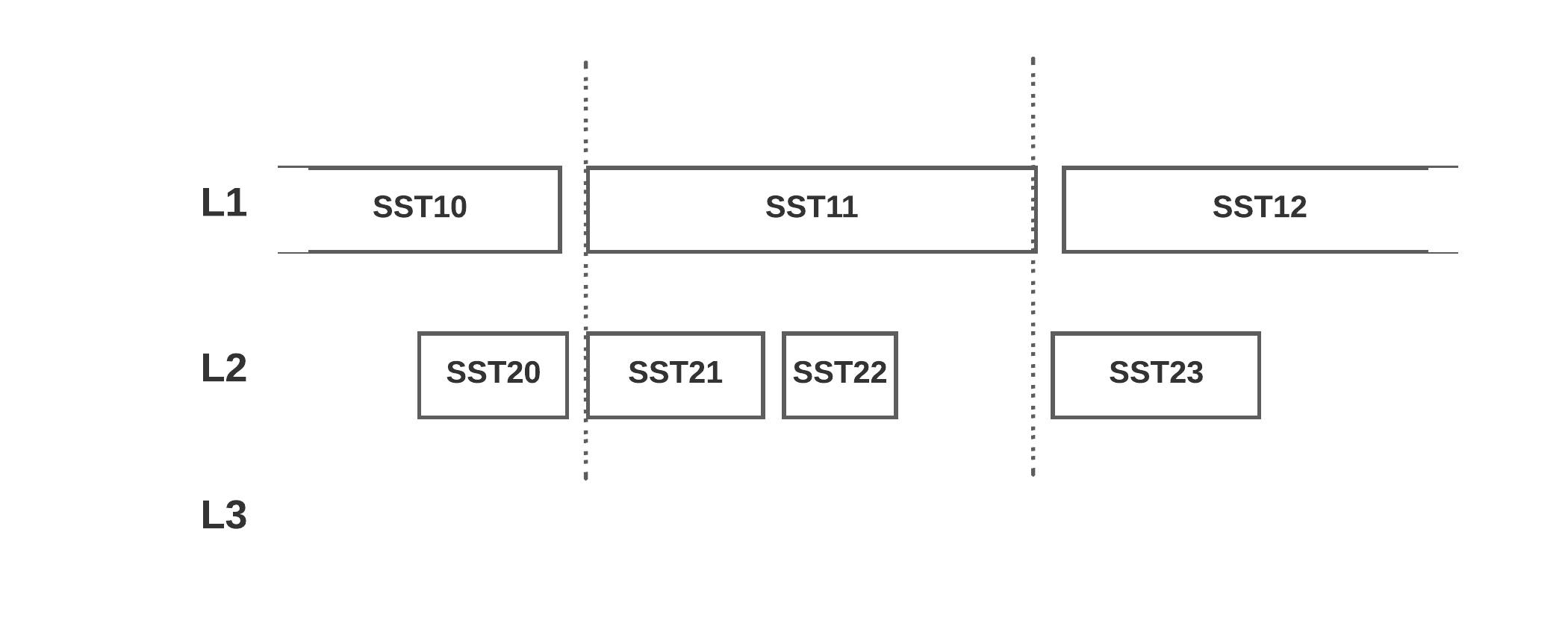

In RocksDB version 7.8.0+, the compaction output file is cut earlier, and is allowed to grow larger than targeted_file_size, in order to align compaction files with the next-level files. This reduces write amplification by 10+%.
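The effect of aligning output files can be illustrated with a toy model in Python. This is not RocksDB’s actual cutting logic: keys are integers, each key counts as one unit of size, the "earlier" cut threshold (half the target) and the 2x growth cap are arbitrary choices, and the cost of a later compaction is approximated by how many next-level files each output file’s key range overlaps.

```python
def cut_fixed(keys, target):
    """Toy fixed-size cutting: close an output file every `target` keys."""
    return [keys[i:i + target] for i in range(0, len(keys), target)]


def cut_aligned(keys, target, boundaries, max_factor=2):
    """Toy aligned cutting: close a file where the next key crosses a next-level
    file boundary once it holds at least target // 2 keys (cutting earlier),
    and let it grow up to max_factor * target to reach such a boundary."""
    files, current = [], []
    for k in keys:
        if current:
            crosses = any(current[-1] <= b < k for b in boundaries)
            if (crosses and len(current) >= target // 2) or len(current) >= max_factor * target:
                files.append(current)
                current = []
        current.append(k)
    if current:
        files.append(current)
    return files


def total_overlap(files, next_level):
    """Sum, over output files, of how many next-level files each key range touches."""
    return sum(
        sum(1 for start, end in next_level if start <= f[-1] and end >= f[0])
        for f in files
    )


keys = list(range(1000))                                         # keys being compacted
next_level = [(i * 100 + 50, i * 100 + 149) for i in range(10)]  # next-level files, offset by 50
boundaries = [end for _, end in next_level]

fixed = cut_fixed(keys, 100)
aligned = cut_aligned(keys, 100, boundaries)
print(len(fixed), total_overlap(fixed, next_level))      # 10 19
print(len(aligned), total_overlap(aligned, next_level))  # 10 10
```

In this setup both strategies produce 10 files, but each aligned file touches one next-level file instead of two, so a later compaction picking any of them has less next-level data to rewrite. The model shows the direction of the effect only; it does not reproduce or predict the 10+% figure.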

By upgrading to this new version of RocksDB, Rockset reduces write amplification, which translates into better ingest performance, as reflected in the benchmark results.

Custom Parsers

Rockset has schemaless ingest and supports a wide variety of data formats, including JSON, Parquet, Avro, XML and more. Because Rockset natively supports SQL on semi-structured data, it minimizes the need for upstream pipelines that add data latency. To make this data queryable, Rockset converts it into a standard proprietary format at ingestion time using data parsers.

Data parsers are responsible for downloading and parsing data to make it available for indexing. Rockset’s legacy data parsers relied on open-source components that did not use memory or compute efficiently. The legacy parsers also converted data to an intermediate format before converting it again into Rockset’s proprietary format. To minimize latency and compute, the data parsers were rewritten as custom-built parsers. The custom data parsers are twice as fast, helping to achieve the data latency results captured in this benchmark.
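Rockset’s proprietary format is not described in this post, so the Python sketch below uses a hypothetical stand-in: it flattens a nested JSON document into (field path, type, value) triples. It illustrates the kind of work a parser does when there is no schema up front, discovering field paths and value types document by document. Note that it first builds a Python dict with json.loads and then walks it, a two-step conversion similar in spirit to the intermediate-format step the legacy parsers took; a custom parser could instead emit triples while tokenizing.

```python
import json
from typing import Any, Iterator, Tuple


def flatten(value: Any, path: str = "") -> Iterator[Tuple[str, str, Any]]:
    """Yield (field_path, type_name, scalar) triples from a nested JSON value.

    Hypothetical stand-in for a proprietary format: empty objects and arrays
    produce no triples in this sketch.
    """
    if isinstance(value, dict):
        for key, child in value.items():
            yield from flatten(child, f"{path}.{key}" if path else key)
    elif isinstance(value, list):
        for i, child in enumerate(value):
            yield from flatten(child, f"{path}[{i}]")
    else:
        yield path, type(value).__name__, value


raw = '{"user": {"id": 7, "tags": ["a", "b"]}, "amount": 12.5, "ok": true, "note": null}'
for triple in flatten(json.loads(raw)):
    print(triple)
```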

How Performance Improvements Benefit Customers

Rockset delivers predictable, high-performance ingestion that enables customers across industries to build applications on streaming data. Here are a few examples of latency-sensitive applications built on Rockset in the insurance, gaming, healthcare and financial services industries:

- Insurance industry: The digitization of the insurance industry is prompting insurers to deliver policies that are tailored to customers’ risk profiles and adapted in real time. A Fortune 500 insurance company provides instant insurance quotes based on hundreds of risk factors, which requires less than 200 ms of data latency to generate real-time quotes.

- Gaming industry: Real-time leaderboards boost gamer engagement and retention with live metrics. A leading esports gaming company requires 200 ms data latency to show how games progress in real time.

- Financial services: Financial management software helps companies and individuals track their financial health and where their money is being spent. A Fortune 500 company uses real-time analytics to provide a 360-degree view of finances, displaying the latest transactions in under 500 ms.

- Healthcare industry: Health information and patient profiles change constantly with new test results, medication updates and patient communication. A leading healthcare provider helps clinical teams monitor and track patients in real time, with a data latency requirement of under 2 seconds.

Rockset scales ingestion to support high-velocity streaming data without any negative impact on query performance. As a result, companies across industries are unlocking the value of real-time streaming data in an efficient, accessible way. We’re excited to keep pushing the lower limits of data latency and to share our latest performance benchmark, with Rockset achieving 70 ms data latency on 20 MB/s of streaming data ingestion.

You too can experience these performance improvements automatically, without infrastructure tuning or manual upgrades, by starting a free trial of Rockset today.

Richard Lin and Kshitij Wadhwa, software engineers at Rockset, performed the data latency investigation and testing on which this blog is based.