In a previous blog post, we explored the power of Cloudera Observability in providing high-level actionable insights and summaries for Hive service users. In this blog, we will delve deeper into the insight Cloudera Observability brings to queries executed on Hive.

As a quick recap, Cloudera Observability is an applied observability solution that provides visibility into Cloudera deployments and their various services. The tool enables automated actions to prevent negative consequences like excessive resource consumption and budget overruns. Among other capabilities, Cloudera Observability delivers comprehensive features to troubleshoot and optimize Hive queries. Additionally, it provides insights from deep analytics for a variety of supported engines using query plans, system metrics, configuration, and much more.

A critical goal for a Hive SQL developer is ensuring that queries run efficiently. If there are issues in the query execution, it should be possible to debug and diagnose them quickly. When it comes to individual queries, the following questions typically crop up:

- What if my query performance deviates from the expected path?

- When my query goes astray, how do I detect deviations from the expected performance? Are there any baselines for various metrics about my query? Is there a way to compare different executions of the same query?

- Am I overeating, or do I need more resources?

- How many CPU/memory resources are consumed by my query? And how much was available for consumption when the query ran? Are there any automated health checks to validate the resources consumed by my query?

- How do I detect problems due to skew?

- Are there any automated health checks to detect issues that might result from skew in data distribution?

- How do I make sense of the stats?

- How do I use system/service/platform metrics to debug Hive queries and improve their performance?

- I want to perform a detailed comparison of two different runs; where should I start?

- What information should I use? How do I compare the configurations, query plans, metrics, data volumes, and so on?

Let’s check how Cloudera Observability answers the above questions and helps you detect problems with individual queries.

What if my query performance deviates from the expected path?

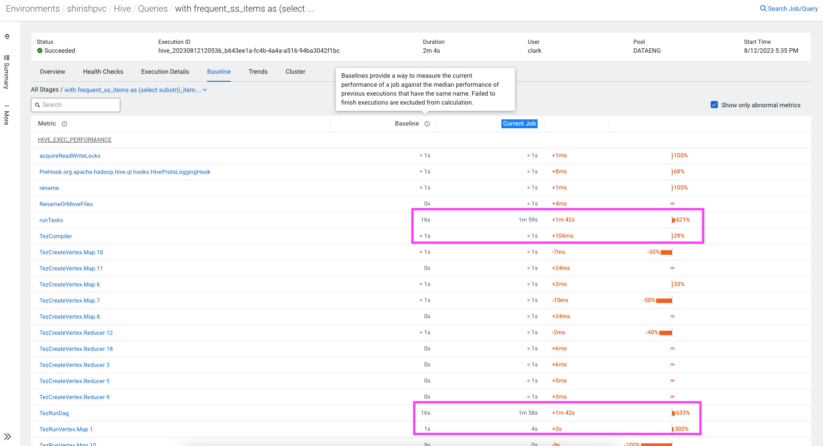

Imagine a periodic ETL or analytics job that you have run on the Hive service for months suddenly becomes slow. It’s a scenario that’s not uncommon, considering the multitude of factors that affect your queries. Starting from the simplest, a job might slow down because your input or output data volume increased, the data distribution changed along with the underlying data, concurrent queries are competing for shared resources, or the system has a hardware issue such as a slow disk. It can be a tedious task to find out where exactly your queries slowed down. Doing so requires an understanding of how a query is executed internally and of the different metrics that users should consider.

Enter Cloudera Observability’s baselining feature, your troubleshooting partner. From execution times to intricate details about the Hive query and its execution plan, every vital aspect is considered for baselining. The baseline is formed using historical data from prior query executions. So when you detect performance deviations in your Hive queries, this feature becomes your guide, pointing you to the metrics of interest.
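To make the idea of a baseline concrete, here is a minimal sketch in Python. It is not how Cloudera Observability computes its baselines (the post does not describe that); it simply assumes a baseline is the mean of past execution durations and flags a run that lies more than a chosen number of standard deviations away. The function name, the threshold, and the sample durations are all hypothetical.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, current, threshold=3.0):
    """Return True if `current` lies more than `threshold` sample standard
    deviations from the mean of `history`.

    Hypothetical helper for illustration only; not a Cloudera API.
    """
    if len(history) < 2:
        # Too few prior runs to form a meaningful baseline.
        return False
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # All prior runs were identical, so any change counts as a deviation.
        return current != baseline
    return abs(current - baseline) / spread > threshold


# Made-up durations (in seconds) of earlier runs of the same query.
past_durations = [118.0, 122.0, 120.0, 119.0, 121.0]

print(deviates_from_baseline(past_durations, 123.0))  # close to the usual range
print(deviates_from_baseline(past_durations, 300.0))  # far outside it
```

The same comparison could be applied to any per-query metric with a history, such as input data volume, not only to execution time.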

Am I overeating, or do I need more resources?

As an SQL developer, you need to strike a balance between query execution and optimal use of resources. Naturally, you’d want a simple way to find out how many resources were consumed by your query and how many were available. You also want to be a good neighbor when using shared system resources and not monopolize their use.

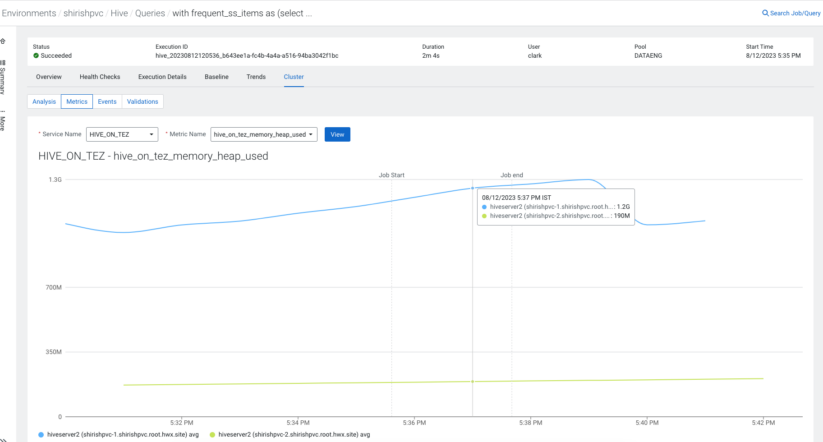

The “Cluster Metrics” feature in Cloudera Observability helps you achieve this.

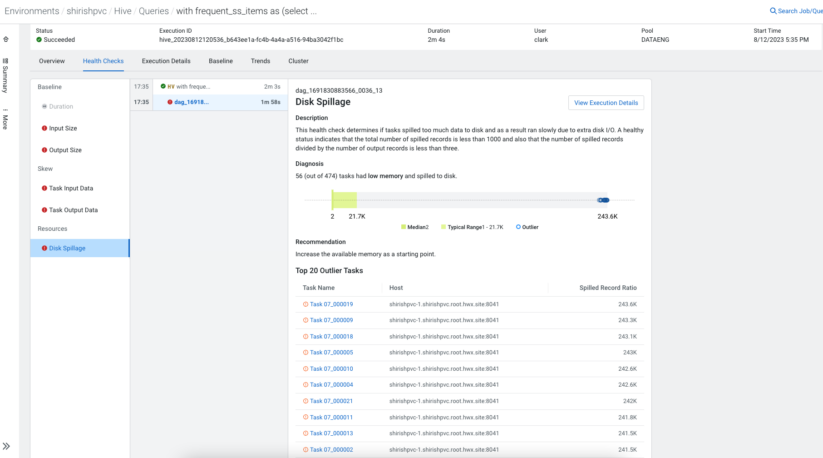

Challenges might arise if you have fewer resources than your query needs. Cloudera Observability steps in with multiple automated query health checks that help you identify problems caused by resource scarcity.

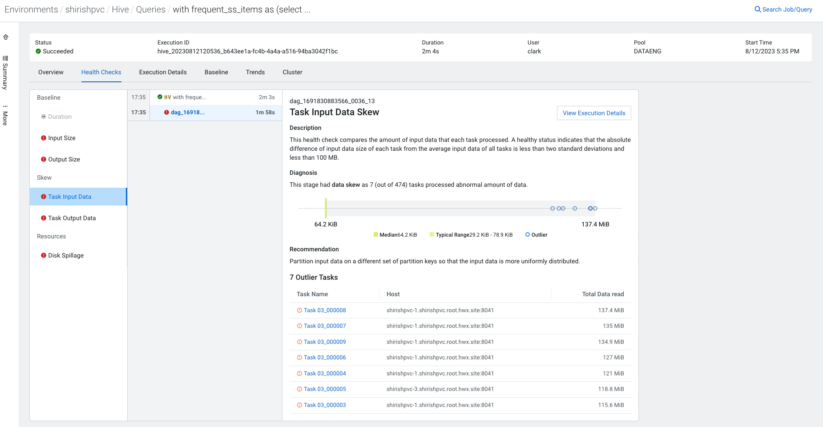

How do I detect problems due to skew?

In the realm of distributed databases (and Hive is no exception), there’s an important rule that data should be distributed evenly. A non-uniform distribution of the data set is called data “skew.” Data skew can cause performance issues and lead to non-optimized utilization of available resources. As such, the ability to detect issues due to skew and provide recommendations to resolve them helps Hive users greatly. Cloudera Observability comes armed with multiple built-in health checks that detect problems due to skew and help users optimize queries.
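As a rough illustration of what a skew check can look like, the sketch below compares the largest per-task input against the median per-task input. This is an assumed heuristic chosen for clarity, not the logic behind Cloudera Observability’s health checks; the function names, the ratio threshold of 5, and the byte counts are hypothetical.

```python
from statistics import median


def skew_ratio(task_input_bytes):
    """Ratio of the largest task input to the median task input.

    A ratio near 1 means the work is spread evenly; a large ratio means
    one task received far more data than a typical task.
    """
    if not task_input_bytes:
        return 1.0
    typical = median(task_input_bytes)
    largest = max(task_input_bytes)
    if typical == 0:
        return float("inf") if largest > 0 else 1.0
    return largest / typical


def is_skewed(task_input_bytes, max_ratio=5.0):
    """Flag a stage as skewed when its ratio exceeds `max_ratio` (assumed threshold)."""
    return skew_ratio(task_input_bytes) > max_ratio


# Made-up per-task input sizes for two stages of a query.
even_stage = [100, 110, 95, 105, 120]
skewed_stage = [100, 110, 95, 105, 2000]

print(is_skewed(even_stage))
print(is_skewed(skewed_stage))
```

The median is used rather than the mean because a single oversized task inflates the mean and can hide the very skew the check is looking for.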

How do I make sense of the stats?

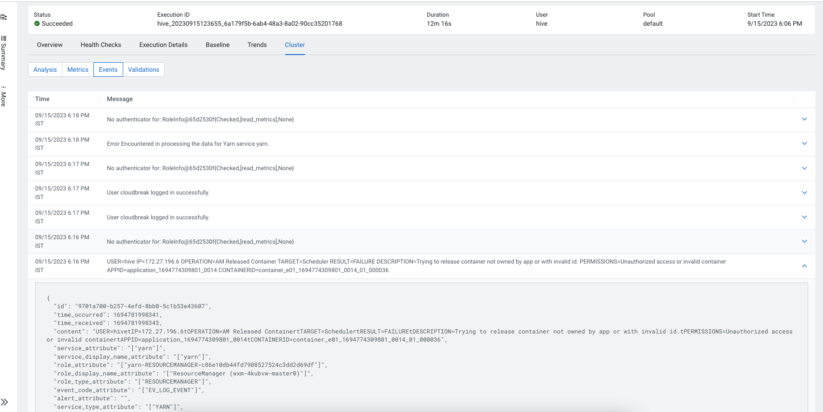

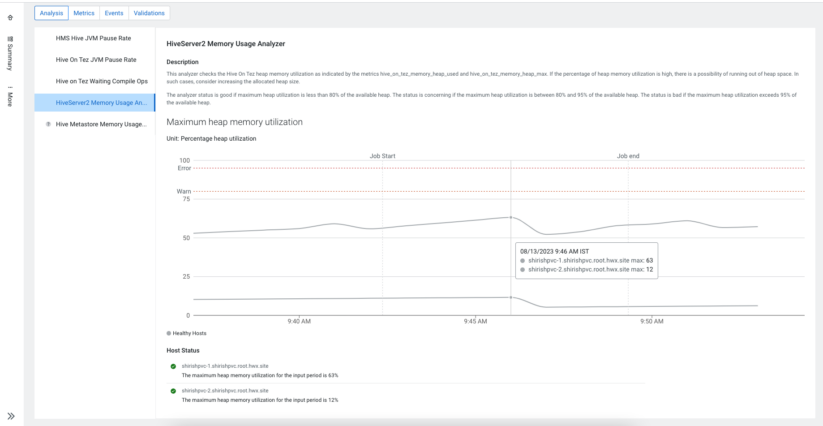

In today’s tech world, metrics have become the soul of observability, flowing from operating systems to complex setups like distributed systems. However, with thousands of metrics being generated every minute, it becomes challenging to find the metrics that affect your query jobs.

The Cloudera platform provides many such metrics to make it observable and to aid in debugging. Cloudera Observability goes a step further and provides built-in analyzers that perform health checks on these metrics and spot any issues. With the help of these analyzers, it’s easy to spot system and load issues. Additionally, Cloudera Observability gives you the ability to search metric values for important Hive metrics that may have affected your query execution. It also surfaces interesting events that may have occurred in your clusters while the query ran.

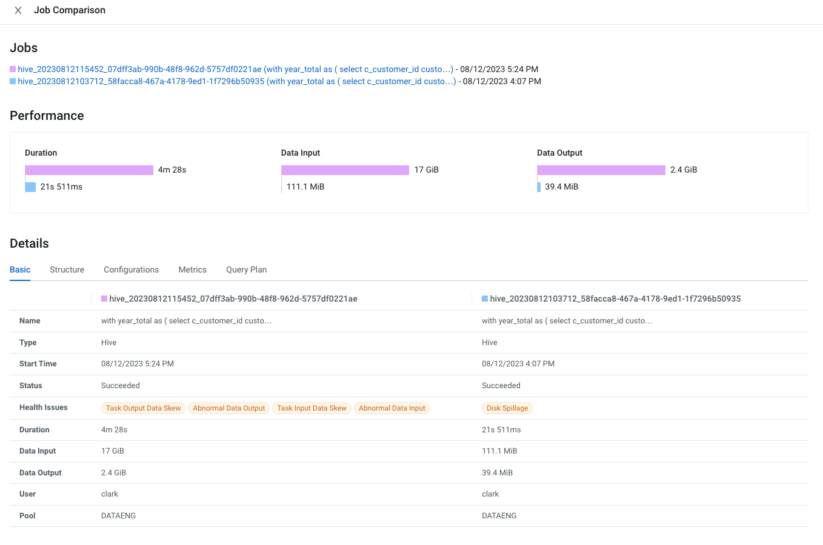

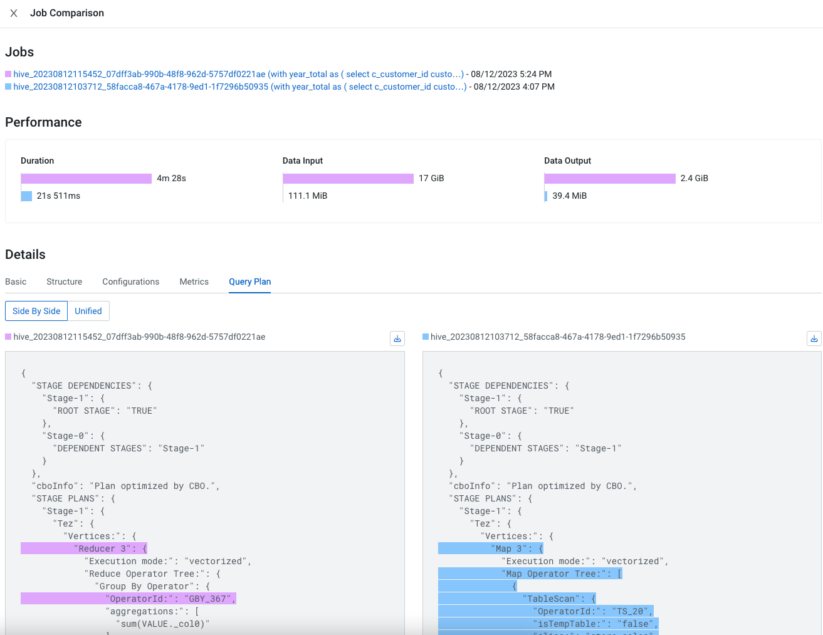

I want to perform a detailed comparison of two different runs; where should I start?

It’s common to observe a degradation in query performance for various reasons. As a developer, you’re on a mission to compare two different runs and spot the differences. But where would you start? There is a lot to find out and compare, from the most straightforward metrics like execution duration or input/output data sizes to complex ones like differences between query plans, the Hive configuration in effect when the query was executed, the DAG structure, query execution metrics, and more. A built-in feature that achieves this is of great use, and Cloudera Observability does precisely this for you.

With the query comparison feature in Cloudera Observability, you can compare all of the above factors between two executions of the query. Now it’s simple to spot changes between the two executions and take appropriate actions.
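To show the kind of comparison involved, here is a small Python sketch that reports which settings differ between two runs. It is an illustration only and does not reflect how the query comparison feature is implemented. The helper name is hypothetical, and the values are made up; the property names are ordinary Hive settings used purely as sample keys.

```python
def diff_settings(run_a, run_b):
    """Return {key: (value_in_a, value_in_b)} for every key whose value differs.

    A key missing from one run is reported with None on that side.
    Hypothetical helper for illustration only.
    """
    changed = {}
    for key in sorted(set(run_a) | set(run_b)):
        a, b = run_a.get(key), run_b.get(key)
        if a != b:
            changed[key] = (a, b)
    return changed


# Made-up configuration snapshots for two executions of the same query.
fast_run = {"hive.tez.container.size": "4096", "hive.auto.convert.join": "true"}
slow_run = {"hive.tez.container.size": "2048", "hive.auto.convert.join": "true"}

for name, (before, after) in diff_settings(fast_run, slow_run).items():
    print(f"{name}: {before} -> {after}")
```

The same dictionary diff works for any flat set of per-run values, such as execution metrics or data volumes; comparing query plans or DAG structure needs a structural comparison instead.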

As illustrated, gaining insight into your Cloudera Hive queries is a breeze with Cloudera Observability. It makes analyzing and troubleshooting Hive queries easier, helping you boost performance and catch issues early.

To find out more about Cloudera Observability, visit our website. To get started, get in touch with your Cloudera account manager or contact us directly.