Generative Artificial Intelligence (AI) represents a cutting-edge frontier within the field of machine learning and AI. Unlike traditional AI models focused on interpretation and analysis, generative AI is designed to create new content and generate novel data outputs. This includes the synthesis of images, text, sound, and other digital media, often mimicking human-like creativity and intelligence. By leveraging complex algorithms and neural networks, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), generative AI can produce original, realistic content, often indistinguishable from human-generated work.

In the era of digital transformation, data privacy has emerged as a pivotal concern. As AI technologies, especially generative AI, rely heavily on vast datasets for training and operation, safeguarding personal and sensitive information is paramount. The intersection of generative AI and data privacy raises significant questions: How is data being used? Can individuals' privacy be compromised? What measures are in place to prevent misuse? Addressing these questions matters not only for ethical compliance but also for maintaining public trust in AI technologies.

This article examines the intricate relationship between generative AI and data privacy. It seeks to illuminate the challenges posed by the integration of these two domains, exploring how generative AI affects data privacy and vice versa. By examining the current landscape, including the technological challenges, ethical considerations, and regulatory frameworks, it aims to provide a comprehensive understanding of the subject. It also highlights potential solutions and future directions, offering insights for researchers, practitioners, and policymakers in the field. The discussion extends from the technical aspects of AI models to broader societal and legal implications, giving a holistic view of the nexus between generative AI and data privacy.

The Intersection of Generative AI and Data Privacy

Generative AI works by learning from large datasets to create new, original content. This process involves training AI models, such as GANs or VAEs, on extensive datasets. A GAN, for example, consists of two parts: the generator, which creates content, and the discriminator, which evaluates it. Through iterative training, the generator learns to produce increasingly realistic outputs that can fool the discriminator. This ability to generate new data points from existing datasets is what sets generative AI apart from other AI technologies.
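For readers who want the formal version, the generator and discriminator contest in a GAN is commonly written as a minimax objective. The notation here is not from the article and is introduced only for illustration: G is the generator, D the discriminator, p_data the distribution of real training data, and p_z the noise distribution the generator samples from.

```latex
\min_{G}\,\max_{D}\; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize V by scoring real samples high and generated samples low, while the generator tries to minimize it by producing samples the discriminator scores as real.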

Data is the cornerstone of any generative AI system. The quality and quantity of the data used in training directly affect the model's performance and the authenticity of its outputs. These models require diverse and comprehensive datasets to learn and mimic patterns accurately. The data can range from text and images to more complex data types like biometric information, depending on the application.

The data privacy concerns in AI include:

- Data Collection and Usage: The collection of large datasets for training generative AI raises concerns about how data is sourced and used. Issues such as informed consent, data ownership, and the ethical use of personal information are central to this discussion.

- Potential for Data Breaches: With large repositories of sensitive information, generative AI systems can become targets for cyberattacks, leading to potential data breaches. Such breaches could result in the unauthorized use of personal data and significant privacy violations.

- Privacy of Individuals in Training Datasets: Ensuring the anonymity of individuals whose data is used in training sets is a major concern. There is a risk that generative AI could inadvertently reveal personal information or be used to recreate identifiable data, posing a threat to individual privacy.

Understanding these issues is crucial for addressing the privacy challenges associated with generative AI. The balance between leveraging data for technological advancement and protecting individual privacy rights remains a key issue in this field. As generative AI continues to evolve, the strategies for managing data privacy must also adapt, ensuring that technological progress does not come at the expense of personal privacy.

Challenges in Data Privacy with Generative AI

Anonymity and Reidentification Risks

One of the primary challenges in generative AI is maintaining the anonymity of individuals whose data is used to train models. Despite efforts to anonymize data, there is an inherent risk of reidentification. Advanced AI models can unintentionally learn and replicate unique, identifiable patterns present in the training data. This poses a significant threat, as it can expose personal information and undermine efforts to protect individual identities.
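One rough way to gauge reidentification risk before training is a k-anonymity check: count how many records share each combination of quasi-identifiers (attributes that are not names or IDs but can still single someone out in combination). The article does not name k-anonymity, so treat this as an illustrative technique; the function name, field names, and records below are all made up for the example.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the same
    values for every quasi-identifier (the dataset's k, in k-anonymity terms).
    Returns 0 for an empty dataset."""
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values()) if groups else 0


# Hypothetical records with direct identifiers (name, ID) already removed.
records = [
    {"zip": "94107", "birth_year": 1985, "sex": "F"},
    {"zip": "94107", "birth_year": 1985, "sex": "F"},
    {"zip": "94107", "birth_year": 1990, "sex": "M"},
]

k = smallest_group_size(records, ["zip", "birth_year", "sex"])
print(f"k = {k}")  # the third record is unique on these fields, so k is 1
```

A value of k = 1 means at least one person is unique on those fields and could be singled out by anyone who knows them, even though no name appears in the data.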

Unintended Data Leakage in AI Models

Data leakage refers to the unintentional exposure of sensitive information through AI models. Generative AI, because of its ability to synthesize realistic data based on its training, can inadvertently reveal confidential information. For example, a model trained on medical records may generate outputs that closely resemble real patient data, thus breaching confidentiality. This leakage is not always the result of direct data exposure; it can also occur through the replication of detailed patterns or information inherent in the training data.
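One simple screen for this kind of leakage is to compare each generated record with the training set and flag any that sit suspiciously close to a real one. The sketch below assumes numeric records of equal length and uses plain Euclidean distance. The function names, the example values, and the threshold are hypothetical, and passing this check is a heuristic signal, not a privacy guarantee.

```python
import math


def nearest_training_distance(sample, training_rows):
    """Euclidean distance from one generated record to its closest training record."""
    return min(math.dist(sample, row) for row in training_rows)


def flag_near_copies(synthetic_rows, training_rows, threshold):
    """Return the generated records that lie within `threshold` of some training record."""
    return [
        sample
        for sample in synthetic_rows
        if nearest_training_distance(sample, training_rows) < threshold
    ]


# Hypothetical (systolic, diastolic) blood pressure readings.
training_rows = [(120.0, 80.0), (135.0, 90.0)]
synthetic_rows = [(120.1, 80.0), (128.0, 85.0)]

suspicious = flag_near_copies(synthetic_rows, training_rows, threshold=0.5)
print(suspicious)  # only the first generated record is a near-copy of a training row
```

In practice the threshold would need to be calibrated, for instance against the typical distance between distinct real records, and features on different scales would need normalizing first.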

Ethical Dilemmas in Data Usage

The use of generative AI introduces complex ethical dilemmas, particularly regarding the consent and awareness of individuals whose data is used. Questions arise about the ownership of data and the ethical implications of using personal information to train AI models without explicit consent. These dilemmas are compounded when considering data sourced from publicly available datasets or social media, where the original context and consent for data use may be unclear.

Compliance with Global Data Privacy Laws

Navigating the varying data privacy laws across different jurisdictions presents another challenge for generative AI. Laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States set stringent requirements for data handling and user consent. Ensuring compliance with these laws, especially for AI models used across multiple regions, requires careful consideration and adaptation of data practices.

Each of these challenges underscores the complexity of managing data privacy in the context of generative AI. Addressing them requires a multifaceted approach involving technological solutions, ethical considerations, and regulatory compliance. As generative AI continues to advance, it is imperative that these privacy challenges are met with robust and evolving strategies to safeguard individual privacy and maintain trust in AI technologies.

Technological and Regulatory Solutions

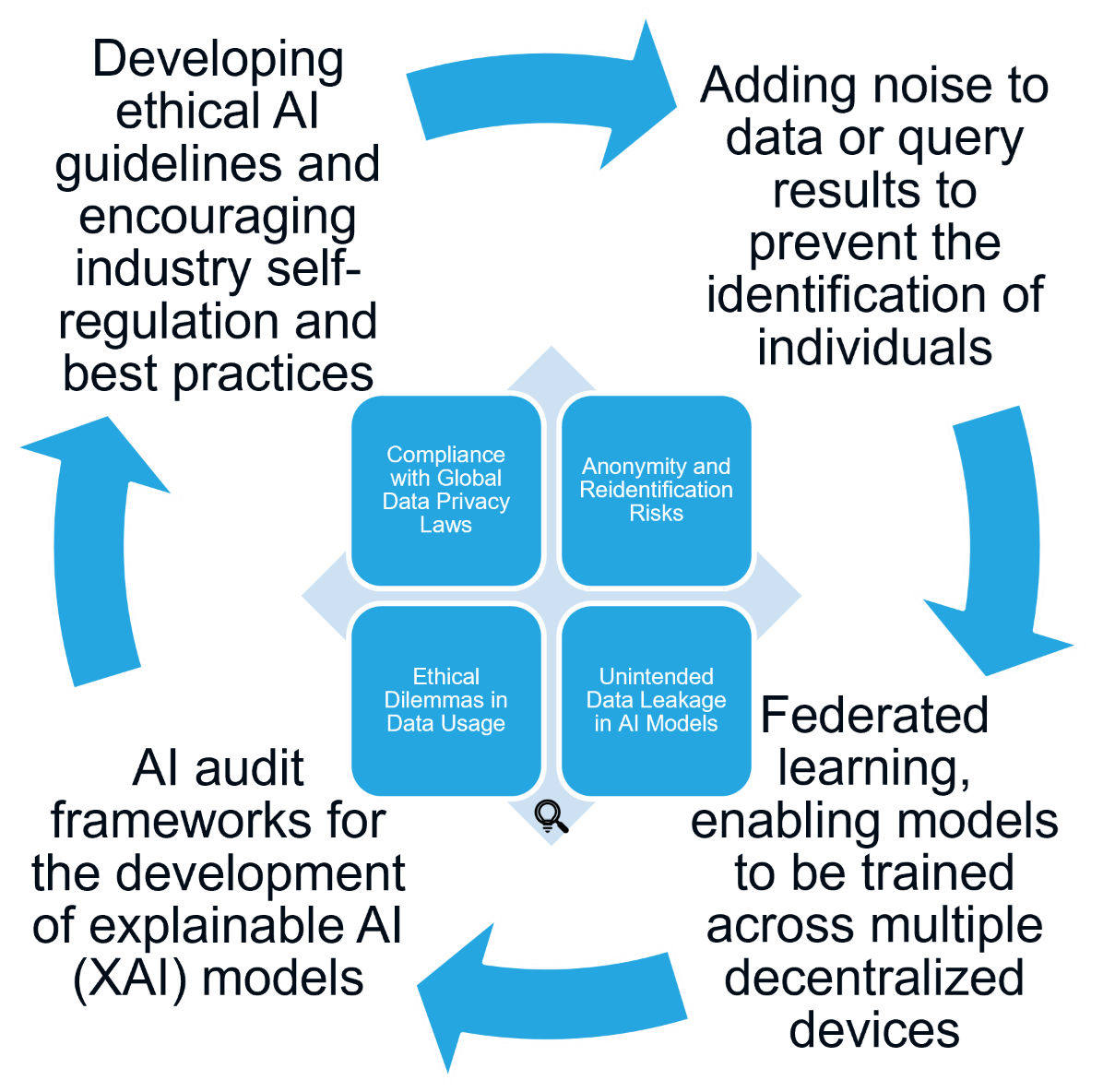

In the field of generative AI, a range of technological solutions is being explored to address data privacy challenges. Among these, differential privacy stands out as a key technique, as illustrated in Figure 1. It involves adding noise to data or query results to prevent the identification of individuals, thereby allowing data to be used in AI applications while protecting privacy. Another innovative approach is federated learning, which enables models to be trained across multiple decentralized devices or servers holding local data samples. This method ensures that sensitive data remains on the user's device, enhancing privacy. Additionally, homomorphic encryption is gaining attention because it allows computations to be performed on encrypted data. This means AI models can learn from data without accessing it in its raw form, offering a new level of protection.
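To make the idea of adding noise to query results concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It relies on a counting query having sensitivity 1: adding or removing one person changes the count by at most 1, so Laplace noise with scale 1/epsilon is enough for epsilon-differential privacy on that single query. The function names, the sample ages, and the epsilon value are illustrative assumptions, not taken from the article.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution centred on zero.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values matching `predicate`.

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for value in values if predicate(value))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many people are over 50? (The true answer is 3.)
ages = [34, 51, 29, 62, 47, 58]
noisy_over_50 = private_count(ages, lambda age: age > 50, epsilon=0.5)
print(noisy_over_50)  # varies from run to run
```

Each repeated query consumes additional privacy budget, so a real system would also need to track the cumulative epsilon across queries. That bookkeeping is left out here.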

Figure 1. Data Privacy Solutioning in Generative AI
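Federated learning, described above, can be sketched in the same spirit. In the toy example below, each client fits a one-dimensional linear model y = w*x + b on its own data, and only the resulting weights are sent to be averaged. The raw records never leave the client. This follows the general shape of federated averaging, but every name, dataset, and hyperparameter here is an assumption made for illustration, and a production system would add protections such as secure aggregation.

```python
def local_update(weights, local_data, learning_rate=0.1):
    """Run one pass of per-sample gradient descent on a client's own data."""
    w, b = weights
    for x, y in local_data:
        error = (w * x + b) - y
        w -= learning_rate * error * x
        b -= learning_rate * error
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def federated_round(global_weights, clients):
    """One round: every client trains locally, then the server averages the results."""
    updates = [local_update(global_weights, data) for data in clients]
    sizes = [len(data) for data in clients]
    return federated_average(updates, sizes)


# Two hypothetical clients whose private data both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (1.5, 4.0)],
]

weights = (0.0, 0.0)
for _ in range(500):
    weights = federated_round(weights, clients)
print(weights)  # approaches (2.0, 1.0)
```

Shared weights can still leak information about the underlying data, which is why federated learning is often combined with differential privacy or encryption rather than used alone.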

The regulatory landscape is also evolving to keep pace with these technological advancements. AI auditing and transparency tools are becoming increasingly important. AI audit frameworks help in assessing and documenting data usage, model decisions, and potential biases in AI systems, ensuring accountability and transparency. Additionally, the development of explainable AI (XAI) models is crucial for building trust in AI systems. These models provide insights into how and why decisions are made, especially in sensitive applications.

Legislation and policy play a crucial role in safeguarding data privacy in the context of generative AI. Updating and adapting existing privacy laws, like the GDPR and CCPA, to address the unique challenges posed by generative AI is essential. This involves clarifying rules around AI data usage, consent, and data subject rights. Moreover, there is a growing need for AI-specific regulations that address the nuances of AI technology, including data handling, bias mitigation, and transparency requirements. Because AI is global in nature, international collaboration and standards are also needed to establish a common framework for data privacy in AI and to facilitate cross-border cooperation and compliance.

Finally, developing ethical AI guidelines and encouraging industry self-regulation and best practices are pivotal. Institutions and organizations can develop ethical guidelines for AI development and usage, focusing on privacy, fairness, and accountability. Such self-regulation within the AI industry, together with the adoption of best practices for data privacy, can significantly contribute to the responsible development of AI technologies.

Future Directions and Opportunities

In the realm of privacy-preserving AI technologies, the future is rich with potential for innovation. One key area of focus is the development of more sophisticated data anonymization techniques. These techniques aim to ensure the privacy of individuals while maintaining the utility of data for AI training, striking a balance that is crucial for ethical AI development. Alongside this, the exploration of advanced encryption methods, including cutting-edge approaches like quantum encryption, is gaining momentum. These methods promise more robust safeguards for data used in AI systems, strengthening security against potential breaches.

Another promising avenue is the exploration of decentralized data architectures. Technologies like blockchain offer new ways to manage and secure data in AI applications. They bring the benefits of increased transparency and traceability, which are vital in building trust and accountability in AI systems.

As AI technology progresses, it will inevitably interact with new and more complex types of data, such as biometric and behavioral information. This trend requires a proactive approach to anticipating and preparing for the privacy implications of these evolving data types. The development of global data privacy standards becomes essential in this context. Such standards need to address the unique challenges posed by AI and the global nature of data and technology, ensuring a harmonized approach to data privacy across borders.

AI applications in sensitive domains like healthcare and finance warrant special attention. In these areas, privacy concerns are especially pronounced because of the highly personal nature of the data involved. Ensuring the ethical use of AI in these domains is not just a technological challenge but a societal imperative.

Collaboration between the technology, legal, and policy sectors is crucial in navigating these challenges. Encouraging interdisciplinary research that brings together experts from various fields is key to developing comprehensive and effective solutions. Public-private partnerships are also vital, promoting the sharing of best practices, resources, and knowledge in the AI and privacy field. Furthermore, educational and awareness campaigns are needed to inform the public and policymakers about the benefits and risks of AI. These campaigns should emphasize the importance of data privacy, helping to foster a well-informed dialogue about the future of AI and its role in society.

Conclusion

The integration of generative AI with robust data privacy measures presents a dynamic and evolving challenge. The future landscape will be shaped by technological advancements, regulatory changes, and the continuous need to balance innovation with ethical considerations. The field can navigate these challenges by fostering collaboration, adapting to emerging risks, and prioritizing privacy and transparency. As AI continues to permeate various aspects of life, ensuring its responsible and privacy-conscious development is essential for its sustainable and beneficial integration into society.

The post The Ethical Algorithm: Balancing AI Innovation with Data Privacy appeared first on Datafloq.