MongoDB has grown from a basic JSON key-value store to one of the most popular NoSQL database solutions in use today. It’s widely supported and provides flexible JSON document storage at scale. It also provides native querying and analytics capabilities. These attributes have led to MongoDB being widely adopted, especially alongside JavaScript web applications.

As capable as it is, there are still instances where MongoDB alone can’t satisfy all of the requirements for an application, so getting a copy of the data into another platform via a change data capture (CDC) solution is required. This can be used to create data lakes, populate data warehouses or for specific use cases like offloading analytics and text search.

In this post, we’ll walk through how CDC works on MongoDB and how it can be implemented, and then delve into the reasons why you might want to implement CDC with MongoDB.

Bifurcation vs Polling vs Change Data Capture

Change data capture is a mechanism that can be used to move data from one data repository to another. There are other options:

- You can bifurcate data coming in, splitting the data into multiple streams that can be sent to multiple data sources. Generally, this means your applications would submit new data to a queue. This is not a great option because it limits the APIs that your application can use to submit data to those that resemble a queue. Applications tend to need the support of higher-level APIs for things like ACID transactions. So, this means we generally want to allow our application to talk directly to a database. The application could submit data via a micro-service or application server that talks directly to the database, but this only moves the problem. These services would still need to talk directly to the database.

- You could periodically poll your front-end database and push data into your analytical platform. While this sounds simple, the details get tricky, particularly if you need to support updates to your data. It turns out this is hard to do in practice. And you have now introduced another process that has to run, be monitored, scale and so on.

So, using CDC avoids these problems. The application can still leverage the database features (maybe via a service) and you don’t have to set up a polling infrastructure. But there is another key difference: using CDC will give you the freshest version of the data. CDC enables true real-time analytics on your application data, assuming the platform you send the data to can consume the events in real time.
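To make the polling difficulty concrete, here is a minimal in-memory sketch with no database involved. The `updated_at` field, the `poll_once` helper and the dictionaries standing in for the source and target systems are all illustrative assumptions, not part of any MongoDB API. It suggests why a poller keyed on a last-modified marker can pick up inserts and updates but has no way to notice a delete.

```python
def poll_once(source, target, last_seen):
    """Copy rows changed since last_seen from source into target.

    source and target are plain dicts of {id: row}. Each row carries a
    hypothetical 'updated_at' counter that the application must maintain.
    Returns the new high-water mark.
    """
    high_water = last_seen
    for doc_id, row in source.items():
        if row["updated_at"] > last_seen:
            target[doc_id] = dict(row)
            high_water = max(high_water, row["updated_at"])
    return high_water


source = {
    1: {"name": "kettle", "updated_at": 1},
    2: {"name": "toaster", "updated_at": 2},
}
target = {}

mark = poll_once(source, target, last_seen=0)  # initial copy

source[1] = {"name": "electric kettle", "updated_at": 3}  # an update
del source[2]                                             # a delete

mark = poll_once(source, target, mark)

print(target[1]["name"])  # the update was picked up
print(2 in target)        # the deleted row is still present in the target
```

Handling the delete would need extra machinery, such as soft-delete flags or periodic full comparisons, which is the kind of detail that makes polling harder than it first appears.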

Options For Change Data Capture on MongoDB

Apache Kafka

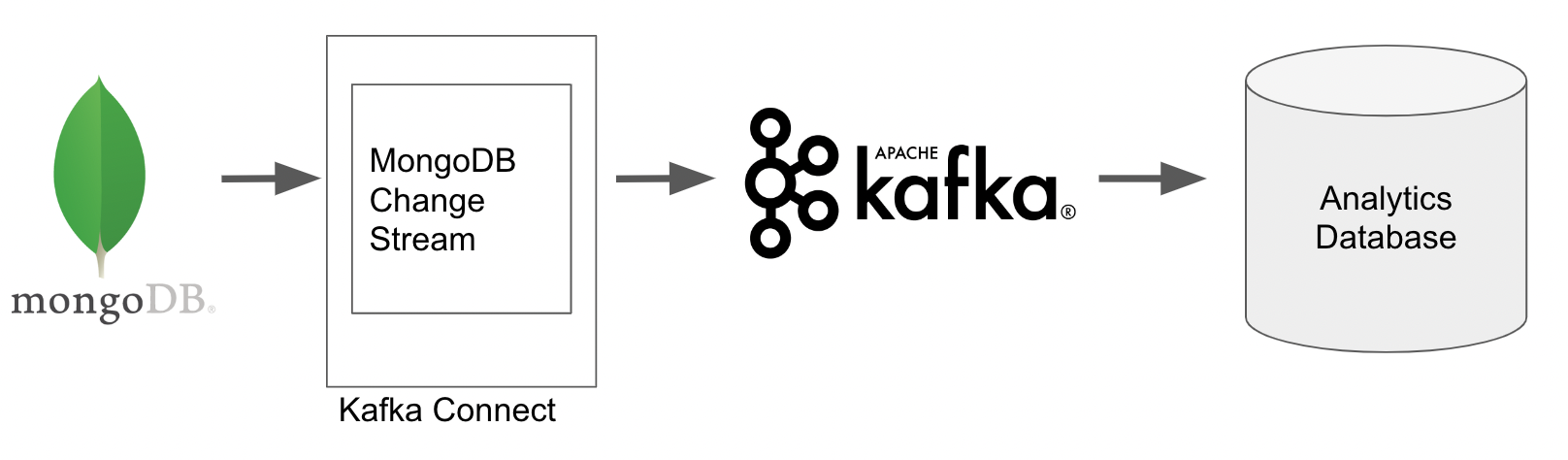

The native CDC architecture for capturing change events in MongoDB uses Apache Kafka. MongoDB provides Kafka source and sink connectors that can be used to write the change events to a Kafka topic and then output those changes to another system such as a database or data lake.

The out-of-the-box connectors make it fairly simple to set up the CDC solution; however, they do require the use of a Kafka cluster. If this is not already part of your architecture then it may add another layer of complexity and cost.
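As a rough illustration of what setting up the source connector involves, the sketch below builds the JSON body that would be registered with a Kafka Connect worker. The connector name, connection URI, database, collection and topic prefix are placeholders chosen for this example, and the `mongo_source_connector` helper is hypothetical. The property names are taken from the MongoDB Kafka source connector; check them against the connector version you deploy.

```python
import json


def mongo_source_connector(name, uri, database, collection, topic_prefix):
    """Build a Kafka Connect registration payload for a MongoDB source."""
    return {
        "name": name,
        "config": {
            "connector.class": "com.mongodb.kafka.connect.MongoSourceConnector",
            "connection.uri": uri,
            "database": database,
            "collection": collection,
            # Topic names are derived from this prefix plus the namespace.
            "topic.prefix": topic_prefix,
            # Ask for the full document on updates, not only the delta.
            "change.stream.full.document": "updateLookup",
        },
    }


# All values below are placeholders for illustration only.
payload = mongo_source_connector(
    name="mongo-orders-source",
    uri="mongodb://localhost:27017",
    database="shop",
    collection="orders",
    topic_prefix="cdc",
)

print(json.dumps(payload, indent=2))
```

The printed JSON is what you would typically submit to the Kafka Connect REST endpoint for creating connectors. A sink connector for the target system would be configured in a similar way.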

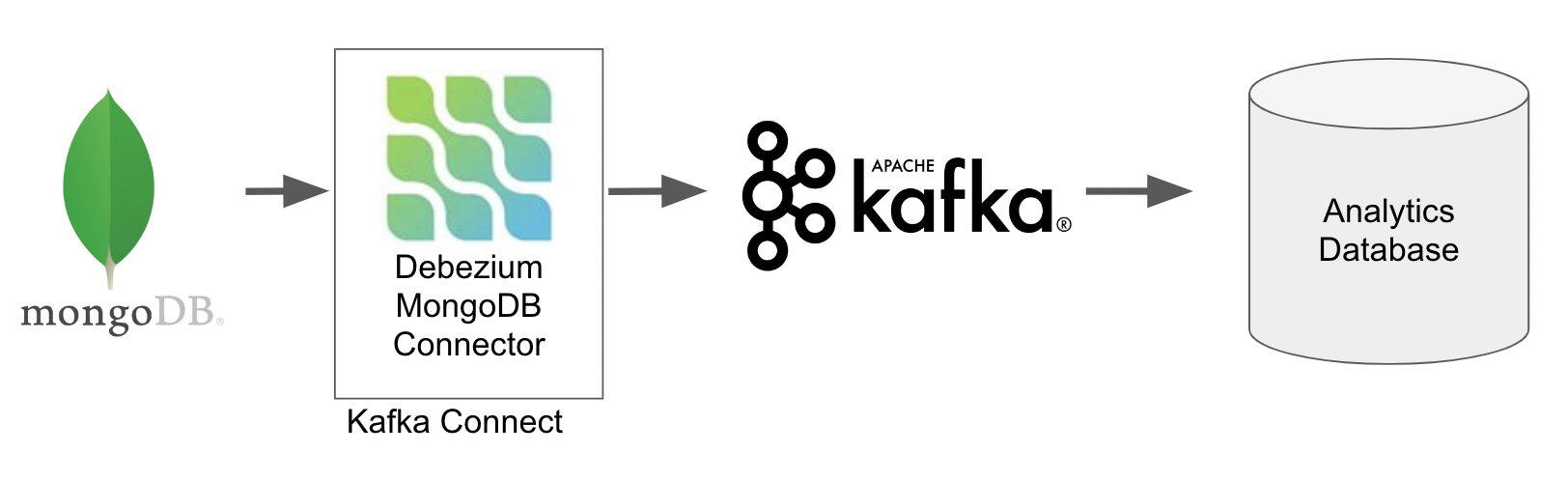

Debezium

It is also possible to capture MongoDB change data capture events using Debezium. If you are familiar with Debezium, this can be trivial.

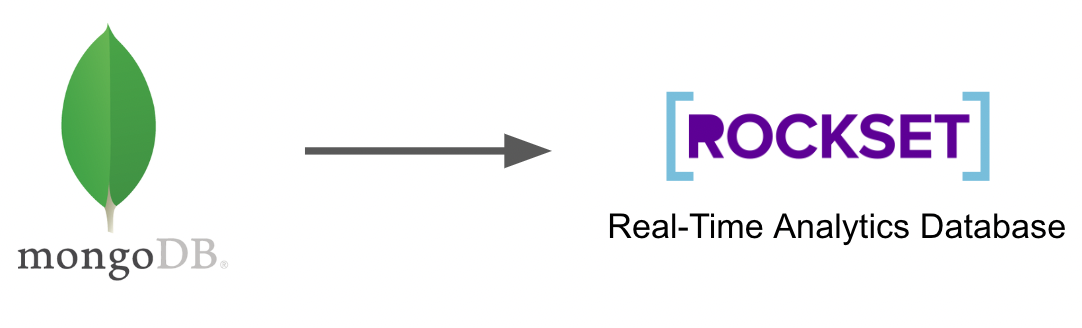

MongoDB Change Streams and Rockset

If your goal is to execute real-time analytics or text search, then Rockset’s out-of-the-box connector that leverages MongoDB change streams is a good choice. The Rockset solution requires neither Kafka nor Debezium. Rockset captures change events directly from MongoDB, writes them to its analytics database, and automatically indexes the data for fast analytics and search.

Your choice to use Kafka, Debezium or a fully integrated solution like Rockset will depend on your use case, so let’s take a look at some use cases for CDC on MongoDB.

Use Cases for CDC on MongoDB

Offloading Analytics

One of the main use cases for CDC on MongoDB is to offload analytical queries. MongoDB has native analytical capabilities that allow you to build up complex transformation and aggregation pipelines to be executed on the documents. However, these analytical pipelines, due to their rich functionality, are cumbersome to write as they use a proprietary query language specific to MongoDB. This means analysts who are used to SQL will face a steep learning curve for this new language.

Documents in MongoDB can also have complex structures. Data is stored as JSON documents that can contain nested objects and arrays, which add further intricacies when building up analytical queries on the data, such as accessing nested properties and exploding arrays to analyze individual elements.
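As an illustration of those intricacies, here is a small sketch using a hypothetical `orders` shape with a nested `customer.country` property and an `items` array. The `pipeline` is the kind of aggregation you would pass to PyMongo’s `Collection.aggregate`, and `units_by_country` is a plain-Python helper written for this example that is intended to mirror it for documents that have both fields, so the logic can be followed without a server.

```python
from collections import defaultdict

# Aggregation pipeline: explode the items array, then total units per country.
pipeline = [
    {"$unwind": "$items"},
    {"$group": {"_id": "$customer.country", "units": {"$sum": "$items.qty"}}},
]


def units_by_country(orders):
    """Plain-Python counterpart of the pipeline above (illustrative only)."""
    totals = defaultdict(int)
    for order in orders:
        country = order["customer"]["country"]  # nested property access
        for item in order.get("items", []):     # one step per array element
            totals[country] += item["qty"]
    return dict(totals)


# Made-up sample documents.
orders = [
    {"_id": 1, "customer": {"country": "UK"},
     "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},
    {"_id": 2, "customer": {"country": "DE"},
     "items": [{"sku": "A", "qty": 4}]},
    {"_id": 3, "customer": {"country": "UK"},
     "items": [{"sku": "C", "qty": 3}]},
]

print(units_by_country(orders))
```

Even for a simple total, the pipeline relies on stage operators and `$`-prefixed field paths that an analyst coming from SQL would have to learn.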

Finally, performing large analytical queries on a production front-end instance can negatively impact user experience, especially if the analytics is being run frequently. This could significantly slow down read and write speeds, which developers generally want to avoid, especially as MongoDB is often chosen specifically for its fast write and read operations. Alternatively, it would require larger and larger MongoDB machines and clusters, increasing cost.

To overcome these challenges, it is common to send data to an analytical platform via CDC so that queries can be run using familiar languages such as SQL without affecting performance of the front-end system. Kafka or Debezium can be used to extract the changes and then write them to a suitable analytics platform, whether this is a data lake, data warehouse or a real-time analytics database.

Rockset takes this a step further by not only directly consuming CDC events from MongoDB, but also supporting SQL queries natively (including JOINs) on the documents, and it provides functionality to manipulate complex data structures and arrays, all within SQL queries. This enables real-time analytics because the need to transform and manipulate the documents before querying is eliminated.

Search Options on MongoDB

Another compelling use case for CDC on MongoDB is to facilitate text searches. Again, MongoDB has implemented features such as text indexes that support this natively. Text indexes allow certain properties to be indexed specifically for search applications. This means documents can be retrieved based on proximity matching and not just exact matches. You can also include multiple properties in the index, such as a product name and a description, so both are used to determine whether a document matches a particular search term.
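A text index over a product name and description might be set up roughly as follows. This is a sketch under assumptions: the `name` and `description` fields and the three helper functions are hypothetical, and `collection` is assumed to be a PyMongo `Collection`, whose `create_index` and `find` methods are the only calls used.

```python
# Index both fields as text so either can match a search term.
TEXT_INDEX_KEYS = [("name", "text"), ("description", "text")]


def ensure_text_index(collection):
    """Create the text index (`collection` assumed to be a PyMongo Collection)."""
    return collection.create_index(TEXT_INDEX_KEYS)


def text_filter(term):
    """Build a query filter that uses the collection's text index."""
    return {"$text": {"$search": term}}


def search_products(collection, term, limit=10):
    """Return up to `limit` documents matching the search term."""
    cursor = collection.find(text_filter(term)).limit(limit)
    return list(cursor)


print(text_filter("kettle"))
```

Every write to the collection has to maintain this index as well, which is the overhead that can make a dedicated search platform attractive.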

While this is powerful, there may still be some instances where offloading to a dedicated database for search is preferable. Again, performance will be the main reason, especially if fast writes are important. Adding text indexes to a collection in MongoDB will naturally add an overhead on every insertion due to the indexing process.

If your use case dictates a richer set of search capabilities, such as fuzzy matching, then you may want to implement a CDC pipeline to copy the required text data from MongoDB into Elasticsearch. However, Rockset is still an option if you are happy with proximity matching, want to offload search queries, and also want to retain all of the real-time analytics benefits discussed previously. Rockset’s search capability is also SQL based, which again might reduce the burden of producing search queries as both Elasticsearch and MongoDB use bespoke languages.

Conclusion

MongoDB is a scalable and powerful NoSQL database that provides a lot of functionality out of the box, including fast read (get by primary key) and write speeds, JSON document manipulation, aggregation pipelines and text search. Even with all this, a CDC solution may still enable greater capabilities and/or reduce costs, depending on your specific use case. Most notably, you might want to implement CDC on MongoDB to reduce the burden on production instances by offloading load-intensive tasks, such as real-time analytics, to another platform.

Kafka and Debezium connectors for MongoDB are available out of the box to help with CDC implementations; however, depending on your existing architecture, this may mean implementing new infrastructure on top of maintaining a separate database for storing the data.

Rockset skips the requirement for Kafka and Debezium with its inbuilt connector, based on MongoDB change streams, reducing the latency of data ingestion and allowing real-time analytics. With automatic indexing and the ability to query structured or semi-structured data natively with SQL, you can write powerful queries on data without the overhead of ETL pipelines, meaning queries can be executed on CDC data within one to two seconds of it being produced.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini where he helped the UK government move into the world of Big Data. He’s currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he’s helping to shape their data warehousing and reporting capability from the ground up.