Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, OpenAI signed up its first higher education customer: Arizona State University.

ASU will collaborate with OpenAI to bring ChatGPT, OpenAI’s AI-powered chatbot, to the university’s researchers, staff and faculty, running an open challenge in February to invite faculty and staff to submit ideas for ways to use ChatGPT.

The OpenAI-ASU deal illustrates the shifting opinions around AI in education as the tech advances faster than curriculums can keep up. Last summer, schools and colleges rushed to ban ChatGPT over plagiarism and misinformation fears. Since then, some have reversed their bans, while others have begun hosting workshops on GenAI tools and their potential for learning.

The debate over the role of GenAI in education isn’t likely to be settled anytime soon. But, for what it’s worth, I find myself increasingly in the camp of supporters.

Yes, GenAI is a poor summarizer. It’s biased and toxic. It makes stuff up. But it can also be used for good.

Consider how a tool like ChatGPT might help students struggling with a homework assignment. It could explain a math problem step-by-step or generate an essay outline. Or it could surface the answer to a question that’d take far longer to Google.

Now, there are reasonable concerns over cheating, or at least what might be considered cheating within the confines of today’s curriculums. I’ve anecdotally heard of students, particularly students in college, using ChatGPT to write large chunks of papers and essay questions on take-home tests.

This isn’t a new problem: paid essay-writing services have been around for ages. But ChatGPT dramatically lowers the barrier to entry, some educators argue.

There’s evidence to suggest that these fears are overblown. But setting that aside for a moment, I say we step back and consider what drives students to cheat in the first place. Students are often rewarded for grades, not effort or understanding. The incentive structure’s warped. Is it any wonder, then, that kids view school assignments as boxes to check rather than opportunities to learn?

So let students have GenAI, and let educators pilot ways to leverage this new tech to reach students where they are. I don’t have much hope for drastic education reform. But perhaps GenAI will serve as a launchpad for lesson plans that get kids excited about subjects they never would’ve explored previously.

Here are some other AI stories of note from the past few days:

Microsoft’s reading tutor: Microsoft this week made Reading Coach, its AI tool that provides learners with personalized reading practice, available at no cost to anyone with a Microsoft account.

Algorithmic transparency in music: EU regulators are calling for laws to force greater algorithmic transparency from music streaming platforms. They also want to tackle AI-generated music and deepfakes.

NASA’s robots: NASA recently showed off a self-assembling robotic structure that, Devin writes, might just become a crucial part of moving off-planet.

Samsung Galaxy, now AI-powered: At Samsung’s Galaxy S24 launch event, the company pitched the various ways that AI could improve the smartphone experience, including through live translation for calls, suggested replies and actions, and a new way to Google search using gestures.

DeepMind’s geometry solver: DeepMind, the Google AI R&D lab, this week unveiled AlphaGeometry, an AI system that the lab claims can solve as many geometry problems as the average International Mathematical Olympiad gold medalist.

OpenAI and crowdsourcing: In other OpenAI news, the startup is forming a new team, Collective Alignment, to implement ideas from the public about how to ensure its future AI models “align to the values of humanity.” At the same time, it’s changing its policy to allow military applications of its tech. (Talk about mixed messaging.)

A Pro plan for Copilot: Microsoft has launched a consumer-focused paid plan for Copilot, the umbrella brand for its portfolio of AI-powered, content-generating technologies, and loosened the eligibility requirements for enterprise-level Copilot offerings. It’s also launched new features for free users, including a Copilot smartphone app.

Deceptive models: Most humans learn the skill of deceiving other humans. So can AI models learn the same? Yes, it seems, and terrifyingly, they’re exceptionally good at it, according to a new study from AI startup Anthropic.

Tesla’s staged robotics demo: Elon Musk’s Optimus humanoid robot from Tesla is doing more stuff, this time folding a t-shirt on a table in a development facility. But as it turns out, the robot’s anything but autonomous at the present stage.

More machine learnings

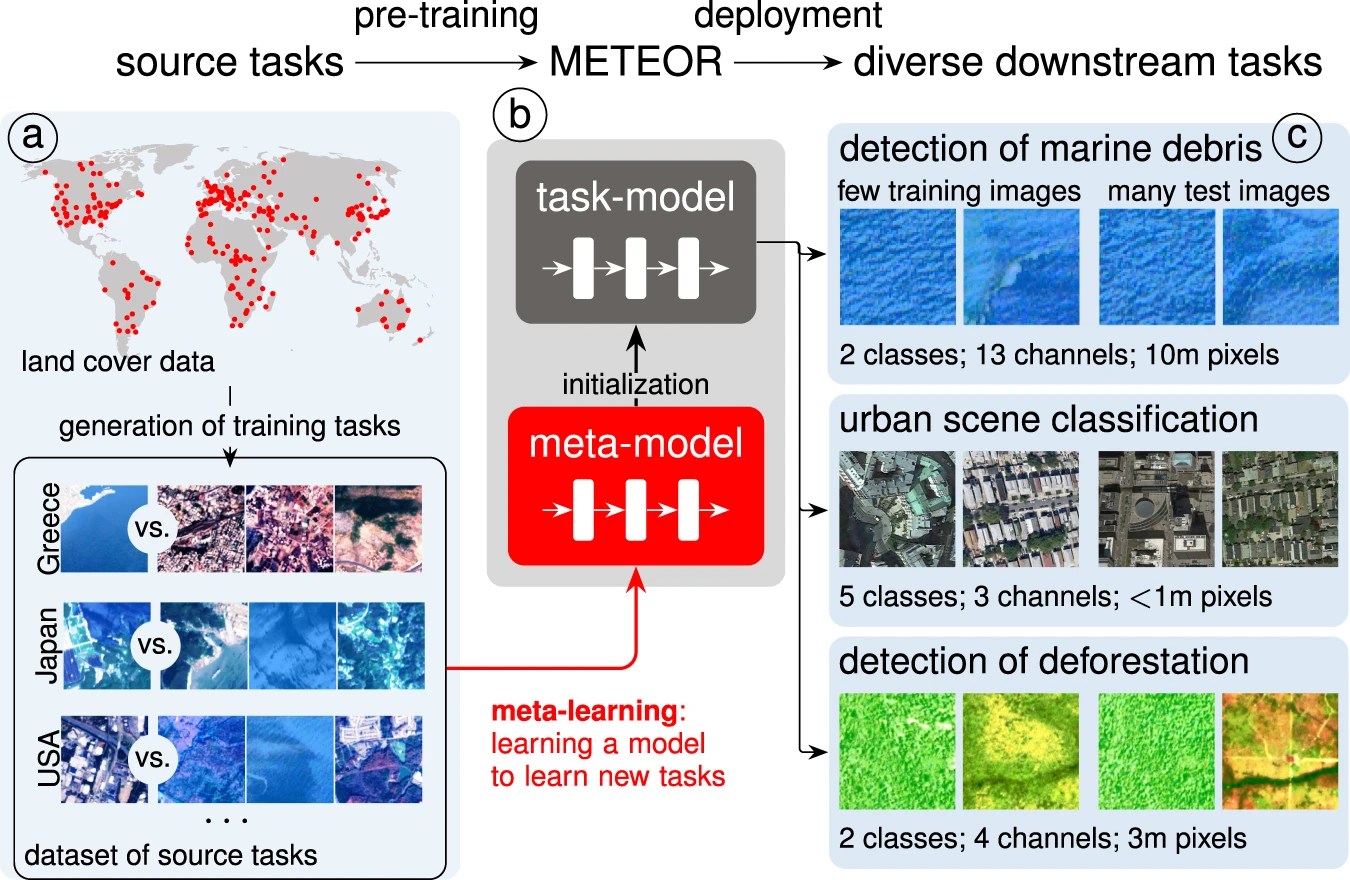

One of the things holding back broader applications of things like AI-powered satellite analysis is the necessity of training models to recognize what may be a fairly esoteric shape or concept. Identifying the outline of a building: easy. Identifying debris fields after flooding: not so easy! Swiss researchers at EPFL are hoping to make it easier to do this with a program they call METEOR.

Image Credits: EPFL

“The problem in environmental science is that it’s often impossible to obtain a big enough dataset to train AI programs for our research needs,” said Marc Rußwurm, one of the project’s leaders. Their new structure for training allows a recognition algorithm to be trained for a new task with just four or five representative images. The results are comparable to models trained on far more data. Their plan is to graduate the system from lab to product with a UI for ordinary people (that is to say, non-AI-specialist researchers) to use it. You can read the paper they published here.

Going the other direction (creating imagery) is a field of intense research, since doing it efficiently could reduce the computation load for generative AI platforms. The most common method is called diffusion, which gradually refines a pure noise source into a target image. Los Alamos National Lab has a new approach they call Blackout Diffusion, which instead starts from a pure black image.

That removes the need for noise to begin with, but the real advance is in the framework taking place in “discrete spaces” rather than continuous ones, greatly reducing the computational load. They say it performs well, and at lower cost, but it’s definitely far from wide release. I’m not qualified to evaluate the effectiveness of this approach (the math is far beyond me), but national labs don’t tend to hype up something like this without reason. I’ll ask the researchers for more info.

AI models are sprouting up all over the natural sciences, where their ability to sift signal out of noise both produces new insights and saves money on grad student data entry hours.

Australia is applying Pano AI’s wildfire detection tech to its “Green Triangle,” a major forestry region. Love to see startups being put to use like this: not only could it help prevent fires, but it also produces valuable data for forestry and natural resource authorities. Every minute counts with wildfires (or bushfires, as they call them down there), so early notifications could be the difference between tens and thousands of acres of damage.

Permafrost reduction as measured by the old model, left, and the new model, right.

Los Alamos gets a second mention (I just realized as I go over my notes) since they’re also working on a new AI model for estimating the decline of permafrost. Existing models for this have a low resolution, predicting permafrost levels in chunks of about 1/3 of a square mile. That’s certainly useful, but with more detail you get less misleading results for areas that might look like 100% permafrost at the larger scale but are clearly less than that when you look closer. As climate change progresses, these measurements need to be exact!

Biologists are finding interesting ways to test and use AI or AI-adjacent models in the many sub-fields of that domain. At a recent conference written up by my pals at GeekWire, tools to track zebras, insects, even individual cells were being shown off in poster sessions.

And on the physics and chemistry side, Argonne NL researchers are looking at how best to package hydrogen for use as fuel. Free hydrogen is notoriously difficult to contain and control, so binding it to a special helper molecule keeps it tame. The problem is hydrogen binds to pretty much everything, so there are billions and billions of possibilities for helper molecules. But sorting through huge sets of data is a machine learning specialty.

“We were looking for organic liquid molecules that hold on to hydrogen for a long time, but not so strongly that they could not be easily removed on demand,” said the project’s Hassan Harb. Their system sorted through 160 billion molecules, and by using an AI screening method they were able to look through 3 million a second, so the whole final process took about half a day. (Of course, they were using quite a large supercomputer.) They identified 41 of the best candidates, which is a piddling number for the experimental team to test in the lab. Hopefully they find something useful; I don’t want to have to deal with hydrogen leaks in my next car.
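
For the curious, here is a quick back-of-the-envelope check of that "about half a day" figure. It is only a sketch: it uses the two numbers quoted above (160 billion molecules, 3 million screened per second) and assumes the rate was sustained for the whole run, which the researchers may not have claimed. The function and variable names are made up for illustration.

```python
# Back-of-the-envelope check of the screening time quoted in the article.
# Assumption: the 3 million molecules/second rate holds for the entire run.

TOTAL_MOLECULES = 160e9        # 160 billion candidates (figure from the article)
MOLECULES_PER_SECOND = 3e6     # 3 million screened per second (figure from the article)
SECONDS_PER_HOUR = 3600


def screening_time_hours(total: float, rate_per_second: float) -> float:
    """Return the hours needed to screen `total` items at a constant rate."""
    return total / rate_per_second / SECONDS_PER_HOUR


hours = screening_time_hours(TOTAL_MOLECULES, MOLECULES_PER_SECOND)
print(f"{hours:.1f} hours")  # prints "14.8 hours"
```

So "half a day" is on the generous side of rounding: at a constant rate the run would take a bit under 15 hours, which is still remarkably quick for 160 billion candidates.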

To end on a word of caution, though: a study in Science found that machine learning models used to predict how patients would respond to certain treatments were highly accurate… within the sample group they were trained on. In other cases, they basically didn’t help at all. This doesn’t mean they shouldn’t be used, but it supports what a lot of people in the business have been saying: AI isn’t a silver bullet, and it must be tested thoroughly in every new population and application it’s applied to.