(MeSSrro/Shutterstock)

The data landscape experienced significant changes in 2023, presenting new opportunities (and potential challenges) for data engineering teams.

I believe we will see the following this year in the areas of analytics, OLAP, data engineering, and the serving layer, empowering teams with better protocols and more choices among a sea of tools.

Data Lake Predictions

Moving on from Hadoop: In 2023, tools such as DuckDB (C++), Polars (Rust) and Apache Arrow (Go, Rust, JavaScript, …) became very popular, starting to show cracks in the complete dominance of the JVM and C/Python in the analytics space.

I believe the pace of innovation outside the JVM will accelerate, sending the existing Hadoop-based architectures into the legacy drawer.

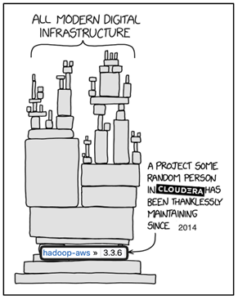

While most companies already aren’t using Hadoop directly, much of the current state of the art is still built on Hadoop’s scaffolding: Apache Spark relies completely on Hadoop’s I/O implementation to access its underlying data. Many lakehouse architectures are based either on Apache Hive-style tables or, even more directly, on the Hive Metastore and its interface to create a tabular abstraction on top of their storage layer.

Source: XKCD, under Creative Commons Attribution-NonCommercial 2.5 License.

Slightly modified by Oz Katz. Cloudera’s logo is trademarked to Cloudera Inc.

While Hadoop and Hive aren’t inherently bad, they no longer represent the state of the art. For one, they’re completely based on the JVM, which is highly performant these days, but still not the best choice if you’re looking to get the absolute best out of CPUs that are simply not getting any faster.

Additionally, Apache Hive is starting to show its age and limitations. It marked a huge step forward in big data processing by abstracting away the underlying distributed nature of Hadoop and exposing a familiar SQL(-ish) table abstraction on top of a distributed file system. Its shortcomings include a lack of transactionality and concurrency control, a lack of separation between metadata and data, and other lessons we’ve learned over the 15+ years of its existence.

I believe this year we’ll see Apache Spark moving on from these roots: Databricks already has a JVM-free implementation of Apache Spark (Photon), while new table formats such as Apache Iceberg are also stepping away from our collective Hive roots by implementing an open specification for table catalogs, as well as providing a more modern approach to the I/O layer.

Battle of the Meta-Stores

With Hive slowly but steadily becoming a thing of the past and open table formats such as Delta Lake and Iceberg becoming ubiquitous, a central component in any data architecture is also being displaced: the “meta-store”, the layer of indirection between files on an object store or filesystem and the tables and entities that they represent.
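To make that layer of indirection concrete, here is a minimal sketch in Python. It is not the API of Hive Metastore, Unity Catalog, Glue, or any real catalog: the `MetaStore` and `Snapshot` names, the version counter, and the `s3://` paths are all assumptions made for illustration. It only shows the core idea that readers ask the meta-store which files make up a table, and that writers swap in a new file list atomically.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """An immutable set of data files that together form one table version."""
    version: int
    files: tuple[str, ...]


@dataclass
class MetaStore:
    """Hypothetical in-memory catalog mapping a table name to its current snapshot."""
    tables: dict[str, Snapshot] = field(default_factory=dict)

    def commit(self, table: str, files: list[str], expected_version: int) -> Snapshot:
        # Optimistic concurrency: reject the commit if another writer got there first.
        current = self.tables.get(table)
        current_version = current.version if current else 0
        if current_version != expected_version:
            raise RuntimeError(f"conflict: {table} is at version {current_version}")
        snapshot = Snapshot(current_version + 1, tuple(files))
        self.tables[table] = snapshot
        return snapshot

    def resolve(self, table: str) -> tuple[str, ...]:
        # Readers ask the catalog which files to scan instead of listing the bucket.
        return self.tables[table].files
```

A query engine would call `resolve("events")` to find the files to read, while a writer would call `commit` with the version it last saw. Whoever controls this small mapping controls access to the table, which is why the openness of the catalog matters as much as the openness of the file format.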

While the table formats are open, it seems that their meta-stores are growing increasingly proprietary and locked down.

Databricks is aggressively pushing users to its Unity Catalog, AWS has Glue, and Snowflake has its own catalog implementation, too. These are not interoperable, and in many ways become a means of vendor lock-in for users looking to leverage the openness afforded by the new table formats. I believe that at some point the pendulum will swing back, as users push towards more standardization and flexibility.

Big Data Engineering as a Practice Will Mature

As analytics and data engineering become more prevalent, the body of collective knowledge is growing and best practices are beginning to emerge.

Data mesh and data products will emerge in 2024, the author predicts (Chombosan/Shutterstock)

In 2023 we saw tools that promote a structured dev-test-release approach to data engineering becoming more mainstream. dbt is hugely popular and established. Observability and monitoring are now also seen as more than just nice-to-haves, judging by the success of tools such as Great Expectations, Monte Carlo, and other quality and observability platforms. lakeFS advocates for versioning the data itself to allow git-like branching and merging, making it possible to build robust, repeatable dev-test-release pipelines.

Additionally, we’re now seeing patterns such as the Data Mesh and Data Products being promoted by everyone, from Snowflake and Databricks to startups popping up to fill the gap in tooling that still exists around these patterns.

I believe we’ll see a surge of tools that aim to help us achieve these goals in 2024. From data-centric monitoring and logging to testing harnesses and better CI/CD options, there’s a lot of catching up to do with software engineering practices, and this is the right time to close these gaps.

Serving Layer Predictions

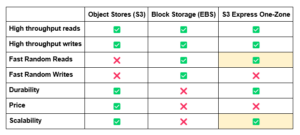

Cloud native applications will move a larger share of their state to object storage: At the end of 2023, AWS announced one of the biggest features since its inception in 2006 coming to S3, its core storage service.

That feature, “S3 Express One Zone,” allows users to use the same* standard object store API as provided by S3, but with consistent single-digit millisecond latency to access data, at roughly half the cost for API calls.

This marks a dramatic change. Until now, the use cases for object storage were somewhat narrow: while object stores allow storing practically infinite amounts of data, you’d have to settle for longer access times, even if you’re only looking to read small amounts of data.

This trade-off obviously made them very popular for analytics and big data processing, where latency is often less important than overall throughput, but it meant that low latency systems such as databases, HPC and user-facing applications couldn’t really rely on them as part of their critical path.

If they made any use of the object store, it would typically be in the form of an archival or backup storage tier. If you wanted fast access, you had to opt for a block device, attached to your instance in some form, and forgo the benefits of scalability and durability that object stores provide.

I believe S3 Express One Zone is the first step toward changing that.

With consistent, low latency reads, it’s now theoretically possible to build fully object-store-backed databases that don’t rely on block storage at all. S3 is the new disk drive.

With that in mind, I predict that in 2024 we will see more operational databases starting to adopt that concept in practice: allowing databases to run on completely ephemeral compute environments, relying solely on the object store for persistence.

Operational Databases Will Begin to Disaggregate

With the previous prediction in mind, we can take this approach a step further: what if we standardized the storage layer for OLTP the same way we standardized it for OLAP?

One of the biggest promises of the Data Lake is the ability to separate storage and compute, so that data being written by one technology could be read by another.

This gives developers the freedom to choose the best-of-breed stack that best fits their use case. It took us a while to get there, but with technologies such as Apache Parquet, Delta Lake, and Apache Iceberg, this is now doable.

What if we manage to standardize the formats used for operational data access as well? Let’s imagine a key/value abstraction (perhaps similar to LSM sstables?) that allows storing sorted key/value pairs, optimally laid out for object storage.

We can deploy a stateless RDBMS to provide query parsing/planning/execution capabilities on top, even as an on-demand lambda function. Another system could use that same storage abstraction to store an inverted index for search, or a vector similarity index for a cool generative AI application.
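As a rough illustration of the key/value abstraction imagined above, the Python sketch below serializes sorted pairs into one immutable blob, the kind of body that could be written as a single object, and then serves point lookups and range scans from it. Everything here is an assumption made for the example: the `build_block` and `SortedBlock` names are hypothetical, and JSON stands in for the compact binary layout with index blocks that a real sstable-style format would use.

```python
from __future__ import annotations

import bisect
import json


def build_block(pairs: dict[str, str]) -> bytes:
    """Serialize key/value pairs, sorted by key, into one immutable object body."""
    ordered = sorted(pairs.items())
    return json.dumps(ordered).encode("utf-8")


class SortedBlock:
    """Read-only view over a blob produced by build_block."""

    def __init__(self, body: bytes) -> None:
        entries = json.loads(body.decode("utf-8"))
        self._keys = [key for key, _ in entries]
        self._values = [value for _, value in entries]

    def get(self, key: str) -> str | None:
        # Binary search is valid because keys were sorted at write time.
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._values[i]
        return None

    def scan(self, start: str, end: str) -> list[tuple[str, str]]:
        # Range scan over [start, end), the access pattern an index or SQL engine needs.
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, end)
        return list(zip(self._keys[lo:hi], self._values[lo:hi]))
```

Because the blob is immutable and self-describing, any stateless process that can fetch it can answer `get` and `scan` calls, whether that process is a SQL engine, a search index, or something else entirely.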

While I don’t believe a year from now we’ll be running all our databases as lambda functions, I do think we will see a shift from “object stores as an archive tier” to “object store as the system of record” happening in operational databases as well.

Final Thoughts

I’m optimistic that 2024 will continue to evolve the data landscape in mostly the right directions: better abstractions, improved interfaces between different parts of the stack, and new capabilities unlocked by technological evolution.

Yes, it won’t always be perfect, and ease of use will be traded off for less flexibility, but having seen this ecosystem grow over the last 20 years, I think we’re in better shape than we’ve ever been.

We have more choice, better protocols and tools, and a lower barrier to entry than ever before. And I don’t think that’s likely to change.

About the author: Oz Katz is the co-founder and CTO of Treeverse, the company behind open source lakeFS, which provides version control for data lakes using Git-like operations.

