(Roman Samborskyi/Shutterstock)

If you’re trying to pin down the rules and regulations for artificial intelligence in the United States, good luck. They’re evolving quickly at the moment, which makes them a moving target for American companies that want to stay on the straight and narrow. However, the general thrust of the regulations, as well as work done by the Europeans, gives us an indication of where they will likely end up in the US.

Most eyes are on the European Union’s AI Act when it comes to setting a global standard for AI regulation. The law, which was first proposed in April 2021, was initially approved by the European Parliament in June 2023, followed by the approval of certain rules last month. The act still has one more vote to clear this year, after which there would be a 12- to 24-month waiting period before the law begins to be enforced.

The EU AI Act would create a common regulatory and legal framework for the use of AI in Europe. It would control both how AI is developed and what companies can use it for, and would set penalties for failure to adhere to its requirements.

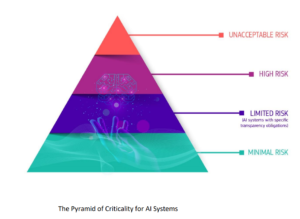

There are three broad categories of AI usage that the AI Act is seeking to regulate, according to Traci Gusher, Americas Data and Analytics Leader for EY. At the top of the list are AI uses that would be banned, like remote biometric authentication, such as facial recognition.

Traci Gusher, Americas Data and Analytics Leader for EY, speaking at Tabor Communications’ HPC + AI on Wall Street, September 2023

“So the idea that you could be remote anywhere in the world looking at a face that anyone in Europe and identify them for insert here reasons, was part of what was suggested as being inappropriate use of artificial intelligence,” Gusher said during last fall’s 2023 HPC + AI on Wall Street conference in New York City.

The second category is high-risk AI usage, or AI uses that may be appropriate but also need to be more closely governed, and may require approval and monitoring by regulators, Gusher said.

“These include where you’re dropping AI on things like monitoring infrastructure, in usage in education and schools and law enforcement and a number of other areas,” she said. “And what this really says is that, if you’re using AI for one of these types of purposes, it has to be governed more closely, and in fact, it has to be registered with the EU.”

The third area of the EU AI Act pertains to GenAI and covers transparency, which isn’t exactly a GenAI strong suit. According to Gusher, the EU AI Act will put certain limits on GenAI usage.

“Really the crux of what the law dictates for generative AI is that it has to be transparent,” she said. “There’s a lot of different pieces of the legislation that are listed here, as well as some of the frameworks and guidelines, to talk about transparency, but it got really specific in saying that [if] generative AI is being used in the production of text, of voice, of video, of anything, it has to clearly state that it’s using it.”

Europe is leading the way in AI regulation around the globe with the AI Act, just as it did with the General Data Protection Regulation (GDPR) several years ago. But the EU AI Act is not the only game in town when it comes to AI regulation, Gusher said during her presentation.

China is also moving forward with its own AI regulation. Dubbed the Internet Information Service Algorithmic Recommendation Management Provisions, the law is really aimed at ensuring that AI and machine learning uses are carried out in an ethical manner, that they uphold Chinese morality, are accountable and transparent, and “actively spread positive energy.”

The Chinese IISARMP (for lack of a better name) went into effect in March 2022, according to an August 2023 Latham & Watkins brief. The People’s Republic of China added another law in January 2023 to regulate “deep synthesis” and accounted for generative AI with another new law in July 2023.

AI is also coming under regulation in the Great White North. The Canadian legislature is still considering Bill C-27, a far-reaching addition to the law that would implement certain data privacy requirements as well as regulate AI. It’s unclear whether the law will go into effect before the Canadian election in 2025.

The regulations are not as far along in the US. The National AI Initiative Act of 2020, which was signed into law by President Trump, was aimed primarily at increasing AI investment, opening up federal computing resources for AI, setting AI technical standards, building an AI workforce, and engaging with allies on AI. According to Gusher, it has not resulted in AI regulation.

President Biden went a bit further with his October 2023 executive order, which requires developers of foundation models to notify the government when training a model and to share the results of the tests. It also requires the Department of Commerce to develop standards for detecting AI-generated content, such as through watermarks. However, the executive order lacks the force of law.

There have been some attempts to pass a national law. Senators Richard Blumenthal and Josh Hawley introduced a bipartisan AI framework last August, but it hasn’t moved forward yet. In lieu of new laws passed by Congress, a number of states have picked up their AI regulation pens. According to Gusher, no fewer than 25 states, plus the District of Columbia, have introduced AI bills, and 14 of them have adopted resolutions or enacted legislation.

Gusher points out that not all AI rules may come from the national or state legislatures. For example, one year ago, the federal government’s National Institute of Standards and Technology (NIST) published an AI risk management framework that aims to help organizations adopt AI in a controlled and managed manner.

The NIST Risk Management Framework (RMF) encourages adopters to get a handle on AI by adopting its recommendations in four areas: Map, Measure, Manage, and Govern. After mapping out their AI tech and usage, they devise methods to measure risks in a qualitative and quantitative way. These measurements help the organizations devise methods to manage the risks in an ongoing fashion. Together these capabilities give the organizations the means to govern their AI and its risks.

While the NIST RMF is voluntary and not backed by any government force, it’s still gaining quite a bit of momentum in terms of adoption across industry, Gusher said.

“The NIST framework is really the one that everyone is rooting in, in terms of starting to think about what kinds of standards and frameworks should be applied to the artificial intelligence that they’re building within their organizations,” she said. “It’s a pretty robust and comprehensive framework that gives us a good roadmap to the types of things that organizations need to think about, as well as the things that they’re going to need to monitor and govern. And it’s really the framework that’s being recognized as the basis for what will help organizations prepare for impending regulation.”

Eventually, AI regulation will come to the US, Gusher said. When it does, it will most likely follow the pattern set by GDPR, which has been replicated by numerous states in the US, including California with its CCPA (and subsequent CPRA). Whatever AI regulation eventually emerges in the US, Gusher said it will likely resemble the EU AI Act.

“Europe was really the first group of countries to align around a standard for data privacy and customer protection for data privacy globally. The result of which is that not only is it the standard in Europe, but it has been used as a basis and the replication for legislation around the world,” she said.

“The same types of thing we expect to happen with artificial intelligence regulation, where once the EU lands on and deploys this – the finalization of this legislation is scheduled to be completed by the end of this year [2023] and enacted next year [2024],” she continued. “Once we have a clear grasp on exactly what is going to be deployed in Europe, we will expect that we will start to see other countries follow suit outside of Europe.”
