Databricks recently introduced the Data Intelligence Platform, a natural evolution of the lakehouse architecture we pioneered. The idea of a Data Intelligence Platform is that of a single, unified platform that deeply understands an organization's unique data, empowering anyone to easily access the data they need and quickly build turnkey custom AI applications.

Every dashboard, app, and model built on a Data Intelligence Platform requires reliable data to function properly, and reliable data requires the best data engineering practices. Data engineering is core to everything we do: Databricks has empowered data engineers with best practices for years through Spark, Delta Lake, Workflows, Delta Live Tables, and newer Gen AI features like Databricks Assistant.

In the Age of AI, Data Engineering best practices become even more mission-critical. So while we're committed to democratizing access to the explicitly Generative AI functionality that will continue unlocking the intelligence of the Data Intelligence Platform, we're just as committed to innovating at our core through foundational data engineering offerings. After all, nearly 3/4 of executives believe unreliable data is the single biggest threat to the success of their AI initiatives.

At Data + AI Summit in June 2023, we announced a slate of new feature launches across the Data Engineering and Streaming portfolio, and we're pleased to recap several new developments in the portfolio from the months since then. Here they are:

Analyst Recognition

Databricks was recently named a Leader in The Forrester Wave™: Cloud Data Pipelines, Q4 2023. In Forrester's 26-criterion evaluation of cloud data pipeline providers, they identified the most significant ones and researched, analyzed, and scored them. This report shows how each provider measures up and helps data management professionals select the right one for their needs.

You can read this report, including Forrester's take on leading vendors' current offering(s), strategy, and market presence, here.

We're proud of this recognition and placement, and we believe the Databricks Data Intelligence Platform is the best place to build data pipelines, with your data, for all your AI and analytics initiatives. In executing on this vision, here are a number of new features and capabilities from the last 6 months.

Data Ingestion

In November we announced our acquisition of Arcion, a leading provider of real-time data replication technologies. Arcion's capabilities will enable Databricks to provide native solutions to replicate and ingest data from various databases and SaaS applications, enabling customers to focus on the real work of creating value and AI-driven insights from their data.

Arcion's code-optional, low-maintenance Change Data Capture (CDC) technology will help power new Databricks platform capabilities that enable downstream analytics, streaming, and AI use cases through native connectors to enterprise database systems such as Oracle, SQL Server and SAP, as well as SaaS applications such as Salesforce and Workday.
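To make the CDC idea concrete, here is a minimal Python sketch of applying an ordered stream of change events (inserts, updates, deletes) to a keyed target table. It is a toy model for illustration only, not Arcion's or Databricks' implementation; the event shape (`seq`, `op`, `key`, `row`) and the `apply_cdc_events` helper are hypothetical.

```python
def apply_cdc_events(target, events):
    """Apply CDC events to a keyed target table (a dict of key -> row).

    Events are applied in source commit order (the hypothetical `seq` field),
    so out-of-order delivery still yields the correct final state.
    """
    for event in sorted(events, key=lambda e: e["seq"]):
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = event["row"]  # upsert the latest row image
        elif op == "delete":
            target.pop(key, None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Events arrive out of order; seq reflects the order they happened in the source DB.
events = [
    {"seq": 3, "op": "update", "key": 1, "row": {"name": "Ada", "tier": "silver"}},
    {"seq": 1, "op": "insert", "key": 1, "row": {"name": "Ada", "tier": "gold"}},
    {"seq": 4, "op": "delete", "key": 2, "row": None},
    {"seq": 2, "op": "insert", "key": 2, "row": {"name": "Bob", "tier": "bronze"}},
]
result = apply_cdc_events({}, events)
print(result)  # {1: {'name': 'Ada', 'tier': 'silver'}}
```

Ordering by a source-assigned sequence number is the design choice that matters here: without it, a late-arriving insert could overwrite a newer update.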

Spark Structured Streaming

Spark Structured Streaming is the best engine for stream processing, and Databricks is the best place to run your Spark workloads. The following enhancements to Databricks streaming, built on the Spark Structured Streaming engine, expand on years of streaming innovation to empower use cases like real-time analytics, real-time AI + ML, and real-time operational applications.

Apache Pulsar support

Apache Pulsar is an all-in-one messaging and streaming platform and a top 10 Apache Software Foundation project.

One of the tenets of Project Lightspeed is ecosystem expansion: being able to connect to a variety of popular streaming sources. In addition to preexisting support for message queues like Kafka, Kinesis, Event Hubs, Azure Synapse and Google Pub/Sub, in Databricks Runtime 14.1 and above you can use Structured Streaming to stream data from Apache Pulsar on Databricks. As it does with these other messaging/streaming sources, Structured Streaming provides exactly-once processing semantics for data read from Pulsar sources.

You can see all the supported sources here, and the documentation on the Pulsar connector specifically here.
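Exactly-once processing in Structured Streaming rests on three ingredients: a replayable source, deterministic micro-batch boundaries recorded in a checkpoint, and a sink that recognizes a replayed batch. The toy Python model below is a simplified illustration of that idea, not Spark's actual implementation, and every name in it is hypothetical. It shows why a crash after writing a batch, but before the checkpoint records it as done, does not produce duplicates. (On Databricks you would instead read with `spark.readStream.format("pulsar")` plus connection options such as `service.url` and `topics`; check the Pulsar connector documentation for the exact option names.)

```python
from dataclasses import dataclass, field


@dataclass
class ToySink:
    """Idempotent sink: remembers which micro-batch IDs it has already committed."""
    rows: list = field(default_factory=list)
    committed_batches: set = field(default_factory=set)

    def write_batch(self, batch_id, records):
        # A replayed batch carries the same ID, so it is skipped instead of duplicated.
        if batch_id in self.committed_batches:
            return
        self.rows.extend(records)
        self.committed_batches.add(batch_id)


def run_stream(source, sink, checkpoint, batch_size, fail_after_write=None):
    """Read a replayable, ordered log in fixed-size micro-batches.

    checkpoint["offset"] is the next offset to read. It only advances after the
    sink write succeeds, so a crash causes the same batch to be replayed.
    """
    while checkpoint["offset"] < len(source):
        start = checkpoint["offset"]
        end = min(start + batch_size, len(source))
        batch_id = start // batch_size  # deterministic: same offsets -> same ID
        sink.write_batch(batch_id, source[start:end])
        if batch_id == fail_after_write:
            raise RuntimeError(f"simulated crash after writing batch {batch_id}")
        checkpoint["offset"] = end


log = ["m1", "m2", "m3", "m4", "m5"]
sink, ckpt = ToySink(), {"offset": 0}
try:
    run_stream(log, sink, ckpt, batch_size=2, fail_after_write=1)
except RuntimeError:
    pass  # the query restarts from the same checkpoint
run_stream(log, sink, ckpt, batch_size=2)
print(sink.rows)  # ['m1', 'm2', 'm3', 'm4', 'm5']
```

Batch 1 is written twice in this run, but the second write is recognized by its batch ID and ignored, so each message lands in the sink exactly once.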

UC View as streaming source

In Databricks Runtime 14.1 and above, you can use Structured Streaming to perform streaming reads from views registered with Unity Catalog. See the documentation for more information.

AAD auth support

Databricks now supports Azure Active Directory authentication, a required authentication protocol for many organizations, when using the Databricks Kafka connector with Azure Event Hubs.

Delta Live Tables

Delta Live Tables (DLT) is a declarative ETL framework for the Databricks Data Intelligence Platform that helps simplify streaming and batch ETL cost-effectively. Creating data pipelines with DLT lets users simply define the transformations to perform; task orchestration, cluster management, monitoring, data quality and error handling are automatically taken care of.

Append Flows API

The append_flow API allows writing multiple streams to a single streaming table in a pipeline (for example, from multiple Kafka topics). This enables users to:

- Add and remove streaming sources that append data to an existing streaming table without requiring a full refresh; and

- Update a streaming table by appending missing historical data (backfilling).

See the documentation for exact syntax and examples here.
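The behavior described above can be modeled with a toy in plain Python: one target table fed by several independent flows, each tracking its own progress. This is a conceptual sketch with hypothetical names, not the DLT API; in a DLT pipeline the same shape is declared with `dlt.create_streaming_table()` and the `@dlt.append_flow(target=...)` decorator, as shown in the documentation linked above.

```python
class ToyStreamingTable:
    """Toy model: one target table fed by several independent append flows."""

    def __init__(self):
        self.rows = []
        self._flows = {}    # flow name -> source (an append-only list)
        self._offsets = {}  # flow name -> number of source rows already appended

    def add_flow(self, name, source):
        # A new flow starts at offset 0; existing flows keep their progress,
        # so adding a source does not force a full refresh of the table.
        self._flows[name] = source
        self._offsets.setdefault(name, 0)

    def remove_flow(self, name):
        # Rows this flow already appended stay in the table.
        self._flows.pop(name, None)

    def update(self):
        """Append only rows each active flow has not processed yet (incremental)."""
        for name, source in self._flows.items():
            start = self._offsets[name]
            self.rows.extend(source[start:])
            self._offsets[name] = len(source)


topic1, topic2 = ["t1-a", "t1-b"], ["t2-a"]
table = ToyStreamingTable()
table.add_flow("topic1", topic1)
table.update()
table.add_flow("topic2", topic2)  # add a second Kafka-like source
topic1.append("t1-c")
table.update()
table.add_flow("backfill", ["hist-1", "hist-2"])  # one-off historical backfill
table.update()
table.remove_flow("topic2")
topic2.append("t2-b")  # ignored: the flow was removed
table.update()
print(table.rows)  # ['t1-a', 't1-b', 't1-c', 't2-a', 'hist-1', 'hist-2']
```

Keeping offsets per flow rather than per table is what lets sources be added, removed, or backfilled independently without reprocessing existing data.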

UC Integration

Unity Catalog is the key to unified governance on the Data Intelligence Platform across all your data and AI assets, including DLT pipelines. There have been several recent enhancements to the Delta Live Tables + Unity Catalog integration. The following are now supported:

- Writing tables to Unity Catalog schemas with custom storage locations

- Cloning DLT pipelines from the Hive Metastore to Unity Catalog, making it easier and faster to migrate HMS pipelines to Unity Catalog

You can review the full documentation of what's supported here.

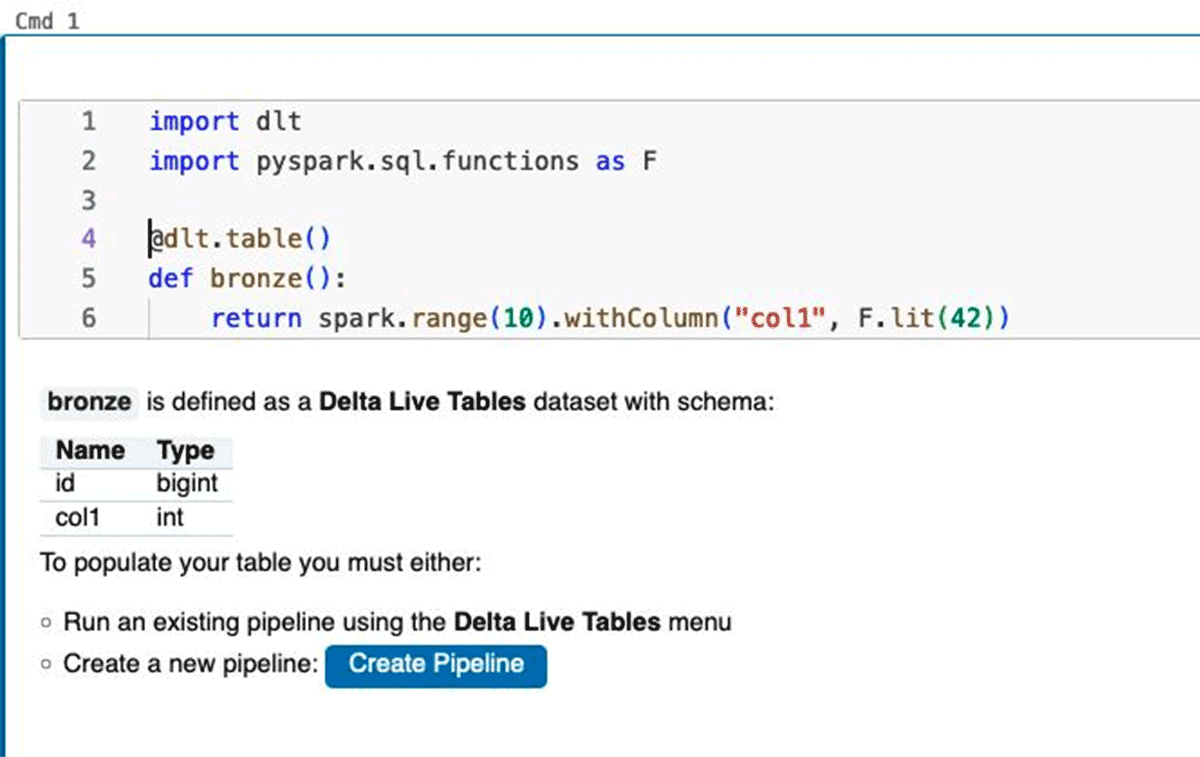

Developer Experience + Notebook Enhancements

Using an interactive cluster with Databricks Runtime 13.3 and above, users can now catch syntax and analysis errors in their notebook code early by executing individual notebook cells that define DLT code on a regular DBR cluster, without having to actually process any data. This allows issues like syntax errors, wrong table names or wrong column names to be caught early in the development process, without having to execute a pipeline run. Users can also incrementally build and resolve the schemas for DLT datasets across notebook cell executions.

Google Cloud GA

DLT pipelines are now generally available on Google Cloud, empowering data engineers to build reliable streaming and batch data pipelines effortlessly across Google Cloud environments. By expanding the availability of DLT pipelines to Google Cloud, Databricks reinforces its commitment to providing a partner-friendly ecosystem while offering customers the flexibility to choose the cloud platform that best suits their needs. Together with the Pub/Sub streaming connector, we're making it easier to work with streaming data pipelines on Google Cloud.

Databricks Workflows

Enhanced Control Flow

An important part of data orchestration is control flow: the management of task dependencies and of how those tasks are executed. That's why we've made some important additions to control flow capabilities in Databricks Workflows, including the introduction of conditional execution of tasks and of job parameters. Conditional execution lets users add conditions that affect the way a workflow executes, by adding "if/else" logic to a workflow and defining more sophisticated multi-task dependencies. Job parameters let you define key/value pairs that are made available to every task in a workflow and can help control the way a workflow runs by adding more granular configuration. You can learn more about these new capabilities in this blog.
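As a sketch of how these two features fit together, the Python snippet below builds a job definition in the shape used by the Databricks Jobs API 2.1: a job-level `parameters` list, a `condition_task` that compares a parameter reference against a literal, and downstream tasks that depend on the condition's `"true"` or `"false"` outcome. The job name, notebook paths, and `env` parameter are hypothetical, compute settings are omitted for brevity, and field names should be checked against the Jobs API reference before use.

```python
import json

# Hypothetical job: the name, notebook paths and "env" parameter are illustrative only.
# Compute configuration (clusters or serverless) is omitted to keep the focus on control flow.
job_settings = {
    "name": "nightly_etl",
    # Job parameters: key/value pairs available to every task in the job.
    "parameters": [{"name": "env", "default": "dev"}],
    "tasks": [
        {
            "task_key": "ingest",
            "notebook_task": {"notebook_path": "/Workspace/etl/ingest"},
        },
        {
            # The if/else branch point: this task evaluates to "true" or "false".
            "task_key": "is_prod",
            "depends_on": [{"task_key": "ingest"}],
            "condition_task": {
                "op": "EQUAL_TO",
                "left": "{{job.parameters.env}}",
                "right": "prod",
            },
        },
        {
            # Runs only when is_prod evaluated to true.
            "task_key": "publish",
            "depends_on": [{"task_key": "is_prod", "outcome": "true"}],
            "notebook_task": {"notebook_path": "/Workspace/etl/publish"},
        },
        {
            # Runs only when is_prod evaluated to false.
            "task_key": "validate_only",
            "depends_on": [{"task_key": "is_prod", "outcome": "false"}],
            "notebook_task": {"notebook_path": "/Workspace/etl/validate"},
        },
    ],
}

# Serialize to the JSON payload you might submit when creating the job.
print(json.dumps(job_settings, indent=2))
```

Overriding `env` to `prod` at run time would route the run through `publish`; the default `dev` routes it through `validate_only`.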

Modular Orchestration

As our customers build more complex workflows with larger numbers of tasks and dependencies, it becomes increasingly harder to maintain, test, and monitor these workflows. That's why we've added modular orchestration to Databricks Workflows: the ability to break down complex workflows into "child jobs" that are invoked as tasks within a higher-level "parent job". This capability lets organizations split DAGs so they can be owned by different teams and reuse workflows, and in doing so enables faster development and more reliable operations when orchestrating large workflows.
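A minimal sketch of a parent job, again in the Jobs API 2.1 shape: each task uses `run_job_task` to trigger an entire child job, and `depends_on` orders the children. The job IDs and names are hypothetical. The helper `execution_order` is also illustrative; it uses Python's standard `graphlib` (3.9+) to topologically sort the tasks, which is the kind of DAG ordering the orchestrator performs.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical IDs of two child jobs, e.g. owned by different teams.
INGEST_JOB_ID = 111
REPORTING_JOB_ID = 222

parent_job = {
    "name": "parent_orchestrator",
    "tasks": [
        # Each run_job_task triggers a whole child job as a single task.
        {"task_key": "run_ingest", "run_job_task": {"job_id": INGEST_JOB_ID}},
        {
            "task_key": "run_reporting",
            "depends_on": [{"task_key": "run_ingest"}],
            "run_job_task": {"job_id": REPORTING_JOB_ID},
        },
    ],
}


def execution_order(tasks):
    """Return task keys in an order that respects depends_on (a topological sort)."""
    # TopologicalSorter expects a mapping of node -> its predecessors.
    graph = {t["task_key"]: {d["task_key"] for d in t.get("depends_on", [])} for t in tasks}
    return list(TopologicalSorter(graph).static_order())


print(json.dumps(parent_job, indent=2))
print(execution_order(parent_job["tasks"]))  # ['run_ingest', 'run_reporting']
```

Because the parent only references child jobs by ID, each team can change the internals of its own child job without editing the parent.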

Summary of Recent Innovation

This concludes the summary of features we've launched in the Data Engineering and Streaming portfolio since Data + AI Summit 2023. Below is a full inventory of launches we've blogged about over the last year, since the beginning of 2023:

You can also stay tuned to the quarterly roadmap webinars to learn what's on the horizon for the Data Engineering portfolio. It's an exciting time to be working with data, and we're excited to partner with Data Engineers, Analysts, ML Engineers, Analytics Engineers, and more to democratize data and AI within your organizations!