We’re introducing a new Rockset Integration for Apache Kafka that offers native support for Confluent Cloud and Apache Kafka, making it simpler and faster to ingest streaming data for real-time analytics. This new integration comes on the heels of several new product features that make Rockset more affordable and accessible for real-time analytics, including SQL-based rollups and transformations.

With the Kafka Integration, users no longer need to build, deploy or operate any infrastructure component on the Kafka side. Here’s how Rockset is making it easier to ingest event data from Kafka with this new integration:

- It’s managed entirely by Rockset and can be set up with just a few clicks, in keeping with our philosophy of making real-time analytics accessible.

- The integration is continuous, so any new data in the Kafka topic gets indexed in Rockset, delivering an end-to-end data latency of two seconds.

- The integration is pull-based, ensuring that data can be reliably ingested even in the face of bursty writes, and requires no tuning on the Kafka side.

- There is no need to pre-create a schema to run real-time analytics on event streams from Kafka. Rockset indexes the entire data stream, so when new fields are added, they’re immediately exposed and made queryable using SQL.

- We’ve also enabled the ingest of historical and real-time streams so that customers can access a 360 view of their data, a common real-time analytics use case.

In this blog, we introduce how the Kafka Integration with native support for Confluent Cloud and Apache Kafka works and walk through how to run real-time analytics on event streams from Kafka.

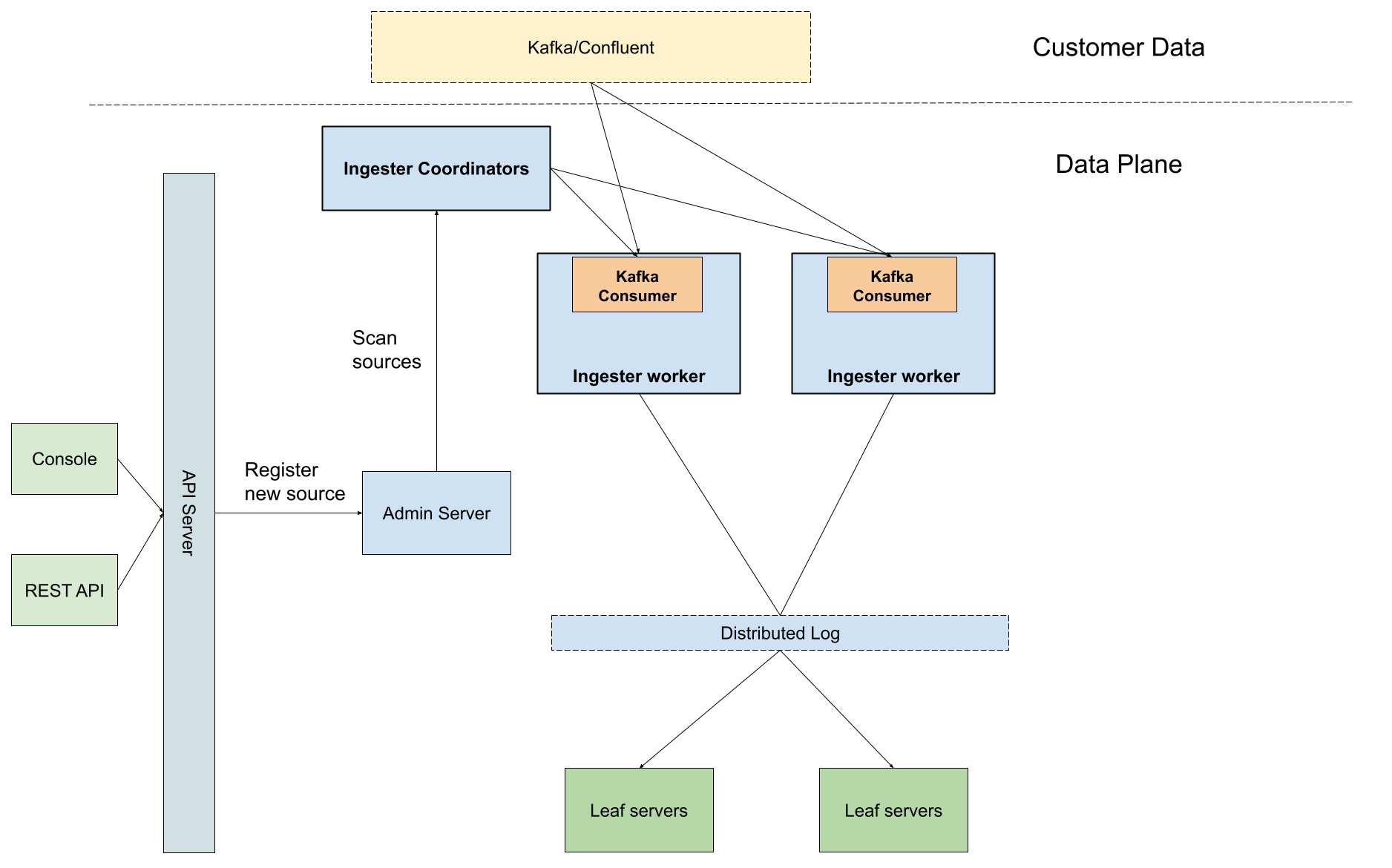

A Quick Dip Under the Hood

The new Kafka Integration adopts the Kafka Consumer API, which is a low-level, vanilla Java library that can be easily embedded into applications to tail data from a Kafka topic in real time.

There are two Kafka consumer modes:

- subscription mode, where a group of consumers collaborate in tailing a common set of Kafka topics in a dynamic way, relying on Kafka brokers to provide rebalancing, checkpointing, failure recovery, etc.

- assign mode, where each individual consumer specifies assigned topic partitions and manages the progress checkpointing manually

Rockset adopts the assign mode as we’ve already built a general-purpose tailer framework based on the Aggregator Leaf Tailer Architecture (ALT) to handle the heavy lifting, such as progress checkpointing and common failure cases. The consumption offsets are completely managed by Rockset, without saving any information inside the user’s cluster. Each ingestion worker receives its own topic partition assignment and last processed offsets from the ingestion coordinator during initialization, and then leverages the embedded consumer to fetch Kafka topic data.

The above diagram shows how the Kafka consumer is embedded into the Rockset tailer framework. A customer creates a new Kafka collection through the API server endpoint and Rockset stores the collection metadata inside the admin server. Rockset’s ingester coordinator is notified of new sources. When any new Kafka source is observed, the coordinator spawns a reasonable number of worker tasks equipped with Kafka consumers to start fetching data from the customer’s Kafka topic.
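To make the assign-mode flow more concrete, here is a minimal, self-contained Python sketch. It is not Rockset’s implementation and does not call the Kafka Consumer API: the `Coordinator` and `Worker` classes, the in-memory `topic` dictionary and the `max_records` parameter are all hypothetical stand-ins. It only illustrates the idea described above, in which each worker is handed explicit partitions plus starting offsets, and progress is checkpointed outside the Kafka cluster.

```python
from dataclasses import dataclass, field


@dataclass
class Coordinator:
    """Hypothetical stand-in for an ingestion coordinator that owns all offsets."""

    # partition id -> next offset to read; nothing is stored on the Kafka side
    checkpoints: dict = field(default_factory=dict)

    def assignment_for(self, partitions):
        # Hand a worker its partitions together with the offset to resume from.
        return {p: self.checkpoints.get(p, 0) for p in partitions}

    def commit(self, partition, next_offset):
        self.checkpoints[partition] = next_offset


class Worker:
    """Hypothetical ingestion worker that reads only its assigned partitions."""

    def __init__(self, coordinator, topic, partitions):
        self.coordinator = coordinator
        self.topic = topic  # in-memory stand-in: partition id -> list of records
        self.positions = coordinator.assignment_for(partitions)

    def poll(self, max_records=2):
        fetched = []
        for partition, offset in list(self.positions.items()):
            batch = self.topic[partition][offset:offset + max_records]
            fetched.extend(batch)
            # Checkpoint manually, since assign mode leaves this to the caller.
            self.positions[partition] = offset + len(batch)
            self.coordinator.commit(partition, offset + len(batch))
        return fetched


topic = {0: ["a0", "a1", "a2"], 1: ["b0", "b1"]}
coordinator = Coordinator()

first = Worker(coordinator, topic, partitions=[0, 1])
first_batch = first.poll()  # reads up to two records per partition

# A replacement worker resumes from the coordinator's checkpoints, not offset 0.
second = Worker(coordinator, topic, partitions=[0, 1])
second_batch = second.poll()
```

In this toy run the second worker picks up only the one record the first worker had not yet read, which is the behavior you would want after a worker restart.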

Kafka and Rockset for Real-Time Analytics

As soon as event data lands in Kafka, Rockset automatically indexes it for sub-second SQL queries. You can search, aggregate and join data across Kafka topics and other data sources including data in S3, MongoDB, DynamoDB, Postgres, and more. Next, simply turn the SQL query into an API to serve data in your application.

A sample architecture for real-time analytics on streaming data from Apache Kafka

Let’s walk through a step-by-step example of analyzing real-time order data using a mock dataset from Confluent Cloud’s datagen. In this example, we’ll assume that you already have a Kafka cluster and topic set up.

An Easy 5 Minutes to Get Set Up

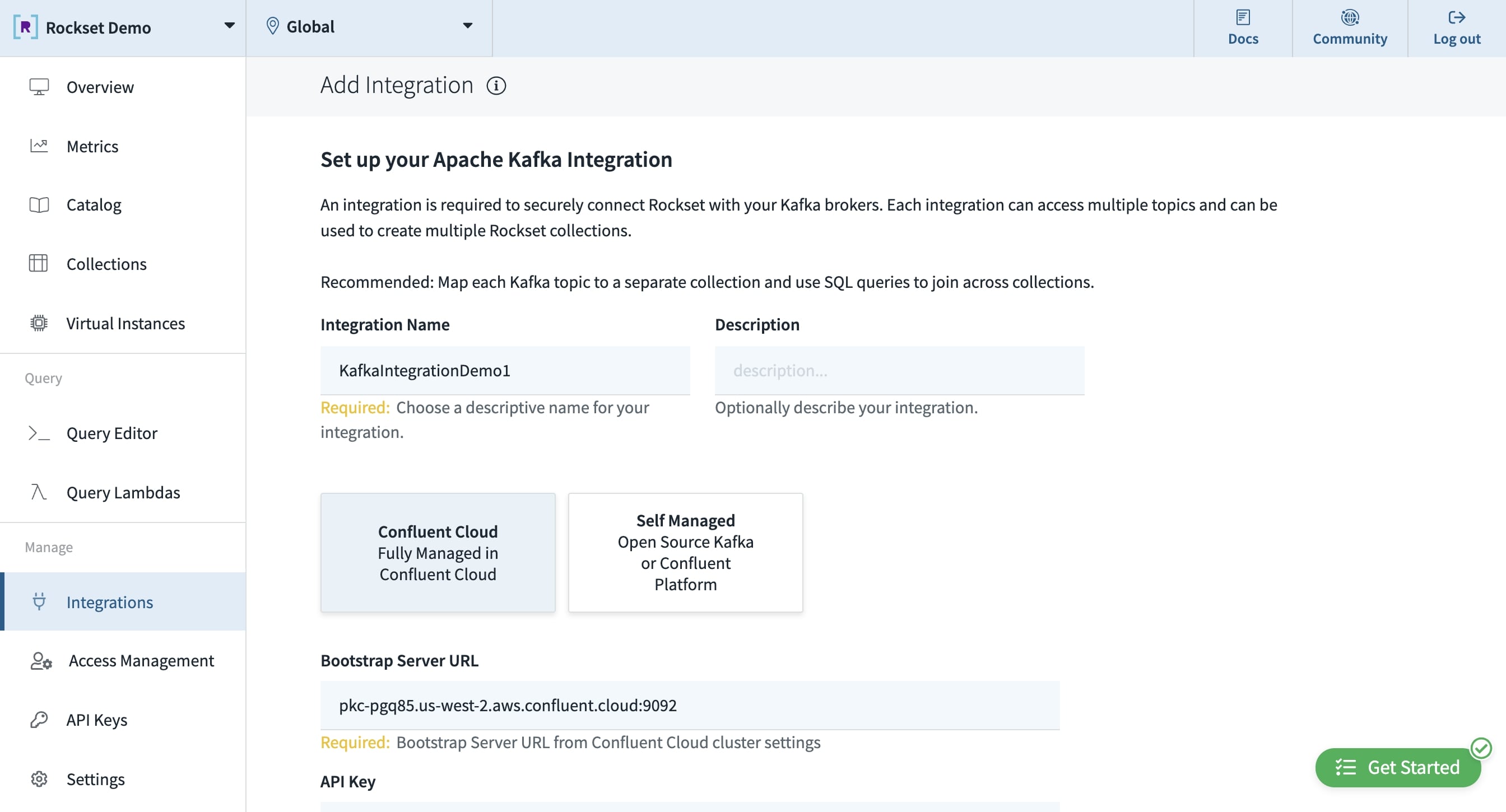

Set Up the Kafka Integration

To set up Rockset’s Kafka Integration, first select the Kafka source: either Apache Kafka or Confluent Cloud. Enter the configuration information, including the Kafka-provided endpoint to connect to and, if you’re using the Confluent platform, the API key/secret. For the first version of this release, we’re only supporting JSON data (stay tuned for Avro!).

The Rockset console where the Apache Kafka Integration is set up.

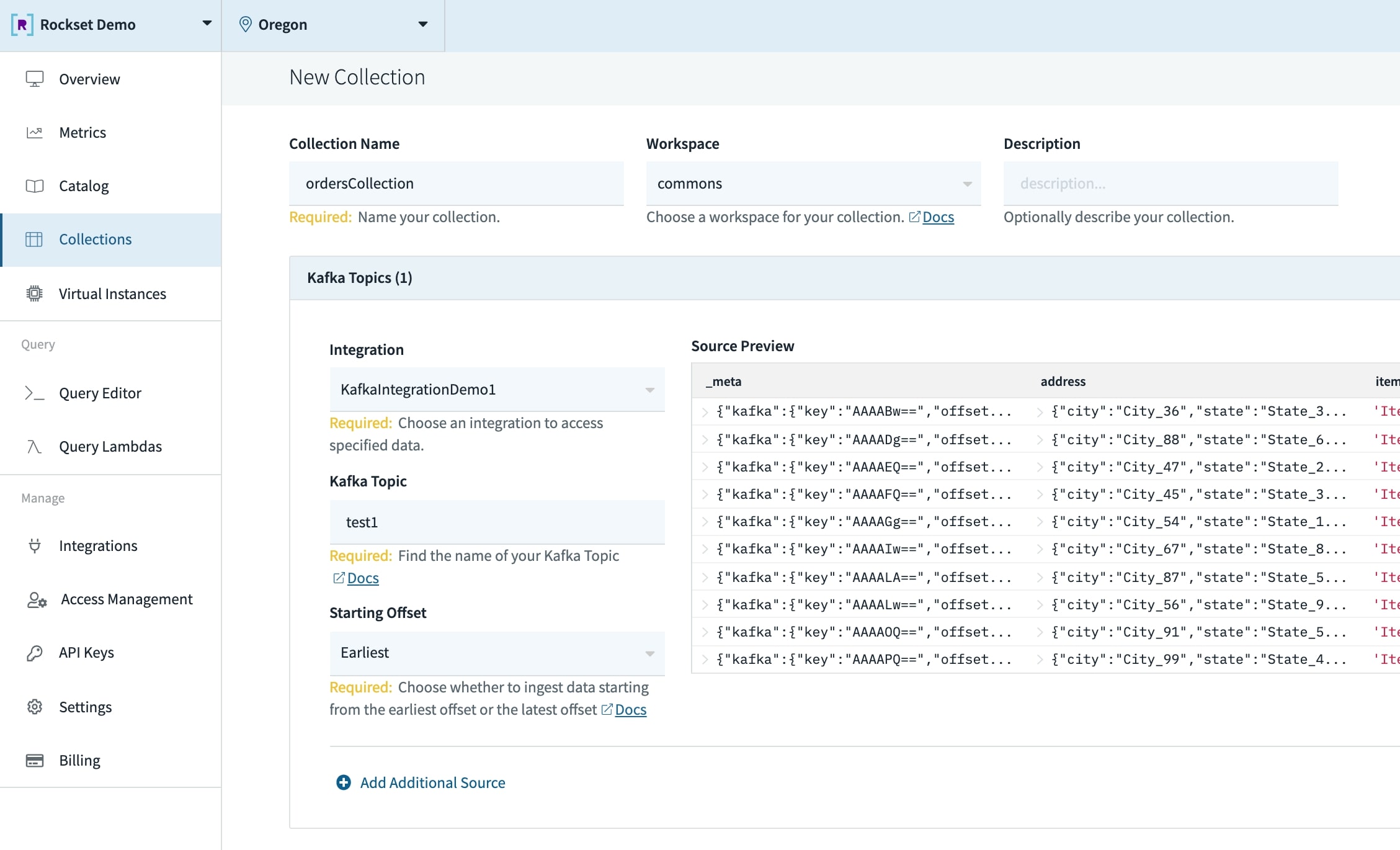

Create a Collection

A collection in Rockset is similar to a table in the SQL world. To create a collection, simply add in details including the name, description, integration and Kafka topic. The start offset enables you to backfill historical data as well as capture the latest streams.

A Rockset collection that’s pulling data from Apache Kafka.

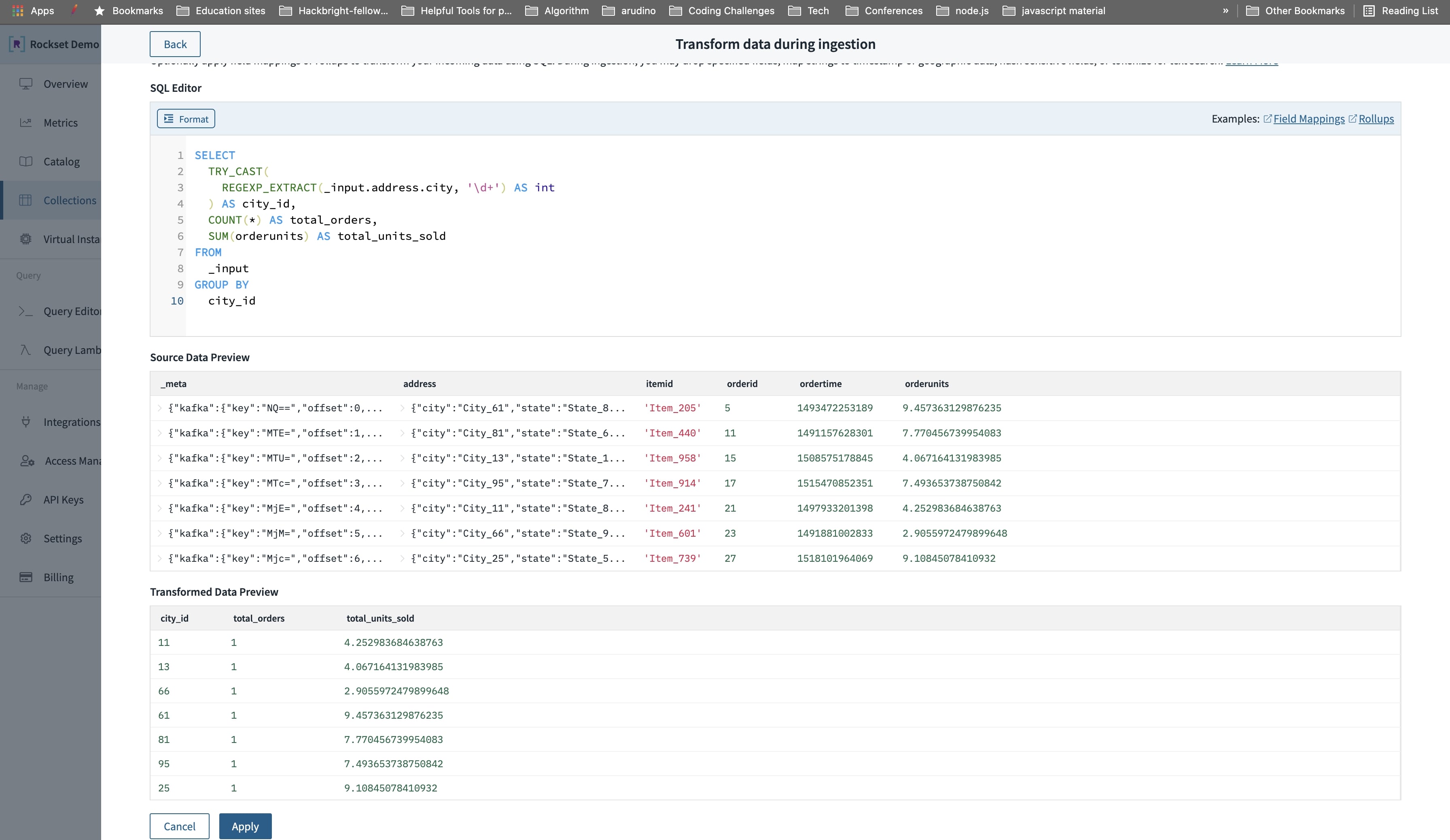

Transform and Rollup Data

You have the option at ingest time to also transform and roll up event data using SQL to reduce the storage size in Rockset. Rockset rollups are able to support complex SQL expressions and roll up data correctly and accurately even for out-of-order data.

In this example, we’ll do a rollup to aggregate the total units sold (SUM(orderunits)) and total orders made (COUNT(*)) in a particular city.
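As a rough illustration of what this aggregation computes, the sketch below runs the same SUM(orderunits) and COUNT(*) grouping locally, using Python’s built-in sqlite3 module as a stand-in for Rockset. The `orders` table, the `city` and `ordertime` columns and the sample events are assumptions made for the example; only `orderunits` appears in the text above, and the exact rollup syntax in the Rockset console may differ.

```python
import sqlite3

# Hypothetical order events; note that they arrive out of timestamp order.
events = [
    {"ordertime": 3, "city": "City_1", "orderunits": 4},
    {"ordertime": 1, "city": "City_2", "orderunits": 2},
    {"ordertime": 2, "city": "City_1", "orderunits": 6},
]

conn = sqlite3.connect(":memory:")  # SQLite used only as a local stand-in
conn.execute("CREATE TABLE orders (ordertime INTEGER, city TEXT, orderunits INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (:ordertime, :city, :orderunits)", events
)

# Collapse raw events into one row per city.
rollup = conn.execute(
    """
    SELECT city,
           SUM(orderunits) AS total_units,
           COUNT(*)        AS total_orders
    FROM orders
    GROUP BY city
    ORDER BY city
    """
).fetchall()

print(rollup)  # [('City_1', 10, 2), ('City_2', 2, 1)]
```

Because sums and counts do not depend on arrival order, the per-city totals come out the same even though the sample events are not sorted by time.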

A SQL-based rollup at ingest time in the Rockset console.

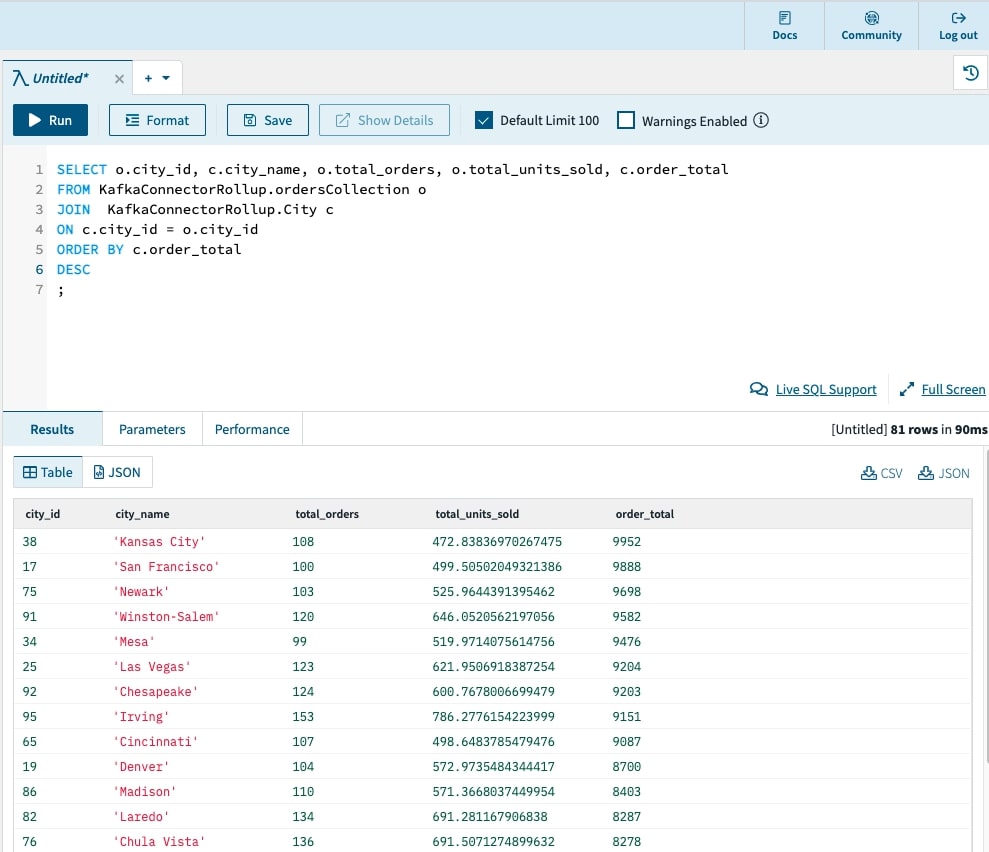

Query Event Data Using SQL

As soon as the data is ingested, Rockset will index the data in a Converged Index for fast analytics at scale. This means you can query semi-structured, deeply nested data using SQL without needing to do any data preparation or performance tuning.

In this example, we’ll write a SQL query to find the city with the highest order volume. We’ll also join the Kafka data with a CSV in S3 of the city IDs and their corresponding names.
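The shape of such a query might look like the following sketch. It again uses Python’s sqlite3 module purely as a local stand-in, so it says nothing about Rockset’s performance or exact SQL dialect. The table names `orders` and `cities`, the `city_id` and `name` columns, the sample rows, and the choice to rank by number of orders are all assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # local stand-in, not Rockset
conn.executescript(
    """
    -- Hypothetical stand-ins for the Kafka collection and the S3 CSV lookup.
    CREATE TABLE orders (city_id INTEGER, orderunits INTEGER);
    CREATE TABLE cities (city_id INTEGER, name TEXT);
    INSERT INTO orders VALUES (1, 4), (2, 9), (1, 3), (3, 5), (2, 1), (2, 2);
    INSERT INTO cities VALUES (1, 'Springfield'), (2, 'Riverton'), (3, 'Lakeside');
    """
)

# Join orders to city names, then keep the city with the most orders.
top_city = conn.execute(
    """
    SELECT c.name,
           COUNT(*)          AS total_orders,
           SUM(o.orderunits) AS total_units
    FROM orders AS o
    JOIN cities AS c ON c.city_id = o.city_id
    GROUP BY c.name
    ORDER BY total_orders DESC
    LIMIT 1
    """
).fetchone()

print(top_city)  # ('Riverton', 3, 12)
```

If "order volume" is meant as units rather than order count, ordering by `total_units` instead would be the only change needed.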

🙌 The SQL query on streaming data returned in 91 milliseconds!

We’ve been able to go from raw event streams to a fast SQL query in 5 minutes 💥. We also recorded an end-to-end demonstration video so you can better visualize this process.

Embedded content: https://youtu.be/jBGyyVs8UkY

Unlock Streaming Data for Real-Time Analytics

We’re excited to continue to make it easy for developers and data teams to analyze streaming data in real time. If you’ve wanted to make the move from batch to real-time analytics, it’s easier now than ever before. And you can make that move today. Contact us to join the beta for the new Kafka Integration.

About Boyang Chen – Boyang is a staff software engineer at Rockset and an Apache Kafka Committer. Prior to Rockset, Boyang spent two years at Confluent on various technical initiatives, including Kafka Streams, exactly-once semantics, Apache ZooKeeper removal, and more. He also co-authored the paper Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka. Boyang has also worked on the ads infrastructure team at Pinterest to rebuild the whole budgeting and pacing pipeline. Boyang has his bachelor’s and master’s degrees in computer science from the University of Illinois at Urbana-Champaign.