Apache Druid is a distributed real-time analytics database generally used with person exercise streams, clickstream analytics, and Web of issues (IoT) gadget analytics. Druid is usually useful in use instances that prioritize real-time ingestion and quick queries.

Druid’s record of options consists of individually compressed and listed columns, numerous stream ingestion connectors and time-based partitioning. It’s recognized to carry out effectively when used as designed: to carry out quick queries on massive quantities of information. Nevertheless, utilizing Druid will be problematic when used outdoors its regular parameters — for instance, to work with nested knowledge.

On this article, we’ll talk about ingesting and utilizing nested knowledge in Apache Druid. Druid doesn’t retailer nested knowledge within the type usually present in, say, a JSON dataset. So, ingesting nested knowledge requires us to flatten our knowledge earlier than or throughout ingestion.

Flattening Your Knowledge

We will flatten knowledge earlier than or throughout ingestion utilizing Druid’s area flattening specification. We will additionally use different instruments and scripts to assist flatten nested knowledge. Our last necessities and import knowledge construction decide the flattening selection.

A number of textual content processors assist flatten knowledge, and probably the most widespread is jq. jq is like JSON’s grep, and a jq command is sort of a filter that outputs to the usual output. Chaining filters via piping permits for highly effective processing operations on JSON knowledge.

For the next two examples, we’ll create the governors.json file. Utilizing your favourite textual content editor, create the file and duplicate the next traces into it:

[

{

"state": "Mississippi",

"shortname": "MS",

"info": {"governor": "Tate Reeves"},

"county": [

{"name": "Neshoba", "population": 30000},

{"name": "Hinds", "population": 250000},

{"name": "Atlanta", "population": 19000}

]

},

{

"state": "Michigan",

"shortname": "MI",

"information": {"governor": "Gretchen Whitmer"},

"county": [

{"name": "Missauki", "population": 15000},

{"name": "Benzie", "population": 17000}

]

}

]

With jq put in, run the next from the command line:

$ jq --arg delim '_' 'scale back (tostream|choose(size==2)) as $i ({};

.[[$i[0][]|tostring]|be part of($delim)] = $i[1]

)' governors.json

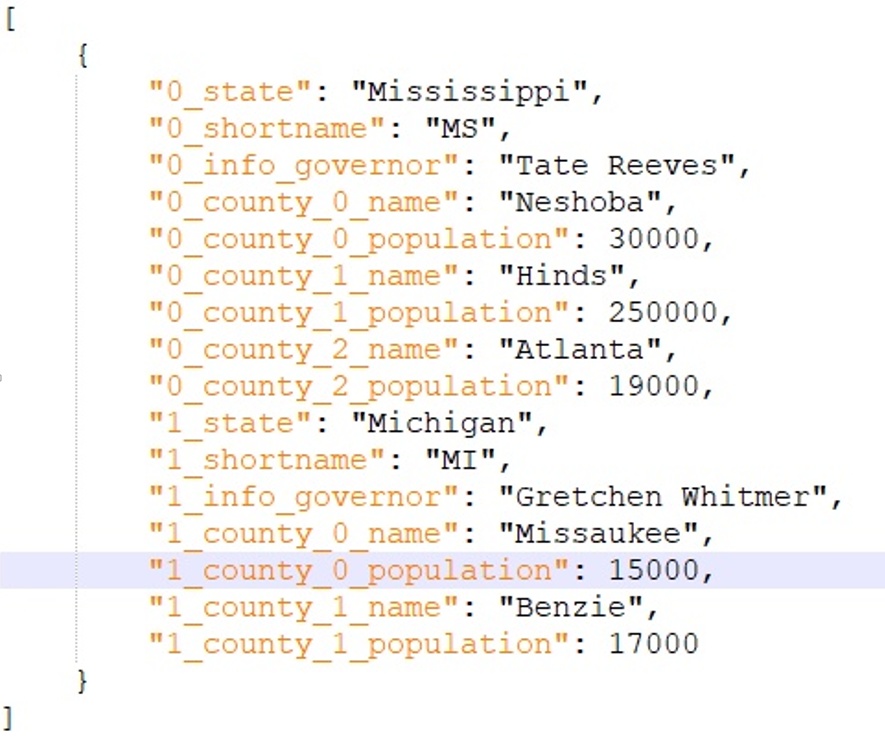

The outcomes are:

Probably the most versatile data-flattening technique is to jot down a script or program. Any programming language will do for this. For demonstration functions, let’s use a recursive technique in Python.

def flatten_nested_json(nested_json):

out = {}

def flatten(njson, title=""):

if kind(njson) is dict:

for path in njson:

flatten(njson[path], title + path + ".")

elif kind(njson) is record:

i = 0

for path in njson:

flatten(path, title + str(i) + ".")

i += 1

else:

out[name[:-1]] = njson

flatten(nested_json)

return out

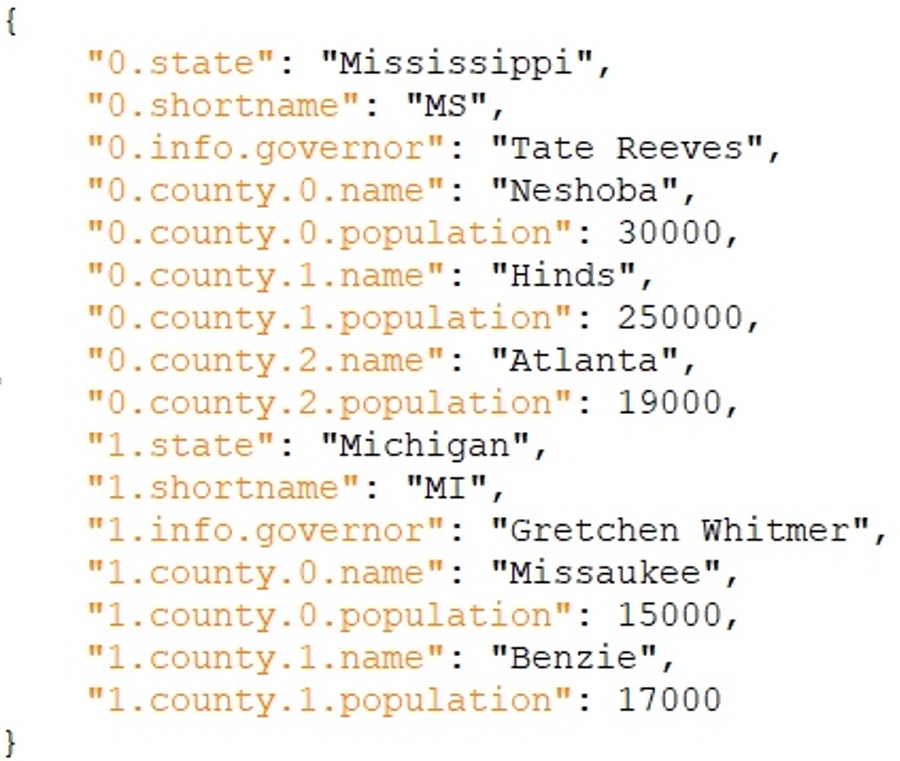

The outcomes appear like this:

Flattening can be achieved through the ingestion course of. The FlattenSpec is a part of Druid’s ingestion specification. Druid applies it first through the ingestion course of.

The column names outlined right here can be found to different components of the ingestion specification. The FlattenSpec solely applies when the information format is JSON, Avro, ORC, or Parquet. Of those, JSON is the one one which requires no additional extensions in Druid. On this article, we’re discussing ingestion from JSON knowledge sources.

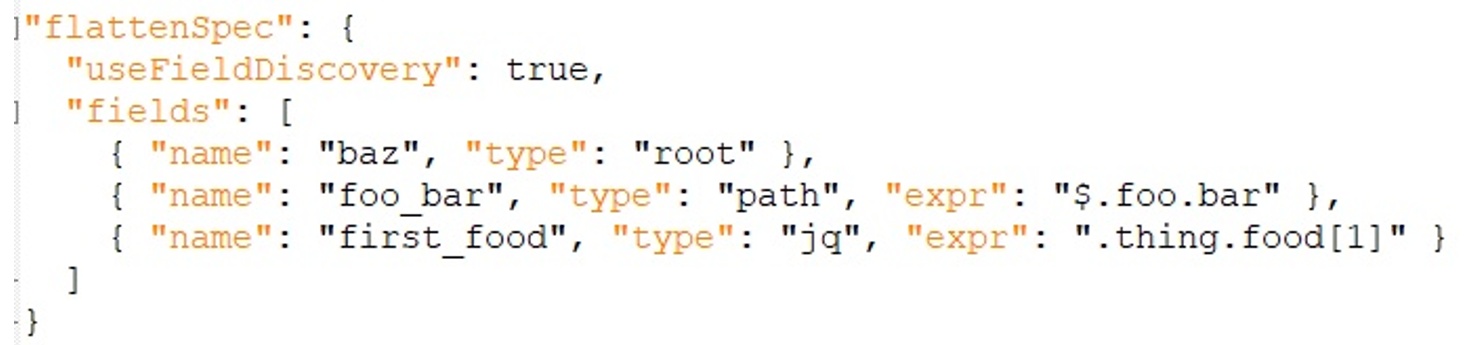

The FlattenSpec takes the type of a JSON construction. The next instance is from the Druid documentation and covers all of our dialogue factors within the specification:

The useFieldDiscovery flag is ready to true above. This enables the ingestion specification to entry all fields on the basis node. If this flag have been to be false, we’d add an entry for every column we wished to import.

Along with root, there are two different area definition varieties. The path area definition accommodates an expression of kind JsonPath. The “jq” kind accommodates an expression with a subset of jq instructions referred to as jackson-jq. The ingestion course of makes use of these instructions to flatten our knowledge.

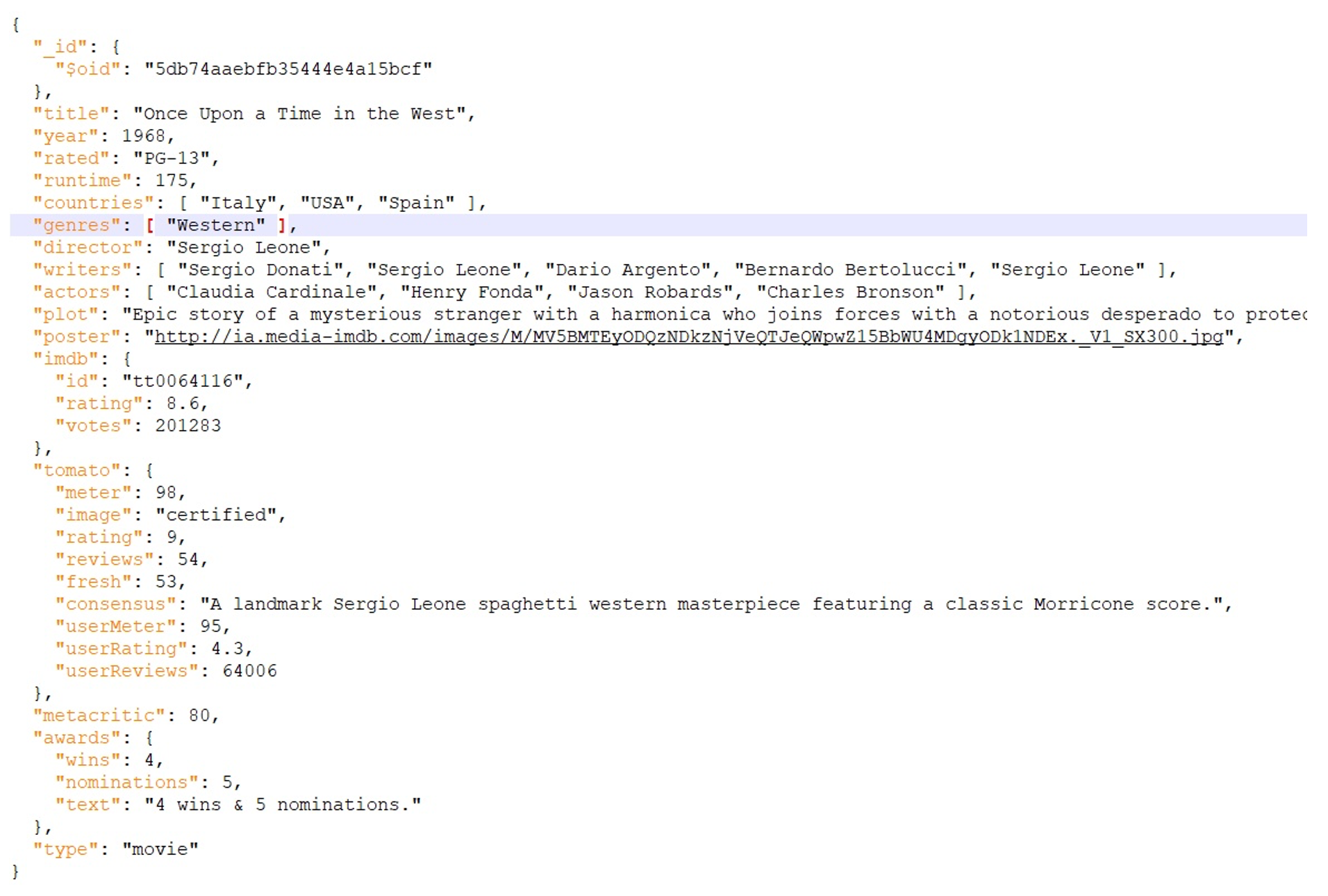

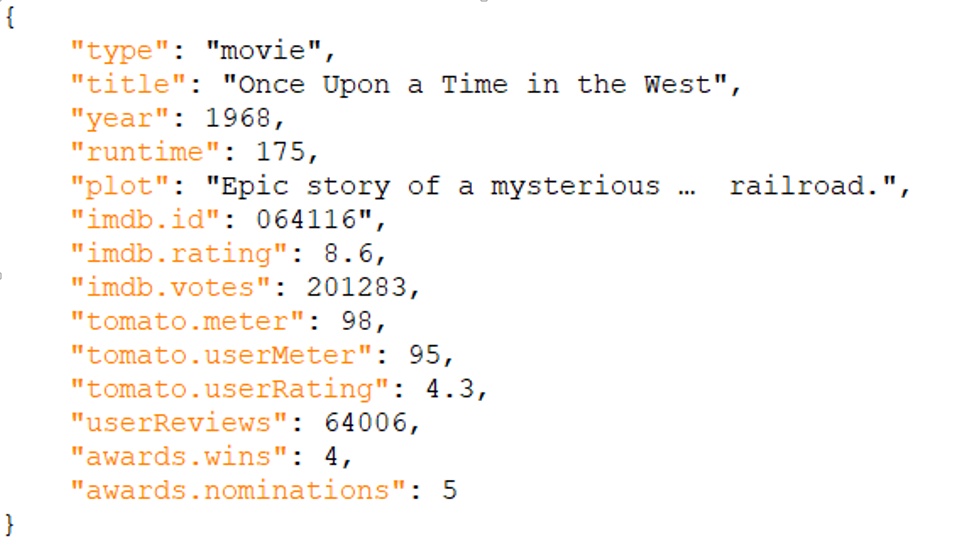

To discover this in additional depth, we’ll use a subset of IMDB, transformed to JSON format. The info has the next construction:

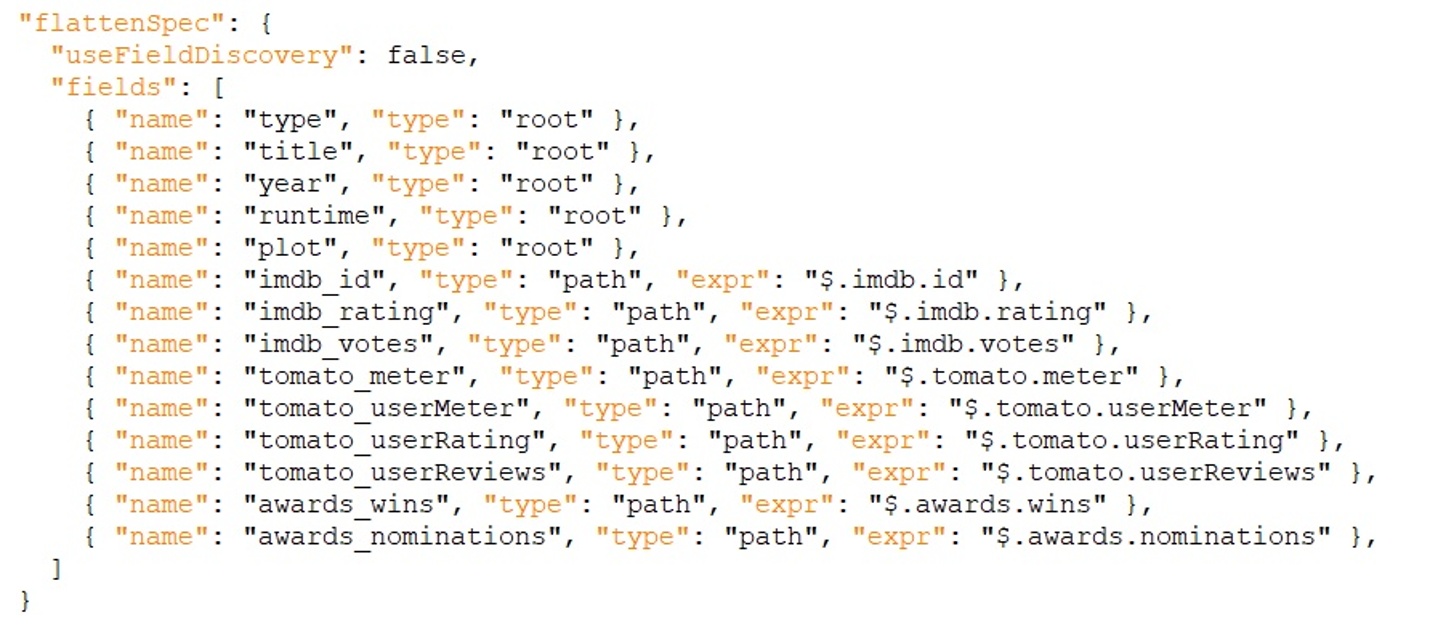

Since we aren’t importing all of the fields, we don’t use the automated area discovery choice.

Our FlattenSpec seems to be like this:

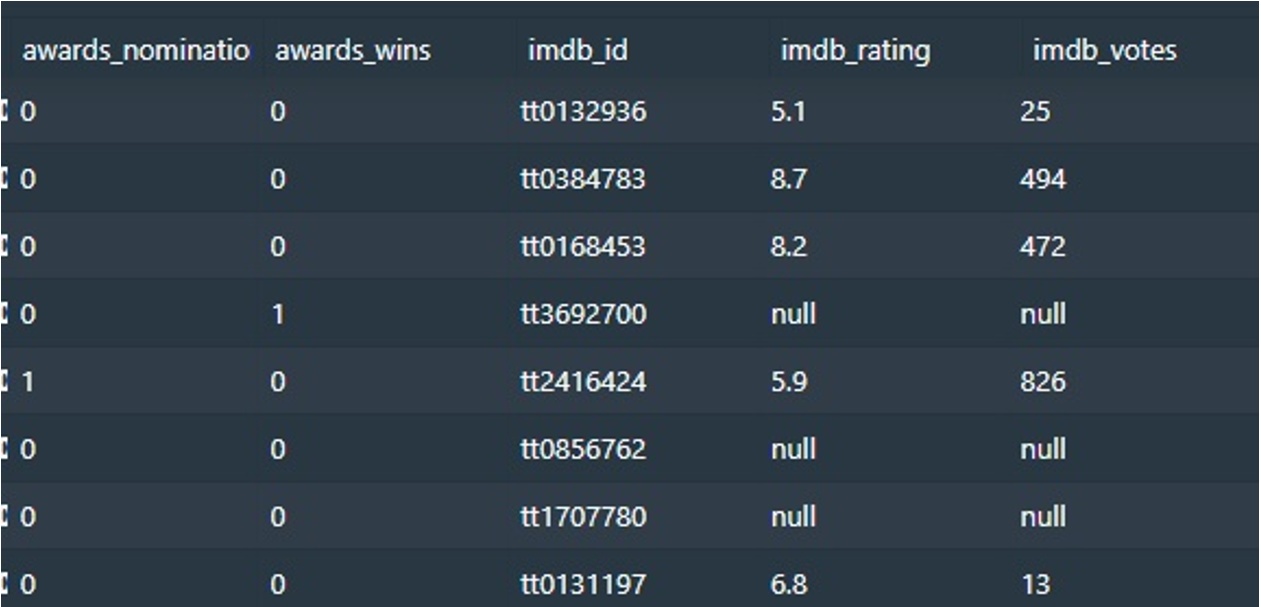

The newly created columns within the ingested knowledge are displayed under:

Querying Flattened Knowledge

On the floor, it appears that evidently querying denormalized knowledge shouldn’t current an issue. But it surely might not be as simple because it appears. The one non-simple knowledge kind Druid helps is multi-value string dimensions.

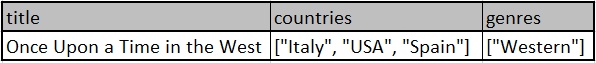

The relationships between our columns dictate how we flatten your knowledge. For instance, take into account a knowledge construction to find out these three knowledge factors:

- The distinct depend of flicks launched in Italy OR launched within the USA

- The distinct depend of flicks launched in Italy AND launched within the USA

- The distinct depend of flicks which are westerns AND launched within the USA

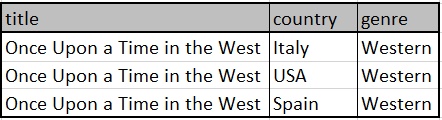

Easy flattening of the nation and style columns produces the next:

With the above construction, it’s not potential to get the distinct depend of flicks which are launched in Italy AND launched within the USA as a result of there aren’t any rows the place nation = “Italy” AND nation = “USA”.

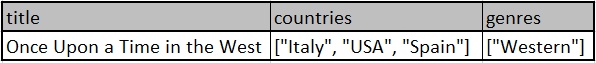

An alternative choice is to import knowledge as multi-value dimensions:

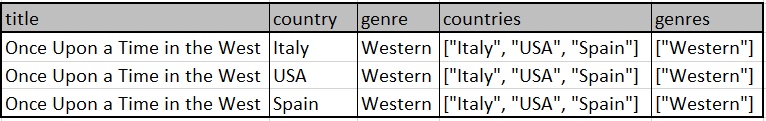

On this case, we will decide the “Italy” AND/OR “USA” quantity utilizing the LIKE operator, however not the connection between nations and genres. One group proposed an alternate flattening, the place Druid imports each the information and record:

On this case, all three distinct counts are potential utilizing:

- Nation = ‘Italy’ OR County = ‘USA’

- International locations LIKE ‘Italy’ AND International locations LIKE ‘USA’

- Style = ‘Western’ AND International locations LIKE ‘USA’

Options to Flattening Knowledge

In Druid, it’s preferable to make use of flat knowledge sources. But, flattening could not all the time be an choice. For instance, we could need to change dimension values post-ingestion with out re-ingesting. Underneath these circumstances, we need to use a lookup for the dimension.

Additionally, in some circumstances, joins are unavoidable as a result of nature and use of the information. Underneath these circumstances, we need to break up the information into a number of separate recordsdata throughout ingestion. Then, we will adapt the affected dimension to hyperlink to the “exterior” knowledge whether or not by lookup or be part of.

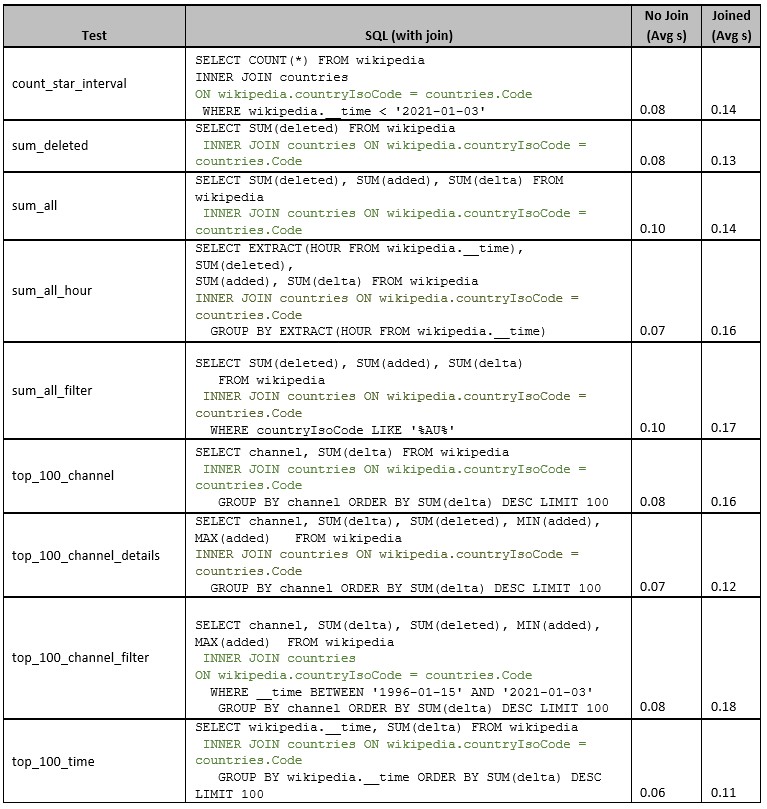

The memory-resident lookup is quick by design. All lookup tables should slot in reminiscence, and when this isn’t potential, a be part of is unavoidable. Sadly, joins come at a efficiency value in Druid. To point out this value, we’ll carry out a easy be part of on a knowledge supply. Then we’ll measure the time to run the question with and with out the be part of.

To make sure this check was measurable, we put in Druid on an outdated 4GB PC working Ubuntu Server. We then ran a sequence of queries tailored from these Xavier Léauté used when benchmarking Druid in 2014. Though this isn’t the most effective method to becoming a member of knowledge, it does present how a easy be part of impacts efficiency.

Because the chart demonstrates, every be part of makes the question run a number of seconds slower — as much as twice as sluggish as queries with out joins. This delay provides up as your variety of joins will increase.

Nested Knowledge in Druid vs Rockset

Apache Druid is nice at doing what it was designed to do. Points happen when Druid works outdoors these parameters, akin to when utilizing nested knowledge.

Out there options to deal with nested knowledge in Druid are, at finest, clunky. A change within the enter knowledge requires adapting your ingestion technique. That is true whether or not utilizing Druid’s native flattening or some type of pre-processing.

Distinction this with Rockset, a real-time analytics database that totally helps the ingestion and querying of nested knowledge, making it out there for quick queries. The flexibility to deal with nested knowledge as is saves loads of knowledge engineering effort in flattening knowledge, or in any other case working round this limitation, as we explored earlier within the weblog.

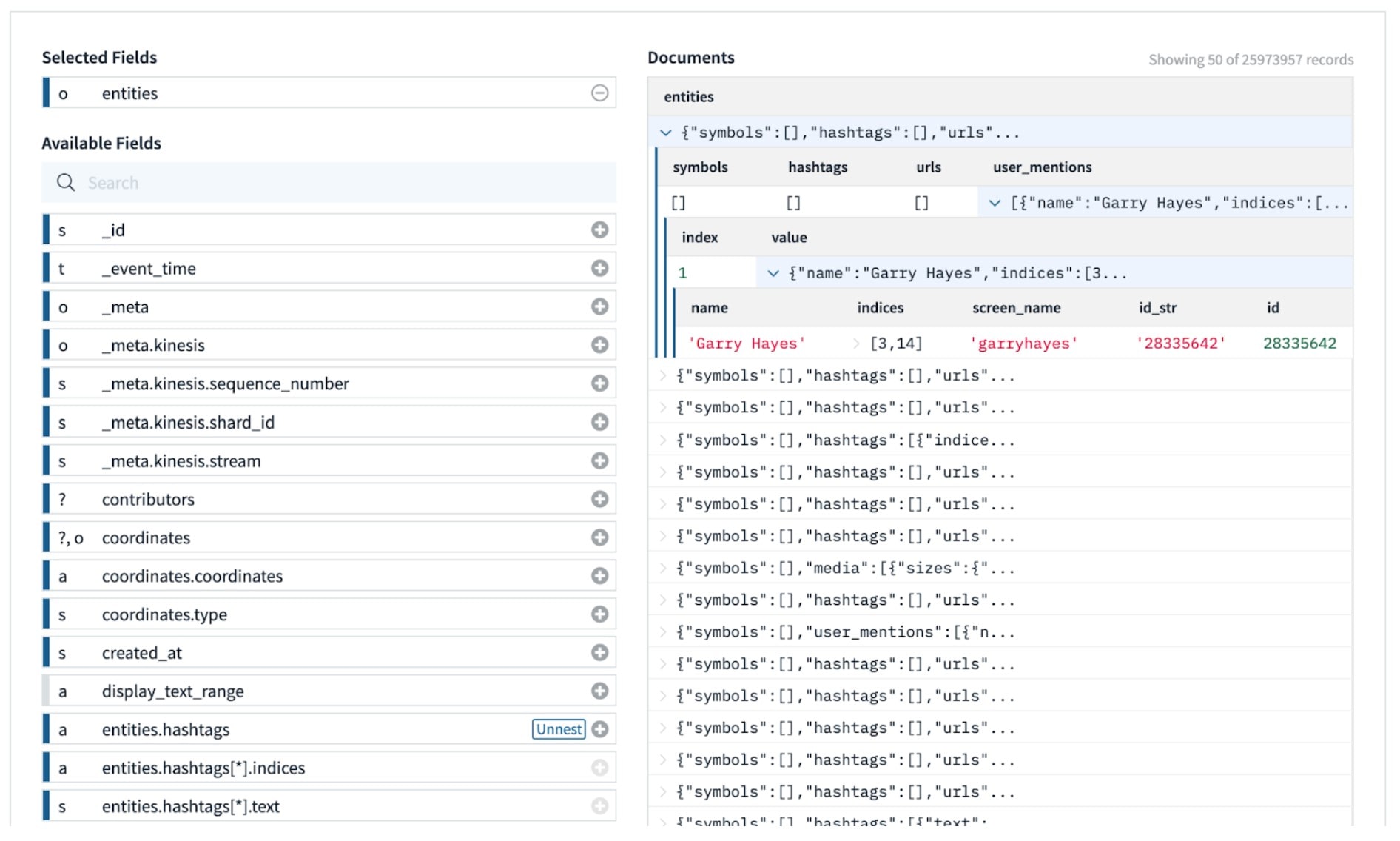

Rockset indexes each particular person area with out the person having to carry out any guide specification. There is no such thing as a requirement to flatten nested objects or arrays at ingestion time. An instance of how nested objects and arrays are introduced in Rockset is proven under:

In case your want is for flat knowledge ingestion, then Druid could also be an acceptable selection. When you want deeply nested knowledge, nested arrays, or real-time outcomes from normalized knowledge, take into account a database like Rockset as an alternative. Be taught extra about how Rockset and Druid evaluate.