Last week, we walked you through how to scale your Amazon RDS MySQL analytical workload with Rockset. This week, we'll continue with the same Amazon RDS MySQL instance that we created last week and add Airbnb data to a new table.

Uploading data to Amazon RDS MySQL

To get started:

First, download the Airbnb CSV file.

Note: make sure you rename the CSV file to sfairbnb.csv.

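Access the MySQL server via your terminal:

```shell
# --local-infile=1 lets the client send a local file for LOAD DATA LOCAL INFILE
mysql --local-infile=1 -u admin -p -h <your-rds-endpoint>
```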

We'll need to switch to the right database:

```sql
USE rocksetdemo1;
```

Next, we'll need to create a table:

Embedded content: https://gist.github.com/nfarah86/df2926f5c193cfdcb4d09ce86d63bde7

Upload the data to the table:

```sql
LOAD DATA LOCAL INFILE '/yourpath/sfairbnb.csv'
INTO TABLE sfairbnb
FIELDS TERMINATED BY ','
ENCLOSED BY '"'
LINES TERMINATED BY '\n'
IGNORE 1 ROWS; -- skip the CSV header row
```

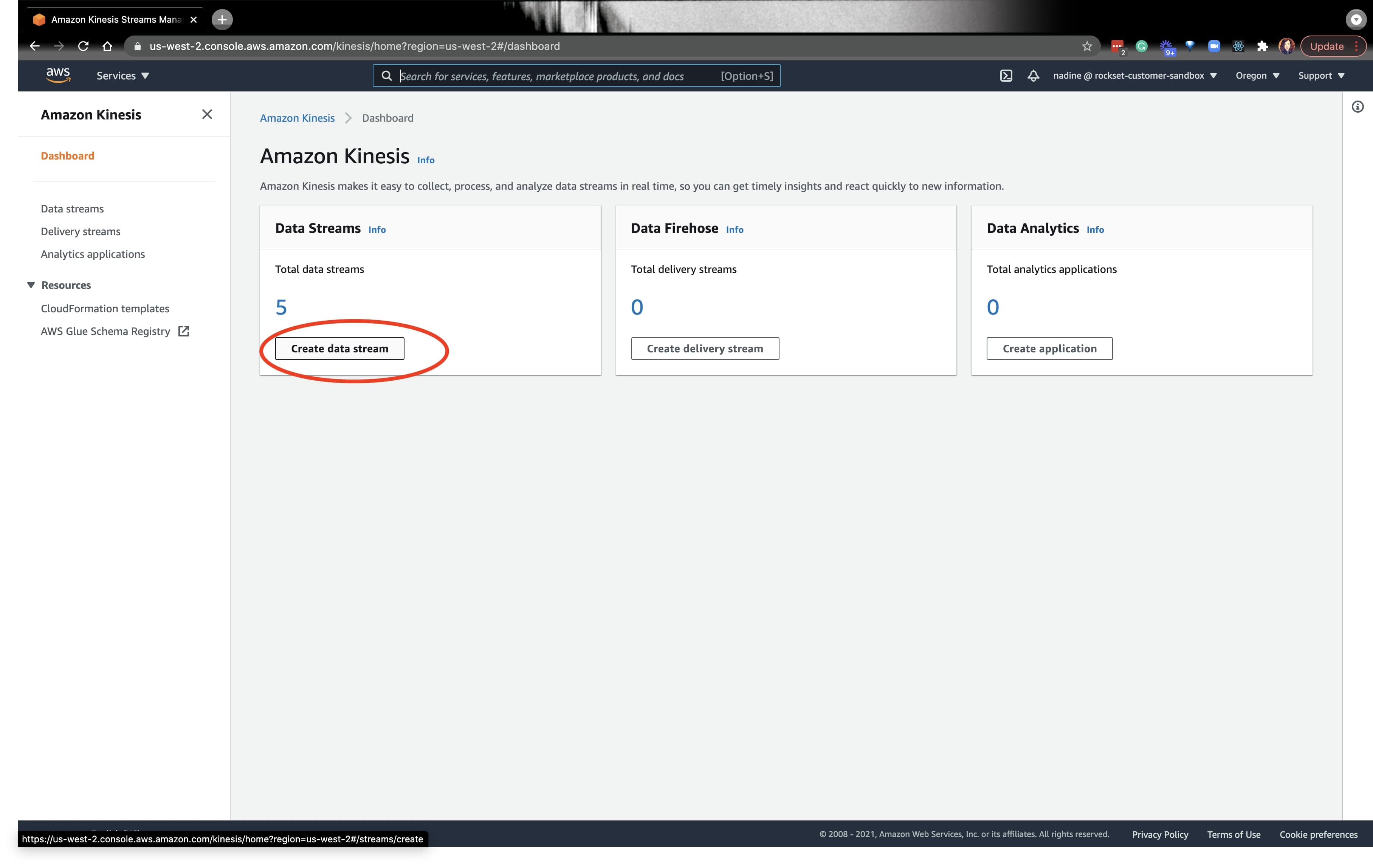

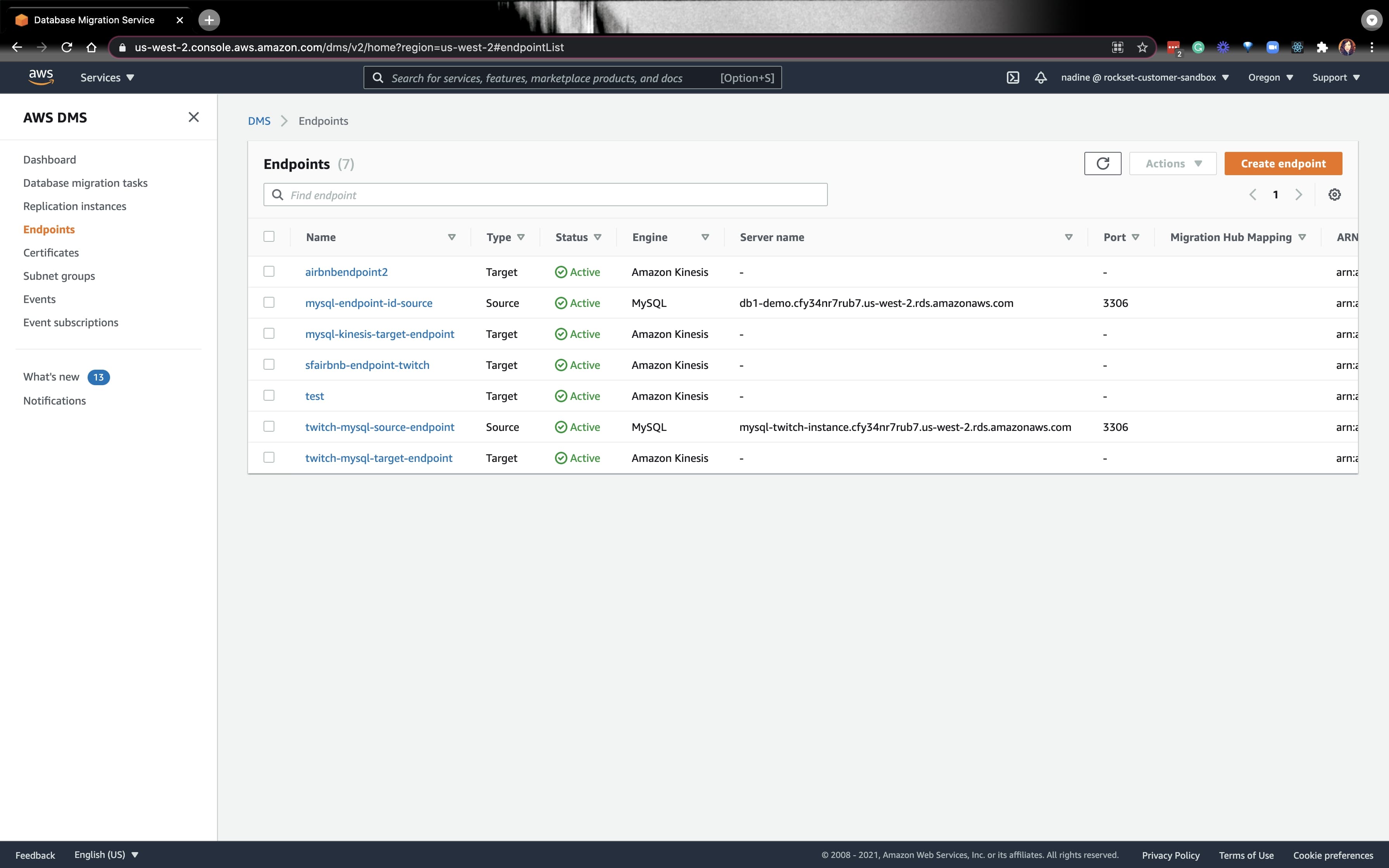
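If you want to preview how the file will be split before running LOAD DATA, the sketch below parses CSV text with Python's standard `csv` module using the same options as the statement: comma-separated fields, double-quote-enclosed values, one record per line, and the first row skipped as a header. The `preview_rows` helper and the inline sample columns are hypothetical. MySQL's own parser differs in edge cases such as backslash escapes, so treat this as a rough preview, not an exact emulation.

```python
import csv
import io
from typing import Iterable, List


def preview_rows(lines: Iterable[str], limit: int = 5) -> List[List[str]]:
    """Parse CSV roughly like FIELDS TERMINATED BY ',' ENCLOSED BY '"' IGNORE 1 ROWS."""
    reader = csv.reader(lines, delimiter=",", quotechar='"')
    next(reader, None)  # IGNORE 1 ROWS: skip the header line
    rows: List[List[str]] = []
    for row in reader:
        rows.append(row)
        if len(rows) >= limit:
            break
    return rows


if __name__ == "__main__":
    # Hypothetical sample; the real columns come from sfairbnb.csv.
    sample = 'id,name,price\n1,"Cozy room, SoMa","$150.00"\n2,Loft,"$1,200.00"\n'
    for row in preview_rows(io.StringIO(sample)):
        print(row)
```

To preview the real file, open it with `open("sfairbnb.csv", newline="", encoding="utf-8")` and pass the file object to `preview_rows`.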

Setting Up a New Kinesis Stream and DMS Target Endpoint

Once the data is loaded into MySQL, we can navigate to the AWS console and create another Kinesis data stream. We'll need to create a Kinesis stream and a DMS target endpoint for every MySQL database table on a MySQL server. Since we won't be creating a new MySQL server, we don't need to create a DMS source endpoint, so we can reuse the same DMS source endpoint from last week.

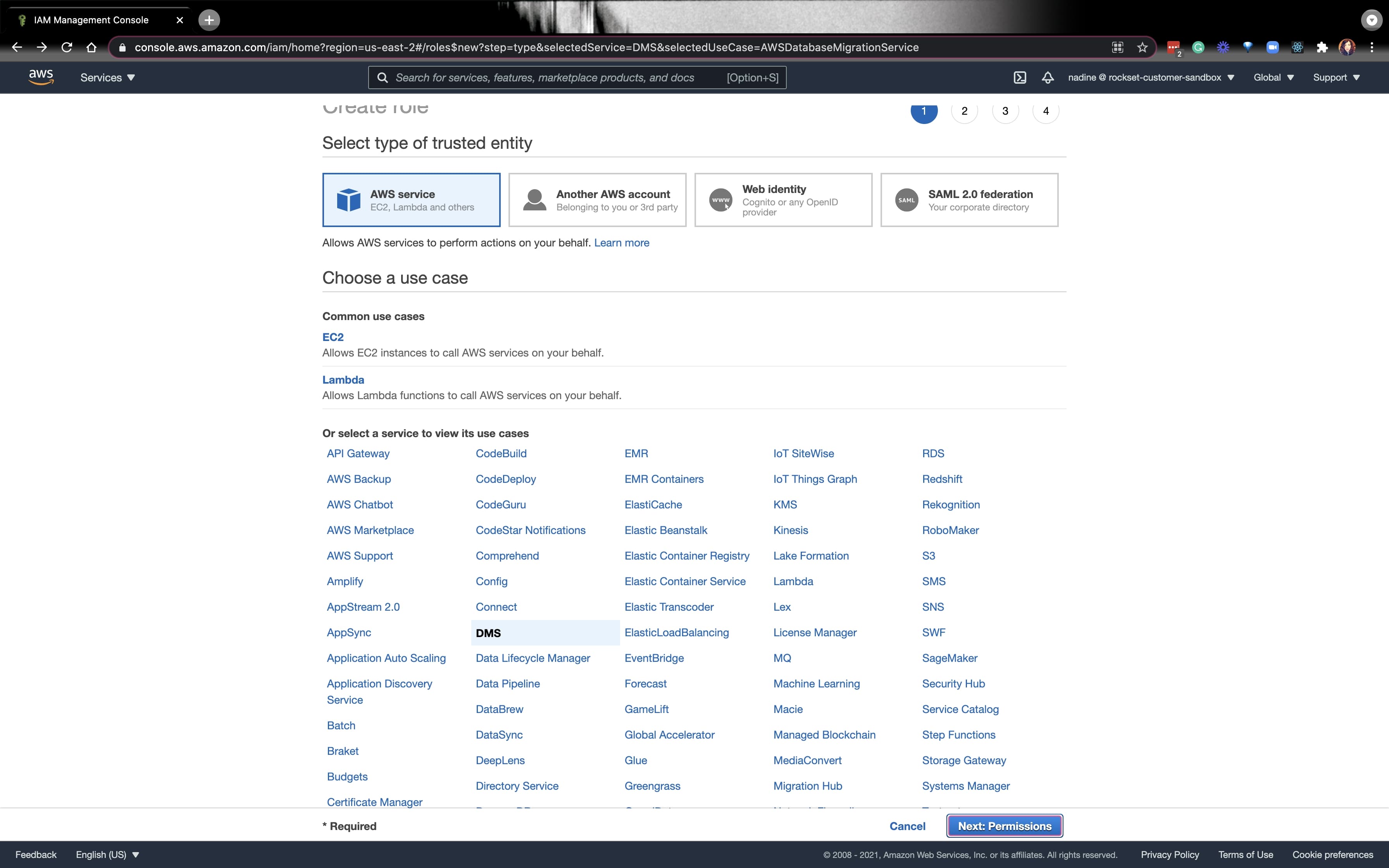
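If you'd rather script this step than click through the console, here is a minimal sketch that creates one Kinesis data stream per MySQL table with boto3 and waits for it to become active. It assumes boto3 is installed and AWS credentials and a region are configured; the `create_table_stream` helper, the `-stream` naming scheme, and the single-shard default are hypothetical choices, not settings from this walkthrough.

```python
from typing import Any


def create_table_stream(kinesis: Any, table: str, shard_count: int = 1) -> str:
    """Create a Kinesis stream for one MySQL table and return its ARN.

    `kinesis` is expected to be a boto3 Kinesis client. The f"{table}-stream"
    name is a hypothetical convention.
    """
    name = f"{table}-stream"
    kinesis.create_stream(StreamName=name, ShardCount=shard_count)
    # Block until the stream is ACTIVE so DMS can write to it.
    kinesis.get_waiter("stream_exists").wait(StreamName=name)
    summary = kinesis.describe_stream_summary(StreamName=name)
    return summary["StreamDescriptionSummary"]["StreamARN"]


# Example (requires boto3 and configured AWS credentials):
#   import boto3
#   stream_arn = create_table_stream(boto3.client("kinesis"), "sfairbnb")
```

Passing the client in as a parameter keeps the helper easy to exercise with a stub before pointing it at a real account.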

From here, we'll need to create a role that gives DMS full access to Kinesis. Navigate to the AWS IAM console, create a new role for an AWS service, and click on DMS. Click Next: Permissions at the bottom right.

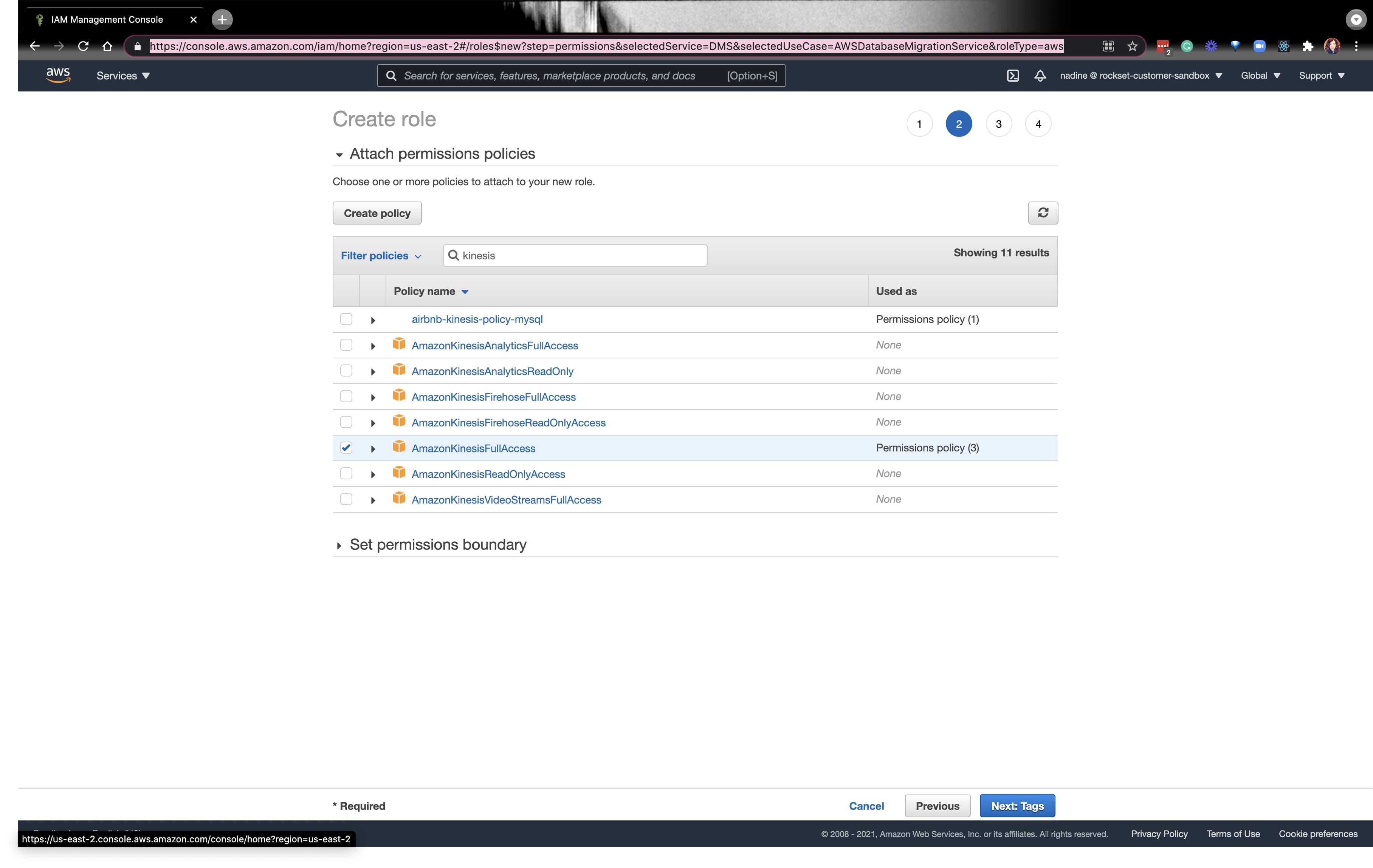

Check the box for AmazonKinesisFullAccess and click Next: Tags:

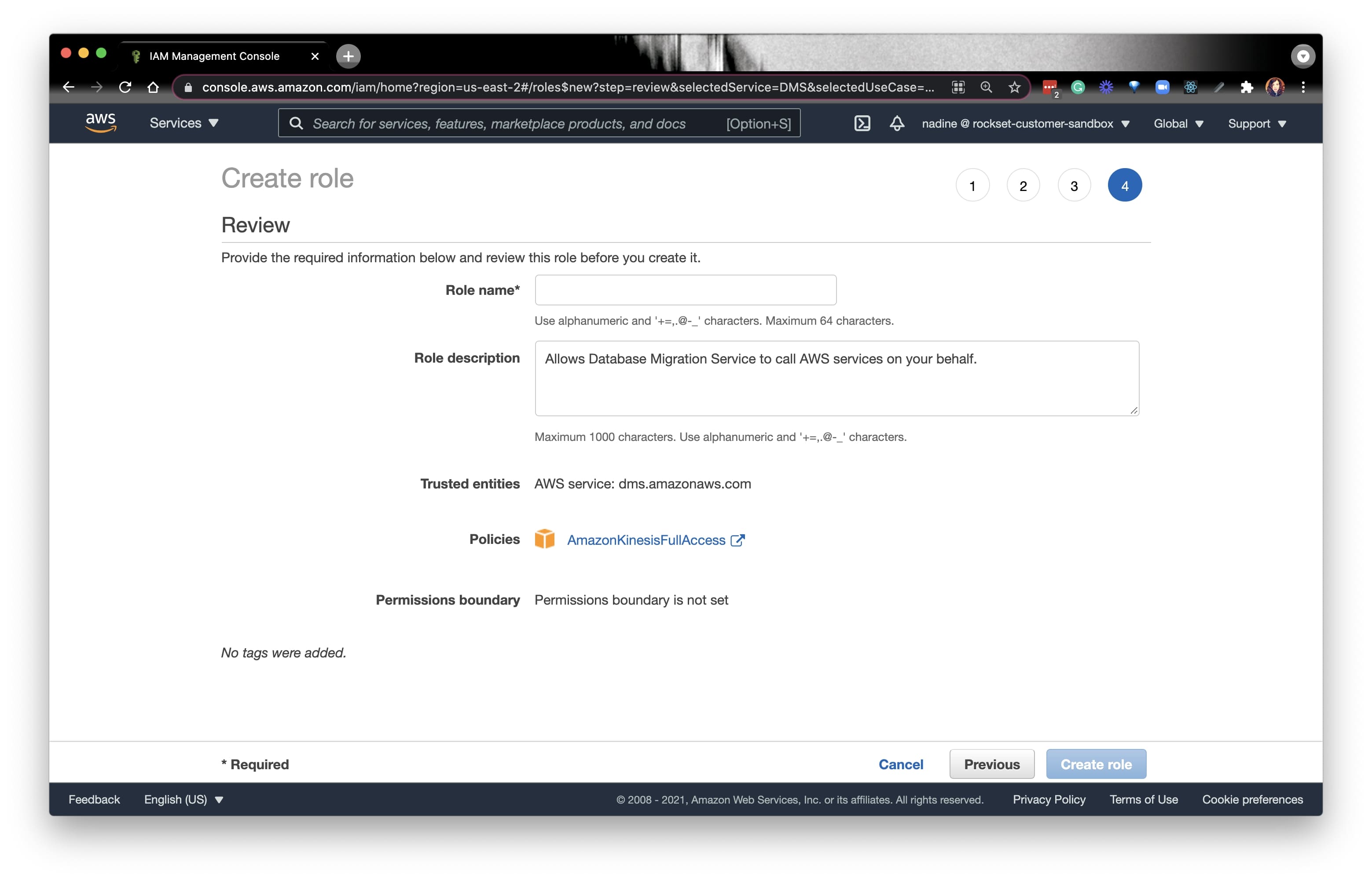

Fill out the details as you see fit and click Create role at the bottom right. Be sure to save the role ARN for the next step.
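The same role can be sketched with boto3. This assumes the standard DMS service principal (`dms.amazonaws.com`) in the trust policy and the AWS-managed `AmazonKinesisFullAccess` policy selected above; the role name `dms-kinesis-role` and the helper names are hypothetical. The returned ARN is the value to save for the next step.

```python
import json
from typing import Any, Dict

KINESIS_FULL_ACCESS = "arn:aws:iam::aws:policy/AmazonKinesisFullAccess"


def dms_trust_policy() -> Dict[str, Any]:
    """Trust policy that allows the DMS service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "dms.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_dms_kinesis_role(iam: Any, role_name: str) -> str:
    """Create the role with a boto3 IAM client, attach Kinesis access, return the ARN."""
    resp = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(dms_trust_policy()),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=KINESIS_FULL_ACCESS)
    return resp["Role"]["Arn"]


# Example (requires boto3 and configured AWS credentials):
#   import boto3
#   role_arn = create_dms_kinesis_role(boto3.client("iam"), "dms-kinesis-role")
```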

Now, let's go to the DMS console:

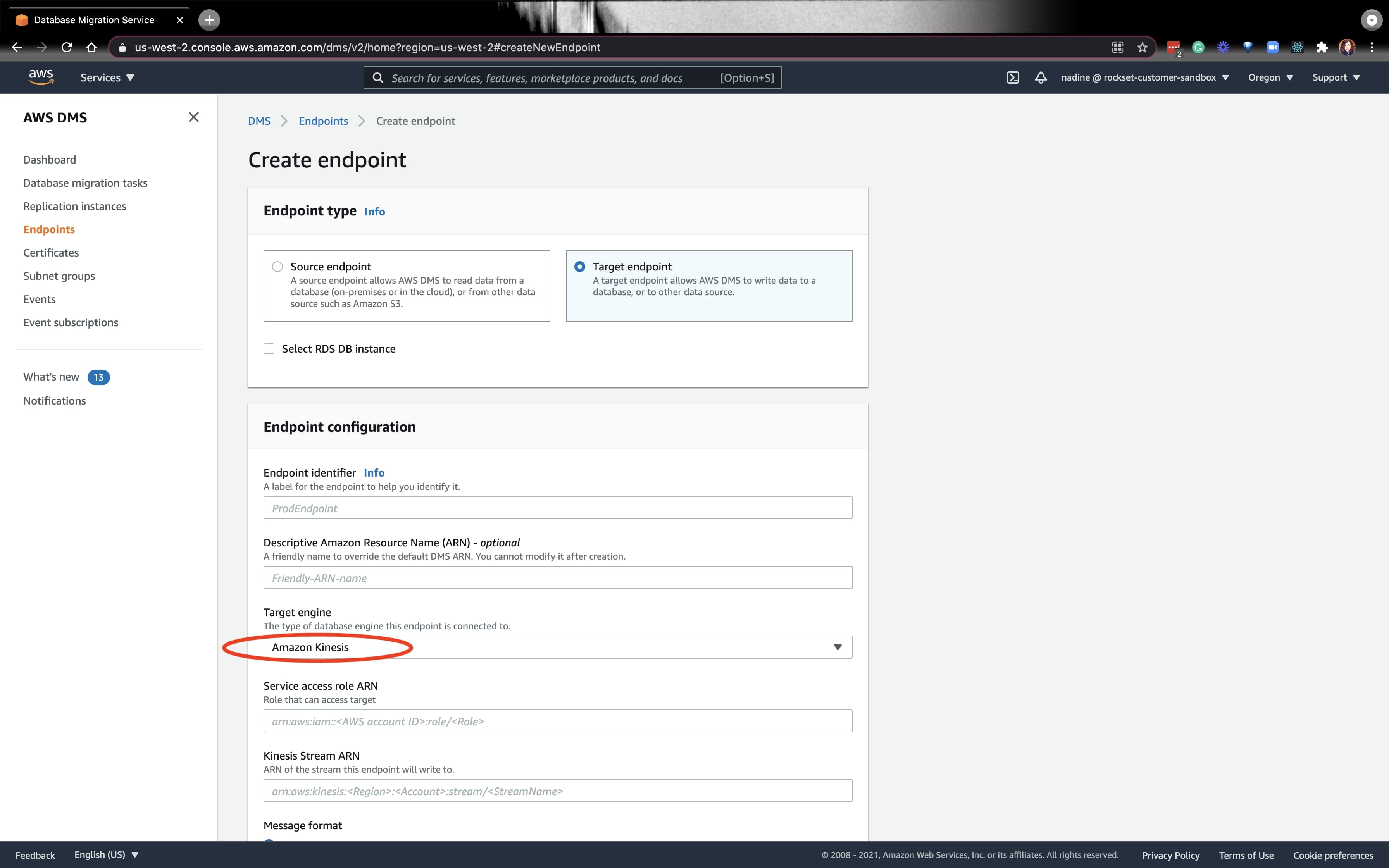

Let's create a new target endpoint. In the drop-down, select Kinesis:

For the Service access role ARN, enter the ARN of the role we just created. Similarly, for the Kinesis stream ARN, enter the ARN of the Kinesis stream we created. For the rest of the fields below, you can follow the instructions in our docs.

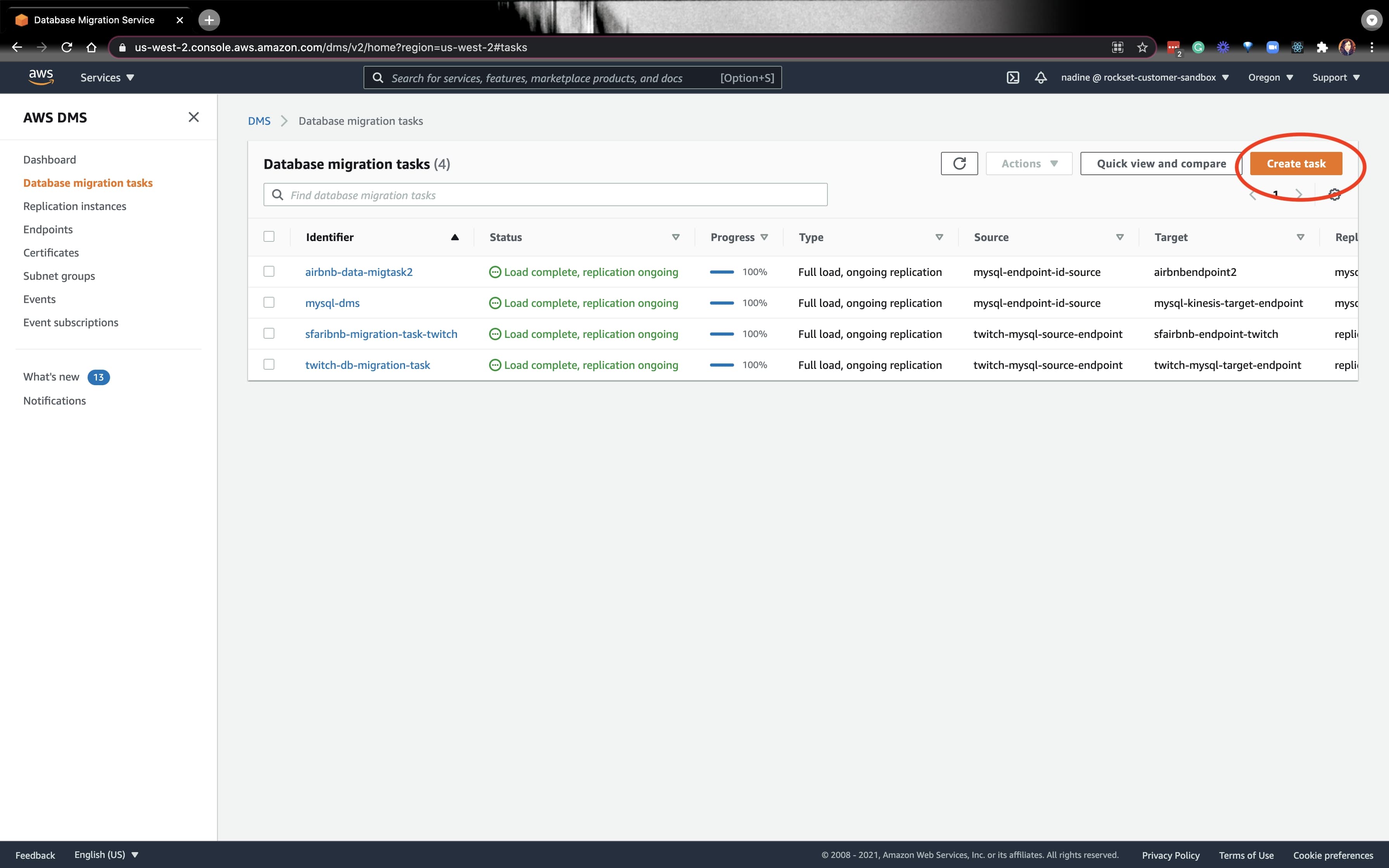
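For reference, the console form maps onto boto3's DMS `create_endpoint` call roughly as follows. This is a sketch under assumptions: the endpoint identifier `sfairbnb-kinesis-target` is hypothetical, and `MessageFormat="json"` is a guessed default, so check the Rockset docs mentioned above for the settings they recommend.

```python
from typing import Any, Dict


def kinesis_target_endpoint_params(
    identifier: str, stream_arn: str, role_arn: str
) -> Dict[str, Any]:
    """Keyword arguments for a DMS target endpoint that writes to Kinesis."""
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": "target",
        "EngineName": "kinesis",
        "KinesisSettings": {
            "StreamArn": stream_arn,           # the Kinesis stream ARN field
            "ServiceAccessRoleArn": role_arn,  # the service access role ARN field
            "MessageFormat": "json",           # assumption: plain JSON records
        },
    }


def create_kinesis_target(dms: Any, stream_arn: str, role_arn: str) -> str:
    """Create the endpoint with a boto3 DMS client and return its ARN."""
    params = kinesis_target_endpoint_params("sfairbnb-kinesis-target", stream_arn, role_arn)
    return dms.create_endpoint(**params)["Endpoint"]["EndpointArn"]


# Example (requires boto3 and configured AWS credentials):
#   import boto3
#   target_arn = create_kinesis_target(boto3.client("dms"), stream_arn, role_arn)
```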

Next, we'll need to create a database migration task:

We'll choose the source endpoint we created last week and the target endpoint we created today. You can read the docs to see how to modify the Task Settings.
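The task can also be sketched with boto3's `create_replication_task`. Assumptions: the replication instance from last week already exists and its ARN is passed in, the task identifier `sfairbnb-to-kinesis` is hypothetical, and `full-load-and-cdc` is chosen so DMS copies the existing rows and then streams ongoing changes. The table-mapping selection rule restricts the task to `rocksetdemo1.sfairbnb`.

```python
import json
from typing import Any


def table_mappings(schema: str, table: str) -> str:
    """DMS table-mapping JSON with one selection rule for a single table."""
    return json.dumps({
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-" + table,
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        ]
    })


def create_task(dms: Any, source_arn: str, target_arn: str, instance_arn: str) -> str:
    """Create the migration task with a boto3 DMS client and return its ARN."""
    resp = dms.create_replication_task(
        ReplicationTaskIdentifier="sfairbnb-to-kinesis",  # hypothetical name
        SourceEndpointArn=source_arn,      # last week's MySQL source endpoint
        TargetEndpointArn=target_arn,      # today's Kinesis target endpoint
        ReplicationInstanceArn=instance_arn,
        MigrationType="full-load-and-cdc",
        TableMappings=table_mappings("rocksetdemo1", "sfairbnb"),
    )
    return resp["ReplicationTask"]["ReplicationTaskArn"]
```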

If everything is working fine, we're ready for the Rockset portion.

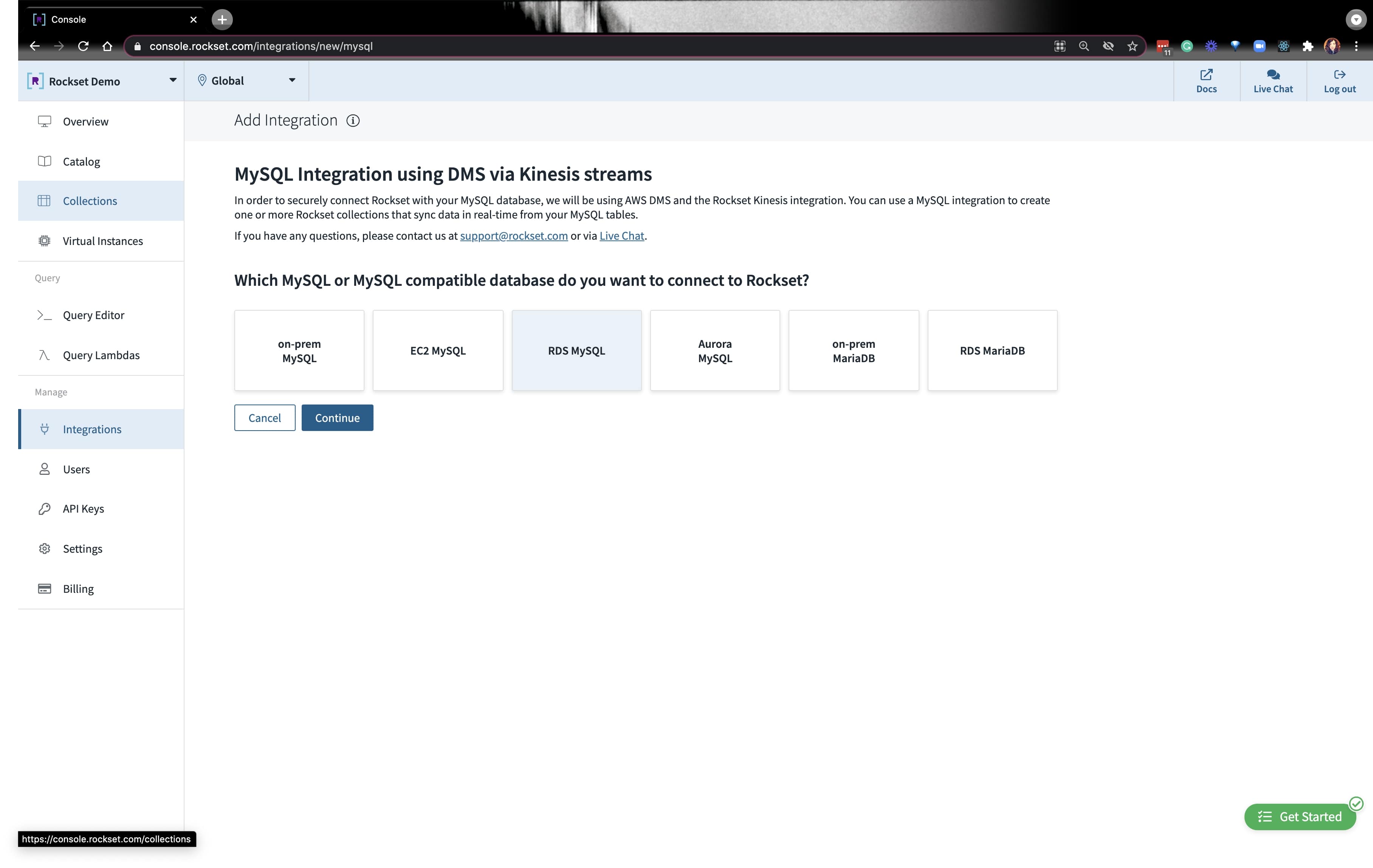

Integrating MySQL with Rockset via a data connector

Go ahead and create a new MySQL integration and click on RDS MySQL. You'll see prompts asking you to confirm that you completed the various setup steps we just covered. Just click Done and move to the next prompt.

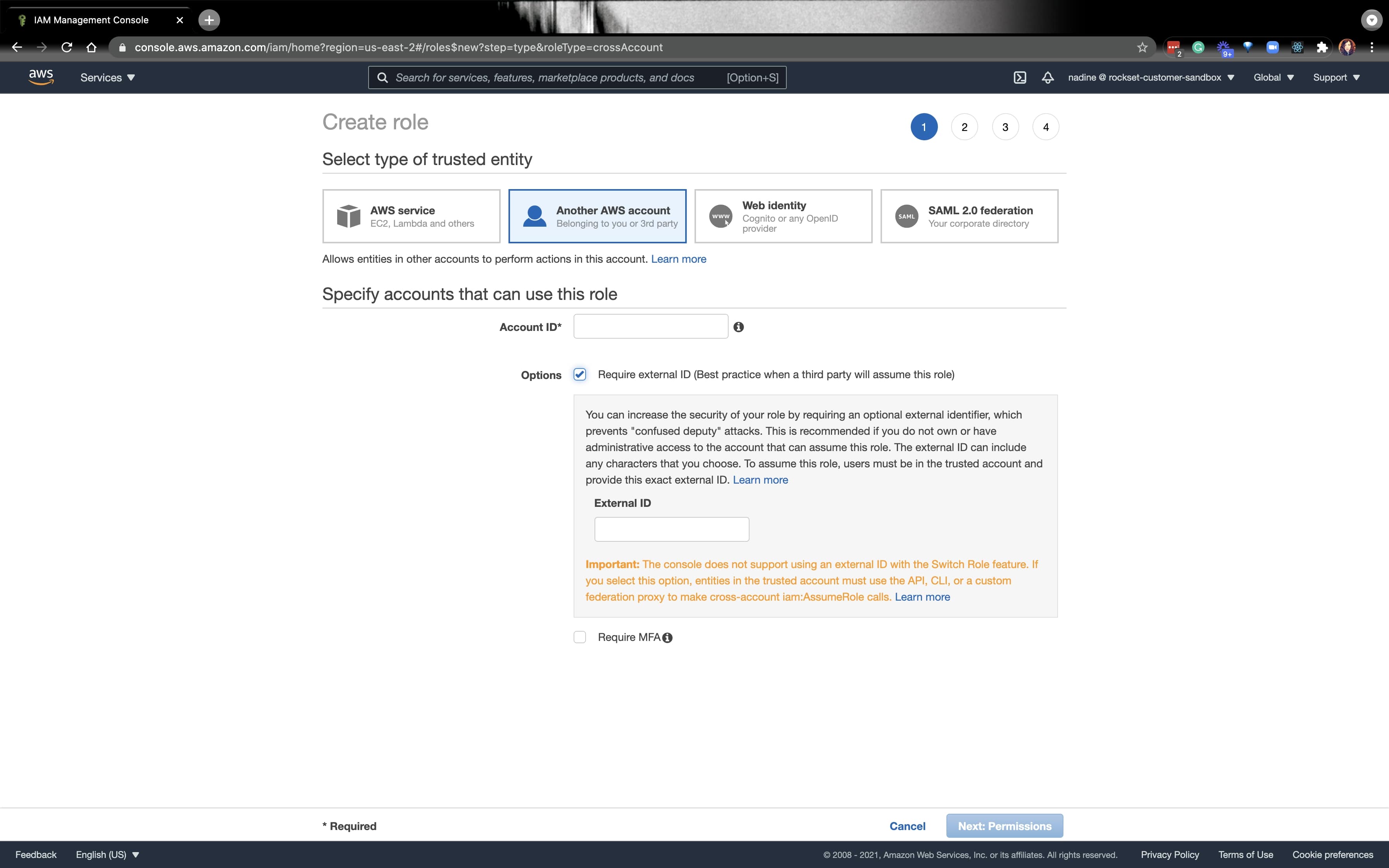

The last prompt will ask you for a role ARN specifically for Rockset. Navigate to the AWS IAM console, create a rockset-role, and enter Rockset's account ID and external ID:

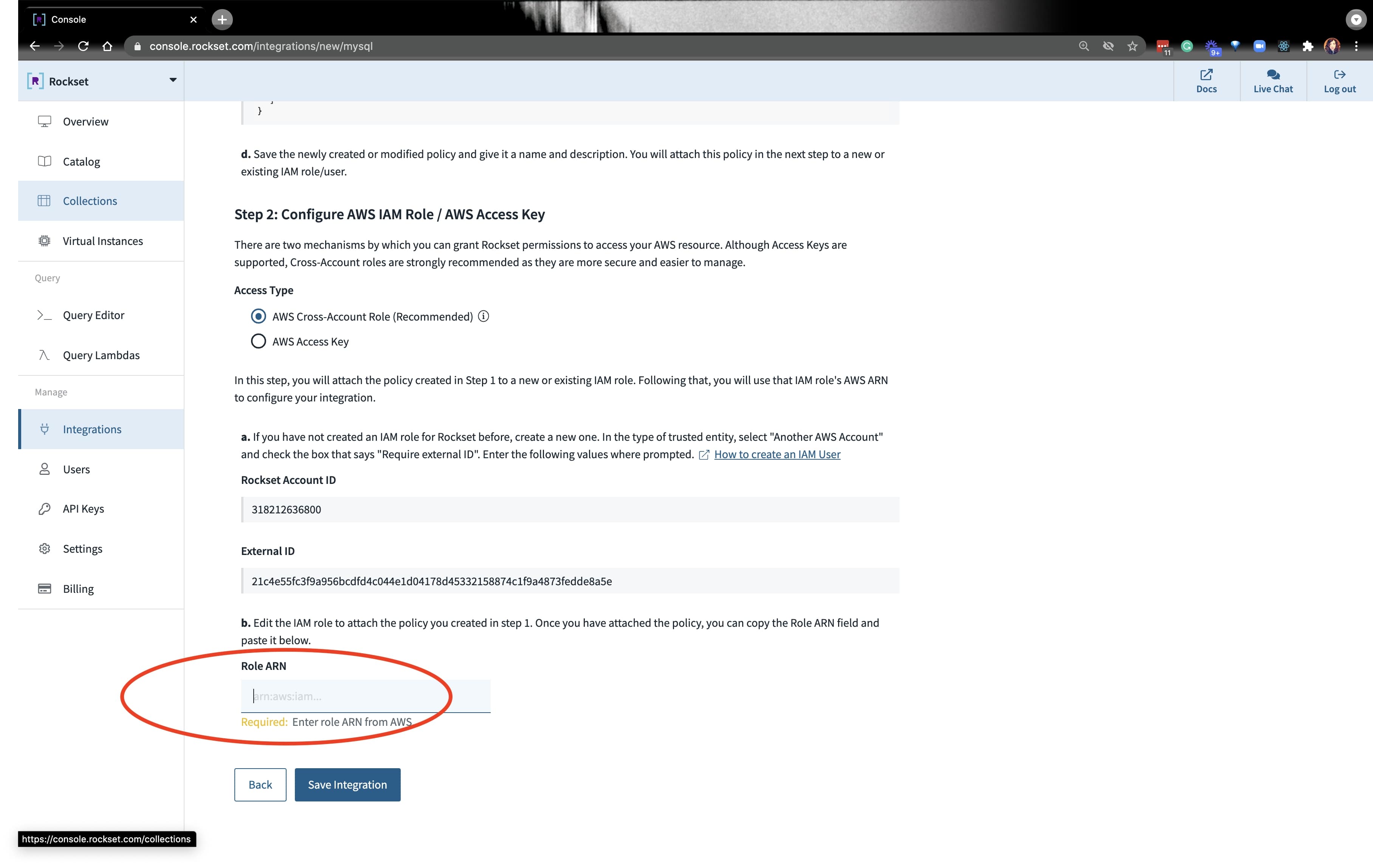
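The trust relationship for this role looks roughly like the policy built below: Rockset's AWS account may assume the role only when it presents the external ID. The account ID and external ID in the example are placeholders (copy the real values from the Rockset prompt), and the helper name is hypothetical. This sketch covers only the trust policy.

```python
import json
from typing import Any, Dict


def external_account_trust_policy(account_id: str, external_id: str) -> Dict[str, Any]:
    """Allow another AWS account to assume this role, gated by an external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values; use the ones shown in the Rockset integration prompt.
    print(json.dumps(external_account_trust_policy("111122223333", "example-external-id"), indent=2))
```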

Copy the ARN of the role we just created and paste it at the bottom, where the prompt asks for it:

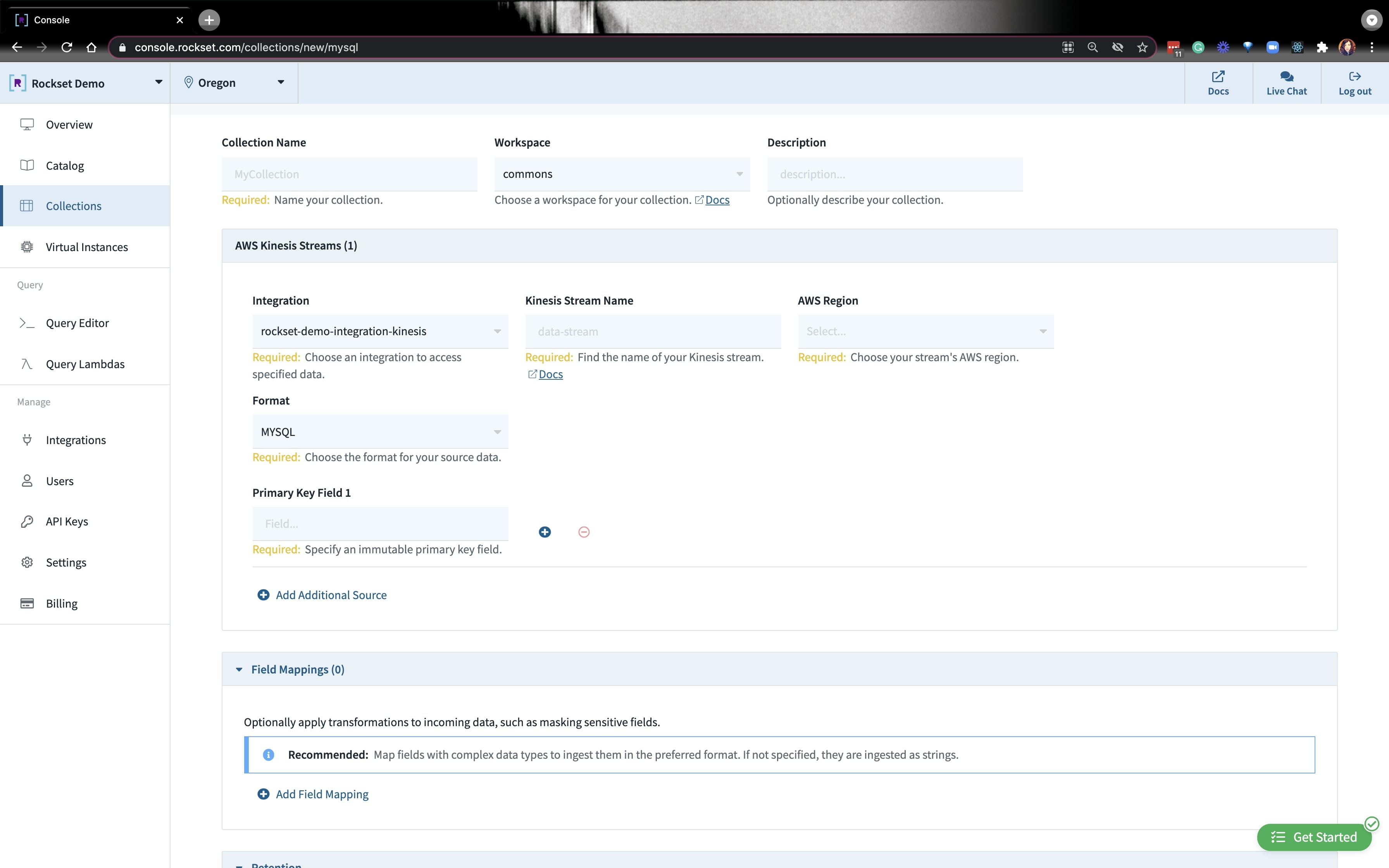

Once the integration is set up, you'll need to create a collection. Go ahead and enter your collection name, AWS region, and Kinesis stream information:

After a minute or so, you should be able to query the data coming in from MySQL!

Querying the Airbnb Data on Rockset

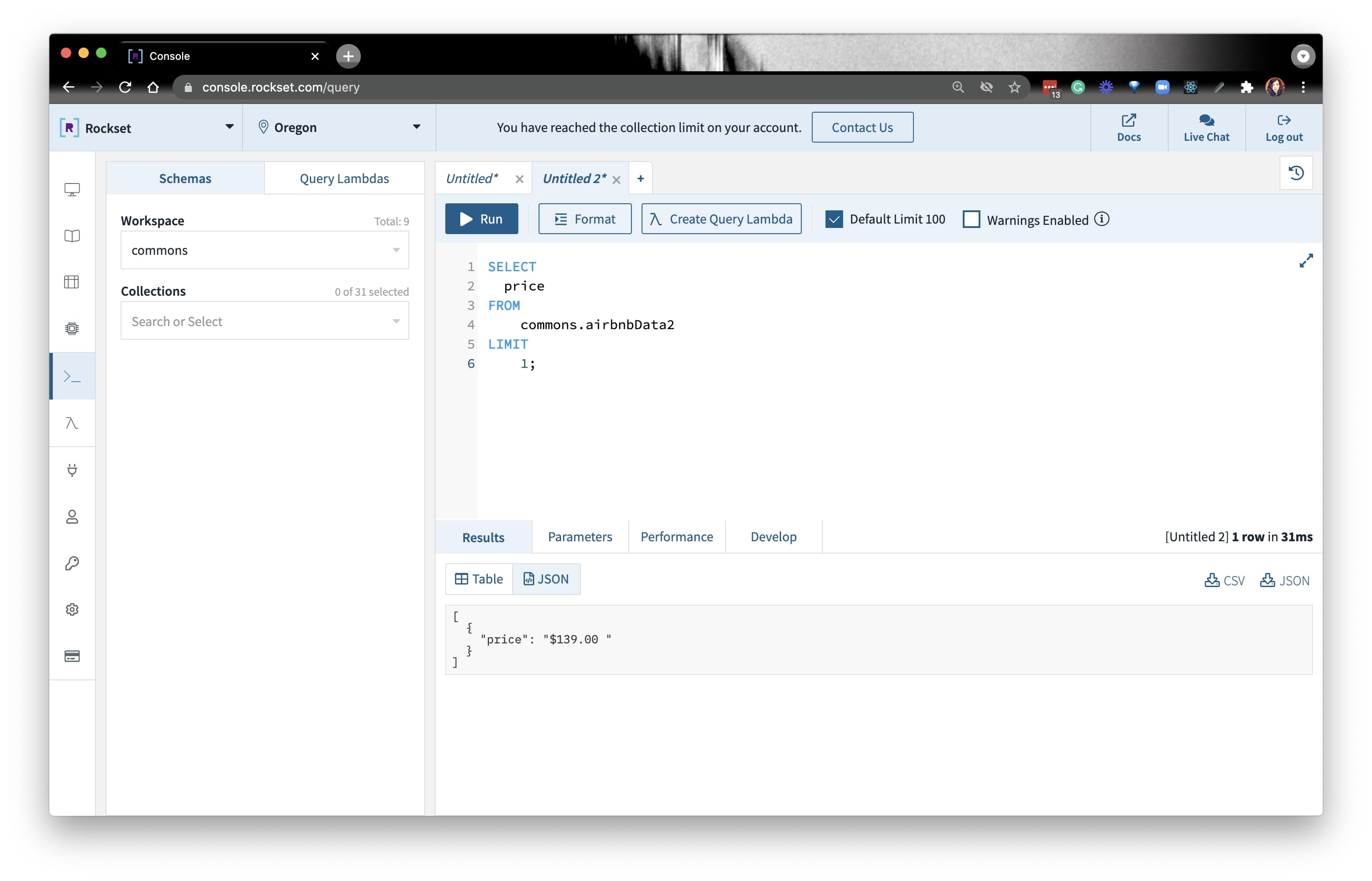

After everything is loaded, we're ready to write some queries. Since the data is based on SF, and we know SF prices are nothing to brag about, let's see what the average Airbnb price in SF is. Since price comes in as a string type, we'll need to convert it to a float type:

```sql
SELECT price
FROM yourCollection
LIMIT 1;
```

We first used a regex to get rid of the $. There are two approaches; in this stream, we used REGEXP_REPLACE(). From there, we used TRY_CAST() to convert the price to a float type and then took the average price. The query looked like this:

```sql
SELECT AVG(TRY_CAST(REGEXP_REPLACE(price, '[^\d.]') AS float)) avgprice
FROM commons.sfairbnbCollectioName
WHERE TRY_CAST(REGEXP_REPLACE(price, '[^\d.]') AS float) IS NOT NULL
  AND city = 'San Francisco';
```

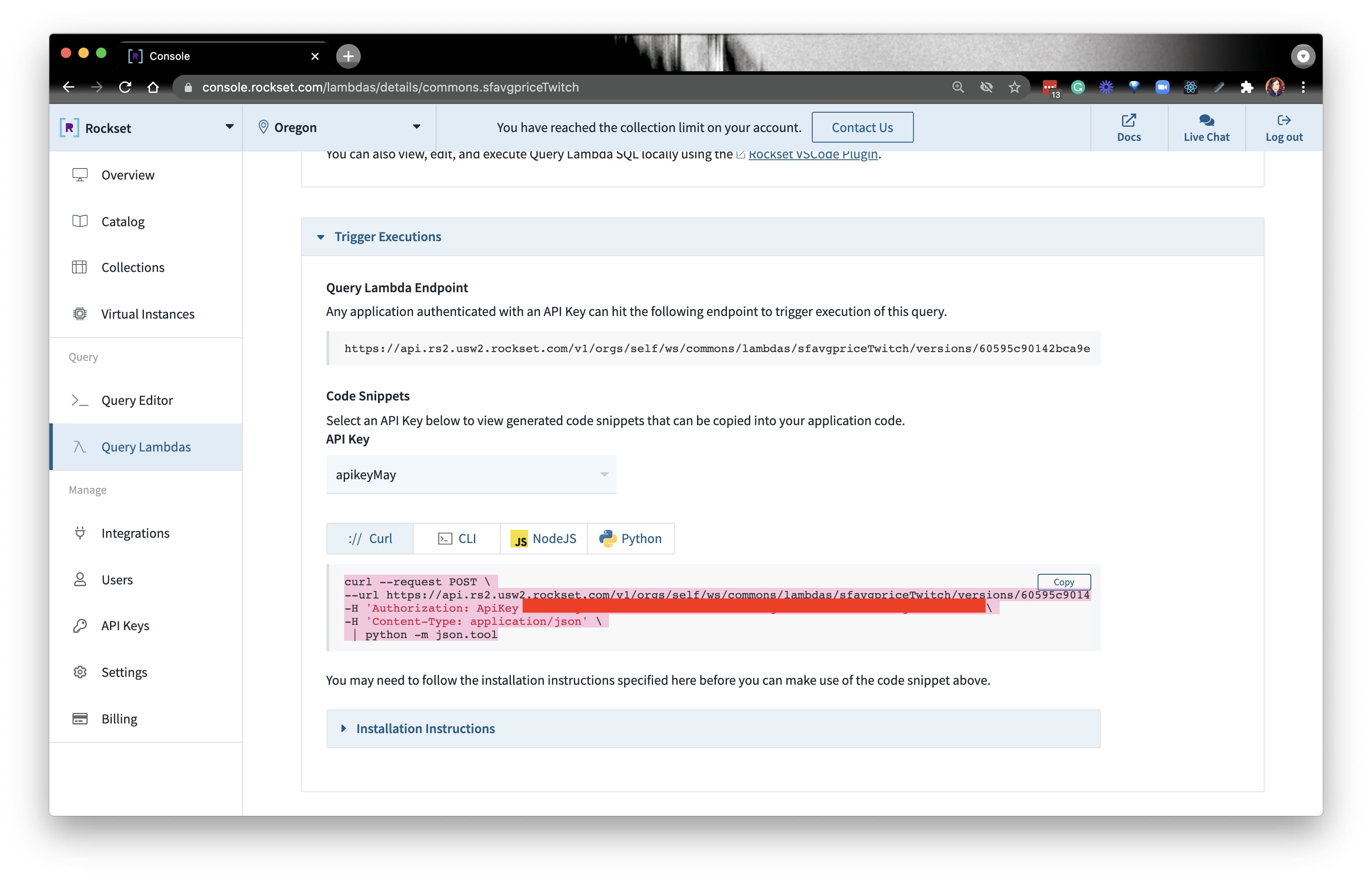
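To make the cleanup step in that query concrete, here is a small Python equivalent of the same logic: strip everything except digits and dots, convert to a float, skip values that fail to convert (the way `TRY_CAST` yields NULL), and average the rest. It runs on made-up sample strings and is only an illustration; it is not code that runs inside Rockset.

```python
import re
from typing import Iterable, Optional

# Same character class as the SQL: anything that is not a digit or a dot.
NON_NUMERIC = re.compile(r"[^\d.]")


def try_parse_price(raw: Optional[str]) -> Optional[float]:
    """Strip non-numeric characters, then convert; None plays the role of NULL."""
    if raw is None:
        return None
    cleaned = NON_NUMERIC.sub("", raw)
    try:
        return float(cleaned)
    except ValueError:
        # Like TRY_CAST, give back "NULL" for values such as "" or "1.2.3".
        return None


def average_price(prices: Iterable[Optional[str]]) -> Optional[float]:
    """Average only the prices that converted, like AVG(...) with IS NOT NULL."""
    values = [v for v in (try_parse_price(p) for p in prices) if v is not None]
    return sum(values) / len(values) if values else None


if __name__ == "__main__":
    sample = ["$150.00", "$1,200.00", None, "n/a"]  # made-up sample values
    print(average_price(sample))  # 675.0
```

Note that the comma in "$1,200.00" is removed along with the $, which is why the character class keeps only digits and the decimal point.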

Once we've written the query, we can use the Query Lambda feature to create a data API on top of the data from MySQL. We can then execute the query from our terminal by copying the cURL command and pasting it into the terminal:
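If you'd rather call the Query Lambda from code than from curl, here is a minimal standard-library sketch. It assumes three things you should verify against the curl command you copied: the endpoint URL is taken verbatim from that command, the API key goes in an `Authorization: ApiKey <key>` header, and the JSON body carries a `parameters` list. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional


def build_lambda_request(
    url: str, api_key: str, parameters: Optional[List[Dict[str, Any]]] = None
) -> urllib.request.Request:
    """Build (but do not send) a POST request mirroring the copied curl command."""
    body = json.dumps({"parameters": parameters or []}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"ApiKey {api_key}",  # assumption: header format
            "Content-Type": "application/json",
        },
    )


def run_lambda(url: str, api_key: str) -> Dict[str, Any]:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(build_lambda_request(url, api_key)) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes it easy to inspect the headers and body before making a real call.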

Voila! That's an end-to-end example of how to scale your MySQL analytical workloads on Rockset. If you haven't already, you can read Justin's blog to learn more about scaling MySQL for real-time analytics.

You can catch the stream of this content here:

Embedded content: https://www.youtube.com/embed/0UCiWfs-_nI

TL;DR: you can find all the resources you need in the developer corner.