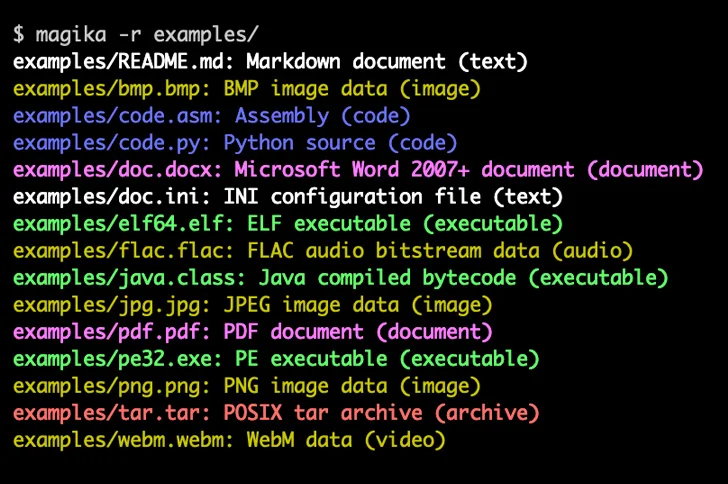

Google has announced that it is open-sourcing Magika, an artificial intelligence (AI)-powered tool for identifying file types, to help defenders accurately detect binary and textual file types.

“Magika outperforms conventional file identification methods providing an overall 30% accuracy boost and up to 95% higher precision on traditionally hard to identify, but potentially problematic content such as VBA, JavaScript, and Powershell,” the company said.
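For context, conventional identification typically matches a file's leading "magic bytes" against a table of known signatures. The minimal Python sketch below illustrates that general approach; it is not Magika's method, and the signature table and the `identify_by_signature` helper are hypothetical names chosen for illustration. It shows why script content is hard for such tools: JavaScript or PowerShell source carries no fixed signature, so both collapse into a generic "text" label.

```python
# Illustrative signature table (an assumption for this sketch);
# real tools ship far larger signature databases.
MAGIC_SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"%PDF-", "pdf"),
    (b"PK\x03\x04", "zip"),
    (b"\x7fELF", "elf"),
    (b"GIF87a", "gif"),
    (b"GIF89a", "gif"),
]


def identify_by_signature(data: bytes) -> str:
    """Return a coarse file type label based on leading magic bytes."""
    for signature, label in MAGIC_SIGNATURES:
        if data.startswith(signature):
            return label
    # No binary signature matched: fall back to a text/binary guess.
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return "unknown-binary"
    return "text"


if __name__ == "__main__":
    samples = {
        "report.pdf": b"%PDF-1.7\n...",
        "script.js": b"function f() { return 1; }",
        "script.ps1": b'Write-Host "hi"',
    }
    for name, blob in samples.items():
        print(name, "->", identify_by_signature(blob))
```

In this sketch the PDF sample is recognized, while the JavaScript and PowerShell samples are indistinguishable from each other, which is the kind of gap a content-based model aims to close.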

The software uses a “custom, highly optimized deep-learning model” that enables the precise identification of file types within milliseconds. Magika implements inference functions using the Open Neural Network Exchange (ONNX).

Google said it internally uses Magika at scale to help improve users’ safety by routing Gmail, Drive, and Safe Browsing files to the proper security and content policy scanners.

In November 2023, the tech giant unveiled RETVec (short for Resilient and Efficient Text Vectorizer), a multilingual text processing model to detect potentially harmful content such as spam and malicious emails in Gmail.

Amid an ongoing debate on the risks of the rapidly developing technology and its abuse by nation-state actors associated with Russia, China, Iran, and North Korea to boost their hacking efforts, Google said deploying AI at scale can strengthen digital security and “tilt the cybersecurity balance from attackers to defenders.”

It also emphasized the need for a balanced regulatory approach to AI usage and adoption in order to avoid a future where attackers can innovate, but defenders are restrained due to AI governance choices.

“AI allows security professionals and defenders to scale their work in threat detection, malware analysis, vulnerability detection, vulnerability fixing and incident response,” the tech giant’s Phil Venables and Royal Hansen noted. “AI affords the best opportunity to upend the Defender’s Dilemma, and tilt the scales of cyberspace to give defenders a decisive advantage over attackers.”

Concerns have also been raised about generative AI models’ use of web-scraped data for training purposes, which may include personal data.

“If you don’t know what your model is going to be used for, how can you ensure its downstream use will respect data protection and people’s rights and freedoms?,” the U.K. Information Commissioner’s Office (ICO) pointed out last month.

What’s more, new research has shown that large language models can function as “sleeper agents” that may be seemingly innocuous but can be programmed to engage in deceptive or malicious behavior when specific criteria are met or special instructions are provided.

“Such backdoor behavior can be made persistent so that it is not removed by standard safety training techniques, including supervised fine-tuning, reinforcement learning, and adversarial training (eliciting unsafe behavior and then training to remove it),” researchers from AI startup Anthropic said in the study.