Introduction

Indexes are a crucial part of proper data modeling for all databases, and DynamoDB is no exception. DynamoDB’s secondary indexes are a powerful tool for enabling new access patterns for your data.

In this post, we’ll look at DynamoDB secondary indexes. First, we’ll start with some conceptual points about how to think about DynamoDB and the problems that secondary indexes solve. Then, we’ll look at some practical tips for using secondary indexes effectively. Finally, we’ll close with some thoughts on when you should use secondary indexes and when you should look for other solutions.

Let’s get started.

What is DynamoDB, and what are DynamoDB secondary indexes?

Before we get into use cases and best practices for secondary indexes, we should first understand what DynamoDB secondary indexes are. And to do that, we should understand a bit about how DynamoDB works.

This assumes some basic understanding of DynamoDB. We’ll cover the essential points you need to know to understand secondary indexes, but if you’re new to DynamoDB, you may want to start with a more basic introduction.

The Bare Minimum You Need to Know about DynamoDB

DynamoDB is a unique database. It’s designed for OLTP workloads, meaning it’s great for handling a high volume of small operations: think of things like adding an item to a shopping cart, liking a video, or adding a comment on Reddit. In that way, it can handle similar applications to other databases you might have used, like MySQL, PostgreSQL, MongoDB, or Cassandra.

DynamoDB’s key promise is its guarantee of consistent performance at any scale. Whether your table has 1 megabyte of data or 1 petabyte of data, DynamoDB aims to deliver the same latency for your OLTP-like requests. This is a big deal: many databases see reduced performance as you increase the amount of data or the number of concurrent requests. However, providing these guarantees requires some tradeoffs, and DynamoDB has some unique characteristics that you need to understand to use it effectively.

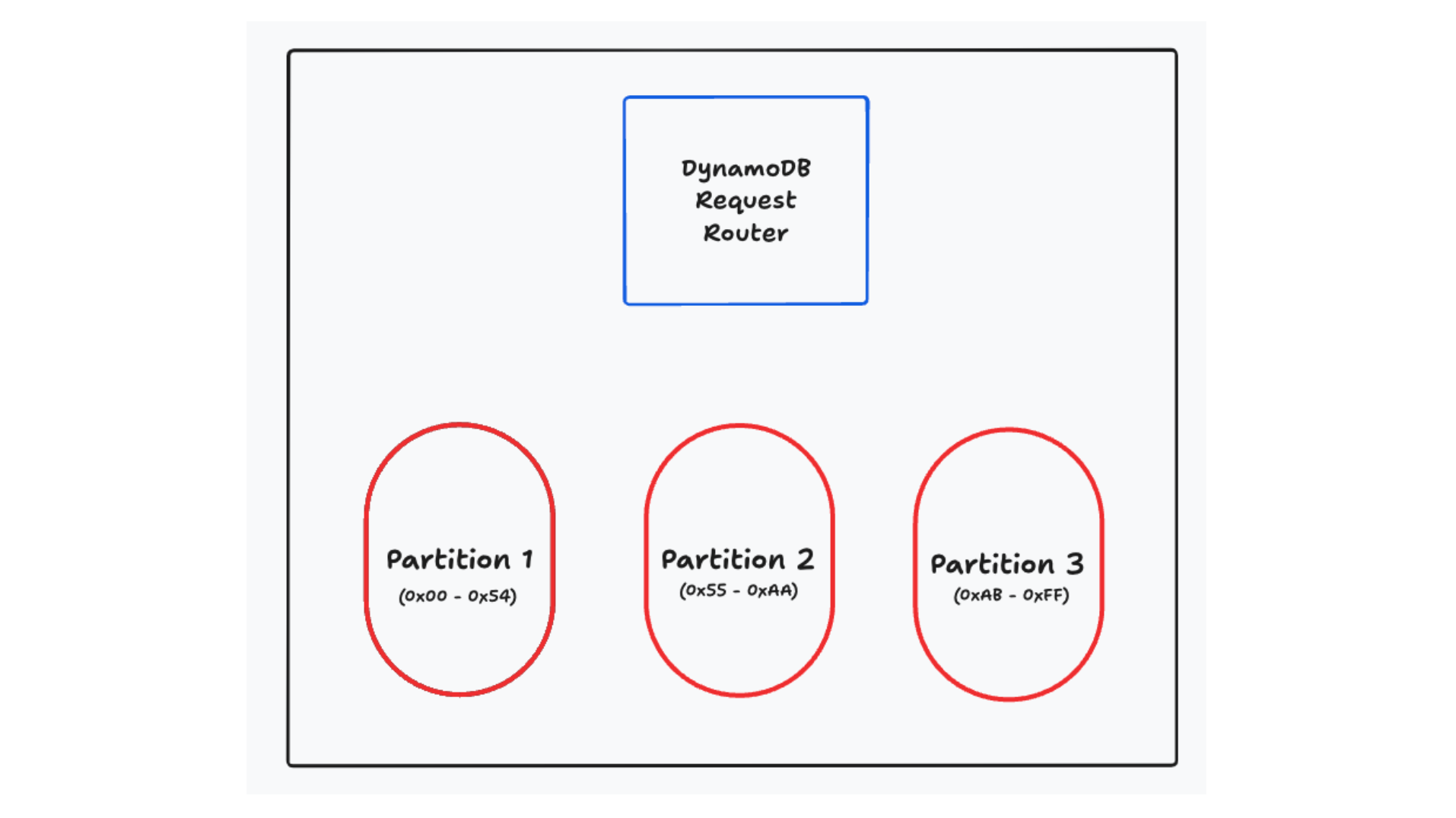

First, DynamoDB horizontally scales your database by spreading your data across multiple partitions under the hood. These partitions are not visible to you as a user, but they are at the core of how DynamoDB works. You’ll specify a primary key for your table (either a single element, called a ‘partition key’, or a combination of a partition key and a sort key), and DynamoDB uses the partition key to determine which partition your data lives on. Every request you make goes through a request router that determines which partition should handle it. These partitions are small (generally 10GB or less) so they can be moved, split, replicated, and otherwise managed independently.
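To make the request-router idea concrete, here is a toy model in Python. It is only an illustration under stated assumptions: DynamoDB’s real hash function and partition map are internal, so `NUM_PARTITIONS` and the MD5-based hash below are stand-ins. What it does show is the key property: the partition key alone decides where an item lives, so every item that shares a partition key lands on the same partition.

```python
import hashlib

# Hypothetical partition count; DynamoDB manages the real number for you.
NUM_PARTITIONS = 4


def route(partition_key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Toy request router: map a partition key to a partition number.

    MD5 is a stand-in for DynamoDB's internal (unpublished) hash function.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


# Items with the same partition key always route to the same partition,
# regardless of their sort key.
items = [
    {"Organization": "Acme", "Username": "alice"},
    {"Organization": "Acme", "Username": "bob"},
    {"Organization": "Initech", "Username": "carol"},
]
for item in items:
    print(item["Organization"], item["Username"], "-> partition", route(item["Organization"]))
```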

Horizontal scalability via sharding is interesting but is by no means unique to DynamoDB. Many other databases, both relational and non-relational, use sharding to scale horizontally. What is unique to DynamoDB is how it forces you to use your primary key to access your data. Rather than relying on a query planner that translates your requests into a series of lower-level operations, DynamoDB requires you to address your data by its primary key. You are essentially getting a directly addressable index for your data.

The API for DynamoDB reflects this. A set of single-item operations (GetItem, PutItem, UpdateItem, DeleteItem) lets you read, write, and delete individual items. Additionally, the Query operation lets you retrieve multiple items with the same partition key. If you have a table with a composite primary key, items with the same partition key are grouped together on the same partition and ordered according to the sort key, allowing you to handle patterns like “Fetch the most recent Orders for a User” or “Fetch the last 10 Sensor Readings for an IoT Device”.

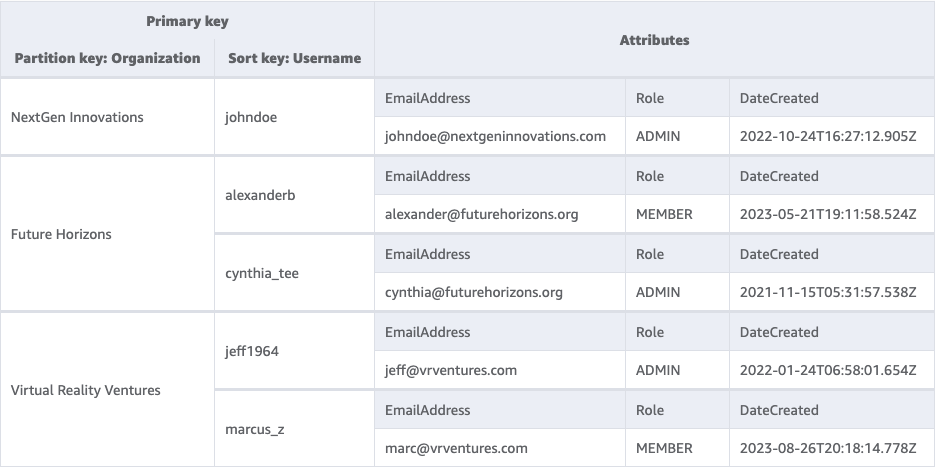

For example, let’s imagine a SaaS application that has a table of Users. Every User belongs to a single Organization. We might have a table that looks as follows:

We’re using a composite primary key with a partition key of ‘Organization’ and a sort key of ‘Username’. This allows us to fetch or update an individual User by providing their Organization and Username. We can also fetch all of the Users for a single Organization by providing just the Organization to a Query operation.
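Here is a sketch of those two access patterns as request parameters for the low-level boto3 DynamoDB client (`client.get_item(**params)` and `client.query(**params)`). The table name `Users` and the sample values are assumptions for illustration; the functions only build the parameter dictionaries, so they run without AWS credentials.

```python
def get_user_params(org: str, username: str) -> dict:
    """GetItem: address one User directly by its full primary key."""
    return {
        "TableName": "Users",  # hypothetical table name
        "Key": {
            "Organization": {"S": org},
            "Username": {"S": username},
        },
    }


def list_org_users_params(org: str) -> dict:
    """Query: fetch every User sharing one partition key (Organization).

    Results come back ordered by the sort key (Username), ascending by default.
    """
    return {
        "TableName": "Users",
        "KeyConditionExpression": "#org = :org",
        "ExpressionAttributeNames": {"#org": "Organization"},
        "ExpressionAttributeValues": {":org": {"S": org}},
    }


# With a real client:
#   import boto3
#   client = boto3.client("dynamodb")
#   client.get_item(**get_user_params("Acme", "alice"))
#   client.query(**list_org_users_params("Acme"))
print(get_user_params("Acme", "alice"))
print(list_org_users_params("Acme"))
```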

What are secondary indexes, and how do they work?

With some basics in mind, let’s now look at secondary indexes. The best way to understand the need for secondary indexes is to understand the problem they solve. We’ve seen how DynamoDB partitions your data according to your primary key and how it pushes you to use the primary key to access your data. That’s all well and good for some access patterns, but what if you need to access your data in a different way?

In our example above, we had a table of users that we accessed by their organization and username. However, we may also need to fetch a single user by their email address. This pattern doesn’t fit the primary-key access pattern that DynamoDB pushes us towards. Because our table is partitioned by different attributes, there’s no clear way to access our data in the way we want. We could do a full table scan, but that’s slow and inefficient. We could duplicate our data into a separate table with a different primary key, but that adds complexity.

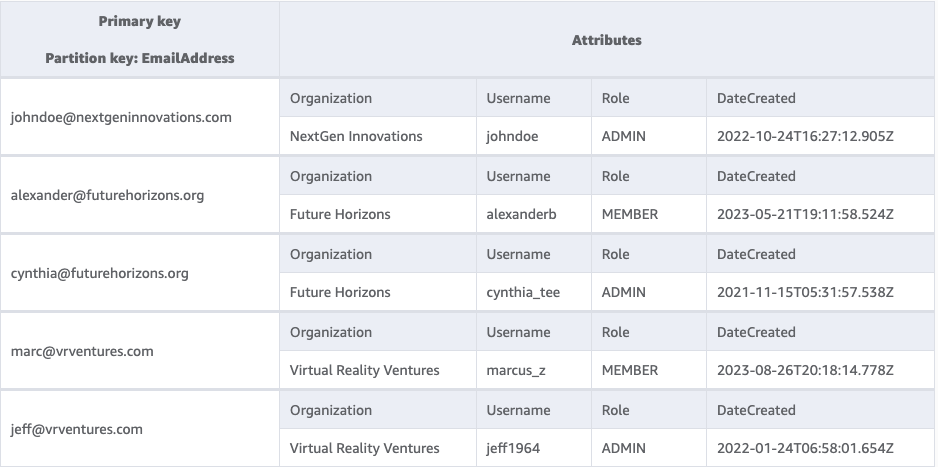

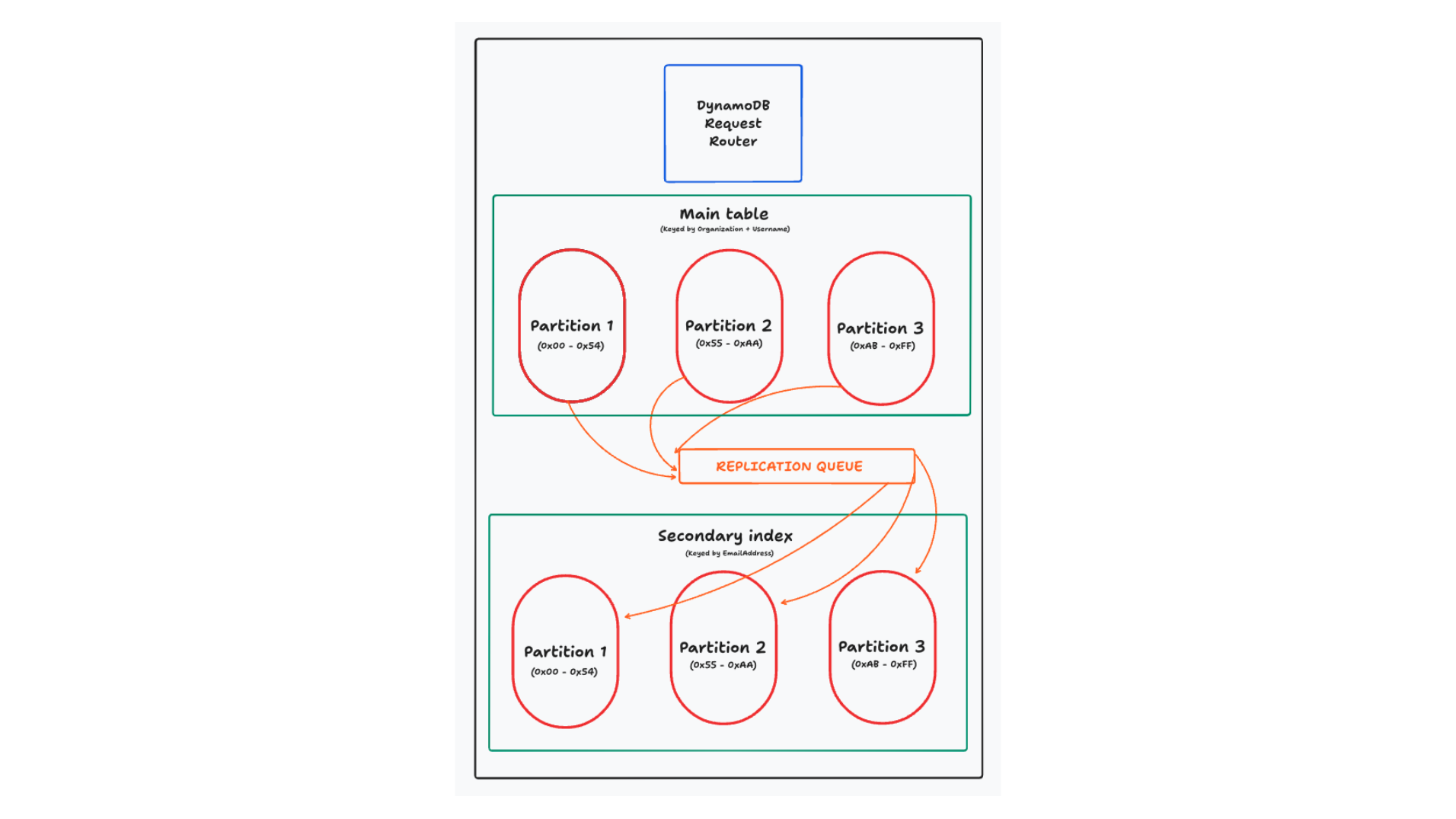

This is where secondary indexes come in. A secondary index is basically a fully managed copy of your data with a different primary key. You specify a secondary index on your table by declaring the primary key for the index. As writes come into your table, DynamoDB automatically replicates the data to your secondary index.

Note: Everything in this section applies to global secondary indexes. DynamoDB also offers local secondary indexes, which are a bit different. In almost all cases, you’ll want a global secondary index. For more details on the differences, check out this article on choosing a global or local secondary index.

In this case, we’ll add a secondary index to our table with a partition key of “Email”. The secondary index will look as follows:

Notice that this is the same data; it has just been reorganized with a different primary key. Now, we can efficiently look up a user by their email address.
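As a sketch of what “declaring the primary key for the index” looks like, here are `CreateTable` parameters for the low-level boto3 client that add a global secondary index keyed on `Email`, plus the `Query` that uses it. The table name `Users`, the index name `EmailIndex`, on-demand billing, and the `ALL` projection are assumptions chosen for illustration. Note that `GetItem` only works against the base table, so even a single-user lookup on the index is a `Query`.

```python
def create_users_table_params() -> dict:
    """CreateTable parameters: a Users table plus a GSI keyed on Email."""
    return {
        "TableName": "Users",  # hypothetical table name
        "BillingMode": "PAY_PER_REQUEST",
        # Every attribute used in a table or index key must be declared here.
        "AttributeDefinitions": [
            {"AttributeName": "Organization", "AttributeType": "S"},
            {"AttributeName": "Username", "AttributeType": "S"},
            {"AttributeName": "Email", "AttributeType": "S"},
        ],
        # Main table: composite primary key.
        "KeySchema": [
            {"AttributeName": "Organization", "KeyType": "HASH"},
            {"AttributeName": "Username", "KeyType": "RANGE"},
        ],
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "EmailIndex",  # hypothetical index name
                # The index's own primary key: a partition key on Email.
                "KeySchema": [{"AttributeName": "Email", "KeyType": "HASH"}],
                "Projection": {"ProjectionType": "ALL"},  # copy every attribute
            }
        ],
    }


def find_user_by_email_params(email: str) -> dict:
    """Query the GSI by email; GetItem is not available on an index."""
    return {
        "TableName": "Users",
        "IndexName": "EmailIndex",  # route the read to the GSI
        "KeyConditionExpression": "#email = :email",
        "ExpressionAttributeNames": {"#email": "Email"},
        "ExpressionAttributeValues": {":email": {"S": email}},
    }


def first_user(query_response: dict):
    """GSI keys need not be unique, so a Query response holds a list of items."""
    items = query_response.get("Items", [])
    return items[0] if items else None
```

With boto3 you would pass these as `client.create_table(**create_users_table_params())` and `client.query(**find_user_by_email_params("alice@example.com"))`.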

In some ways, this is very similar to an index in other databases. Both provide a data structure that is optimized for lookups on a particular attribute. But DynamoDB’s secondary indexes differ in a few key ways.

First, and most importantly, DynamoDB’s indexes live on entirely different partitions from your main table. DynamoDB wants every lookup to be efficient and predictable, and it wants to provide linear horizontal scaling. To do this, it needs to reshard your data by the attributes you’ll use to query it.

Other distributed databases generally don’t reshard your data for a secondary index. Instead, each shard usually maintains a secondary index over just the data on that shard. However, if your index doesn’t use the shard key, you lose some of the benefits of horizontal scaling: a query without the shard key must do a scatter-gather operation across all shards to find the data you’re looking for.
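A toy model of that difference, under obvious simplifications: shards are plain Python lists, a shard-local index lookup must visit every shard, while a resharded (global) index routes the lookup by the indexed attribute straight to one shard. The shard count, the CRC32-based routing, and the sample users are all illustrative assumptions, not how any particular database is implemented.

```python
import zlib
from collections import defaultdict

NUM_SHARDS = 4  # illustrative


def shard_for(value: str) -> int:
    """Deterministic toy routing by a key value."""
    return zlib.crc32(value.encode("utf-8")) % NUM_SHARDS


users = [
    {"Organization": f"org{i % 3}", "Username": f"user{i}", "Email": f"user{i}@example.com"}
    for i in range(12)
]

# Main data, sharded by the table's partition key (Organization).
table_shards = defaultdict(list)
for u in users:
    table_shards[shard_for(u["Organization"])].append(u)


def local_index_lookup(email: str):
    """Shard-local indexes: every shard must be asked (scatter-gather)."""
    found, shards_touched = [], 0
    for shard_id in range(NUM_SHARDS):
        shards_touched += 1
        found += [u for u in table_shards[shard_id] if u["Email"] == email]
    return found, shards_touched


# Global index: the same data resharded by Email.
index_shards = defaultdict(list)
for u in users:
    index_shards[shard_for(u["Email"])].append(u)


def global_index_lookup(email: str):
    """Resharded index: go straight to the one shard that can hold this email."""
    shard = index_shards[shard_for(email)]
    return [u for u in shard if u["Email"] == email], 1
```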

A second way that DynamoDB’s secondary indexes differ is that they (often) copy the entire item to the secondary index. In a relational database, an index entry often contains just a pointer to the primary key of the indexed row. After locating a relevant record in the index, the database then needs to fetch the full row. Because DynamoDB’s secondary indexes live on different nodes than the main table, DynamoDB wants to avoid a network hop back to the original item. Instead, you copy as much data as you need into the secondary index to handle your read.

Secondary indexes in DynamoDB are powerful, but they have some limitations. First, they’re read-only: you can’t write directly to a secondary index. Instead, you write to your main table, and DynamoDB handles the replication to your secondary index. Second, you’re charged for the write operations to your secondary indexes. Thus, adding a secondary index to your table will often double the total write costs for your table.

Tips for using secondary indexes

Now that we understand what secondary indexes are and how they work, let’s talk about how to use them effectively. Secondary indexes are a powerful tool, but they can be misused. Here are some tips for getting the most out of them.

Try to have read-only patterns on secondary indexes

The first tip seems obvious: secondary indexes can only be used for reads, so you should aim to have read-only patterns on your secondary indexes! And yet, I see this mistake all the time. Developers first read from a secondary index, then write to the main table. This results in extra cost and extra latency, and you can often avoid it with some upfront planning.

If you’ve read anything about DynamoDB data modeling, you probably know that you should think about your access patterns first. It’s not like a relational database, where you first design normalized tables and then write queries to join them together. In DynamoDB, you should think about the actions your application will take and then design your tables and indexes to support those actions.

When designing my table, I like to start with the write-based access patterns. With my writes, I’m often maintaining some sort of constraint, such as uniqueness on a username or a maximum number of members in a group. I want to design my table in a way that makes this straightforward, ideally without using DynamoDB Transactions or a read-modify-write pattern that could be subject to race conditions.
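For example, when the username is part of the primary key, uniqueness can be enforced with a single conditional write: the `PutItem` fails with a `ConditionalCheckFailedException` if an item with that key already exists, with no prior read and no race window. A minimal sketch of the request for the low-level boto3 client, assuming a table named `Users` keyed on `Organization` and `Username`:

```python
def create_user_params(org: str, username: str, email: str) -> dict:
    """PutItem that only succeeds if (Organization, Username) is unused.

    attribute_not_exists on a key attribute checks whether an item with
    this exact primary key already exists, so no prior read is needed.
    """
    return {
        "TableName": "Users",  # hypothetical table name
        "Item": {
            "Organization": {"S": org},
            "Username": {"S": username},
            "Email": {"S": email},
        },
        "ConditionExpression": "attribute_not_exists(#u)",
        "ExpressionAttributeNames": {"#u": "Username"},
    }


# With boto3, a duplicate username raises
# client.exceptions.ConditionalCheckFailedException.
```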

As you work through these, you’ll often find that there’s a ‘primary’ way to identify your item that matches up with your write patterns. This will end up being your primary key. Then, adding further, secondary read patterns is easy with secondary indexes.

In our Users example from before, every User request will likely include the Organization and the Username. This allows me to look up the individual User record as well as authorize specific actions by the User. The email address lookup may serve less prominent access patterns, like a ‘forgot password’ flow or a ‘search for a user’ flow. These are read-only patterns, and they fit well with a secondary index.

Use secondary indexes when your keys are mutable

A second tip is to use secondary indexes for mutable values in your access patterns. Let’s first understand the reasoning behind it, and then look at situations where it applies.

DynamoDB lets you update an existing item with the UpdateItem operation. However, you cannot change the primary key of an item in an update. The primary key is the unique identifier for an item, and changing the primary key is essentially creating a new item. If you want to change the primary key of an existing item, you’ll need to delete the old item and create a new one. This two-step process is slower and more expensive. Often you’ll need to read the original item first, then use a transaction to delete the original item and create a new one in the same request.
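To see why this is costly, here is a sketch of renaming a user when `Username` is part of the table’s primary key. It assumes you have already read the old item (the extra round trip) and then builds a `TransactWriteItems` request that deletes the old key and puts a copy under the new one. The `Users` table and its key attributes are assumptions for illustration. Transactional writes are also billed at twice the write capacity of standard writes.

```python
import copy


def rename_user_params(old_item: dict, new_username: str) -> dict:
    """Build TransactWriteItems params that move an item to a new primary key.

    `old_item` is the low-level item returned by an earlier GetItem.
    """
    new_item = copy.deepcopy(old_item)  # don't mutate the caller's item
    new_item["Username"] = {"S": new_username}
    old_key = {
        "Organization": old_item["Organization"],
        "Username": old_item["Username"],
    }
    return {
        "TransactItems": [
            {"Delete": {"TableName": "Users", "Key": old_key}},
            {
                "Put": {
                    "TableName": "Users",
                    "Item": new_item,
                    # Don't clobber an existing user who already has the new name.
                    "ConditionExpression": "attribute_not_exists(#u)",
                    "ExpressionAttributeNames": {"#u": "Username"},
                }
            },
        ]
    }
```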

On the other hand, if this mutable value is in the primary key of a secondary index, DynamoDB will handle the delete + create process for you during replication. You can issue a simple UpdateItem request to change the value, and DynamoDB will handle the rest.
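By contrast, here is a sketch of changing a value that is only a key in a secondary index, assuming a `Users` table with a global secondary index keyed on `Email`. One `UpdateItem` is enough; DynamoDB removes the old index entry and writes the new one. (That replication still consumes index write capacity: changing an index key costs a delete plus a put on the index.)

```python
def change_email_params(org: str, username: str, new_email: str) -> dict:
    """UpdateItem that changes a GSI key attribute in a single request."""
    return {
        "TableName": "Users",  # hypothetical table name
        "Key": {
            "Organization": {"S": org},
            "Username": {"S": username},
        },
        "UpdateExpression": "SET #email = :email",
        # Fail rather than create a new item if the user doesn't exist.
        "ConditionExpression": "attribute_exists(#u)",
        "ExpressionAttributeNames": {"#email": "Email", "#u": "Username"},
        "ExpressionAttributeValues": {":email": {"S": new_email}},
    }
```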

I see this pattern come up in two main situations. The first, and most common, is when you have a mutable attribute that you want to sort on. The canonical examples here are a leaderboard for a game where people are continually racking up points, or a continually updating list of items where you want to show the most recently updated items first. Think of something like Google Drive, where you can sort your files by ‘last modified’.
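A sketch of the leaderboard case, under assumed names: a `Scores` table keyed by `PlayerId`, and a hypothetical `LeaderboardIndex` GSI whose partition key is `GameId` and whose sort key is the numeric `Score`. Each point gain is a plain `UpdateItem` on the player’s item, and reading the top N is a `Query` on the index with `ScanIndexForward=False` so results come back highest first.

```python
def add_points_params(player_id: str, points: int) -> dict:
    """Increment a player's score; the GSI re-sorts the item automatically."""
    return {
        "TableName": "Scores",  # hypothetical table keyed by PlayerId
        "Key": {"PlayerId": {"S": player_id}},
        "UpdateExpression": "ADD #score :points",
        "ExpressionAttributeNames": {"#score": "Score"},
        "ExpressionAttributeValues": {":points": {"N": str(points)}},  # numbers travel as strings
    }


def top_players_params(game_id: str, limit: int = 10) -> dict:
    """Read the highest scores for one game from the hypothetical GSI."""
    return {
        "TableName": "Scores",
        "IndexName": "LeaderboardIndex",  # PK: GameId (S), SK: Score (N)
        "KeyConditionExpression": "GameId = :game",
        "ExpressionAttributeValues": {":game": {"S": game_id}},
        "ScanIndexForward": False,  # descending by sort key
        "Limit": limit,
    }
```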

The second situation is when you have a mutable attribute that you want to filter on. Here, think of an ecommerce store with a history of orders for a user. You may want to let the user filter their orders by status: show me all my orders that are ‘shipped’ or ‘delivered’. You can build the status into your index’s partition key or the beginning of its sort key to allow exact-match filtering. As an order changes status, you update the status attribute and lean on DynamoDB to regroup the items appropriately in your secondary index.
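One way to sketch the order-status case: give each order a hypothetical `StatusDate` attribute of the form `<STATUS>#<ISO timestamp>` and use it as the sort key of an assumed `OrdersByStatus` GSI partitioned by `UserId`. A `begins_with` key condition then selects a single status, still ordered by date (ISO-8601 strings in the same format sort chronologically). The `Orders` table, attribute names, and key format are illustrative assumptions.

```python
def status_sort_key(status: str, created_at: str) -> str:
    """Compose the GSI sort key, e.g. 'SHIPPED#2024-05-01T12:00:00Z'."""
    return f"{status.upper()}#{created_at}"


def mark_order_params(user_id: str, order_id: str, status: str, created_at: str) -> dict:
    """Change an order's status; DynamoDB moves it within the GSI."""
    return {
        "TableName": "Orders",  # hypothetical table keyed by UserId + OrderId
        "Key": {"UserId": {"S": user_id}, "OrderId": {"S": order_id}},
        "UpdateExpression": "SET #status = :status, StatusDate = :sd",
        "ExpressionAttributeNames": {"#status": "Status"},  # avoid reserved-word conflicts
        "ExpressionAttributeValues": {
            ":status": {"S": status.upper()},
            ":sd": {"S": status_sort_key(status, created_at)},
        },
    }


def orders_with_status_params(user_id: str, status: str) -> dict:
    """Exact-match filter on status via a prefix on the GSI sort key."""
    return {
        "TableName": "Orders",
        "IndexName": "OrdersByStatus",  # PK: UserId, SK: StatusDate
        "KeyConditionExpression": "UserId = :uid AND begins_with(StatusDate, :prefix)",
        "ExpressionAttributeValues": {
            ":uid": {"S": user_id},
            ":prefix": {"S": f"{status.upper()}#"},
        },
    }
```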

In both of these situations, moving the mutable attribute into your secondary index will save you time and money. You’ll save time by avoiding the read-modify-write pattern, and you’ll save money by avoiding the extra write costs of the transaction.

Additionally, note that this pattern fits well with the previous tip. It’s unlikely you’ll identify an item for writing based on a mutable attribute like its previous score, its previous status, or the last time it was updated. Rather, you’ll update by a more persistent value, like the user’s ID, the order ID, or the file’s ID. Then, you’ll use the secondary index to sort and filter based on the mutable attribute.

Avoid the ‘fat’ partition

We saw above that DynamoDB divides your data into partitions based on the primary key. DynamoDB aims to keep these partitions small (10GB or less), and you should aim to spread requests across your partitions to get the benefits of DynamoDB’s scalability.

This generally means you should use a high-cardinality value for your partition key. Think of something like a username, an order ID, or a sensor ID. These attributes have a large number of distinct values, so DynamoDB can spread the traffic across your partitions.

Often, I see people understand this principle in their main table but then completely forget about it in their secondary indexes. Typically, they want ordering across the entire table for a type of item. If they want to retrieve users alphabetically, they’ll use a secondary index where every user has USERS as the partition key and the username as the sort key. Or, if they want to list the most recent orders in an ecommerce store, they’ll use a secondary index where every order has ORDERS as the partition key and the timestamp as the sort key.

This pattern can work for low-traffic applications that won’t come close to DynamoDB’s partition throughput limits, but it’s a dangerous pattern for a high-traffic application. All of your index traffic may be funneled to a single physical partition, and you can quickly hit the write throughput limits for that partition.
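Some quick arithmetic shows how little headroom a single partition key gives you. DynamoDB documents a per-partition ceiling of 1,000 write capacity units (WCU) per second, and a standard write consumes one WCU per 1KB of item size, rounded up. The sketch below is a simplified model that assumes every order write also lands on the single `ORDERS` partition key in the index; the traffic numbers and item size are illustrative, not measurements.

```python
import math

PARTITION_WCU_LIMIT = 1000  # documented per-partition write ceiling (WCU/s)


def index_partition_wcu(writes_per_sec: float, item_kb: float) -> float:
    """WCU/s landing on one index partition key when every write shares it.

    Each write consumes ceil(item size in KB) WCU.
    """
    return writes_per_sec * math.ceil(item_kb)


for writes_per_sec in (100, 500, 1500):
    load = index_partition_wcu(writes_per_sec, item_kb=2.5)
    status = "OK" if load <= PARTITION_WCU_LIMIT else "THROTTLED"
    print(f"{writes_per_sec} writes/s -> {load:.0f} WCU/s on ORDERS: {status}")
```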

Further, and most dangerously, this can cause problems for your main table. If your secondary index is getting write-throttled during replication, the replication queue will back up. If this queue backs up too much, DynamoDB will start rejecting writes to your main table.

This is designed to help you: DynamoDB wants to limit the staleness of your secondary index, so it prevents you from having a secondary index with a large amount of lag. However, it can be a surprising situation that pops up when you’re least expecting it.

Use sparse indexes as a global filter

People often think of secondary indexes as a way to replicate all of their data with a new primary key. However, you don’t need all of your data to end up in a secondary index. If an item doesn’t have the attributes that make up the index’s key schema, it won’t be replicated to the index.

This can be really useful for providing a global filter on your data. The canonical example I use for this is a message inbox. In your main table, you might store all the messages for a particular user, ordered by the time they were created.

But if you’re like me, you have a lot of messages in your inbox. Further, you might treat unread messages as a ‘todo’ list, like little reminders to get back to someone. Accordingly, I usually only want to see the unread messages in my inbox.

You could use your secondary index to provide this global filter where unread == true. Perhaps your secondary index partition key is something like ${userId}#UNREAD, and the sort key is the timestamp of the message. When you first create the message, it includes the secondary index partition key value and thus is replicated to the unread-messages secondary index. Later, when a user reads the message, you can change the status to READ and remove the secondary index partition key attribute. DynamoDB will then remove the item from your secondary index.
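Here is a minimal sketch of that flow with low-level boto3 request shapes. The table name `Messages`, its key attributes (`UserId`, `SentAt`), and the GSI partition-key attribute `UnreadPK` (for a hypothetical unread-messages index that reuses `SentAt` as its sort key) are assumptions. The important part is the `REMOVE` clause: once the item no longer has `UnreadPK`, DynamoDB drops it from the index.

```python
def new_message_item(user_id: str, sent_at: str, body: str) -> dict:
    """A new message carries the GSI partition key, so it lands in the unread index."""
    return {
        "UserId": {"S": user_id},  # table partition key
        "SentAt": {"S": sent_at},  # table sort key, reused as the GSI sort key
        "Body": {"S": body},
        "Status": {"S": "UNREAD"},
        "UnreadPK": {"S": f"{user_id}#UNREAD"},  # hypothetical GSI partition key
    }


def mark_read_params(user_id: str, sent_at: str) -> dict:
    """Mark a message read and REMOVE the GSI key so it leaves the sparse index."""
    return {
        "TableName": "Messages",  # hypothetical table name
        "Key": {"UserId": {"S": user_id}, "SentAt": {"S": sent_at}},
        "UpdateExpression": "SET #status = :read REMOVE UnreadPK",
        "ExpressionAttributeNames": {"#status": "Status"},
        "ExpressionAttributeValues": {":read": {"S": "READ"}},
    }


def in_unread_index(item: dict) -> bool:
    """Simplified model: only items with every index key attribute are replicated."""
    return "UnreadPK" in item and "SentAt" in item
```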

I use this trick all the time, and it’s remarkably effective. Further, a sparse index saves you money. Updates to messages that have already been read won’t be replicated to the secondary index, so you save on write costs.

Narrow your secondary index projections to reduce index size and/or writes

For our last tip, let’s take the previous point a little further. We just saw that DynamoDB won’t include an item in your secondary index if the item doesn’t have the key attributes for the index. You can be similarly selective about the non-key attributes in your data!

When you create a secondary index, you can specify which attributes from the main table you want to include in the secondary index. This is called the projection of the index. You can choose to include all attributes from the main table, only the key attributes, or the keys plus a subset of the other attributes.
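The three choices correspond to the `ProjectionType` values `ALL`, `KEYS_ONLY`, and `INCLUDE` in an index definition. A sketch of a `GlobalSecondaryIndexes` entry for a hypothetical `EmailIndex`, assuming a ‘forgot password’ flow that needs only a `DisplayName` beyond the keys:

```python
def email_index_definition(projection_type: str = "INCLUDE", extra_attrs=None) -> dict:
    """GlobalSecondaryIndexes entry for a hypothetical EmailIndex."""
    projection = {"ProjectionType": projection_type}
    if projection_type == "INCLUDE":
        # Only these non-key attributes are copied, on top of the table and index keys.
        projection["NonKeyAttributes"] = list(extra_attrs or ["DisplayName"])
    return {
        "IndexName": "EmailIndex",
        "KeySchema": [{"AttributeName": "Email", "KeyType": "HASH"}],
        "Projection": projection,
    }
```

Choosing `KEYS_ONLY` keeps the index smallest, at the cost of a follow-up `GetItem` on the main table whenever you need other attributes.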

While it’s tempting to include all attributes in your secondary index, this can be a costly mistake. Remember that every write to your main table that changes the value of a projected attribute will be replicated to your secondary index. A single secondary index with full projection effectively doubles the write costs for your table. Each additional fully projected index increases your write costs by a further 1/(N + 1), where N is the number of secondary indexes before the new one. For example, going from one index to two raises write costs by half.

Additionally, your write costs are based on the size of your item. Each write consumes one write capacity unit (WCU) per 1KB of item size, rounded up. If you’re copying a 4KB item to your secondary index, you’ll pay the full 4 WCUs on both your main table and your secondary index.
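The arithmetic is easy to sketch. The function below is a simplified model that assumes every index uses a full projection and that every write replicates to every index; it ignores the case where an update skips an index entirely.

```python
import math


def write_wcu(item_bytes: int, full_projection_indexes: int = 0) -> int:
    """WCU for one standard write to the table plus fully projected GSIs.

    1 WCU covers up to 1KB (1024 bytes); sizes are rounded up per write.
    """
    per_copy = math.ceil(item_bytes / 1024)
    return per_copy * (1 + full_projection_indexes)


print(write_wcu(4 * 1024))     # 4: table only
print(write_wcu(4 * 1024, 1))  # 8: one GSI doubles the cost
print(write_wcu(4 * 1024, 2))  # 12: a second GSI adds 1/(1 + 1) = 50% more
```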

Thus, there are two ways you can save money by narrowing your secondary index projections. First, you can avoid certain writes altogether. If an update operation doesn’t touch any attributes in your secondary index projection, DynamoDB skips the write to your secondary index. Second, for writes that do replicate to your secondary index, you save money by reducing the size of the item that is replicated.
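A simplified model of the first point: an update only needs to reach the index if it changes an attribute the index stores. The function below is an illustration under assumed inputs (the set of attributes an update modifies, plus the index’s key attributes and projection), not DynamoDB’s internal logic, and it ignores items that are absent from a sparse index.

```python
def update_touches_index(
    changed_attrs: set,
    index_key_attrs: set,
    projection_type: str,
    non_key_attrs: frozenset = frozenset(),
) -> bool:
    """Return True if an update to `changed_attrs` must be replicated to the index."""
    if changed_attrs & index_key_attrs:
        return True  # the item moves within (or into/out of) the index
    if projection_type == "ALL":
        return bool(changed_attrs)  # every attribute lives in the index
    if projection_type == "INCLUDE":
        return bool(changed_attrs & set(non_key_attrs))
    return False  # KEYS_ONLY: non-key changes never reach the index


# Updating LastLogin doesn't touch a narrowly projected EmailIndex...
print(update_touches_index({"LastLogin"}, {"Email"}, "INCLUDE", frozenset({"DisplayName"})))
# ...but it does if the index projects ALL attributes.
print(update_touches_index({"LastLogin"}, {"Email"}, "ALL"))
```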

This can be a tricky balance to get right. Secondary index projections can’t be changed after the index is created. If you find that you need additional attributes in your secondary index, you’ll need to create a new index with the new projection and then delete the old index.

Should you use a secondary index?

Now that we’ve explored some practical advice around secondary indexes, let’s take a step back and ask a more fundamental question: should you use a secondary index at all?

As we’ve seen, secondary indexes help you access your data in a different way. However, this comes at the cost of additional writes. Thus, my rule of thumb for secondary indexes is:

Use secondary indexes when the reduced read costs outweigh the increased write costs.

This seems obvious when you say it, but it can be counterintuitive while you’re modeling. It’s so easy to say “Throw it in a secondary index” without thinking about other approaches.

To drive this home, let’s look at two situations where secondary indexes might not make sense.

Multiple filterable attributes in small item collections

With DynamoDB, you generally want your primary keys to do your filtering for you. It irks me a little whenever I use a Query in DynamoDB but then perform my own filtering in my application: why couldn’t I just build that into the primary key?

Despite my visceral reaction, there are some situations where you might want to over-read your data and then filter in your application.

The most common place you’ll see this is when you want to offer your users a lot of different filters on your data, but the relevant data set is bounded.

Think of a workout tracker. You might want to allow users to filter on a lot of attributes, such as type of workout, intensity, duration, date, and so on. However, the number of workouts a user has is going to be manageable: even a power user will take a while to exceed 1,000 workouts. Rather than putting indexes on all of these attributes, you can just fetch all of the user’s workouts and then filter in your application.
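A minimal sketch of the “fetch everything, filter in the application” approach. It assumes the user’s workouts have already been read with a (paginated) `Query` on the user’s partition key and converted to plain Python dicts; the attribute names `Type`, `Intensity`, `DurationMin`, and `Date` are hypothetical. Every filter is optional, which is exactly the combination that would be awkward to serve with one index per attribute.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorkoutFilter:
    """Optional filters; None means 'don't filter on this attribute'."""
    type: Optional[str] = None
    intensity: Optional[str] = None
    min_duration: Optional[int] = None
    since: Optional[str] = None  # ISO date, compared lexicographically


def filter_workouts(workouts: list, f: WorkoutFilter) -> list:
    """Apply any combination of filters in memory."""
    result = []
    for w in workouts:
        if f.type is not None and w["Type"] != f.type:
            continue
        if f.intensity is not None and w["Intensity"] != f.intensity:
            continue
        if f.min_duration is not None and w["DurationMin"] < f.min_duration:
            continue
        if f.since is not None and w["Date"] < f.since:
            continue
        result.append(w)
    return result


workouts = [
    {"Type": "run", "Intensity": "high", "DurationMin": 45, "Date": "2024-03-02"},
    {"Type": "bike", "Intensity": "low", "DurationMin": 90, "Date": "2024-03-05"},
    {"Type": "run", "Intensity": "low", "DurationMin": 20, "Date": "2024-02-11"},
]
print(filter_workouts(workouts, WorkoutFilter(type="run", min_duration=30)))
```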

This is where I recommend doing the math. DynamoDB’s capacity-unit billing makes it easy to calculate the cost of both options and get a sense of which one will work better for your application.
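Here is one way to do that math, as a rough model under stated assumptions. On the read side, a `Query` is billed on the total size of the items it reads, rounded up to the next 4KB, at 1 RCU per 4KB for strongly consistent reads and half that for eventually consistent reads. On the write side, each fully projected index adds ceil(item size / 1KB) WCU per write. The workload numbers are illustrative, and option B optimistically assumes a single index can serve each read; combined filters usually can’t. RCUs and WCUs are also priced differently, so convert with your region’s pricing before comparing.

```python
import math


def query_rcu(total_bytes: int, eventually_consistent: bool = True) -> float:
    """RCU for one Query: total size read, rounded up to 4KB; halved if eventual."""
    units = math.ceil(total_bytes / 4096)
    return units / 2 if eventually_consistent else float(units)


def index_write_wcu(item_bytes: int, num_indexes: int) -> int:
    """Extra WCU per write for `num_indexes` fully projected GSIs."""
    return math.ceil(item_bytes / 1024) * num_indexes


def daily_capacity(workouts: int, item_bytes: int, reads_per_day: int,
                   writes_per_day: int, num_indexes: int,
                   items_per_index_read: int) -> dict:
    """Compare over-reading (option A) with per-attribute indexes (option B)."""
    return {
        "A_rcu": reads_per_day * query_rcu(workouts * item_bytes),
        "B_rcu": reads_per_day * query_rcu(items_per_index_read * item_bytes),
        "B_extra_wcu": writes_per_day * index_write_wcu(item_bytes, num_indexes),
    }


# Hypothetical numbers for one power user; substitute your own.
print(daily_capacity(workouts=1000, item_bytes=500, reads_per_day=20,
                     writes_per_day=3, num_indexes=4, items_per_index_read=50))
```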

Multiple filterable attributes in large item collections

Let’s change our situation a bit: what if our item collection is large? What if we’re building a workout tracker for a gym, and we want to allow the gym owner to filter on all of the attributes mentioned above across all the users in the gym?

This changes things. Now we’re talking about hundreds or even thousands of users, each with hundreds or thousands of workouts. It won’t make sense to over-read the entire item collection and do post-hoc filtering on the results.

But secondary indexes don’t really make sense here either. Secondary indexes are good for known access patterns where you can count on the relevant filters being present. If we want our gym owner to be able to filter on a variety of attributes, all of which are optional, we’d need to create a large number of indexes to make this work.

We talked about the possible downsides of query planners before, but query planners have an upside too. In addition to allowing more flexible queries, they can do things like index intersection, combining partial results from multiple indexes to answer a single query. You can do the same thing with DynamoDB, but it will result in a lot of back-and-forth with your application, along with some complex application logic to figure it out.
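For a sense of what that back-and-forth looks like, here is a sketch of an application-side index intersection: run one `Query` per index, collect the primary keys each returns, and keep only the keys present in every result. The fetch functions are hypothetical stand-ins for Queries against two assumed indexes; with real data you would also page through each result set and then fetch or project the full items.

```python
from typing import Callable, Iterable, List, Set, Tuple

Key = Tuple[str, str]  # (UserId, WorkoutId): the assumed table primary key


def intersect_index_results(fetchers: Iterable[Callable[[], List[Key]]]) -> Set[Key]:
    """Keep only primary keys returned by every index query."""
    result = None
    for fetch in fetchers:
        keys = set(fetch())  # at least one round trip per index
        result = keys if result is None else result & keys
        if not result:
            break  # nothing can match; skip the remaining queries
    return result or set()


# Hypothetical stand-ins for Queries on a type index and an intensity index.
def runs() -> List[Key]:
    return [("u1", "w1"), ("u2", "w7"), ("u3", "w9")]


def high_intensity() -> List[Key]:
    return [("u2", "w7"), ("u4", "w2")]


print(intersect_index_results([runs, high_intensity]))  # {('u2', 'w7')}
```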

When I have these kinds of problems, I generally look for a tool better suited to the use case. Rockset and Elasticsearch are my go-to recommendations here for providing flexible, secondary-index-like filtering across your dataset.

Conclusion

In this post, we learned about DynamoDB secondary indexes. First, we looked at some conceptual background to understand how DynamoDB works and why secondary indexes are needed. Then, we reviewed some practical tips for using secondary indexes effectively and learned about their particular quirks. Finally, we looked at how to think about secondary indexes so you can tell when you should use other approaches.

Secondary indexes are a powerful tool in your DynamoDB toolbox, but they’re not a silver bullet. As with all DynamoDB data modeling, be sure to carefully consider your access patterns and count the costs before you jump in.

Learn more about how you can use Rockset for secondary-index-like filtering in Alex DeBrie’s blog DynamoDB Filtering and Aggregation Queries Using SQL on Rockset.