This year marks the world’s biggest election year yet.

An estimated 4 billion voters will head to the polls across more than 60 national elections worldwide in 2024, all at a time when artificial intelligence (AI) continues to make history of its own. Without question, the harmful use of AI will play a role in election interference worldwide.

In fact, it already has.

In January, thousands of U.S. voters in New Hampshire received an AI robocall that impersonated President Joe Biden, urging them not to vote in the primary. In the UK, more than 100 deepfake social media ads impersonated Prime Minister Rishi Sunak on the Meta platform last December[i]. Similarly, the 2023 parliamentary elections in Slovakia spawned deepfake audio clips that featured false proposals for rigging votes and raising the price of beer[ii].

We can’t put it more plainly. The harmful use of AI has the potential to influence an election.

The rise of AI in major elections.

In just over a year, AI tools have rapidly evolved, offering a wealth of benefits. AI analyzes health data on massive scales, which promotes better healthcare outcomes. It helps supermarkets bring the freshest produce to the aisles by streamlining the supply chain. And it does plenty of helpful everyday things too, like recommending movies and shows in our streaming queues based on what we like.

Yet as with almost any technology, whether AI helps or harms is up to the person using it. And plenty of bad actors have chosen to use it for harm. Scammers have used it to dupe people with convincing “deepfakes” that impersonate everyone from Taylor Swift to members of their own family with phony audio, video, and photos created by AI. Further, AI has helped scammers spin up phishing emails and texts that look achingly legit, all on a massive scale thanks to AI’s ease of use.

Now, consider how those same deepfakes and scams might influence an election year. We have no doubt that the examples cited above are only the start.

Our pledge this election year.

Within this climate, we’ve pledged to help prevent deceptive AI content from interfering with this year’s global elections as part of the “Tech Accord to Combat Deceptive Use of AI in 2024 Elections.” We join leading tech companies such as Adobe, Google, IBM, Meta, Microsoft, and TikTok to play our part in protecting elections and the electoral process.

Collectively, we’ll bring our respective powers to combat deepfakes and other harmful uses of AI. That includes digital content such as AI-generated audio, video, and images that deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other figures in democratic elections. Likewise, it covers content that provides false information about when, where, and how people can cast their vote.

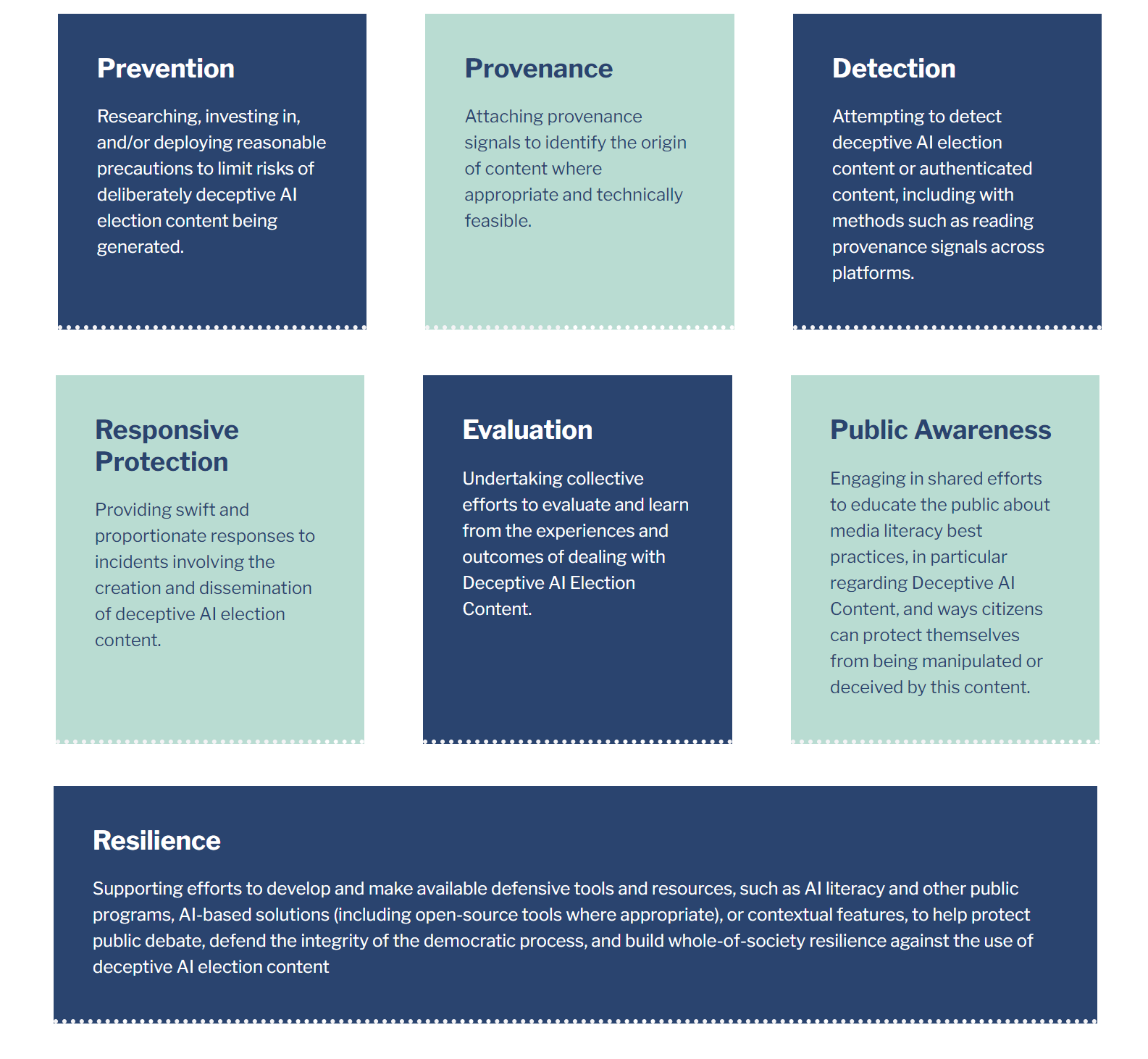

A set of seven principles guides the way for this accord, with each signatory of the pledge lending its strengths to the cause. Ours include:

- Earlier this year, we announced our Project Mockingbird, a new detection technology that can help spot AI-cloned audio in messages and videos. (You can see it in action in our blog on the Taylor Swift deepfake scam.) From there, you can expect to see similar detection technologies from us that cover all manner of content, such as video, photos, and text.

- We’ve created McAfee Scam Protection, an AI-powered feature that puts a stop to scams before you click or tap a risky link. It detects suspicious links and sends you an alert if one crops up in texts, emails, or social media, all important when scammers use election cycles to siphon money from victims with politically themed phishing sites.

- And as always, we pour plenty of effort into awareness, here in our blogs, along with our research reports and guides. When it comes to combatting the harmful use of AI, technology provides part of the solution; the other part is people. With an understanding of how bad actors use AI, what that looks like, and a healthy dose of internet street smarts, people can protect themselves even better from scams and flat-out disinformation.

The AI tech accord: an important first step of many

In all, we see the tech accord as one important step that tech and media companies can take to keep people safe from harmful AI-generated content, both now in this election year and moving forward as AI continues to shape and reshape what we see and hear online.

Yet beyond this accord and the companies that have signed on remains an important point: the accord represents just one step in preserving the integrity of elections in the age of AI. As tech companies, we can, and will, do our part to prevent harmful AI from influencing elections. Still, fair elections remain a product of nations and their people. With that, the rule of law comes unmistakably into play.

Legislation and regulations that curb the harmful use of AI and that levy penalties on its creators will provide another vital step in the broader solution. One example: the U.S. Federal Communications Commission (FCC) recently made AI robocalls illegal. With its ruling, the FCC gives State Attorneys General across the country new tools to go after the bad actors behind nefarious robocalls[iii]. And that’s very much a step in the right direction.

Protecting people from the ill use of AI calls for commitment from all corners. Globally, we face a challenge tremendously imposing in nature. Yet not insurmountable. Collectively, we can keep people safer. Text from the accord we co-signed puts it well: “The protection of electoral integrity and public trust is a shared responsibility and a common good that transcends partisan interests and national borders.”

We’re proud to say that we’ll contribute to that goal with everything we can bring to bear.

[i] https://www.theguardian.com/technology/2024/jan/12/deepfake-video-adverts-sunak-facebook-alarm-ai-risk-election

[ii] https://www.bloomberg.com/news/articles/2023-09-29/trolls-in-slovakian-election-tap-ai-deepfakes-to-spread-disinfo

[iii] https://docs.fcc.gov/public/attachments/DOC-400393A1.pdf