As a data engineer, my time is spent either moving data from one place to another, or preparing it for exposure to reporting tools or front end users. As data collection and usage have become more sophisticated, the sources of data have become far more varied and disparate, volumes have grown and velocity has increased.

Variety, Volume and Velocity were popularised as the three Vs of Big Data, and in this post I’m going to talk about my considerations for each of them when selecting technologies for a real time analytics platform.

Variety

One of the biggest developments in recent years with regard to data platforms is the ability to extract data from storage silos and into a data lake. This obviously introduces a number of problems for businesses who want to make sense of this data, because it’s now arriving in a variety of formats and at a variety of speeds.

To solve this, businesses employ data lakes with staging areas for all new data. The raw data is continuously added to the staging area and then picked up and processed by downstream processes. The major benefit of having all the data in the same place is that it can be cleaned and transformed into a consistent format and then joined together. This allows businesses to get a full 360 degree view of their data, providing deeper insight and understanding.

A data warehouse is often the only place in a business where all the data is clean, makes sense and is in a state ready to provide insight. However, data warehouses are often only used within the business for daily reports and other internal tasks, and are rarely exposed back to external users. This is because if you want to feed any of this insight back to a user of your platform, the data warehouse isn’t usually equipped with the real time speed that users expect when using a website, for example. Although they are fast and capable of crunching data, they aren’t built for multiple concurrent users looking for millisecond-latency data retrieval.

This is where technologies like Rockset can help.

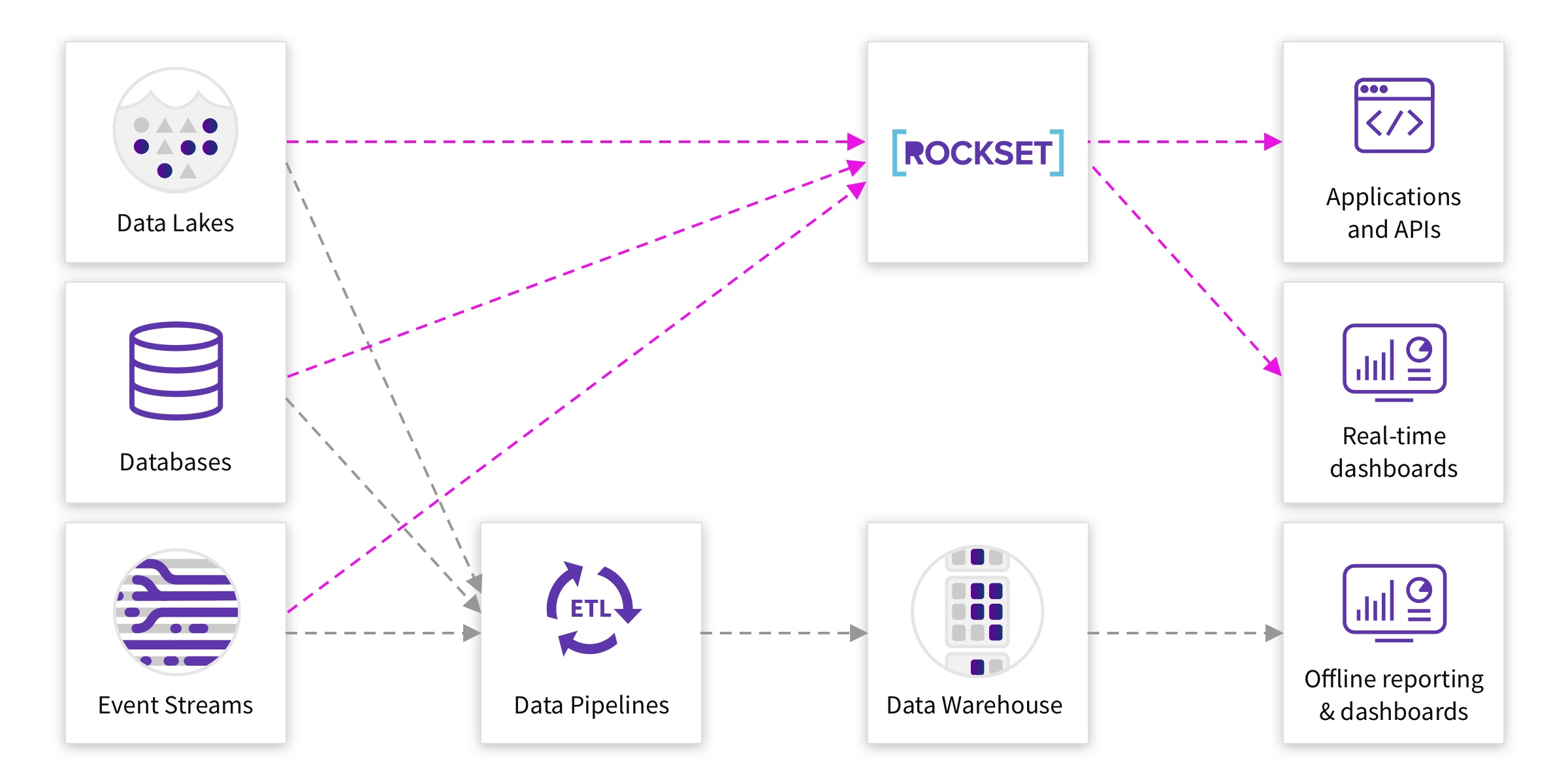

Rockset is a real time analytics engine that allows SQL queries directly on raw data, such as nested JSON and XML. It continuously ingests raw data from multiple sources (data lakes, data streams, databases) into its storage layer and allows fast SQL access from both visualisation tools and analytic applications. This means that it can join across data from multiple sources and provide complex analytics to both internal and external users, without the need for upfront data preparation.

Historically, to do that with Amazon Redshift, you would need to construct information pipelines to crunch the information into the precise format required to be proven to the person, then copy this information to DynamoDB or comparable after which present entry to it. As a result of Rockset helps fast SQL on uncooked information you don’t must crunch all the information upfront earlier than copying it, as transformations and calculations might be completed on the fly when the request is made. This simplifies the method and in flip makes it extra versatile to vary in a while.

Volume

Data platforms now almost always scale horizontally instead of vertically. This means that if more storage or power is needed, new machines are added that work together, instead of just increasing the storage and power of a single machine.
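As a rough illustration of what horizontal scaling means for data placement, the sketch below spreads record keys across a configurable number of nodes by hashing each key. This is a generic technique and not a description of how any particular product distributes data; `node_for_key` and `distribute` are hypothetical names used only for this example.

```python
import hashlib


def node_for_key(key: str, num_nodes: int) -> int:
    """Map a record key to a node index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def distribute(keys, num_nodes):
    """Group keys by the node that would store them."""
    nodes = [[] for _ in range(num_nodes)]
    for key in keys:
        nodes[node_for_key(key, num_nodes)].append(key)
    return nodes


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(12)]
    # Adding machines spreads the same keys over more nodes.
    for n in (2, 4):
        sizes = [len(bucket) for bucket in distribute(keys, n)]
        print(f"{n} nodes -> keys per node: {sizes}")
```

With simple modulo placement, changing the node count moves most keys to a different node, which is why real systems often use schemes such as consistent hashing to limit how much data moves.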

A data warehouse will obviously require a lot of storage space because it stores all, or the majority, of a business’s data. Rockset typically will not be used to hold the entirety of an organisation’s data, but only its unstructured data and the subset required for real time requests, thus limiting the amount of data it needs to store.

And if you are planning on copying huge amounts of data to Rockset, this also isn’t a problem. Rockset is a cloud based solution that is scaled automatically based on how much data is copied to the platform, and you only pay for how much storage you use. It’s also built to serve complex queries on large volumes of data, using distributed query processing and a concept called converged indexing, so that query times remain fast even over terabytes of data.

Velocity

The volume of data being stored is ever increasing due to the velocity at which it is being created and captured. Real time streaming technologies such as Apache Kafka have allowed businesses to stream millions of rows per second from one data source to another.

You may be thinking about streaming data into a data warehouse and querying it there, but Rockset provides a different model for accessing these streams. Kafka connectors are available within Rockset to consume streams from Kafka in real time. This data will be immediately available for querying as SQL tables within Rockset, without requiring transformation, and queries will use the latest data available each time they are run. The benefits of this are huge, as you are now able to realise insight from data as it’s being produced, turning real time data into real time insight instead of being delayed by downstream processes.

Another benefit of using Rockset is the ability to query the data via APIs and, due to its ability to serve low-latency queries, these calls can be integrated into front end systems. If the velocity of your data means that the real time picture for users is always changing, for example because users can comment on and like posts on your website, you’re going to want to show in real time the number of likes and comments a post has. Every like and comment logged in your database can be immediately copied into Rockset, and each time the API is called it will return the updated aggregate numbers. This makes it incredibly easy for developers to integrate into an application, thanks to the out of the box API provided by Rockset. This just wouldn’t be possible with traditional data warehousing solutions.
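The likes and comments example can be sketched as a tiny in-memory model. This is only an illustration of the pattern (ingest every event as it happens, compute the aggregate when it is requested); it is not Rockset’s API, and `PostActivityStore`, `ingest` and `counts` are hypothetical names made up for this sketch.

```python
from collections import Counter


class PostActivityStore:
    """Toy stand-in for a store that ingests events and aggregates on request."""

    VALID_KINDS = ("like", "comment")

    def __init__(self):
        self._events = []  # raw (post_id, kind) events, in arrival order

    def ingest(self, post_id: str, kind: str) -> None:
        """Record a single like or comment as soon as it happens."""
        if kind not in self.VALID_KINDS:
            raise ValueError(f"unknown event kind: {kind!r}")
        self._events.append((post_id, kind))

    def counts(self, post_id: str) -> dict:
        """Aggregate at request time, so the latest events are always included."""
        tally = Counter(kind for pid, kind in self._events if pid == post_id)
        return {"likes": tally["like"], "comments": tally["comment"]}


if __name__ == "__main__":
    store = PostActivityStore()
    store.ingest("post-1", "like")
    store.ingest("post-1", "comment")
    print(store.counts("post-1"))
    store.ingest("post-1", "like")  # a new event arrives...
    print(store.counts("post-1"))   # ...and the next call reflects it
```

A real system would index the events so it does not have to scan them on every call, but the contract seen by the front end is the same: each request returns counts that include everything ingested so far.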

How Data Engineers Can Use Rockset

If your business doesn’t have a data warehouse, then for fast insights on your data, I would recommend pulling this data directly into Rockset. You can quickly get to insights and allow other members of the team to utilise this data, which is vital in any business, even more so in a new startup.

If you already have a data warehouse, then you will probably find that for most of your daily business reports, the data warehouse will suffice. However, the addition of Rockset to take your raw data in real time, especially if you are a web company producing web logs, registering new users and tracking their behaviour, will give you a real time view of your data too. This can be powerful if you want to feed this data back to front end users, but also to allow your internal teams to monitor performance in real time and even spot potential issues as they arise instead of a day later.

Overall I would say that Rockset ticks all the boxes for dealing with variety, volume and velocity. Data engineers often spend a lot of time getting all the business data clean, correct and prepared for analysis within a data warehouse, but this often comes with some delay. For times when you need real time answers, Rockset simplifies the process of making this data available to end users without the overhead required by other solutions.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini, where he helped the UK government move into the world of Big Data. He’s currently using this experience to help transform the data landscape at easyfundraising, an online charity cashback site, where he’s helping to shape their data warehousing and reporting capability from the ground up.