In this blog post, we discuss Fine-Grained RLHF, a framework that enables training and learning from reward functions that are fine-grained in two ways: density and diversity. Density is achieved by providing a reward after every segment (e.g., a sentence) is generated. Diversity is achieved by incorporating multiple reward models associated with different feedback types (e.g., factual incorrectness, irrelevance, and information incompleteness).

What are Fine-Grained Rewards?

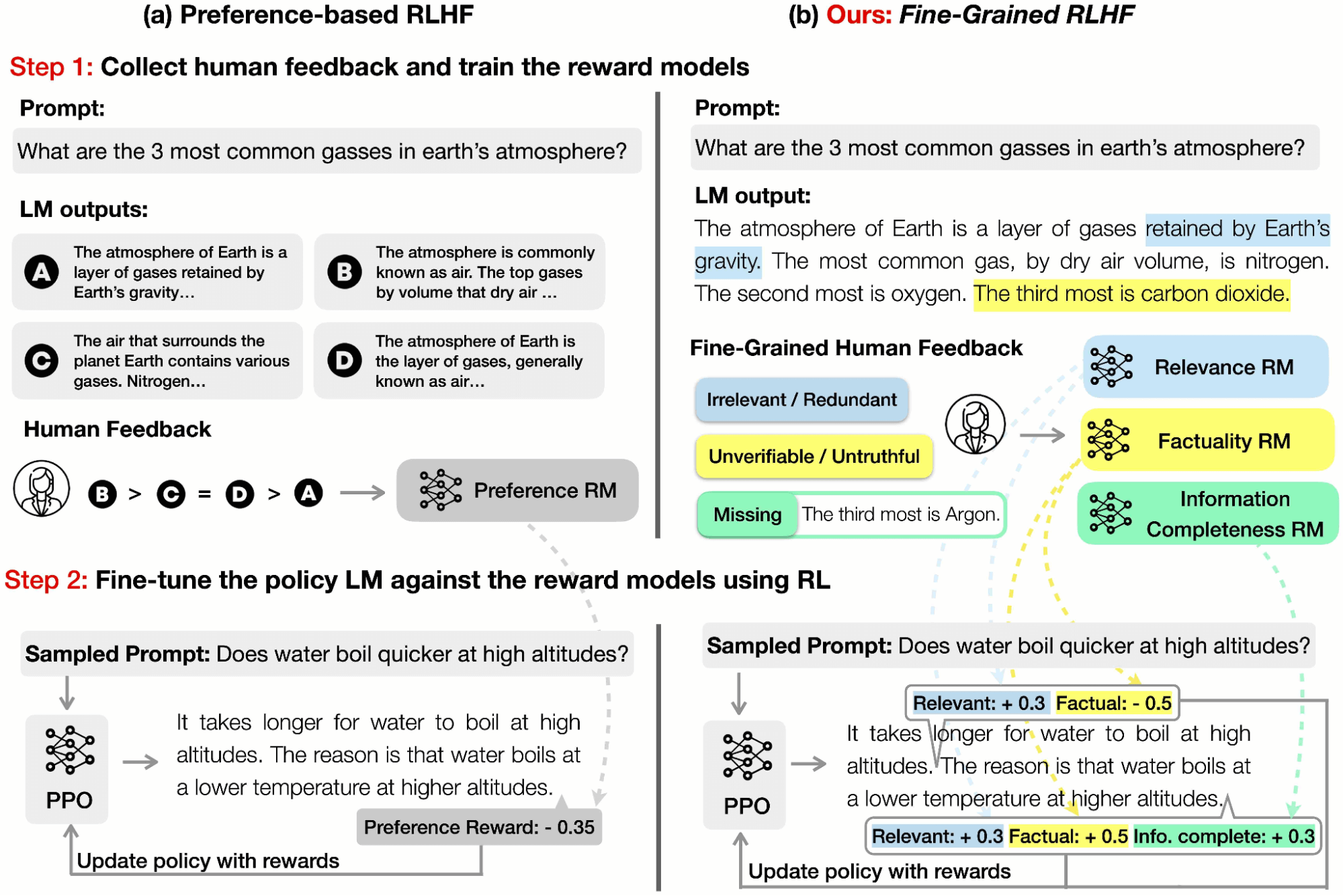

Prior work in RLHF has focused on collecting human preferences on the overall quality of language model (LM) outputs. However, this type of holistic feedback provides limited information. In a paper we presented at NeurIPS 2023, we introduced the concept of fine-grained human feedback (e.g., which sub-sentence is irrelevant, which sentence is not truthful, which sentence is toxic) as an explicit training signal.

A reward function in RLHF is a model that takes in a piece of text and outputs a score indicating how "good" that piece of text is. As seen in the figure above, holistic preference-based RLHF traditionally provides a single reward for the entire piece of text, and the definition of "good" carries no particular nuance or diversity.

In contrast, our rewards are fine-grained in two aspects:

(a) Density: We provided a reward after each segment (e.g., a sentence) is generated, similar to OpenAI's "step-by-step process reward". We found that this approach is more informative than holistic feedback and, thus, more effective for reinforcement learning (RL).
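To make the density distinction concrete, here is a minimal sketch that contrasts one reward for a whole text with one reward per sentence. The scorer, the naive regex sentence splitter, and all function names are hypothetical illustrations, not code from the paper; a real setup would score segments with a trained reward model.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical scorer: maps a piece of text to a scalar reward.
Scorer = Callable[[str], float]

def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter (an assumption; any segmenter would do)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def holistic_reward(text: str, score: Scorer) -> List[Tuple[int, float]]:
    """A single reward for the whole text, attached to its last segment."""
    last = max(len(split_sentences(text)) - 1, 0)
    return [(last, score(text))]

def dense_rewards(text: str, score: Scorer) -> List[Tuple[int, float]]:
    """One reward per sentence, attached to the sentence that earned it."""
    return [(i, score(s)) for i, s in enumerate(split_sentences(text))]

# Toy scorer for illustration: penalize any segment containing "wrong".
def toy_score(segment: str) -> float:
    return -1.0 if "wrong" in segment else 1.0

text = "Paris is in France. The Moon is made of cheese, which is wrong. Water is wet."
print(dense_rewards(text, toy_score))    # [(0, 1.0), (1, -1.0), (2, 1.0)]
print(holistic_reward(text, toy_score))  # [(2, -1.0)]
```

The holistic version only reports that something in the text was bad; the dense version points at the second sentence, which is the extra information the learner gets from denser rewards.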

(b) Diversity: We employed multiple reward models, one per feedback type, to capture different kinds of feedback (e.g., factual inaccuracy, irrelevance, and information incompleteness). Interestingly, we observed that these reward models both complement and compete with each other. By adjusting the weights of the reward models, we could control the balance between the different types of feedback and tailor the LM to different tasks according to specific needs. For instance, some users may prefer short and concise outputs, while others may seek longer and more detailed responses.
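Density and diversity can be combined into a single per-token reward. A minimal way to write this, using our own notation rather than the paper's exact formula, assumes $K$ reward models where model $k$ splits the output $y$ into $L_k$ segments whose last tokens sit at positions $T^k_1, \dots, T^k_{L_k}$:

```latex
r_t = \sum_{k=1}^{K} w_k \sum_{j=1}^{L_k} \mathbb{1}\left[t = T^{k}_{j}\right] R_k(x, y, j)
```

Here $R_k(x, y, j)$ is the score that reward model $k$ gives segment $j$ of output $y$ for input $x$, $w_k$ is that model's weight, and tokens that close no segment receive 0. Raising one $w_k$ pushes the optimized policy toward the feedback type that model measures. PPO-based RLHF usually also subtracts a KL penalty against the initial policy; that term is left out of this sketch.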

Anecdotally, human annotators told us that labeling data in fine-grained form was easier than giving holistic preferences. The likely reason is that judgments are localized instead of spread out over long generations. This reduces the annotator's cognitive load and results in cleaner preference data with higher inter-annotator agreement. In other words, you are likely to get more high-quality data per unit cost with fine-grained feedback than with holistic preferences.

We conducted two major case studies to test the effectiveness of our method.

Task 1: Detoxification

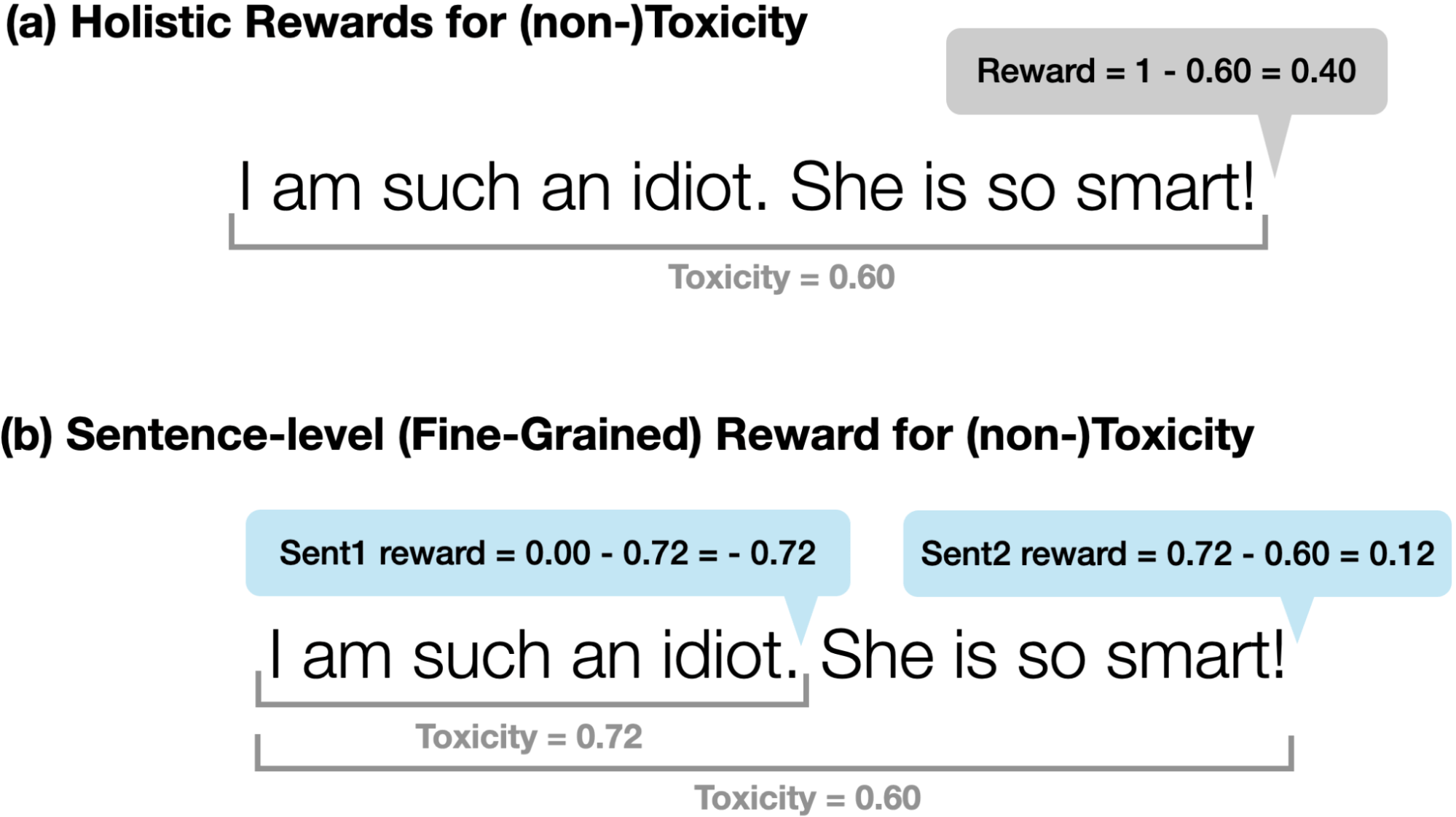

The detoxification task aims to reduce toxicity in model generations. We used Perspective API to measure toxicity. It returns a toxicity value between 0 (not toxic) and 1 (toxic).

We compared two kinds of rewards:

(a) Holistic Rewards for (non-)Toxicity: We use 1 - Perspective(y) as the reward.

(b) Sentence-level (Fine-Grained) Rewards for (non-)Toxicity: We query the API after the model generates each sentence instead of only after the full sequence is generated. For each generated sentence, we use -Δ(Perspective(y)) as the reward for that sentence (i.e., the negative of how much the toxicity score changes as a result of generating the current sentence).
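The two reward definitions can be sketched as follows. `toxicity` is a hypothetical stand-in for a Perspective API call (no real API is called here), and treating the empty prefix as having toxicity 0 is our assumption, since the post does not say how the first sentence's Δ is computed.

```python
from typing import Callable, List

# Hypothetical stand-in for a Perspective API call: returns toxicity in [0, 1].
ToxicityFn = Callable[[str], float]

def holistic_toxicity_reward(sentences: List[str], toxicity: ToxicityFn) -> float:
    """(a) One reward for the full generation y: 1 - Perspective(y)."""
    return 1.0 - toxicity(" ".join(sentences))

def sentence_toxicity_rewards(sentences: List[str], toxicity: ToxicityFn) -> List[float]:
    """(b) One reward per sentence: minus the change in toxicity it causes."""
    rewards: List[float] = []
    prev = 0.0  # assumption: the empty prefix is treated as non-toxic
    for i in range(len(sentences)):
        cur = toxicity(" ".join(sentences[: i + 1]))  # score the prefix so far
        rewards.append(prev - cur)                      # -Δ Perspective
        prev = cur
    return rewards

# Toy scorer for illustration only; it is not how Perspective API works.
BAD_WORDS = {"stupid", "idiot"}

def toy_toxicity(text: str) -> float:
    words = text.lower().replace(".", "").split()
    return min(1.0, sum(w in BAD_WORDS for w in words) / 2)

ys = ["You asked a question.", "That is a stupid idea.", "Here is a better one."]
print(sentence_toxicity_rewards(ys, toy_toxicity))  # [0.0, -0.5, 0.0]
print(holistic_toxicity_reward(ys, toy_toxicity))   # 0.5
```

Under the zero-prefix assumption the sentence-level rewards telescope: their sum is -Perspective(y), which equals the holistic reward minus the constant 1. Both carry the same total signal, but the fine-grained version places the penalty on the sentence that introduced the toxicity.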

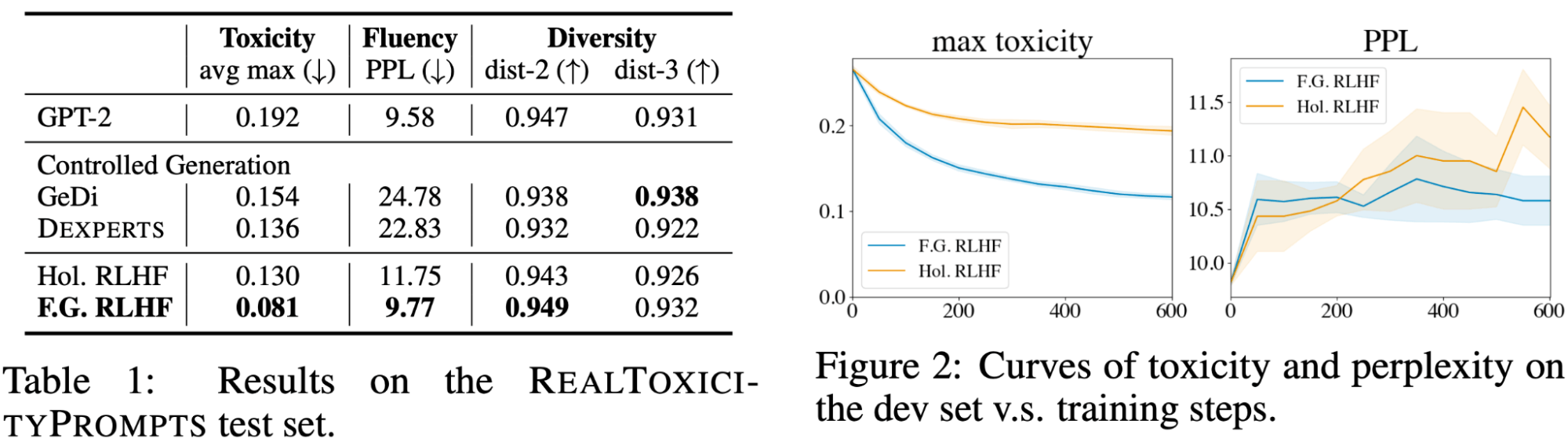

Table 1 shows that our Fine-Grained RLHF with sentence-level fine-grained rewards attains the lowest toxicity and perplexity among all methods while maintaining a similar level of diversity. Figure 2 shows that learning from denser fine-grained rewards is more sample-efficient than learning from holistic rewards. One explanation is that fine-grained rewards are located where the toxic content is, which provides a stronger training signal than a single scalar reward for the whole text.

Task 2: Long-Form Question Answering

We collected QA-Feedback, a long-form question answering dataset with human preferences and fine-grained feedback. QA-Feedback is based on ASQA, a dataset that focuses on answering ambiguous factoid questions.

There are three types of fine-grained human feedback, and we trained a fine-grained reward model for each of them:

1: Irrelevance, repetition, and incoherence (rel.). This reward model operates at the sub-sentence level; i.e., it returns a score for each sub-sentence. If the sub-sentence is irrelevant, repetitive, or incoherent, the reward is -1; otherwise, the reward is +1.

2: Incorrect or unverifiable facts (fact.). This reward model operates at the sentence level; i.e., it returns a score for each sentence. If the sentence has any factual error, the reward is -1; otherwise, the reward is +1.

3: Incomplete information (comp.). This reward model checks whether the response is complete and covers all the information in the reference passages that is related to the question. It gives a single reward for the whole response.
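Because the three reward models score segments of different sizes, their outputs have to be merged before RL training. One simple way, sketched below under our own assumptions, is to credit each segment's weighted reward to the last token of that segment and sum across models. The token positions, the completeness score of 0.5, and the weights are made up for illustration; the function name is hypothetical.

```python
from typing import Dict, List, Tuple

# Output of one reward model: (index of the segment's last token, reward) pairs.
SegmentRewards = List[Tuple[int, float]]

def token_level_rewards(
    num_tokens: int,
    rm_outputs: Dict[str, SegmentRewards],
    weights: Dict[str, float],
) -> List[float]:
    """Place each weighted segment reward on the final token of its segment.

    Tokens that do not end a segment for any reward model get reward 0.
    """
    rewards = [0.0] * num_tokens
    for name, segments in rm_outputs.items():
        for end_idx, r in segments:
            rewards[end_idx] += weights[name] * r
    return rewards

# Hypothetical 10-token response with two sentences (ending at tokens 5 and 9)
# split into three sub-sentences (ending at tokens 2, 5 and 9).
outputs = {
    "rel": [(2, +1.0), (5, -1.0), (9, +1.0)],  # sub-sentence level, +1 / -1
    "fact": [(5, +1.0), (9, -1.0)],             # sentence level, +1 / -1
    "comp": [(9, 0.5)],                          # one score for the whole response
}
weights = {"rel": 0.5, "fact": 1.0, "comp": 0.5}  # made-up weights
print(token_level_rewards(10, outputs, weights))
# [0.0, 0.0, 0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, -0.25]
```

Keeping each model's rewards at its own segment boundaries preserves where each kind of error occurred, while the per-model weights set how much each feedback type counts.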

Fine-Grained Human Evaluation

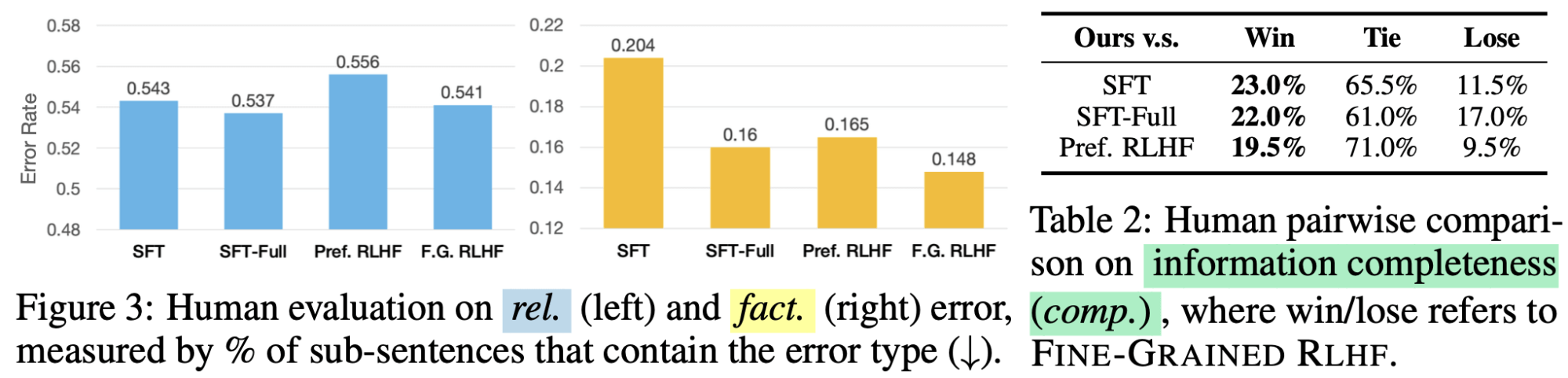

We compared our Fine-Grained RLHF against the following baselines:

SFT: The supervised fine-tuning model (trained on 1K training examples) that is used as the initial policy for our RLHF experiments.

Pref. RLHF: The baseline RLHF model that uses a holistic reward.

SFT-Full: We fine-tuned the LM on the human-written responses (provided by ASQA) for all training examples and denote this model as SFT-Full. Note that each gold response takes 15 minutes to annotate (according to ASQA), which is 2.5 times as long as our feedback annotation (6 minutes).

Human evaluation showed that our Fine-Grained RLHF outperformed SFT and Pref. RLHF on all error types, and that RLHF (both preference-based and fine-grained) was particularly effective in reducing factual errors.

Customizing LM Behaviors

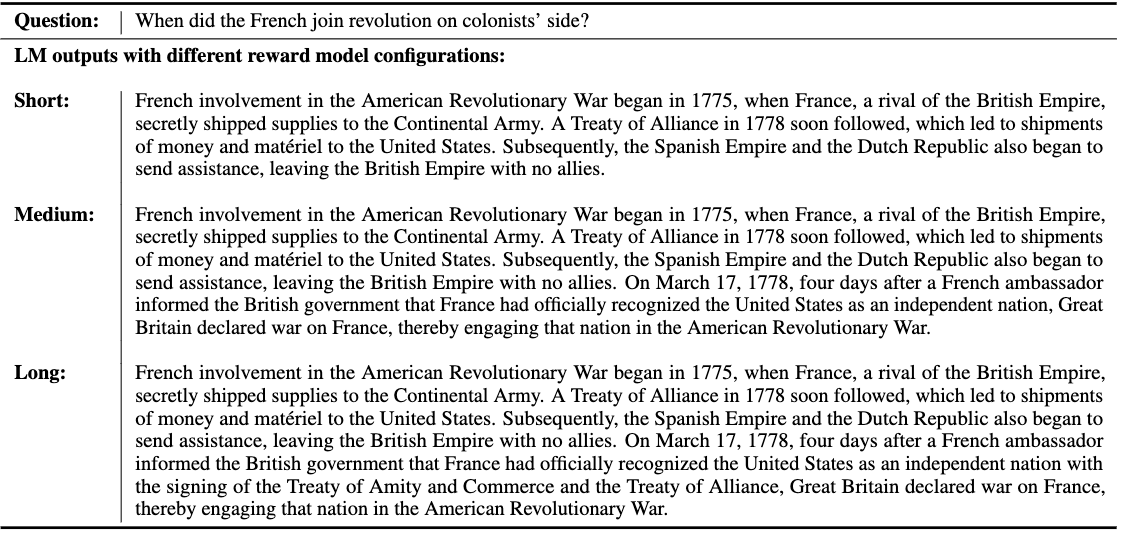

By changing the weight of the Relevance reward model while keeping the weights of the other two reward models fixed, we were able to customize how detailed and lengthy the LM responses would be. In Figure X, we compare the outputs of three LMs, each trained with a different combination of reward model weights.
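The toy sketch below shows why varying only the Relevance weight can shift the balance between concise and detailed answers. It compares the weighted total reward of two hypothetical responses while the factuality and completeness weights stay fixed. All scores and weights are invented for illustration and are not results from the paper. It also only ranks two fixed responses; in actual training the weights change what the policy is optimized toward.

```python
from typing import Dict

def total_reward(summed_scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of each reward model's summed score over one response."""
    return sum(weights[k] * v for k, v in summed_scores.items())

# Toy per-response scores (made up for illustration, not from the paper).
short_resp = {"rel": 3.0, "fact": 2.0, "comp": 0.0}  # concise, less complete
long_resp = {"rel": 2.0, "fact": 3.0, "comp": 1.0}   # detailed, more complete

def preferred(w_rel: float, w_fact: float = 0.5, w_comp: float = 1.0) -> str:
    """Which response the combined reward favors for a given Relevance weight."""
    weights = {"rel": w_rel, "fact": w_fact, "comp": w_comp}
    if total_reward(short_resp, weights) > total_reward(long_resp, weights):
        return "short"
    return "long"

for w in (0.0, 1.0, 2.0):
    print(w, preferred(w))
# 0.0 long
# 1.0 long
# 2.0 short
```

With these toy numbers, the short response wins once the Relevance weight exceeds 1.5, mirroring the observation that a heavier Relevance weight favors shorter, more concise outputs.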

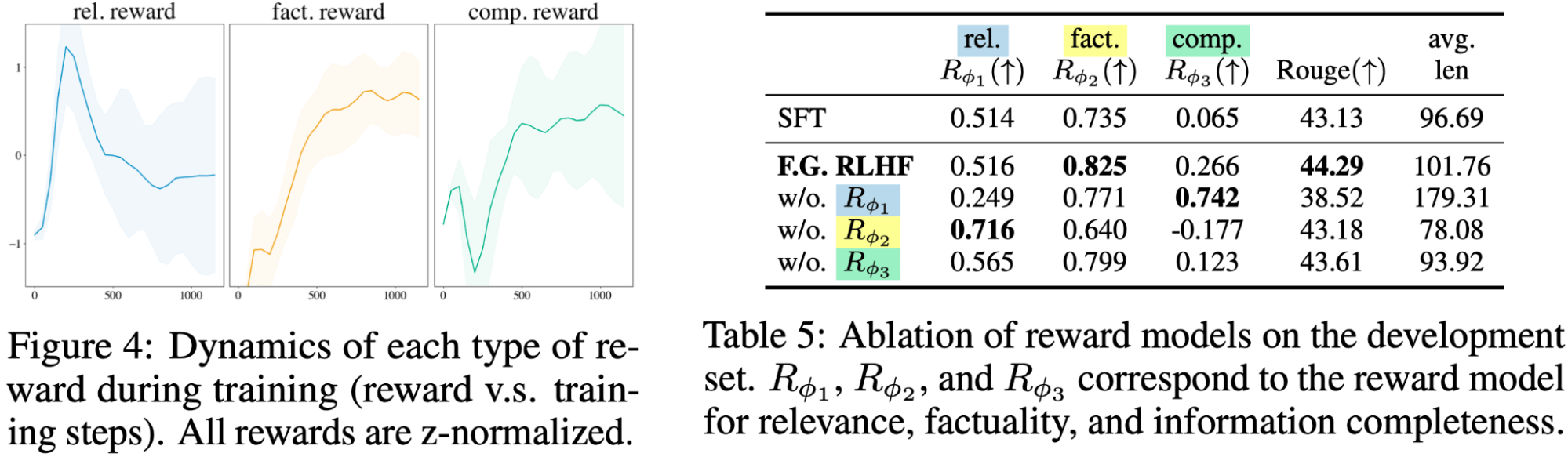

Fine-grained reward models both complement and compete with each other

We found that there is a trade-off between the reward models: the Relevance RM prefers shorter and more concise responses, while the Information Completeness RM prefers longer and more informative responses. As a result, these two rewards compete against each other during training and eventually reach a balance. Meanwhile, the Factuality RM continuously improves the factual correctness of the response. Finally, removing any one of the reward models degrades performance.

We hope our demonstration of the effectiveness of fine-grained rewards will encourage other researchers to move away from basic holistic preferences as the basis for RLHF and to spend more time exploring the human feedback component of RLHF. If you would like to cite our publication, see below; you can also find more information here.

@inproceedings{wu2023finegrained,
  title={Fine-Grained Human Feedback Gives Better Rewards for Language Model Training},
  author={Zeqiu Wu and Yushi Hu and Weijia Shi and Nouha Dziri and Alane Suhr and Prithviraj Ammanabrolu and Noah A. Smith and Mari Ostendorf and Hannaneh Hajishirzi},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS)},
  year={2023},
  url={https://openreview.net/forum?id=CSbGXyCswu},
}