In the past few months, we’ve seen a deepfake robocall of Joe Biden encouraging New Hampshire voters to “save your vote for the November election” and a fake endorsement of Donald Trump from Taylor Swift. It’s clear that 2024 will mark the first “AI election” in United States history.

With many advocates calling for safeguards against AI’s potential harms to our democracy, Meta (the parent company of Facebook and Instagram) proudly announced last month that it will label AI-generated content that was created using the most popular generative AI tools. The company said it’s “building industry-leading tools that can identify invisible markers at scale – specifically, the ‘AI generated’ information in the C2PA and IPTC technical standards.”

Unfortunately, social media companies will not solve the problem of deepfakes on social media this year with this approach. Indeed, this new effort will do very little to tackle the problem of AI-generated material polluting the election environment.

The most obvious weakness is that Meta’s system will work only if the bad actors creating deepfakes use tools that already put watermarks (that is, hidden or visible information about the origin of digital content) into their images. Unsecured “open-source” generative AI tools mostly don’t produce watermarks at all. (We use the term unsecured and put “open-source” in quotes to denote that many such tools don’t meet traditional definitions of open-source software, but still pose a threat because their underlying code or model weights have been made publicly available.) If new versions of these unsecured tools are released that do contain watermarks, the old tools will still be available and able to produce watermark-free content, including personalized and highly persuasive disinformation and nonconsensual deepfake pornography.

We are also concerned that bad actors can easily circumvent Meta’s labeling regimen even if they are using the AI tools that Meta says will be covered, which include products from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock. Given that it takes about two seconds to remove a watermark from an image produced using the current C2PA watermarking standard that these companies have implemented, Meta’s promise to label AI-generated images falls flat.

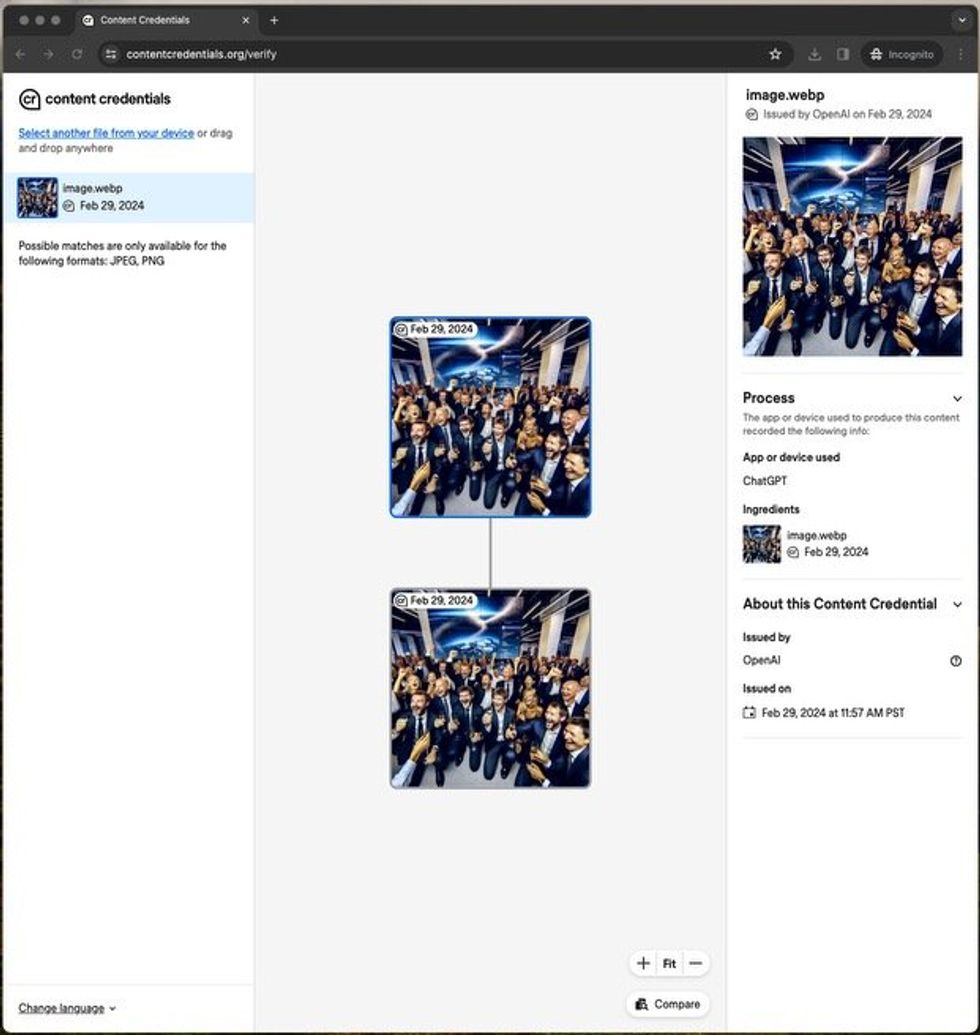

When the authors uploaded an image they’d generated to a website that checks for watermarks, the site correctly stated that it was a synthetic image generated by an OpenAI tool. IEEE Spectrum

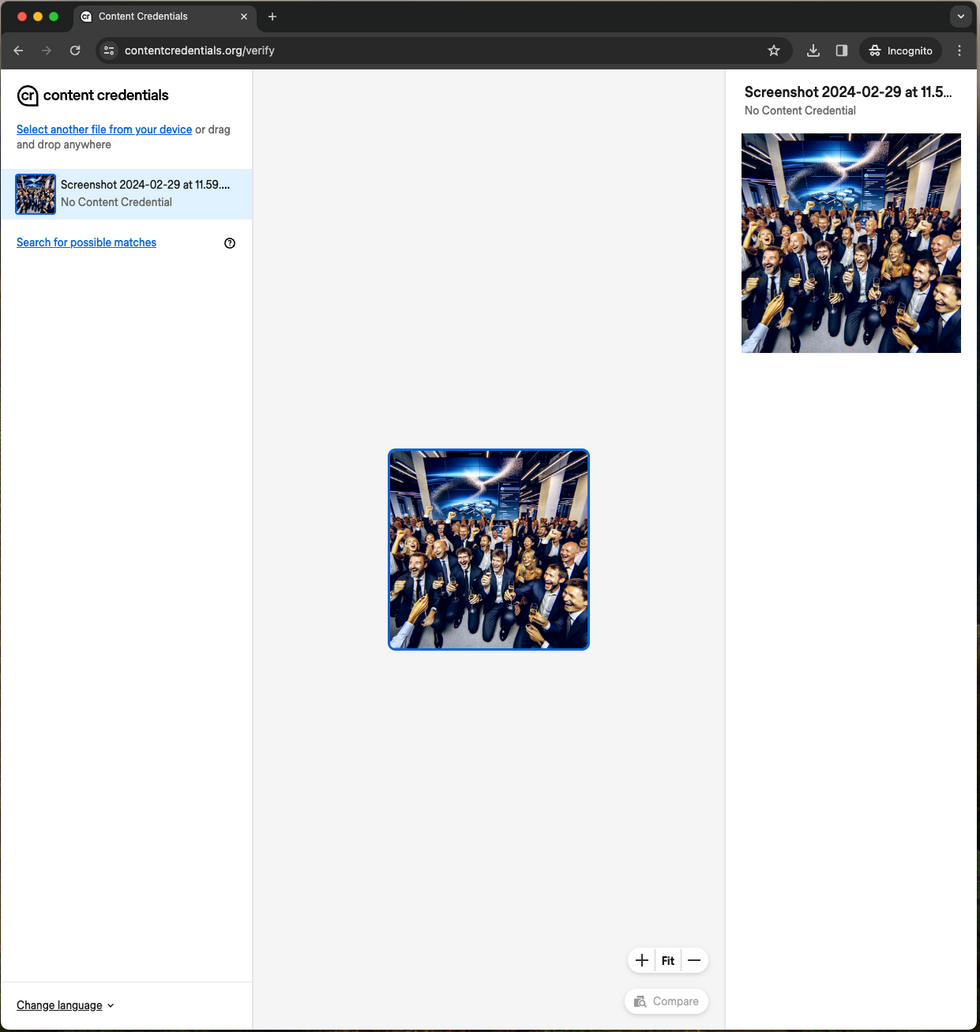

We know this because we were able to easily remove the watermarks Meta claims it will detect, and neither of us is an engineer. Nor did we have to write a single line of code or install any software.

First, we generated an image with OpenAI’s DALL-E 3. Then, to see if the watermark worked, we uploaded the image to the C2PA content credentials verification website. A simple and elegant interface showed us that this image was indeed made with OpenAI’s DALL-E 3. How did we then remove the watermark? By taking a screenshot. When we uploaded the screenshot to the same verification website, the site found no evidence that the image had been generated by AI. The same process worked when we made an image with Meta’s AI image generator, took a screenshot of it, and uploaded the screenshot to a website that detects the IPTC metadata that contains Meta’s AI “watermark.”
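Why does a screenshot defeat these labels? In the setup described above, the C2PA and IPTC labels live in metadata carried alongside the pixels, while a screenshot captures only what is drawn on the screen. The minimal Python sketch below models that mechanism under heavy simplification: the `ImageFile` class, the helper functions, and the `digital_source_type` key are hypothetical stand-ins, not the real C2PA or IPTC formats (IPTC’s digital source type vocabulary does include a `trainedAlgorithmicMedia` value, which is borrowed here only as a label).

```python
from dataclasses import dataclass, field


@dataclass
class ImageFile:
    """Hypothetical stand-in for an image file: pixels plus a metadata container."""
    pixels: list[list[int]]  # grayscale values, 0-255
    metadata: dict[str, str] = field(default_factory=dict)


def generate_with_label(pixels: list[list[int]]) -> ImageFile:
    # Simulates a generator that attaches an "AI generated" label as metadata,
    # loosely analogous to a C2PA manifest or an IPTC field (not the real format).
    return ImageFile(
        pixels=[row[:] for row in pixels],
        metadata={"digital_source_type": "trainedAlgorithmicMedia"},
    )


def screenshot(image: ImageFile) -> ImageFile:
    # A screenshot re-captures only what is rendered on screen: the pixels.
    # Nothing in the original file's metadata container is carried over.
    return ImageFile(pixels=[row[:] for row in image.pixels])


def has_ai_label(image: ImageFile) -> bool:
    # A metadata-only checker has nowhere to look except the metadata container.
    return image.metadata.get("digital_source_type") == "trainedAlgorithmicMedia"


original = generate_with_label([[10, 20], [30, 40]])
shot = screenshot(original)

print(has_ai_label(original))          # True
print(has_ai_label(shot))              # False
print(shot.pixels == original.pixels)  # True: the visible image is unchanged
```

The visible content is identical, yet the label is gone, so a checker that reads only metadata reports no evidence of AI generation.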

However, when the authors took a screenshot of the image and uploaded that screenshot to the same verification website, the site found no watermark and therefore no evidence that the image was AI-generated. IEEE Spectrum

Is there a better way to identify AI-generated content?

Meta’s announcement states that it’s “working hard to develop classifiers that can help … to automatically detect AI-generated content, even if the content lacks invisible markers.” It’s good that the company is working on it, but until it succeeds and shares this technology with the entire industry, we will be stuck wondering whether anything we see or hear online is real.

For a more immediate solution, the industry could adopt maximally indelible watermarks, meaning watermarks that are as difficult to remove as possible.

Today’s imperfect watermarks typically attach information to a file in the form of metadata. For maximally indelible watermarks to offer an improvement, they need to hide information imperceptibly in the actual pixels of images, in the waveforms of audio (Google DeepMind claims to have done this with its proprietary SynthID watermark), or in slightly modified word frequency patterns in AI-generated text. We use the term “maximally” to acknowledge that there may never be a perfectly indelible watermark. This is not a problem just with watermarks, though. The celebrated security expert Bruce Schneier notes that “computer security is not a solvable problem…. Security has always been an arms race, and always will be.”
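To make the difference between metadata and pixel-level marks concrete, the sketch below hides a short bit string in the least significant bits of pixel values, so the mark lives in the image data itself. This least-significant-bit (LSB) scheme is a deliberately naive textbook technique chosen for illustration; it is not SynthID or any scheme named in this article, and the `WATERMARK` payload and the `embed`/`extract` helpers are hypothetical. The mark survives an exact pixel-for-pixel copy, but the last lines show that brightening every pixel by one level destroys it, which is why robust schemes spread information far more resiliently across the content.

```python
# Hypothetical 8-bit payload to hide in the image.
WATERMARK = [1, 0, 1, 1, 0, 0, 1, 0]


def embed(pixels: list[list[int]], bits: list[int]) -> list[list[int]]:
    """Write each bit into the least significant bit of successive pixels."""
    width = len(pixels[0])
    flat = [p for row in pixels for p in row]  # new list; input is not mutated
    if len(bits) > len(flat):
        raise ValueError("image too small for payload")
    for i, bit in enumerate(bits):
        flat[i] = (flat[i] & ~1) | bit  # clear the LSB, then set it to the bit
    return [flat[r * width:(r + 1) * width] for r in range(len(pixels))]


def extract(pixels: list[list[int]], n_bits: int) -> list[int]:
    """Read back the least significant bit of the first n_bits pixels."""
    flat = [p for row in pixels for p in row]
    return [p & 1 for p in flat[:n_bits]]


image = [[200, 201, 202, 203], [100, 101, 102, 103]]
marked = embed(image, WATERMARK)

# Each pixel changes by at most one gray level, which is practically invisible.
max_change = max(abs(a - b) for ra, rb in zip(image, marked) for a, b in zip(ra, rb))

# An exact pixel copy keeps the mark, unlike the metadata label.
copied = [row[:] for row in marked]

# But a trivial edit (brighten every pixel by one level) wipes out the payload.
brightened = [[min(255, p + 1) for p in row] for row in marked]

print(max_change)                           # 1
print(extract(copied, 8) == WATERMARK)      # True
print(extract(brightened, 8) == WATERMARK)  # False
```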

In metaphorical terms, it’s instructive to consider automobile safety. No car manufacturer has ever produced a car that cannot crash. Yet that hasn’t stopped regulators from implementing comprehensive safety standards that require seatbelts, airbags, and backup cameras on cars. If we waited for safety technologies to be perfected before requiring implementation of the best available options, we would be much worse off in many domains.

There’s increasing political momentum to tackle deepfakes. Fifteen of the biggest AI companies (including almost every one mentioned in this article) signed on to the White House Voluntary AI Commitments last year, which included pledges to “develop robust mechanisms, including provenance and/or watermarking systems for audio or visual content” and to “develop tools or APIs to determine if a particular piece of content was created with their system.” Unfortunately, the White House did not set any timeline for the voluntary commitments.

Then, in October, the White House, in its AI Executive Order, defined AI watermarking as “the act of embedding information, which is typically difficult to remove, into outputs created by AI – including into outputs such as photos, videos, audio clips, or text – for the purposes of verifying the authenticity of the output or the identity or characteristics of its provenance, modifications, or conveyance.”

Next, at the Munich Security Conference on 16 February, a group of 20 tech companies (half of which had previously signed the voluntary commitments) signed onto a new “Tech Accord to Combat Deceptive Use of AI in 2024 Elections.” Without making any concrete commitments or providing any timelines, the accord offers a vague intention to implement some form of watermarking or content provenance efforts. Although a standard is not specified, the accord lists both C2PA and SynthID as examples of technologies that could be adopted.

Could regulation help?

We’ve seen examples of robust pushback against deepfakes. Following the AI-generated Biden robocalls, the New Hampshire Department of Justice launched an investigation in coordination with state and federal partners, including a bipartisan task force made up of all 50 state attorneys general and the Federal Communications Commission. Meanwhile, in early February the FCC clarified that calls using voice-generation AI will be considered artificial and subject to restrictions under existing laws regulating robocalls.

Unfortunately, we don’t have laws to force action by either AI developers or social media companies. Congress and the states should mandate that all generative AI products embed maximally indelible watermarks in their image, audio, video, and text content using state-of-the-art technology. They should also address risks from unsecured “open-source” systems that can either have their watermarking functionality disabled or be used to remove watermarks from other content. Furthermore, any company that makes a generative AI tool should be encouraged to release a detector that can identify, with the highest accuracy possible, any content it produces. This proposal shouldn’t be controversial, as its rough outlines have already been agreed to by the signers of the voluntary commitments and the recent elections accord.

Standards organizations like C2PA, the National Institute of Standards and Technology, and the International Organization for Standardization should also move faster to build consensus and release standards for maximally indelible watermarks and content labeling in preparation for laws requiring these technologies. Google, as C2PA’s newest steering committee member, should also quickly move to open up its seemingly best-in-class SynthID watermarking technology to all members for testing.

Misinformation and voter deception are nothing new in elections. But AI is accelerating existing threats to our already fragile democracy. Congress must also consider what steps it can take to protect our elections more generally from those who are seeking to undermine them. That should include some basic steps, such as passing the Deceptive Practices and Voter Intimidation Act, which would make it illegal to knowingly lie to voters about the time, place, and manner of elections with the intent of preventing them from voting in the period before a federal election.

Congress has been woefully slow to take up comprehensive democracy reform in the face of recent shocks. The potential amplification of these shocks through abuse of AI ought to be enough to finally get lawmakers to act.
