Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

Last week, Midjourney, the AI startup building image (and soon video) generators, made a small, blink-and-you’ll-miss-it change to its terms of service related to the company’s policy around IP disputes. It mainly served to replace jokey language with more lawyerly, likely case law-grounded clauses. But the change can also be taken as a sign of Midjourney’s conviction that AI vendors like itself will emerge victorious in the courtroom battles with creators whose works comprise vendors’ training data.

The change in Midjourney’s terms of service.

Generative AI models like Midjourney’s are trained on an enormous number of examples (e.g. images and text), usually sourced from public websites and repositories around the web. Vendors assert that fair use, the legal doctrine that allows for the use of copyrighted works to make a secondary creation as long as it’s transformative, shields them where it concerns model training. But not all creators agree, particularly in light of a growing number of studies showing that models can, and do, “regurgitate” training data.

Some vendors have taken a proactive approach, inking licensing agreements with content creators and establishing “opt-out” schemes for training data sets. Others have promised that, if customers are implicated in a copyright lawsuit arising from their use of a vendor’s GenAI tools, they won’t be on the hook for legal fees.

Midjourney isn’t one of the proactive ones.

On the contrary, Midjourney has been somewhat brazen in its use of copyrighted works, at one point maintaining a list of thousands of artists (including illustrators and designers at major brands like Hasbro and Nintendo) whose works were, or would be, used to train Midjourney’s models. A study shows convincing evidence that Midjourney used TV shows and movie franchises in its training data as well, from “Toy Story” to “Star Wars” to “Dune” to “Avengers.”

Now, there’s a scenario in which court decisions go Midjourney’s way in the end. Should the justice system decide fair use applies, nothing’s stopping the startup from continuing as it has been, scraping and training on copyrighted data old and new.

But it seems like a risky bet.

Midjourney is flying high at the moment, having reportedly reached around $200 million in revenue without a dime of outside investment. Lawyers are expensive, however. And if it’s decided fair use doesn’t apply in Midjourney’s case, it’d decimate the company overnight.

No reward without risk, eh?

Here are some other AI stories of note from the past few days:

AI-assisted ad attracts the wrong kind of attention: Creators on Instagram lashed out at a director whose commercial reused another’s (much more difficult and impressive) work without credit.

EU authorities are putting AI platforms on notice ahead of elections: They’re asking the biggest companies in tech to explain their approach to preventing electoral shenanigans.

Google DeepMind wants your co-op gaming companion to be their AI: Training an agent on many hours of 3D game play made it capable of performing simple tasks phrased in natural language.

The problem with benchmarks: Many, many AI vendors claim their models have the competition met or beat by some objective metric. But the metrics they’re using are flawed, often.

AI2 scores $200M: AI2 Incubator, spun out of the nonprofit Allen Institute for AI, has secured a windfall $200 million in compute that startups going through its program can take advantage of to accelerate early development.

India requires, then rolls back, gov approval for AI: India’s government can’t seem to decide what level of regulation is appropriate for the AI industry.

Anthropic launches new models: AI startup Anthropic has launched a new family of models, Claude 3, that it claims rivals OpenAI’s GPT-4. We put the flagship model (Claude 3 Opus) to the test and found it impressive, but also lacking in areas like current events.

Political deepfakes: A study from the Center for Countering Digital Hate (CCDH), a British nonprofit, looks at the growing volume of AI-generated disinformation, specifically deepfake images pertaining to elections, on X (formerly Twitter) over the past year.

OpenAI versus Musk: OpenAI says that it intends to dismiss all claims made by X CEO Elon Musk in a recent lawsuit, and suggested that the billionaire entrepreneur, who was involved in the company’s co-founding, didn’t really have that much of an impact on OpenAI’s development and success.

Reviewing Rufus: Last month, Amazon announced that it’d launch a new AI-powered chatbot, Rufus, inside the Amazon Shopping app for Android and iOS. We got early access, and were quickly disappointed by the lack of things Rufus can do (and do well).

More machine learnings

Molecules! How do they work? AI models have been helpful in our understanding and prediction of molecular dynamics, conformation, and other aspects of the nanoscopic world that would otherwise take expensive, complex methods to test. You still have to verify, of course, but things like AlphaFold are rapidly changing the field.

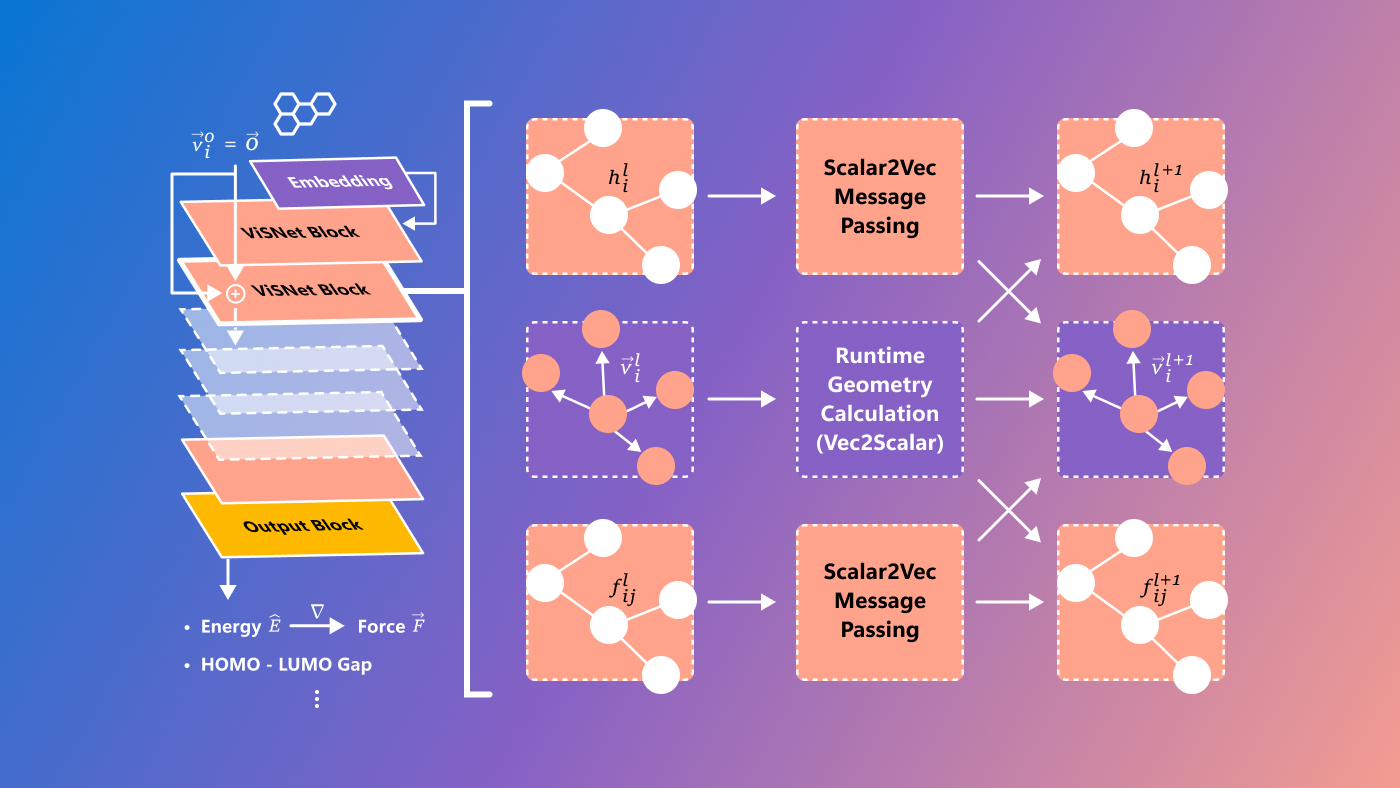

Microsoft has a new model called ViSNet, aimed at predicting what are called structure-activity relationships, complex relationships between molecules and biological activity. It’s still quite experimental and definitely for researchers only, but it’s always great to see hard science problems being addressed by cutting-edge tech means.

Image Credits: Microsoft

University of Manchester researchers are looking specifically at identifying and predicting COVID-19 variants, less from pure structure like ViSNet and more by analysis of the very large genetic datasets pertaining to coronavirus evolution.

“The unprecedented amount of genetic data generated during the pandemic demands improvements to our methods to analyze it thoroughly,” said lead researcher Thomas House. His colleague Roberto Cahuantzi added: “Our analysis serves as a proof of concept, demonstrating the potential use of machine learning methods as an alert tool for the early discovery of emerging major variants.”

AI can design molecules too, and a number of researchers have signed an initiative calling for safety and ethics in this field. Though as David Baker (among the foremost computational biophysicists in the world) notes, “The potential benefits of protein design far exceed the dangers at this point.” Well, as a designer of AI protein designers he would say that. But all the same, we must be wary of regulation that misses the point and hinders legitimate research while allowing bad actors freedom.

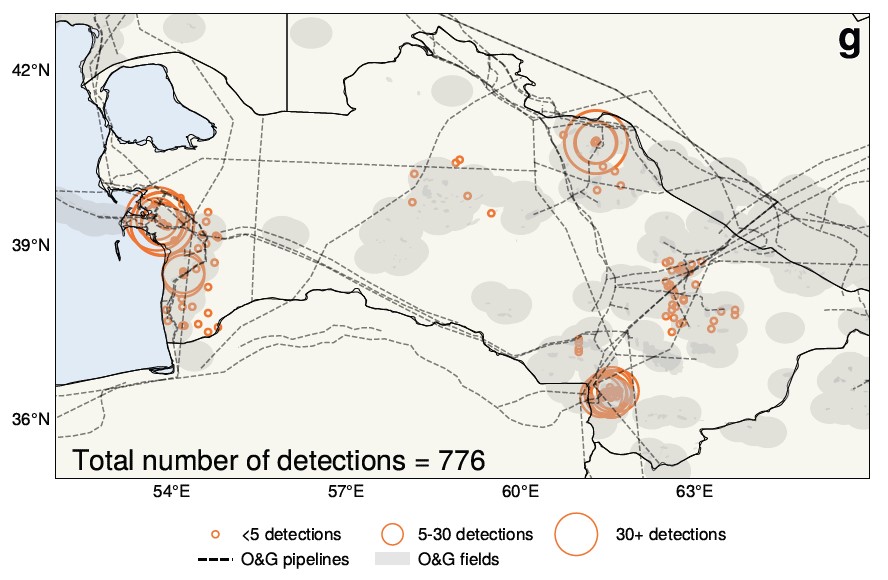

Atmospheric scientists at the University of Washington have made an interesting assertion based on AI analysis of 25 years of satellite imagery over Turkmenistan. Essentially, the accepted understanding that the economic turmoil following the fall of the Soviet Union led to reduced emissions may not be true; in fact, the opposite may have occurred.

AI helped find and measure the methane leaks shown here.

“We find that the collapse of the Soviet Union seems to result, surprisingly, in an increase in methane emissions,” said UW professor Alex Turner. The large datasets and lack of time to sift through them made the topic a natural target for AI, which resulted in this unexpected reversal.

Large language models are largely trained on English source data, but this may affect more than their facility in using other languages. EPFL researchers looking at the “latent language” of Llama-2 found that the model seemingly reverts to English internally even when translating between French and Chinese. The researchers suggest, however, that this is more than a lazy translation process, and that the model has in fact structured its entire conceptual latent space around English notions and representations. Does it matter? Probably. We should be diversifying their datasets anyway.