At present at Nvidia’s developer convention, GTC 2024, the corporate revealed its subsequent GPU, the B200. The B200 is able to delivering 4 occasions the coaching efficiency, as much as 30 occasions the inference efficiency, and as much as 25 occasions higher vitality effectivity, in comparison with its predecessor, the Hopper H100 GPU. Primarily based on the brand new Blackwell structure, the GPU may be mixed with the corporate’s Grace CPUs to kind a brand new era of DGX SuperPOD computer systems able to as much as 11.5 billion billion floating level operations (exaflops) of AI computing utilizing a brand new, low-precision quantity format.

“Blackwell is a brand new class of AI superchip,” says Ian Buck, Nvidia’s vp of high-performance computing and hyperscale. Nvidia named the GPU structure for mathematician David Harold Blackwell, the primary Black inductee into the U.S. Nationwide Academy of Sciences.

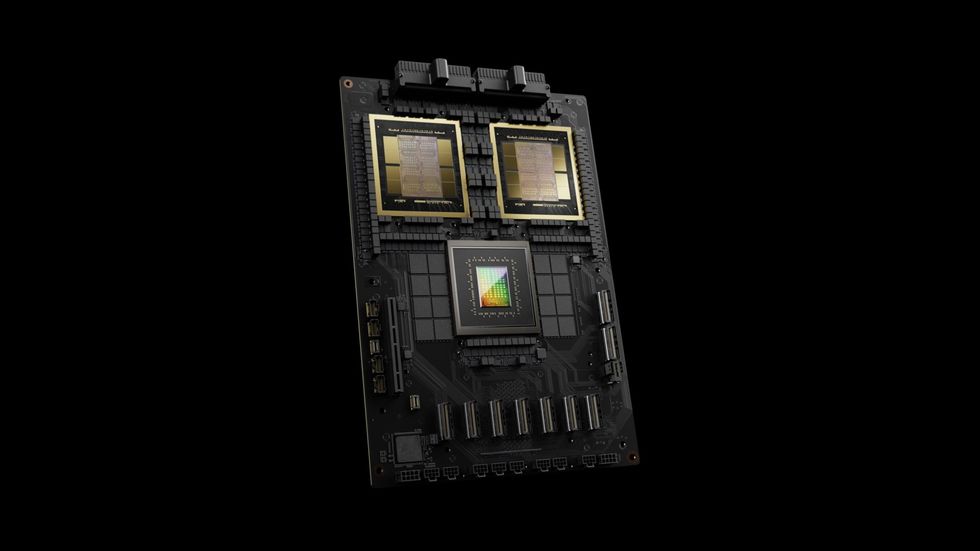

The B200 consists of about 1600 sq. millimeters of processor on two silicon dies which are linked in the identical bundle by a ten terabyte per second connection, so that they carry out as in the event that they had been a single 208-billion-transistor chip. These slices of silicon are made utilizing TSMC’s N4P chip know-how, which supplies a 6 p.c efficiency increase over the N4 know-how used to make Hopper structure GPUs, just like the H100.

Like Hopper chips, the B200 is surrounded by high-bandwidth reminiscence, more and more necessary to decreasing the latency and vitality consumption of huge AI fashions. B200’s reminiscence is the most recent selection, HBM3e, and it totals 192 GB (up from 141 GB for the second era Hopper chip, H200). Moreover, the reminiscence bandwidth is boosted to eight terabytes per second from the H200’s 4.8 TB/s.

Smaller Numbers, Quicker Chips

Chipmaking know-how did among the job in making Blackwell, however its what the GPU does with the transistors that actually makes the distinction. In explaining Nvidia’s AI success to laptop scientists final 12 months at IEEE Sizzling Chips, Nvidia chief scientist Invoice Dally stated that almost all got here from utilizing fewer and fewer bits to signify numbers in AI calculations. Blackwell continues that pattern.

It’s predecessor structure, Hopper, was the primary occasion of what Nvidia calls the transformer engine. It’s a system that examines every layer of a neural community and determines whether or not it could possibly be computed utilizing lower-precision numbers. Particularly, Hopper can use floating level quantity codecs as small as 8 bits. Smaller numbers are sooner and extra vitality environment friendly to compute, require much less reminiscence and reminiscence bandwidth, and the logic required to do the mathematics takes up much less silicon.

“With Blackwell, we have now taken a step additional,” says Buck. The brand new structure has models that do matrix math with floating level numbers simply 4 bits broad. What’s extra, it may determine to deploy them on components of every neural community layer, not simply whole layers like Hopper. “Getting right down to that degree of high-quality granularity is a miracle in itself,” says Buck.

NVLink and Different Options

Among the many different architectural insights Nvidia revealed about Blackwell are that it incorporates a devoted “engine” dedicated to the GPU’s reliability, availability, and serviceability. In line with Nvidia, it makes use of an AI-based system to run diagnostics and forecast reliability points, with the purpose of accelerating up time and serving to huge AI methods run uninterrupted for weeks at a time, a interval usually wanted to coach massive language fashions.

Nvidia additionally included methods to assist hold AI fashions safe and to decompress information to hurry database queries and information analytics.

Lastly, Blackwell incorporates Nvidia’s fifth era laptop interconnect know-how NVLink, which now delivers 1.8 terabytes per second bidirectionally between GPUs and permits for high-speed communication amongst as much as 576 GPUs. Hopper’s model of NVLink may solely attain half that bandwidth.

SuperPOD and Different Computer systems

NVLink’s bandwidth is vital to constructing large-scale computer systems from Blackwell, able to crunching by means of trillion-parameter neural community fashions.

The bottom computing unit known as the DGX GB200. Every of these embody 36 GB200 superchips. These are modules that embody a Grace CPU and two Blackwell GPUs, all linked along with NVLink.

The Grace Blackwell superchip is 2 Blackwell GPUs and a Grace CPU in the identical module.Nvidia

The Grace Blackwell superchip is 2 Blackwell GPUs and a Grace CPU in the identical module.Nvidia

Eight DGX GB200s may be linked additional by way of NVLINK to kind a 576-GPU supercomputer known as a DGX SuperPOD. Nvidia says such a pc can blast by means of 11.5 exaflops utilizing 4-bit precision calculations. Programs of tens of 1000’s of GPUs are doable utilizing the corporate’s Quantum Infiniband networking know-how.

The corporate says to count on SuperPODs and different Nvidia computer systems to turn into accessible later this 12 months. In the meantime, chip foundry TSMC and digital design automation firm Synopsys every introduced that they’d be transferring Nvidia’s inverse lithography instrument, cuLitho, into manufacturing. Lastly, the Nvidia introduced a new basis mannequin for humanoid robots known as GR00T.