Nvidia Inference Microservices (NIMs) are just a few days old, but AI software vendors are already moving to support the new deployment scheme to help their customers get generative AI applications off the ground.

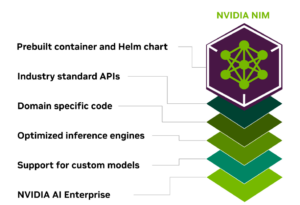

Nvidia CEO Jensen Huang launched NIM on Monday as a way to simplify the development and deployment of GenAI applications built atop large language and computer vision models. By combining many of the components one needs into a pre-built Kubernetes container that can run across Nvidia’s family of GPU hardware, the company hopes to take a lot of the pain out of deploying GenAI apps.

In addition to Nvidia software like CUDA and NeMo Retriever, NIMs will include software from third-party software companies, Huang said during his keynote.

“How do we build software in the future? It’s unlikely you’ll write it from scratch or write a whole bunch of Python code or anything like that,” Huang said. “It’s very likely that you assemble a team of AIs. There’s probably going to be a super AI that you use that takes the mission that you give it and breaks it down into an execution plan.

“Some of the execution plan might be handed off to another NIM. Maybe it understands SAP,” he continued. “It might hand it off to another NIM that goes off and does some calculation on it. Maybe it’s optimization software, or a combinatorial optimization algorithm. Maybe it’s just a basic calculator. Maybe it’s pandas to do some numerical analysis on it, and it comes back with its answer and it gets combined with everybody else’s and because it’s been presented with ‘This is what the right answer should look like.’ It knows what the right answer to produce, and it presents it to you.”

The AI software industry wasted no time in getting behind Nvidia’s NIM plan.

DataStax announced that it has integrated the retrieval-augmented generation (RAG) capabilities of its managed database, Astra DB, with Nvidia NIM. The company claims the integration will enable users to create vector embeddings 20x faster and at 80% lower cost than other cloud-based vector embedding services.

NVIDIA NeMo Retriever can generate more than 800 embeddings per second per GPU, which DataStax says pairs well with its Astra DB, which is designed to ingest embeddings at a rate of 4,000 transactions per second.
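To put those two figures side by side, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that one embedding corresponds to one database transaction and that throughput scales linearly with GPU count; neither assumption comes from DataStax or Nvidia, and the function names are hypothetical.

```python
import math

# Figures quoted in the article.
EMBEDDINGS_PER_SEC_PER_GPU = 800  # "more than 800", so treated here as a floor
INGEST_TPS = 4_000                # Astra DB ingest rate, transactions per second


def gpus_to_saturate(ingest_tps: int, per_gpu_rate: int) -> int:
    """GPUs needed before the database, not the embedder, becomes the bottleneck.

    Assumption: one embedding equals one ingest transaction.
    """
    return math.ceil(ingest_tps / per_gpu_rate)


def seconds_to_embed(num_docs: int, gpus: int, per_gpu_rate: int, ingest_tps: int) -> float:
    """Estimated wall-clock time when the slower of the two stages sets the pace.

    Assumption: embedding throughput scales linearly with the number of GPUs.
    """
    effective_rate = min(gpus * per_gpu_rate, ingest_tps)
    return num_docs / effective_rate


if __name__ == "__main__":
    print(gpus_to_saturate(INGEST_TPS, EMBEDDINGS_PER_SEC_PER_GPU))
    print(seconds_to_embed(1_000_000, 5, EMBEDDINGS_PER_SEC_PER_GPU, INGEST_TPS))
```

Under these assumptions, adding GPUs beyond the saturation point would not shorten the job, because the ingest rate caps the pipeline.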

“In today’s dynamic landscape of AI innovation, RAG has emerged as the pivotal differentiator for enterprises building GenAI applications with popular large language frameworks,” DataStax CEO and Chairman Chet Kapoor said in a press release. “With a wealth of unstructured data at their disposal, ranging from software logs to customer chat history, enterprises hold a cache of valuable domain knowledge and real-time insights important for generative AI applications, but still face challenges. Integrating NVIDIA NIM into RAGStack cuts down the barriers enterprises are facing to bring them the high-performing RAG solutions they need to make important strides in their GenAI application development.”

Weights & Biases is also supporting Nvidia’s NIM with its AI developer platform, which automates many of the steps that data scientists and developers must go through to create AI models and applications. The company says that customers tracking model artifacts in its platform can use W&B Launch to deploy to NIMs, thereby streamlining the model deployment process.

The San Francisco company also announced that it is providing W&B Launch to customers via GPU-in-the-cloud provider CoreWeave in a bid to simplify hardware provisioning. By making W&B Launch available as a NIM within the CoreWeave Application Catalog, the company aims to accelerate customers’ deployment of GenAI apps.

“Our mission is to build the best tools for machine learning practitioners around the world, and that also means collaborating with the best partners,” said Lukas Biewald, CEO at Weights & Biases, in a press release. “The new integrations with NVIDIA and CoreWeave technologies will enhance our customers’ ability to easily train, tune, analyze, and deploy AI models to drive massive value for their organizations.”

Anyscale, the company behind the open source Ray project, announced its support for NIM and Nvidia’s AI Enterprise software (which NIM is a part of). The San Francisco software company is working with Nvidia to integrate the Anyscale managed runtime environment with NIM, which will benefit customers by bringing them better container orchestration, observability, autoscaling, security, and performance for their AI applications.

“This enhanced integration with Nvidia AI Enterprise makes it simpler than ever for customers to get access to cutting-edge infrastructure software and top-of-the-line compute resources to accelerate the production of generative AI models,” Anyscale CEO Robert Nishihara said in a press release. “As AI becomes a strategic capability, it’s important to balance performance, scale, and cost while minimizing infrastructure complexity. The ability to tap into the best-of-breed infrastructure, accelerated computing, pre-trained models and tools will be crucial for organizations to stand out and compete. This collaboration is another important step forward in bringing generative AI to more people.”

EY (formerly Ernst & Young) also announced an expansion of its partnership with Nvidia to help joint customers use the company’s GPUs and software in the areas of scientific computing, artificial intelligence, data science, autonomous vehicles, robotics, metaverse and 3D internet applications.

EY pledged to train 10,000 more employees around the world to use Nvidia offerings, including its GPUs and software offerings like AI Enterprise, NIM, and NeMo Retriever. “We’re working with EY US to integrate NVIDIA’s modern accelerated computing solutions with EY US’ extensive industry knowledge to help empower clients to streamline their AI transformation efforts,” said Alvin Da Costa, Vice President of the Global Consulting Partner Group at Nvidia, in a press release.
