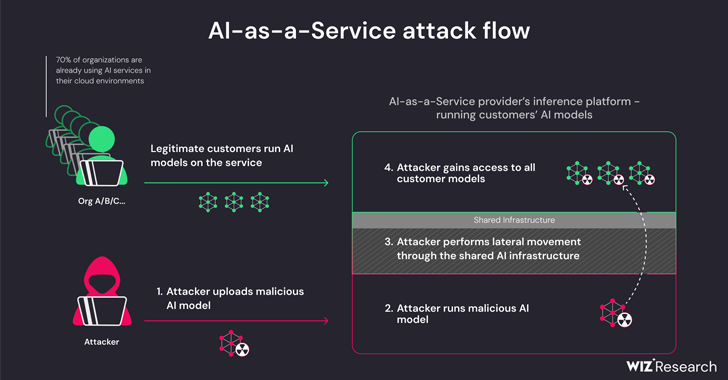

New research has found that artificial intelligence (AI)-as-a-service providers such as Hugging Face are susceptible to two critical risks that could allow threat actors to escalate privileges, gain cross-tenant access to other customers’ models, and even take over the continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models represent a major risk to AI systems, especially for AI-as-a-service providers because potential attackers may leverage these models to perform cross-tenant attacks,” Wiz researchers Shir Tamari and Sagi Tzadik said.

“The potential impact is devastating, as attackers may be able to access the millions of private AI models and apps stored within AI-as-a-service providers.”

The development comes as machine learning pipelines have emerged as a new supply chain attack vector, with repositories like Hugging Face becoming an attractive target for staging adversarial attacks designed to glean sensitive information and access target environments.

The threats are two-pronged, arising from shared inference infrastructure takeover and shared CI/CD takeover. They make it possible to run untrusted models uploaded to the service in pickle format and to take over the CI/CD pipeline to perform a supply chain attack.

The findings from the cloud security firm show that it’s possible to breach the service running the custom models by uploading a rogue model and then using container escape techniques to break out of its own tenant and compromise the entire service. This effectively enables threat actors to obtain cross-tenant access to other customers’ models stored and run in Hugging Face.

“Hugging Face will still let the user infer the uploaded Pickle-based model on the platform’s infrastructure, even when deemed dangerous,” the researchers elaborated.

This essentially permits an attacker to craft a PyTorch (Pickle) model with arbitrary code execution capabilities upon loading and chain it with misconfigurations in the Amazon Elastic Kubernetes Service (EKS) to obtain elevated privileges and move laterally within the cluster.
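The reason a model file can carry code is that unpickling is not passive: Python’s `pickle` protocol lets an object’s `__reduce__` method name an importable callable plus its arguments, and `pickle.loads` calls it while rebuilding the object. PyTorch’s classic checkpoint format serializes with pickle, so loading such a file inherits this behavior. The sketch below is a harmless stand-in, not the researchers’ payload: the class `NotReallyAModel` and the `record` function are hypothetical, and the “payload” only appends to a list. It assumes the snippet runs as a script, so `record` can be resolved by name at load time.

```python
import pickle

# Harmless side-effect log standing in for "arbitrary code" (hypothetical).
EXECUTED: list[str] = []


def record(message: str) -> str:
    """Benign stand-in for an attacker's payload: it only notes that it ran."""
    EXECUTED.append(message)
    return message


class NotReallyAModel:
    """Hypothetical 'model' whose pickled form tells the loader to call record()."""

    def __reduce__(self):
        # pickle stores (callable, args); the *loader* later calls callable(*args).
        return (record, ("ran during pickle.loads",))


payload = pickle.dumps(NotReallyAModel())

# Serializing ran nothing: dumps() only stored a reference to record() and its args.
assert EXECUTED == []

# Merely loading the bytes executes the callable; its return value replaces the "model".
obj = pickle.loads(payload)
print(EXECUTED)  # ['ran during pickle.loads']
print(obj)       # ran during pickle.loads
```

Nothing in the file format distinguishes this from a legitimate object: the loader cannot tell a “weights” pickle from a “run this callable” pickle without inspecting or restricting what it imports.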

“The secrets we obtained could have had a significant impact on the platform if they were in the hands of a malicious actor,” the researchers said. “Secrets within shared environments may often lead to cross-tenant access and sensitive data leakage.”

To mitigate the issue, it’s recommended to enable IMDSv2 with a hop limit so as to prevent pods from accessing the Instance Metadata Service (IMDS) and obtaining the role of a node within the cluster.
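On AWS this is an instance metadata option. Requiring session tokens (`HttpTokens="required"`) shuts off the token-less IMDSv1 path, and a PUT response hop limit of 1 keeps the token response from traveling the extra network hop into a pod’s own network namespace. The sketch below wraps the EC2 `ModifyInstanceMetadataOptions` call as exposed by boto3 (`modify_instance_metadata_options`). The helper name `harden_imds`, the placeholder instance ID, and passing the client in as a parameter (so it can be exercised without AWS credentials) are assumptions for illustration. For EKS node groups, the same options are usually set in the node launch template rather than per instance.

```python
from typing import Any, Protocol


class EC2Client(Protocol):
    """Minimal shape of the boto3 EC2 client method used below."""

    def modify_instance_metadata_options(self, **kwargs: Any) -> dict: ...


def harden_imds(ec2: EC2Client, instance_id: str, hop_limit: int = 1) -> dict:
    """Hypothetical helper: require IMDSv2 tokens and cap the token hop limit."""
    if not 1 <= hop_limit <= 64:
        raise ValueError("hop limit must be between 1 and 64")
    return ec2.modify_instance_metadata_options(
        InstanceId=instance_id,
        HttpTokens="required",              # reject token-less IMDSv1 requests
        HttpPutResponseHopLimit=hop_limit,  # 1: token response can't reach a pod's netns
        HttpEndpoint="enabled",             # keep IMDS available to the node itself
    )


# Usage against real AWS (the instance ID is a placeholder):
#   import boto3
#   harden_imds(boto3.client("ec2"), "i-0123456789abcdef0")
```

A hop limit of 1 only helps pods that have their own network namespace; pods running with host networking still reach IMDS as the node does.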

The research also found that it’s possible to achieve remote code execution via a specially crafted Dockerfile when running an application on the Hugging Face Spaces service, and to use it to pull and push (i.e., overwrite) all the images available on an internal container registry.

Hugging Face, in coordinated disclosure, said it has addressed all the identified issues. It’s also urging users to employ models only from trusted sources, enable multi-factor authentication (MFA), and refrain from using pickle files in production environments.
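Where pickle files can’t be avoided entirely, the Python `pickle` documentation describes restricting which globals an `Unpickler` may resolve by overriding `find_class`. The sketch below follows that pattern; the contents of `SAFE_GLOBALS` are an assumption and would need to match the types that legitimate files actually contain. It narrows the attack surface but does not replace the advice to avoid pickle and stick to trusted sources.

```python
import io
import pickle

# Hypothetical allowlist: only these (module, name) pairs may be resolved.
SAFE_GLOBALS = {
    ("builtins", "dict"),
    ("builtins", "list"),
    ("collections", "OrderedDict"),
}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module: str, name: str):
        # Every global (class or callable) a pickle references is resolved here.
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses any global outside SAFE_GLOBALS."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Pickles as 'call builtins.print(...)' on load; benign stand-in for malice."""

    def __reduce__(self):
        return (print, ("this should not run",))


# Plain data needs no globals, so it loads normally.
print(restricted_loads(pickle.dumps({"weights": [0.1, 0.2]})))

# The payload is rejected when its callable is looked up, before anything is called.
try:
    restricted_loads(pickle.dumps(Payload()))
except pickle.UnpicklingError as err:
    print("blocked:", err)
```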

“This research demonstrates that utilizing untrusted AI models (especially Pickle-based ones) could result in serious security consequences,” the researchers said. “Furthermore, if you intend to let users utilize untrusted AI models in your environment, it is extremely important to ensure that they are running in a sandboxed environment.”

The disclosure follows other research from Lasso Security showing that it’s possible for generative AI models like OpenAI ChatGPT and Google Gemini to distribute malicious (and non-existent) code packages to unsuspecting software developers.

In other words, the idea is to find a recommendation for an unpublished package and publish a trojanized package in its place in order to propagate malware. The phenomenon of AI package hallucinations underscores the need for caution when relying on large language models (LLMs) for coding solutions.
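One practical guard is to treat every dependency an assistant suggests as unvetted until it matches a list the team has already reviewed. Merely checking that a name exists on the package index is not enough, since the attack works precisely by publishing the hallucinated name. The sketch below assumes such an allowlist exists (its contents, and the unvetted name in the usage line, are made up) and compares names after PEP 503 normalization, so `Requests` and `requests` count as the same project.

```python
import re

# Hypothetical, team-reviewed dependency allowlist.
VETTED = {"requests", "numpy", "huggingface-hub"}


def normalize(name: str) -> str:
    """PEP 503 project-name normalization: collapse -_. runs to '-', lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def triage(suggested: list[str]) -> tuple[list[str], list[str]]:
    """Split LLM-suggested package names into (vetted, needs_review)."""
    vetted_norm = {normalize(n) for n in VETTED}
    ok: list[str] = []
    review: list[str] = []
    for name in suggested:
        (ok if normalize(name) in vetted_norm else review).append(name)
    return ok, review


# "numpy-fastmath-utils" is an invented name standing in for a hallucinated package.
ok, review = triage(["Requests", "huggingface_hub", "numpy-fastmath-utils"])
print(ok)      # ['Requests', 'huggingface_hub']
print(review)  # ['numpy-fastmath-utils']
```

Anything landing in `review` goes to a human before `pip install`, regardless of whether the name resolves on the index.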

AI company Anthropic, for its part, has also detailed a new method called “many-shot jailbreaking” that can be used to bypass the safety protections built into LLMs and produce responses to potentially harmful queries by taking advantage of the models’ context window.

“The ability to input increasingly-large amounts of information has obvious advantages for LLM users, but it also comes with risks: vulnerabilities to jailbreaks that exploit the longer context window,” the company said earlier this week.

The technique, in a nutshell, involves introducing a large number of faux dialogues between a human and an AI assistant within a single prompt in an attempt to “steer model behavior” and get the LLM to respond to queries it otherwise wouldn’t (e.g., “How do I build a bomb?”).
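The attack’s defining feature is structural: dozens or hundreds of scripted turns packed into what the model receives as one user message. A crude defensive heuristic is to count turn markers embedded in a single message and route unusually high counts to closer screening. The sketch below is only that heuristic, not Anthropic’s or any provider’s stated mitigation; the marker pattern and the threshold of 32 are assumptions, and the sample text is benign filler.

```python
import re

# Hypothetical marker pattern for dialogue turns embedded inside one message.
TURN_MARKER = re.compile(
    r"^[ \t]*(?:human|user|assistant|ai)[ \t]*:", re.IGNORECASE | re.MULTILINE
)

# Hypothetical threshold; a real system would tune this on its own traffic.
MAX_EMBEDDED_TURNS = 32


def count_embedded_turns(message: str) -> int:
    """Count lines in a single message that start like a dialogue turn."""
    return len(TURN_MARKER.findall(message))


def looks_many_shot(message: str, limit: int = MAX_EMBEDDED_TURNS) -> bool:
    """Flag messages embedding more faux dialogue turns than `limit`."""
    return count_embedded_turns(message) > limit


# Benign filler with the same shape as a many-shot prompt: 50 scripted exchanges.
faux = "\n".join(f"User: question {i}\nAssistant: answer {i}" for i in range(50))
print(count_embedded_turns(faux))            # 100
print(looks_many_shot(faux))                 # True
print(looks_many_shot("User: hello there"))  # False
```

Marker counting is trivially evaded by changing the labels, so it is a triage signal at most.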