If you’ve been investing in SEO for a while and are considering a website redesign or re-platform project, consult with an SEO familiar with site migrations early in your project.

Just last year, my agency partnered with a company in the fertility medicine space that lost an estimated $200,000 in revenue after its organic visibility all but vanished following a website redesign. This could have been avoided with SEO guidance and proper planning.

This article tackles a proven process for retaining SEO assets during a redesign. Learn about key failure points, deciding which URLs to keep, prioritizing them and using efficient tools.

Common causes of SEO declines after a website redesign

Here are a handful of factors that can wreak havoc on Google’s index and rankings of your website when not handled properly:

- Domain change.

- New URLs and missing 301 redirects.

- Page content (removal/additions).

- Removal of on-site keyword targeting (unintentional retargeting).

- Unintentional website performance changes (Core Web Vitals and page speed).

- Unintentionally blocking crawlers.

These elements are crucial as they impact indexability and keyword relevance. Additionally, I include a thorough audit of internal links, backlinks and keyword rankings, which are more nuanced in how they affect your performance but are important to consider nonetheless.

Domains, URLs and their role in your rankings

It is common for URLs to change during a website redesign. The key lies in creating proper 301 redirects. A 301 redirect tells Google that a page has permanently moved to a new URL.

For every URL that ceases to exist, causing a 404 error, you risk losing organic rankings and valuable traffic. Google doesn’t like ranking webpages that end in a “dead click.” There’s nothing worse than clicking on a Google result and landing on a 404.

The more you can do to retain your original URL structure and minimize the number of 301 redirects you need, the less likely your pages are to drop from Google’s index.

If you must change a URL, I suggest using Screaming Frog to crawl and catalog all the URLs on your website. This allows you to individually map old URLs to their new destinations. Most SEO tools or CMS platforms can import CSV files containing a list of redirects, so you’re not stuck adding them one by one.
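As a rough illustration of that mapping step, here is a minimal Python sketch that turns a crawled URL list and a hand-built old-to-new mapping into redirect rows ready for a CSV. The column names (`source`, `target`, `status`), the example.com URLs and the helper names are all assumptions made for illustration; check the import format your own SEO tool or CMS expects.

```python
import csv
import io
from urllib.parse import urlsplit


def normalize_path(url: str) -> str:
    """Reduce a full URL to its path, without a trailing slash."""
    path = urlsplit(url).path.rstrip("/")
    return path or "/"


def build_redirect_rows(old_urls, mapping):
    """Return (rows, unmapped).

    rows: (source, target, 301) tuples for URLs whose path changes.
    unmapped: old paths with no destination yet (future 404s).
    """
    rows, unmapped = [], []
    for url in old_urls:
        source = normalize_path(url)
        target = mapping.get(source)
        if target is None:
            unmapped.append(source)
        elif target != source:  # unchanged URLs need no redirect
            rows.append((source, target, 301))
    return rows, unmapped


def to_csv(rows) -> str:
    """Serialize redirect rows with a header (hypothetical column names)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["source", "target", "status"])
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical crawl export and mapping, for illustration only.
old_urls = [
    "https://example.com/services/",
    "https://example.com/about-us",
    "https://example.com/blog/old-post",
]
mapping = {
    "/services": "/what-we-do",
    "/about-us": "/about-us",
}

rows, unmapped = build_redirect_rows(old_urls, mapping)
print(to_csv(rows))
print("Needs a decision:", unmapped)
```

Any URL that lands in the unmapped list has no destination yet, so it would return a 404 after launch unless you map it or deliberately let it go.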

This is an extremely tedious portion of SEO asset retention, but it is the only surefire way to guarantee that Google will connect the dots between what is old and new.

In some cases, I actually suggest creating 404s to encourage Google to drop low-value pages from its index. A website redesign is a great time to clean house. I prefer websites to be lean and mean. Concentrating the SEO value across fewer URLs on a new website can actually lead to ranking improvements.

A less common occurrence is a change to your domain name. Say you want to change your website URL from “sitename.com” to “newsitename.com”. Though Google provides a way to communicate the change within Google Search Console via its Change of Address Tool, you still run the risk of losing performance if redirects aren’t set up properly.

I recommend avoiding a change in domain name at all costs. Even if everything goes off without a hitch, Google may have little to no history with the new domain name, essentially wiping the slate clean (in a bad way).

Webpage content and keyword targeting

Google’s index is primarily composed of content gathered from crawled websites, which is then processed by ranking systems to generate organic search results. Ranking depends heavily on the relevance of a page’s content to specific keyword phrases.

Website redesigns often entail restructuring and rewriting content, potentially leading to shifts in relevance and subsequent changes in rank positions. For example, a page initially optimized for “dog training services” may become more relevant to “pet behavioral assistance,” resulting in a decrease in its rank for the original phrase.

Sometimes, content changes are inevitable and may be much needed to improve a website’s overall effectiveness. However, consider that the more drastic the changes to your content, the more potential there is for volatility in your keyword rankings. You’ll likely lose some and gain others simply because Google must reevaluate your website’s new content altogether.

Metadata considerations

When website content changes, metadata often changes unintentionally with it. Elements like title tags, meta descriptions and alt text influence Google’s ability to understand the meaning of your page’s content.

I often refer to this as a page being “untargeted or retargeted.” When new word choices within headers, body or metadata on the new site inadvertently remove on-page SEO elements, keyword relevance changes and rankings fluctuate.

Web performance and Core Web Vitals

Many factors play into website performance, including your CMS or builder of choice and even design elements like image carousels and video embeds.

Today’s website builders offer a massive amount of flexibility and features, giving the average marketer the ability to produce an acceptable website. However, as the number of available features increases within your chosen platform, website performance typically decreases.

Finding the right platform to suit your needs while balancing Google’s performance metric standards can be a challenge.

I’ve had success with Duda, a cloud-hosted drag-and-drop builder, as well as Oxygen Builder, a lightweight WordPress builder.

Unintentionally blocking Google’s crawlers

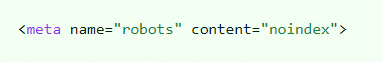

A common practice among web designers today is to create a staging environment that allows them to design, build and test your new website in a “live environment.”

To keep Googlebot from crawling and indexing the testing environment, you can block crawlers via a disallow directive in the robots.txt file. Alternatively, you can implement a noindex meta tag that instructs Googlebot not to index the content on the page.

As silly as it may seem, websites are launched all the time without removing these directives. Webmasters then wonder why their site immediately disappears from Google’s results.

This task is a must-check before your new site launches. If Google crawls these directives, your website will be removed from organic search.
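A pre-launch check for both directives can be sketched in Python using only the standard library. This is a minimal sketch, not a full audit: it works on text you have already fetched, the helper names `robots_blocks` and `has_noindex` are hypothetical, and it does not look at the `X-Robots-Tag` HTTP header, which can also carry a noindex.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def robots_blocks(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows `url` for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Flags a <meta name="robots"> or <meta name="googlebot"> noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {key: (value or "") for key, value in attrs}
        name = attributes.get("name", "").lower()
        content = attributes.get("content", "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the page HTML carries a noindex robots meta tag."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


# Leftover staging settings of the kind described above (illustrative).
staging_robots = "User-agent: *\nDisallow: /"
staging_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'

print(robots_blocks(staging_robots, "https://example.com/"))
print(has_noindex(staging_page))
```

If either check reports a block on a page you expect to rank, the staging configuration has most likely been carried over to the live site.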

In my mind, there are three major factors for determining what pages of your website constitute an “SEO asset” – links, traffic and top keyword rankings.

Any page receiving backlinks, regular organic traffic or ranking well for many phrases should be recreated on the new website as close to the original as possible. In certain instances, there will be pages that meet all three criteria.

Treat these like gold bars. Most often, you will have to decide how much traffic you’re OK with losing by removing certain pages. If those pages never contributed traffic to the site, your decision is much easier.

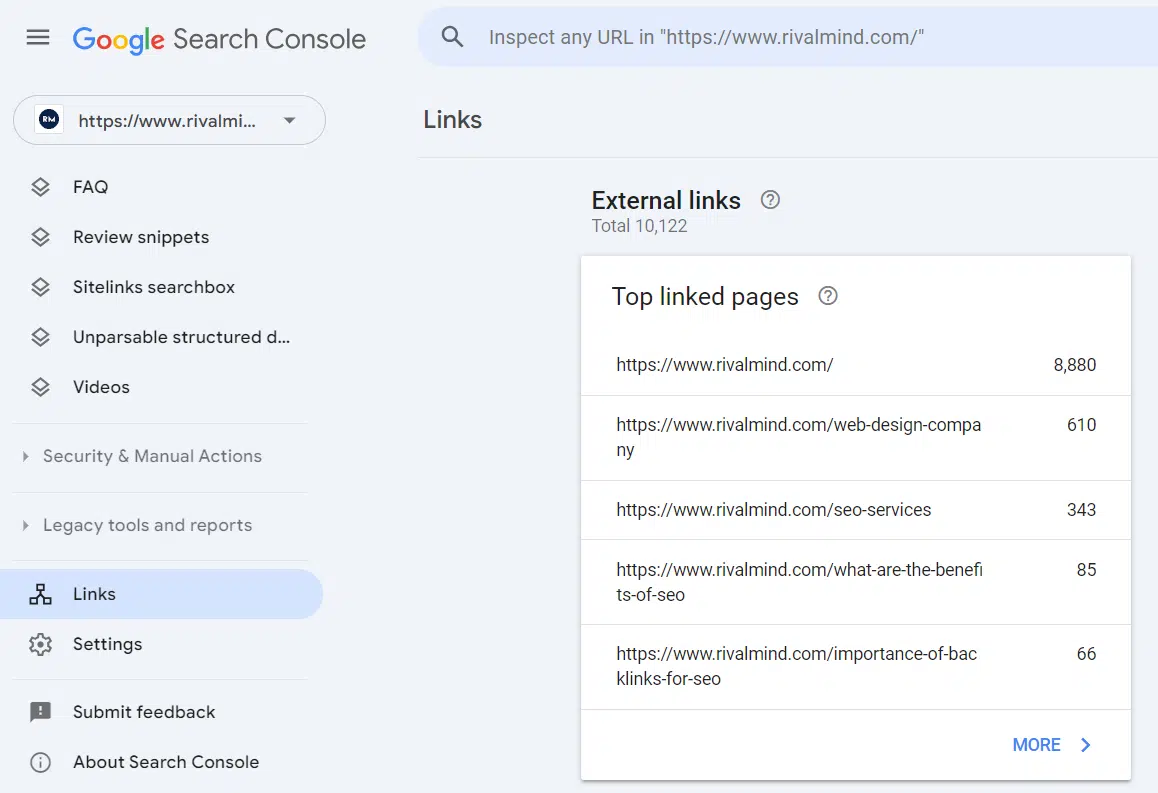

Here’s the short list of tools I use to audit large numbers of pages quickly. (Note that Google Search Console gathers data over time, so if possible, it should be set up and tracked months ahead of your project.)

Links (internal and external)

- Semrush (or another alternative with backlink audit capabilities)

- Google Search Console

- Screaming Frog (great for managing and tracking internal links to key pages)

Website traffic

Keyword rankings

- Semrush (or another alternative with keyword rank tracking)

- Google Search Console

Information architecture

- Octopus.do (lo-fi wireframing and sitemap planning)

How to identify SEO assets on your website

As mentioned above, I consider any webpage that currently receives backlinks, drives organic traffic or ranks well for many keywords an SEO asset – especially pages meeting all three criteria.

These are pages where your SEO equity is concentrated and should be transitioned to the new website with extreme care.

If you’re familiar with VLOOKUP in Excel or Google Sheets, this process should be relatively easy.

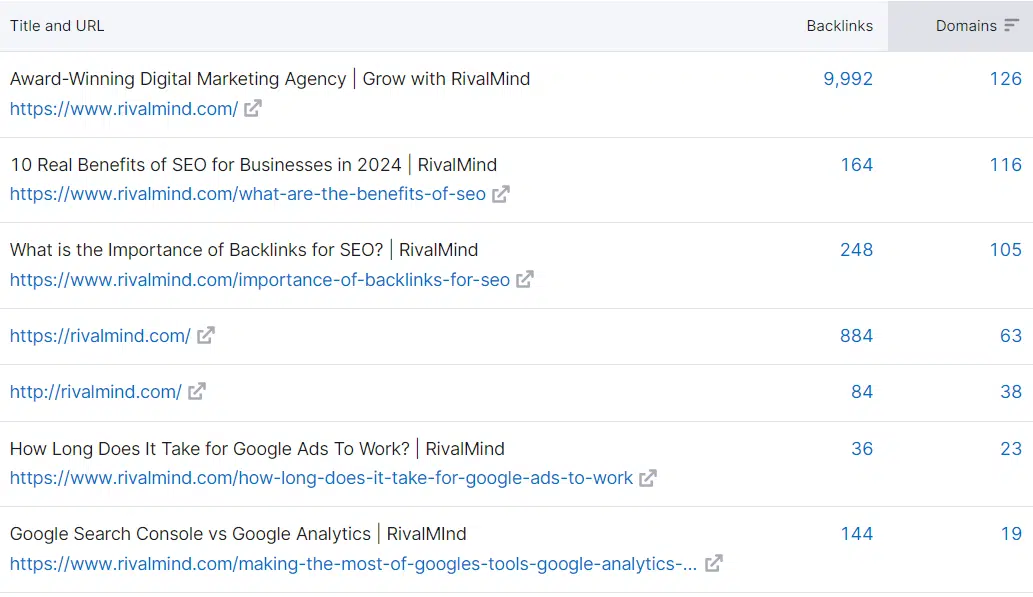

1. Find and catalog backlinked pages

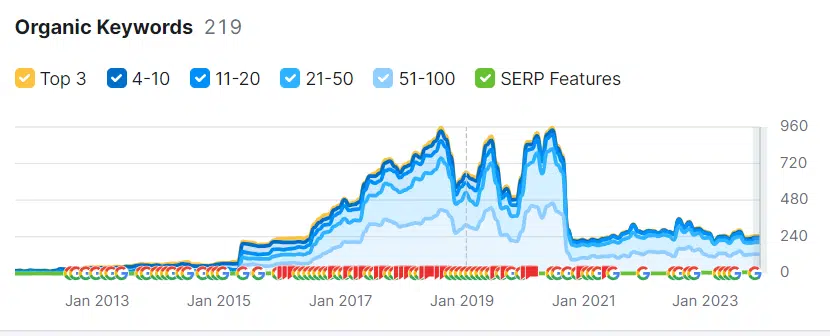

Begin by downloading a complete list of URLs and their backlink counts from your SEO tool of choice. In Semrush, you can use the Backlink Analytics tool to export a list of your top backlinked pages.

Because your SEO tool has a finite dataset, it’s always a smart idea to gather the same data from a different tool. This is why I set up Google Search Console in advance. We can pull the same data type from Google Search Console, giving us more data to review.

Now cross-reference your data, looking for additional pages missed by either tool, and remove any duplicates.

You can also sum up the number of links between the two datasets to see which pages have the most backlinks overall. This will help you prioritize which URLs have the most link equity across your site.
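If a spreadsheet lookup feels clumsy, the cross-reference, de-duplicate and sum steps can be sketched in a few lines of Python. The two dictionaries below stand in for exports from two tools; their contents, the URL paths and the function name `merge_backlink_counts` are made up for illustration.

```python
from collections import defaultdict


def merge_backlink_counts(*datasets):
    """Combine per-URL backlink counts from several exports.

    URLs that differ only by a trailing slash are treated as duplicates,
    and the result is sorted from most to fewest combined links.
    """
    totals = defaultdict(int)
    for dataset in datasets:
        for url, count in dataset.items():
            key = url.rstrip("/") or "/"
            totals[key] += count
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical exports: URL path -> backlink count reported by each tool.
tool_a = {"/services/": 12, "/blog/guide": 40}
tool_b = {"/services": 9, "/contact": 3}

for url, links in merge_backlink_counts(tool_a, tool_b):
    print(url, links)
```

Because both tools may report some of the same links, treat the combined figure as a rough ordering signal rather than an exact link count.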

Internal link value

Now that you know which pages are receiving the most links from external sources, consider cataloging which pages on your website have the highest concentration of internal links from other pages within your site.

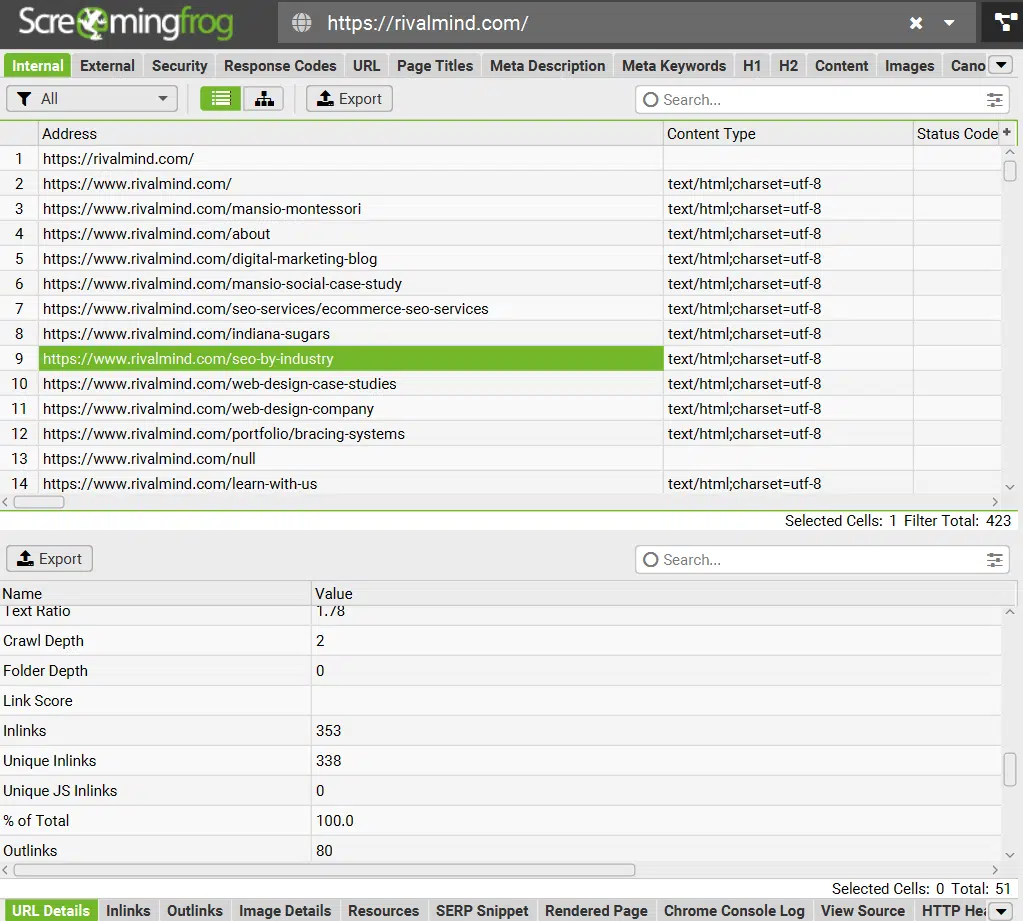

Pages with higher internal link counts also carry more equity, which contributes to their ability to rank. This information can be gathered from a Screaming Frog crawl in the URL Details or Inlinks report.

Consider what internal links you plan to use. Internal links are Google’s primary way of crawling through your website and carry link equity from page to page.

Removing internal links and altering your site’s crawlability can affect its ability to be indexed as a whole.
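To see which pages would lose internal links in a planned structure, one option is to compare inlink counts before and after. The sketch below assumes you can express each site version as a list of (linking page, linked page) pairs, for example from a crawl export and a planned sitemap; the sample pairs and function names are hypothetical.

```python
from collections import defaultdict


def count_inlinks(links):
    """Count how many distinct pages link to each target page."""
    sources = defaultdict(set)
    for source, target in links:
        if source != target:  # ignore self-links
            sources[target].add(source)
    return {target: len(pages) for target, pages in sources.items()}


def lost_inlinks(old_links, new_links):
    """Return {url: (old_count, new_count)} for pages with fewer inlinks."""
    old_counts = count_inlinks(old_links)
    new_counts = count_inlinks(new_links)
    return {
        url: (count, new_counts.get(url, 0))
        for url, count in old_counts.items()
        if new_counts.get(url, 0) < count
    }


# Hypothetical link pairs: (linking page, linked page).
current_site = [("/", "/services"), ("/blog", "/services"), ("/", "/blog")]
planned_site = [("/", "/services"), ("/", "/blog")]

print(lost_inlinks(current_site, planned_site))
```

Pages that show up here and also appear on your backlink or traffic lists are the ones worth relinking before launch.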

2. Catalog top organic traffic contributors

For this portion of the project, I deviate slightly from an “organic only” focus.

It’s important to remember that webpages draw traffic from many different channels, and just because something doesn’t drive oodles of organic visitors doesn’t mean it’s not a valuable destination for referral, social or even email visitors.

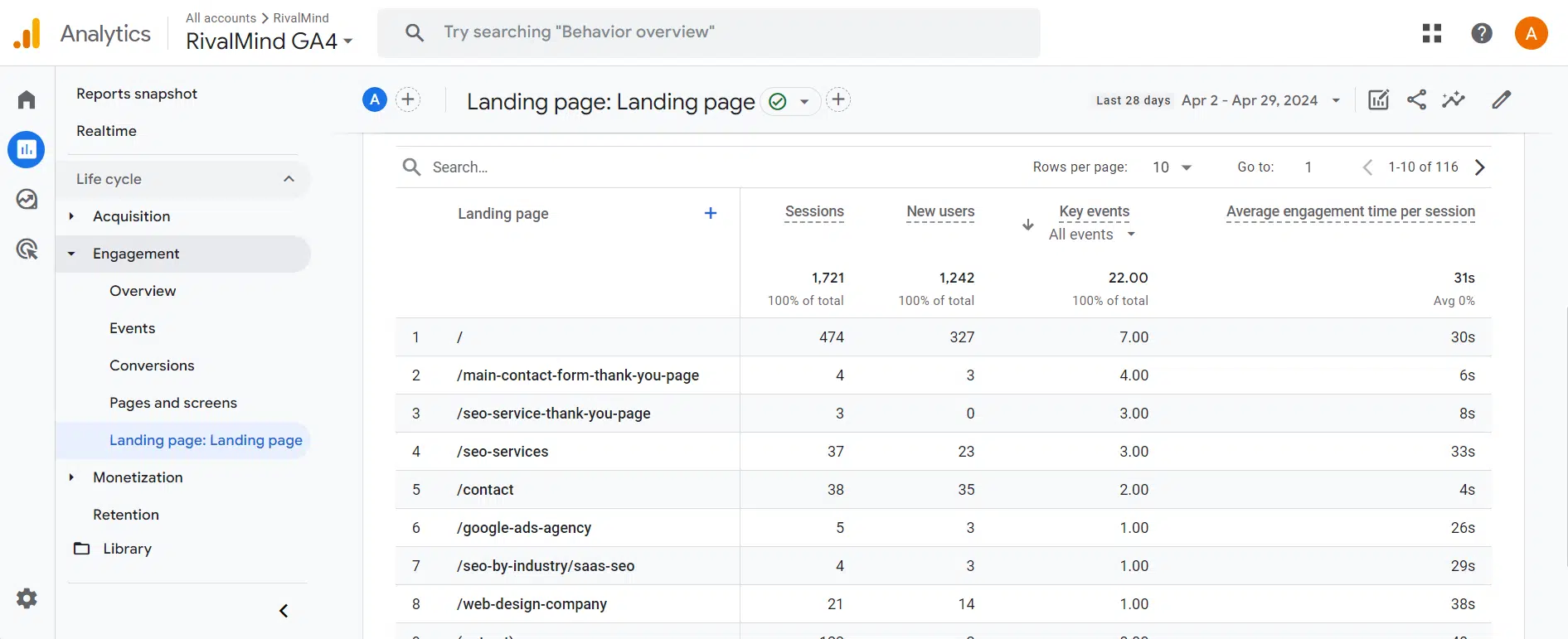

The Landing Pages report in Google Analytics 4 is a great way to see how many sessions began on a specific page. Access this by selecting Reports > Engagement > Landing Page.

These pages are responsible for drawing people to your website, whether it be organically or through another channel.

Depending on how many monthly visitors your website attracts, consider increasing your date range to have a larger dataset to examine.

I usually review all landing page data from the prior 12 months and exclude any new pages implemented as a result of an ongoing SEO strategy. These should be carried over to your new website regardless.

To granularize your data, feel free to implement a Session Source filter for Organic Search to see only organic sessions from search engines.

3. Catalog pages with top rankings

This final step is somewhat superfluous, but I’m a stickler for seeing the complete picture when it comes to understanding what pages hold SEO value.

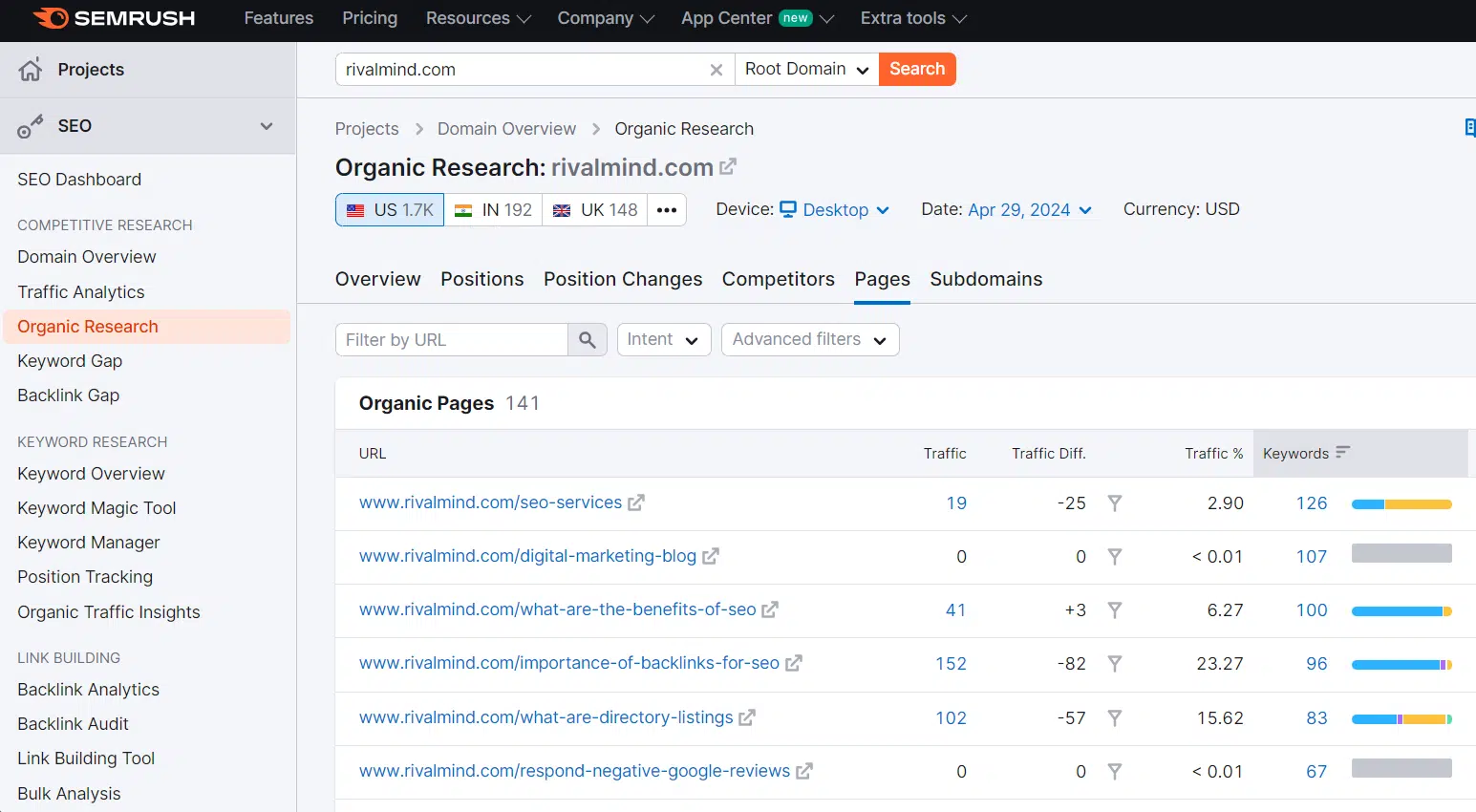

Semrush allows you to easily gather a spreadsheet of your webpages that have keyword rankings in the top 20 positions on Google. I consider rankings in position 20 or better very valuable because they usually require less effort to improve than keyword rankings in a worse position.

Use the Organic Research tool and select Pages. From here you can export a list of your URLs with keyword rankings in the top 20.

By combining this data with your top backlinks and top traffic drivers, you have a complete list of URLs that meet one or more criteria to be considered an SEO asset.

I then prioritize URLs that meet all three criteria first, followed by URLs that meet two and finally, URLs that meet just one of the criteria.

By adjusting thresholds for the number of backlinks, minimum monthly traffic and keyword rank position, you can change how strict the criteria are for which pages you truly consider to be an SEO asset.
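The three-criteria prioritization with adjustable thresholds can be sketched as follows. Only the top-20 rank cutoff comes from the text above; the default backlink and session thresholds, the `PageStats` fields and the sample pages are assumptions to adapt to your own data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageStats:
    url: str
    backlinks: int = 0
    monthly_sessions: int = 0
    best_rank: Optional[int] = None  # best keyword position, None if unranked


def criteria_met(page, min_backlinks=1, min_sessions=50, max_rank=20):
    """Count how many of the three asset criteria a page satisfies (0 to 3)."""
    return sum([
        page.backlinks >= min_backlinks,
        page.monthly_sessions >= min_sessions,
        page.best_rank is not None and page.best_rank <= max_rank,
    ])


def prioritize(pages, **thresholds):
    """Return (score, url) pairs for SEO assets, highest score first."""
    scored = [(criteria_met(page, **thresholds), page.url) for page in pages]
    assets = [item for item in scored if item[0] > 0]
    return sorted(assets, key=lambda item: -item[0])


# Hypothetical pages combining the three exports.
pages = [
    PageStats("/blog/guide", backlinks=4, monthly_sessions=10),
    PageStats("/services", backlinks=15, monthly_sessions=400, best_rank=3),
    PageStats("/about", monthly_sessions=120, best_rank=18),
    PageStats("/old-promo", monthly_sessions=2, best_rank=45),
]

for score, url in prioritize(pages):
    print(score, url)
```

Raising `min_backlinks` or `min_sessions`, or lowering `max_rank`, shrinks the asset list; pages that score zero are candidates for the clean-house treatment described earlier.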

A rule of thumb to follow: Highest priority pages should be modified as little as possible, to preserve as much of the original SEO value as you can.

Seamlessly transition your SEO assets during a website redesign

SEO success in a website redesign project boils down to planning. Strategize your new website around the assets you already have; don’t try to shoehorn assets into a new design.

Even with all the boxes checked, there’s no guarantee you’ll mitigate rankings and traffic loss.

Don’t inherently trust your web designer when they say it will all be fine. Create the plan yourself or find someone who can do this for you. The opportunity cost of poor planning is simply too great.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.