We’re excited to introduce Phi-3, a family of open AI models developed by Microsoft. Phi-3 models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks. This release expands the selection of high-quality models for customers, offering more practical choices as they compose and build generative AI applications.

Starting today, Phi-3-mini, a 3.8B language model, is available on Microsoft Azure AI Studio, Hugging Face, and Ollama.

- Phi-3-mini is available in two context-length variants: 4K and 128K tokens. It’s the first model in its class to support a context window of up to 128K tokens, with little impact on quality.

- It’s instruction-tuned, meaning that it’s trained to follow different types of instructions reflecting how people normally communicate. This ensures the model is ready to use out-of-the-box.

- It’s available on Azure AI to take advantage of the deploy-eval-finetune toolchain, and is available on Ollama for developers to run locally on their laptops.

- It has been optimized for ONNX Runtime with support for Windows DirectML along with cross-platform support across graphics processing unit (GPU), CPU, and even mobile hardware.

- It is also available as an NVIDIA NIM microservice with a standard API interface that can be deployed anywhere, and it has been optimized for NVIDIA GPUs.
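As a minimal sketch of the local workflow mentioned in the list above, the following Python builds a chat request for a locally running Ollama server. It assumes Ollama's default address (`http://localhost:11434`), its `/api/chat` endpoint, and the model tag `phi3`; verify all three against your own installation. The helper names `build_chat_request` and `send` are hypothetical and chosen only for illustration.

```python
import json
import urllib.request

# Assumptions (not stated in this post): default local Ollama endpoint and model tag.
OLLAMA_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "phi3"


def build_chat_request(user_message, system_message=None):
    """Build (but do not send) an HTTP request for a single-turn chat."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": user_message})
    # "stream": False asks for one complete JSON response instead of chunks.
    payload = {"model": MODEL_TAG, "messages": messages, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request to the local server and return the reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["message"]["content"]
```

With an Ollama server running and the model pulled, `send(build_chat_request("Explain what a small language model is."))` would return the model's reply as a string. Because the model is instruction-tuned, a plain natural-language request like this should need no special prompt scaffolding.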

In the coming weeks, additional models will be added to the Phi-3 family to offer customers even more flexibility across the quality-cost curve. Phi-3-small (7B) and Phi-3-medium (14B) will be available in the Azure AI model catalog and other model gardens shortly.

Microsoft continues to offer the best models across the quality-cost curve, and today’s Phi-3 release expands the selection of models with state-of-the-art small models.

Groundbreaking performance at a small size

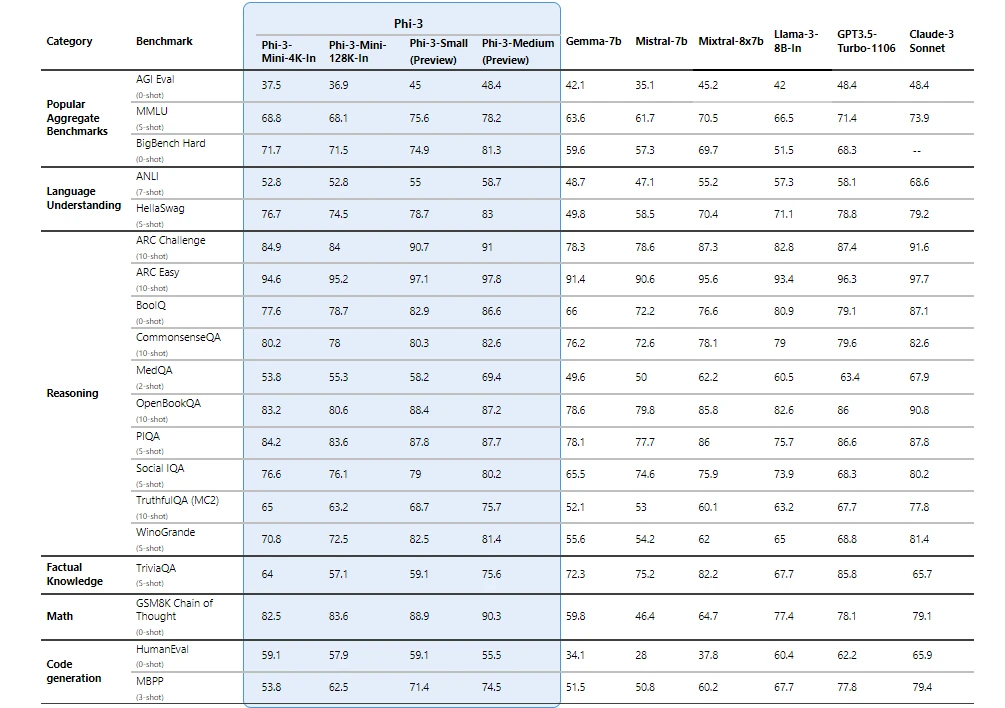

Phi-3 models significantly outperform language models of the same and larger sizes on key benchmarks (see the benchmark numbers in the figure; higher is better). Phi-3-mini does better than models twice its size, and Phi-3-small and Phi-3-medium outperform much larger models, including GPT-3.5T.

All reported numbers are produced with the same pipeline to ensure that they are comparable. As a result, these numbers may differ from other published numbers due to slight differences in the evaluation methodology. More details on benchmarks are provided in our technical paper.

Note: Phi-3 models do not perform as well on factual knowledge benchmarks (such as TriviaQA) because the smaller model size results in less capacity to retain facts.

Safety-first model design

Phi-3 models were developed in accordance with the Microsoft Responsible AI Standard, which is a company-wide set of requirements based on the following six principles: accountability, transparency, fairness, reliability and safety, privacy and security, and inclusiveness. Phi-3 models underwent rigorous safety measurement and evaluation, red-teaming, sensitive use review, and adherence to security guidance to help ensure that these models are responsibly developed, tested, and deployed in alignment with Microsoft’s standards and best practices.

Building on our prior work with Phi models (“Textbooks Are All You Need”), Phi-3 models are also trained using high-quality data. They were further improved with extensive safety post-training, including reinforcement learning from human feedback (RLHF), automated testing and evaluations across dozens of harm categories, and manual red-teaming. Our approach to safety training and evaluations is detailed in our technical paper, and we outline recommended uses and limitations in the model cards. See the model card collection.

Unlocking new capabilities

Microsoft’s experience shipping copilots and enabling customers to transform their businesses with generative AI using Azure AI has highlighted the growing need for different-size models across the quality-cost curve for different tasks. Small language models, like Phi-3, are especially great for:

- Resource-constrained environments, including on-device and offline inference scenarios.

- Latency-bound scenarios where fast response times are critical.

- Cost-constrained use cases, particularly those with simpler tasks.

For more on small language models, see our Microsoft Source Blog.

Thanks to their smaller size, Phi-3 models can be used in compute-limited inference environments. Phi-3-mini, in particular, can be used on-device, especially when further optimized with ONNX Runtime for cross-platform availability. The smaller size of Phi-3 models also makes fine-tuning or customization easier and more affordable. In addition, their lower computational needs make them a lower-cost option with much better latency. The longer context window enables taking in and reasoning over large text content such as documents, web pages, and code. Phi-3-mini demonstrates strong reasoning and logic capabilities, making it a good candidate for analytical tasks.
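To make the context-window trade-off concrete, here is a rough sketch of how an application might pick between the 4K and 128K variants for a given document. The token limits (4,096 and 131,072), the four-characters-per-token estimate, and the function names are all illustrative assumptions; a real application should count tokens with the model's own tokenizer.

```python
# Assumed token limits for the two Phi-3-mini context-length variants.
CONTEXT_VARIANTS = {"4k": 4 * 1024, "128k": 128 * 1024}

# Crude heuristic for English text; not the model's real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Estimate the token count by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def choose_variant(document, reserved_for_output=512):
    """Return the smallest variant that fits the document plus reply budget.

    Returns None when even the largest variant is too small, in which case
    the document would need to be split or summarized first.
    """
    needed = estimate_tokens(document) + reserved_for_output
    for name, limit in sorted(CONTEXT_VARIANTS.items(), key=lambda kv: kv[1]):
        if needed <= limit:
            return name
    return None
```

Preferring the smallest variant that fits is a design choice made for this sketch, not guidance from the post; the idea is simply that short prompts do not need the long-context model.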

Customers are already building solutions with Phi-3. One example where Phi-3 is already demonstrating value is in agriculture, where internet access might not be readily available. Powerful small models like Phi-3, along with Microsoft copilot templates, are available to farmers at the point of need and provide the additional benefit of running at reduced cost, making AI technologies even more accessible.

ITC, a leading business conglomerate based in India, is leveraging Phi-3 as part of their continued collaboration with Microsoft on the copilot for Krishi Mitra, a farmer-facing app that reaches over a million farmers.

“Our goal with the Krishi Mitra copilot is to improve efficiency while maintaining the accuracy of a large language model. We’re excited to partner with Microsoft on using fine-tuned versions of Phi-3 to meet both our goals: efficiency and accuracy!”

Saif Naik, Head of Technology, ITCMAARS

Originating in Microsoft Research, Phi models have been broadly used, with Phi-2 downloaded over 2 million times. The Phi series of models has achieved remarkable performance with strategic data curation and innovative scaling. The series progressed from Phi-1, a model used for Python coding, to Phi-1.5, which enhanced reasoning and understanding, and then to Phi-2, a 2.7 billion-parameter model outperforming those up to 25 times its size in language comprehension.1 Each iteration has leveraged high-quality training data and knowledge transfer techniques to challenge conventional scaling laws.

Get started today

To experience Phi-3 for yourself, start by playing with the model on Azure AI Playground. You can also find the model on the Hugging Chat playground. Start building with and customizing Phi-3 for your scenarios using Azure AI Studio. Join us to learn more about Phi-3 during a special live stream of the AI Show.

1 Microsoft Research Blog, Phi-2: The surprising power of small language models, December 12, 2023.