Cybersecurity researchers have discovered a novel attack that uses stolen cloud credentials to target cloud-hosted large language model (LLM) services, with the goal of selling access to other threat actors.

The attack technique has been codenamed LLMjacking by the Sysdig Threat Research Team.

“Once initial access was obtained, they exfiltrated cloud credentials and gained access to the cloud environment, where they attempted to access local LLM models hosted by cloud providers,” security researcher Alessandro Brucato said. “In this instance, a local Claude (v2/v3) LLM model from Anthropic was targeted.”

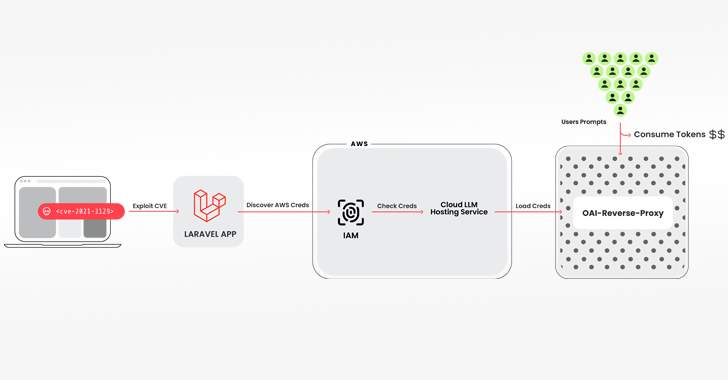

The intrusion pathway used to pull off the scheme involves breaching a system running a vulnerable version of the Laravel Framework (e.g., CVE-2021-3129), followed by getting hold of Amazon Web Services (AWS) credentials to access the LLM services.

Among the tools used is an open-source Python script that checks and validates keys for various offerings from Anthropic, AWS Bedrock, Google Cloud Vertex AI, Mistral, and OpenAI, among others.

“No legitimate LLM queries were actually run during the verification phase,” Brucato explained. “Instead, just enough was done to figure out what the credentials were capable of and any quotas.”

The keychecker also integrates with another open-source tool called oai-reverse-proxy, which functions as a reverse proxy server for LLM APIs. This indicates that the threat actors are likely providing access to the compromised accounts without actually exposing the underlying credentials.

“If the attackers were gathering an inventory of useful credentials and wanted to sell access to the available LLM models, a reverse proxy like this could allow them to monetize their efforts,” Brucato said.

Furthermore, the attackers have been observed querying logging settings in a likely attempt to sidestep detection when using the compromised credentials to run their prompts.

The development is a departure from attacks that focus on prompt injection and model poisoning. Instead, it allows attackers to monetize their access to the LLMs while the owner of the cloud account foots the bill without their knowledge or consent.

Sysdig said that an attack of this kind could rack up over $46,000 in LLM consumption costs per day for the victim.

“The use of LLM services can be expensive, depending on the model and the amount of tokens being fed to it,” Brucato said. “By maximizing the quota limits, attackers can also block the compromised organization from using models legitimately, disrupting business operations.”
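To make the cost reasoning concrete, the following is a minimal back-of-the-envelope sketch in Python. It is not Sysdig's calculation: the function name `estimate_daily_cost` and every input value (request rate, tokens per request, price per 1,000 tokens) are hypothetical placeholders chosen only to show how request volume and token counts multiply into a daily bill.

```python
def estimate_daily_cost(requests_per_minute, tokens_per_request, price_per_1k_tokens):
    """Estimate daily LLM spend in dollars if a quota is saturated around the clock.

    All three inputs are assumptions supplied by the caller; real pricing
    depends on the provider, the model, and the input/output token split.
    """
    requests_per_day = requests_per_minute * 60 * 24
    tokens_per_day = requests_per_day * tokens_per_request
    return tokens_per_day / 1000 * price_per_1k_tokens


# Hypothetical inputs, NOT figures from the Sysdig report.
daily_cost = estimate_daily_cost(
    requests_per_minute=100,
    tokens_per_request=10_000,
    price_per_1k_tokens=0.02,
)
print(f"Estimated daily cost: ${daily_cost:,.0f}")
```

Because the three factors multiply, an attacker who pushes request rate and prompt size to the quota limit drives the bill up quickly, which is the dynamic Brucato describes.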

Organizations are advised to enable detailed logging and monitor cloud logs for suspicious or unauthorized activity, and to ensure that effective vulnerability management processes are in place to prevent initial access.
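As an illustration of what such monitoring might look like, here is a minimal Python sketch that filters already-exported AWS CloudTrail records for Amazon Bedrock calls made by principals outside an allowlist. It is one possible approach under stated assumptions, not a method from the Sysdig report: the watchlist of event names, the `flag_bedrock_events` helper, the allowlist, and the sample records (including the ARNs and IP addresses) are all hypothetical, and a real deployment would read records from CloudTrail or a SIEM rather than an in-memory list.

```python
from collections import Counter

# Bedrock API calls worth a second look. This watchlist is an assumption;
# tune it to your environment.
WATCHED_EVENTS = {
    "GetModelInvocationLoggingConfiguration",     # reads the logging settings
    "DeleteModelInvocationLoggingConfiguration",  # turns invocation logging off
    "InvokeModel",
    "InvokeModelWithResponseStream",
}


def flag_bedrock_events(records, known_principals):
    """Return records that call watched Bedrock APIs from unknown principals."""
    flagged = []
    for record in records:
        if record.get("eventSource") != "bedrock.amazonaws.com":
            continue
        if record.get("eventName") not in WATCHED_EVENTS:
            continue
        arn = record.get("userIdentity", {}).get("arn", "unknown")
        if arn not in known_principals:
            flagged.append(record)
    return flagged


def summarize(flagged):
    """Count flagged calls per (principal ARN, event name) pair."""
    return Counter(
        (r.get("userIdentity", {}).get("arn", "unknown"), r["eventName"])
        for r in flagged
    )


# Hypothetical sample records using standard CloudTrail field names.
sample_records = [
    {"eventSource": "bedrock.amazonaws.com",
     "eventName": "GetModelInvocationLoggingConfiguration",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/web-app"},
     "sourceIPAddress": "203.0.113.7"},
    {"eventSource": "bedrock.amazonaws.com",
     "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:role/ml-team"},
     "sourceIPAddress": "198.51.100.4"},
    {"eventSource": "s3.amazonaws.com",
     "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/web-app"},
     "sourceIPAddress": "203.0.113.7"},
]

# Principals expected to use Bedrock (hypothetical allowlist).
allowlist = {"arn:aws:iam::111122223333:role/ml-team"}

flagged = flag_bedrock_events(sample_records, allowlist)
for (arn, event_name), count in summarize(flagged).items():
    print(f"{arn} called {event_name} {count} time(s)")
```

An allowlist keeps the check simple but needs maintenance as teams change. A web application's credentials suddenly reading Bedrock logging settings, as in the first sample record, is the kind of event worth reviewing.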