Data breaches may seem like a dime a dozen, but this week brought something a little bit different: news of a massive breach of 26 billion records, by far the largest ever recorded. The fact that news of the Mother of All Breaches (MOAB) broke during Data Privacy Week highlights both the importance and the challenge of keeping private data private in a super-connected world.

Word of the MOAB came out of Cybernews, an online cybersecurity publication based in Lithuania. In a story posted January 24, Vilius Petkauskas, a deputy editor with the publication, described how Cybernews worked with Bob Dyachenko, a cybersecurity researcher and owner of SecurityDiscovery.com, to uncover the breach.

The MOAB reportedly spans 12 TB across 3,800 folders, which were left unprotected on the Internet. The data appears to consist of previously compromised records, and there does not appear to be any newly compromised data, Petkauskas writes. The haul includes data from millions of people's LinkedIn, Twitter, Weibo, and Tencent accounts, among others.

The MOAB also sets the bar for a particular type of breach, dubbed a compilation of multiple breaches, or COMB. The researchers found “thousands of meticulously compiled and reindexed leaks, breaches, and privately sold databases,” Petkauskas writes. The fact that the data was previously disclosed doesn’t make it any less significant.

“The dataset is extremely dangerous as threat actors could leverage the aggregated data for a wide range of attacks, including identity theft, sophisticated phishing schemes, targeted cyberattacks, and unauthorized access to personal and sensitive accounts,” Petkauskas quotes the researchers as saying.

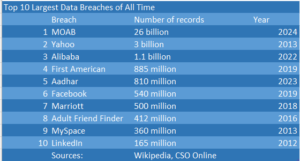

The MOAB dwarfs previous data breaches in size. It’s nearly 10 times bigger than the data breaches that affected Yahoo customers in 2013, which the company didn’t disclose until years later.

The MOAB also caught the attention of data security professionals, including Doriel Abrahams, the principal technologist at Forter.

“Although the common assumption with this leak is that there’s nothing ‘new,’ this COMB is extremely valuable for bad actors,” Abrahams says. “Since they can leverage this data to check whether users have similar or identical passwords across multiple platforms, they can attempt ATOs [account takeovers] on other sites that are not part of the current leak. Knowing which platforms users frequent is a superpower for social engineering scammers. They can be more targeted and, ultimately, more effective.”

Richard Bird, Chief Security Officer at Traceable AI, questioned whether the new breach would spur companies and governments to take data security more seriously.

“Maybe it finally takes something like a MOAB to get the US Government and the companies that operate within its borders to wake the heck up,” Bird says. “We live in a nation with no national data privacy laws, no incentives for companies to be protectors of the data they’re entrusted with, and no disincentives that seem to work. Companies will continue to trash the lives of their own customers by failing to protect the data associated with them and feel no pain for their failures. A list like this will only create more victims who must sort out the damage done to them on their own, with no consequences for the companies that gave that data away in the first place.”

As data breaches become more commonplace, there’s a risk that companies and individuals will grow more blasé about them. That could mean bigger breaches, more sensitive data being exposed, or both. For instance, 23andMe recently announced that hackers had obtained information about 6.9 million users who opted into its DNA Relatives feature.

Data Privacy Week is a great reminder that the onus for safeguarding customers’ personal data is on the companies that collect, use and share it, says Jennifer Mahoney, manager of data governance, privacy and security at Optiv.

“Companies have a responsibility to protect consumers, secure their data and do right by them morally, ethically and legally,” she writes. “Handling data privacy the right way drives consumer trust and builds long-lasting relationships.”

Technology innovation often outpaces legislation and regulation, Mahoney says. But that doesn’t mean organizations should wait for local, state, or federal laws to tell them how to handle data privacy. “They need to act now,” she says.

Artificial intelligence has surged in popularity thanks to new generative language models like GPT-4. However, GenAI raises the risk of data being misused, says Mark Sangster, vice president and chief of strategy at Adlumin.

“Fundamental security practices should become the outer shield, with a specific focus on data and the resulting obligations,” Sangster says. “When it comes to artificial intelligence, companies need to protect data lakes and build policies and procedures to ensure private data doesn’t mistakenly leak into data sets for large learning models that can easily expose confidential and potentially damaging information.”

It’s too easy to put a piece of data into a large language model without thinking about the potential harms downstream, says Jeff Barker, vice president of product at Synack.

“As people look for shortcuts to do everything from writing emails to diagnosing patients, AI apps can now double as repositories of highly personal data,” Barker says. “Even if they don’t hold personal data from the outset, LLMs can still be poisoned through poor app security, resulting in a user sharing personal information with the adversary.”

GenAI poses a particular threat to data privacy, but there are many others, including the volume and quality of the data being stored, says Steve Stone, head of Rubrik Zero Labs. For instance, the typical organization’s data has grown 42% in just the last 18 months, reaching an average of 24 million sensitive records, he says.

“Breaches often compromise the holy trinity of sensitive data: personally identifiable information, financial records, and login credentials,” Stone says. “As long as these lucrative data types remain decentralized across various clouds, endpoints and systems that aren’t properly monitored, they will continue to attract and reward increasingly sophisticated attackers.”

Biometric data, such as fingerprints and face scans, is sometimes touted as a key enabler of stronger security. But biometric data brings its own baggage as a particularly sensitive form of data, says Viktoria Ruubel, Managing Director of Digital Identity at Veriff.

“As consumers and employees, we have all seen or experienced biometric technology in action,” Ruubel says. “In business settings, face scans can grant entry to controlled-access areas or even the office. However, while these tools have made identity verification easier and reduced some of the friction of identification and authentication, there’s growing concern around biometric data and privacy: biometric data is unique to each individual and permanent, making it one of the most personal forms of identification available.”

Data Privacy Day comes only once a year. But to truly enable data privacy, we must work at it every day, says Ajay Bhatia, global vice president and general manager of data compliance and governance at Veritas Technologies. “It’s a constant process that requires vigilance 24/7/365,” Bhatia says.
