An offshoot of the Linux Foundation today introduced the Open Platform for Enterprise AI (OPEA), a new community project intended to drive open source innovation in data and AI. A particular focus of OPEA will be creating open standards around retrieval augmented generation (RAG), which the group says possesses the capacity “to unlock significant value from existing data repositories.”

The OPEA was created by LF AI & Data Foundation, the Linux Foundation offshoot founded in 2018 to facilitate the development of vendor-neutral, open source AI and data technologies. OPEA fits right into that paradigm with a goal of facilitating the development of flexible, scalable, and open source generative AI technology, particularly around RAG.

RAG is an emerging technique that brings outside data to bear on large language models (LLMs) and other generative AI models. Instead of relying entirely on pre-trained LLMs that are prone to making things up, RAG helps steer the AI model toward providing relevant and contextual answers. It’s viewed as a less risky and less time-intensive alternative to training one’s own LLM or sharing sensitive data directly with LLMs like GPT-4.
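To make the idea concrete, here is a minimal sketch of the retrieve-then-prompt pattern in plain Python. It is an illustration only, not OPEA code: the function names, the sample documents, and the bag-of-words similarity are all assumptions chosen for brevity. A production system would typically use learned embeddings and a vector database, then send the resulting prompt to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Prepend the retrieved passages to the question as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


# Hypothetical private documents the base model was never trained on.
DOCUMENTS = [
    "The refund window for hardware orders is 30 days from delivery.",
    "Support tickets are answered within one business day.",
    "Office hours are 9am to 5pm on weekdays.",
]

question = "How many days is the refund window?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)  # this prompt, not the bare question, would be sent to the LLM
```

The point of the pattern is that the private documents stay in the retrieval layer; only the passages relevant to a given question are placed in front of the model.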

While GenAI and RAG techniques have emerged quickly, that rapid emergence has also led to “a fragmentation of tools, techniques, and solutions,” the LF AI & Data Foundation says. The group intends to address that fragmentation by working with the industry “to create standardized components including frameworks, architecture blueprints and reference solutions that showcase performance, interoperability, trustworthiness and enterprise-grade readiness.”

The emergence of RAG tools and techniques will be a central focus for OPEA in its quest to create standardized tools and frameworks, says Ibrahim Haddad, the executive director of LF AI & Data.

“We’re thrilled to welcome OPEA to LF AI & Data with the promise to offer open source, standardized, modular and heterogenous [RAG] pipelines for enterprises with a focus on open model development, hardened and optimized support of various compilers and toolchains,” Haddad said in a press release posted to the LF AI & Data website.

“OPEA will unlock new possibilities in AI by creating a detailed, composable framework that stands at the forefront of technology stacks,” Haddad continued. “This initiative is a testament to our mission to drive open source innovation and collaboration across the AI and data communities under a neutral and open governance model.”

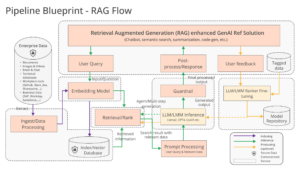

OPEA already has a blueprint for a RAG solution that’s made up of composable building blocks, including data stores, LLMs, and prompt engines. You can see more on the OPEA website at opea.dev.
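What “composable” could mean in practice is sketched below. This is not OPEA’s blueprint or API; every name here (`RagPipeline`, `keyword_store`, `simple_prompt`, `echo_llm`) is hypothetical. It only shows how a data store, a prompt engine, and an LLM can sit behind small interfaces so that any one of them can be swapped without touching the others.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical component signatures; none of these names come from OPEA.
DataStore = Callable[[str], List[str]]          # question -> relevant passages
PromptEngine = Callable[[str, List[str]], str]  # question, passages -> prompt
LLM = Callable[[str], str]                      # prompt -> answer


@dataclass
class RagPipeline:
    """Chains three independently replaceable building blocks."""
    store: DataStore
    prompt_engine: PromptEngine
    llm: LLM

    def answer(self, question: str) -> str:
        passages = self.store(question)
        prompt = self.prompt_engine(question, passages)
        return self.llm(prompt)


def keyword_store(question: str) -> List[str]:
    """Toy data store: returns facts whose key appears in the question."""
    facts = {"opea": "OPEA is hosted by LF AI & Data."}
    return [text for key, text in facts.items() if key in question.lower()]


def simple_prompt(question: str, passages: List[str]) -> str:
    """Toy prompt engine: context on the first line, question on the second."""
    return "Context: " + " ".join(passages) + "\nQuestion: " + question


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: returns the prompt's first line."""
    return prompt.splitlines()[0]


pipeline = RagPipeline(keyword_store, simple_prompt, echo_llm)
print(pipeline.answer("Who hosts OPEA?"))
```

Replacing `echo_llm` with a call to a hosted or local model, or `keyword_store` with a vector database lookup, would leave the rest of the pipeline unchanged, which is the kind of interchangeability a standardized blueprint aims for.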

There’s a familiar cast of vendors joining OPEA as founding members, including: Anyscale, Cloudera, DataStax, Domino Data Lab, Hugging Face, Intel, KX, MariaDB Foundation, Minio, Qdrant, Red Hat, SAS, VMware, Yellowbrick Data, and Zilliz, among others.

“The OPEA initiative is crucial for the future of AI development,” says Minio CEO and cofounder AB Periasamy. “The AI data infrastructure must also be built on these open principles. Only by having open source and open standard solutions, from models to infrastructure and down to the data can we create trust, ensure transparency, and promote accountability.”

“We see huge opportunities for core MariaDB users–and users of the related MySQL Server–to build RAG solutions,” said Kaj Arnö, CEO of MariaDB Foundation. “It’s logical to keep the source data, the AI vector data, and the output data in one and the same RDBMS. The OPEA community, as part of LF AI & Data, is an obvious entity to simplify Enterprise GenAI adoption.”

“The power of RAG is undeniable, and its integration into gen AI creates a ballast of truth that enables businesses to confidently tap into their data and use it to grow their business,” said Michael Gilfix, Chief Product and Engineering Officer at KX.

“OPEA, with the support of the broader community, will address critical pain points of RAG adoption and scale today,” said Melissa Evers, Intel’s vice president of software engineering group and general manager of strategy to execution. “It will also define a platform for the next phases of developer innovation that harnesses the potential value generative AI can bring to enterprises and all our lives.”

Related Items:

Vectara Spies RAG As Solution to LLM Fibs and Shannon Theorem Limitations

DataStax Acquires Langflow to Accelerate GenAI App Development

What’s Holding Up the ROI for GenAI?