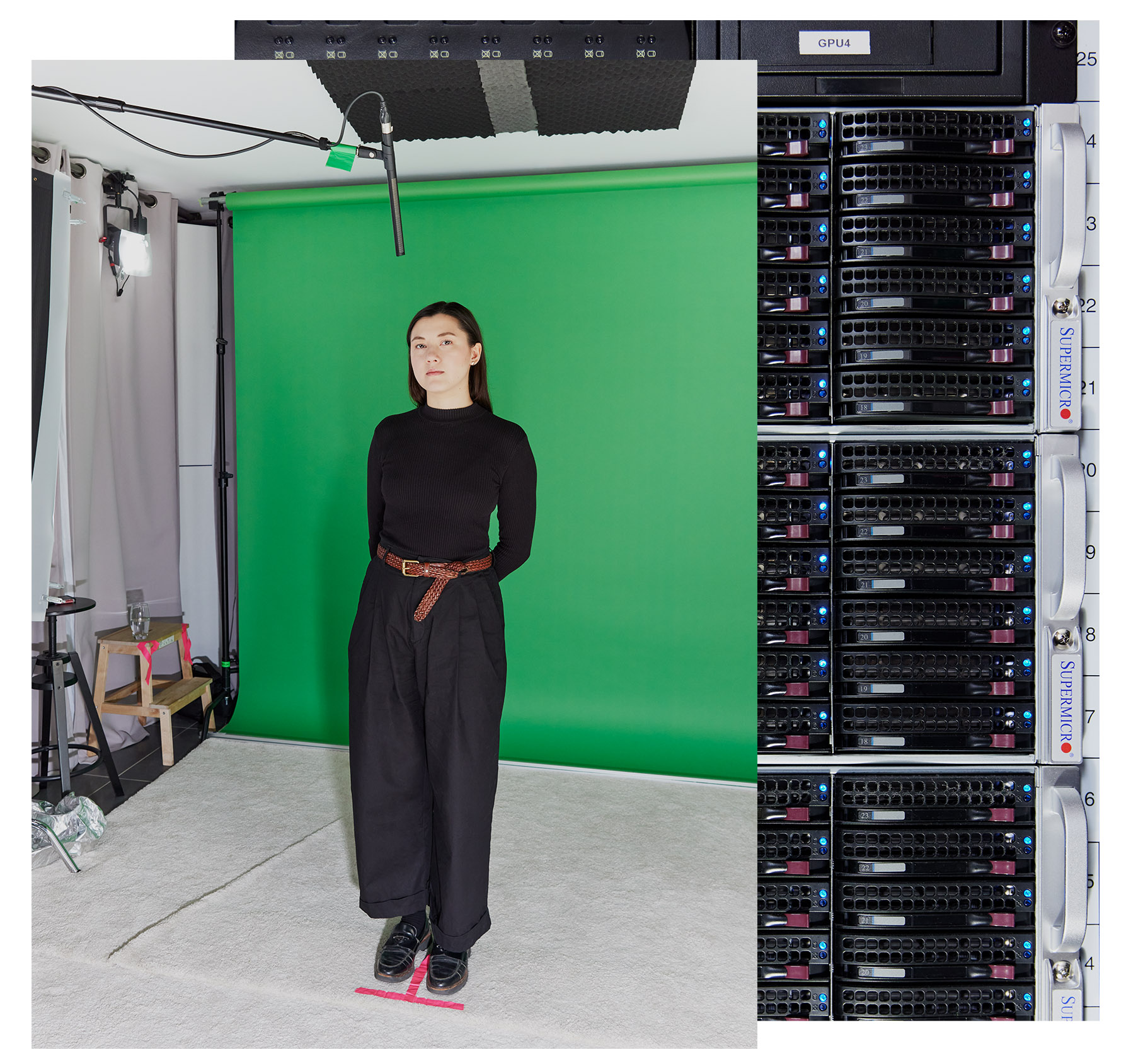

DAVID VINTINER

He then asks me to read a script for a fictitious YouTuber in different tones, directing me on the spectrum of emotions I should convey. First I'm supposed to read it in a neutral, informative way, then in an encouraging way, an annoyed and complain-y way, and finally an excited, convincing way.

“Hey, everyone, welcome back to Elevate Her with your host, Jess Mars. It's great to have you here. We're about to take on a topic that's pretty delicate and honestly hits close to home: dealing with criticism in our spiritual journey,” I read off the teleprompter, simultaneously trying to visualize ranting about something to my partner during the complain-y version. “No matter where you look, it feels like there's always a critical voice ready to chime in, doesn't it?”

Don’t be rubbish, don’t be rubbish, don’t be rubbish.

“That was really good. I was watching it and I was like, ‘Well, this is true. She's definitely complaining,’” Oshinyemi says, encouragingly. Next time, maybe add some judgment, he suggests.

We film several takes featuring different variations of the script. In some versions I'm allowed to move my hands around. In others, Oshinyemi asks me to hold a metal pin between my fingers as I do. This is to test the “edges” of the technology's capabilities when it comes to communicating with hands, Oshinyemi says.

Historically, making AI avatars look natural and matching mouth movements to speech has been a very difficult challenge, says David Barber, a professor of machine learning at University College London who is not involved in Synthesia's work. That is because the problem goes far beyond mouth movements; you have to think about eyebrows, all the muscles in the face, shoulder shrugs, and the numerous small movements that humans use to express themselves.

DAVID VINTINER

Synthesia has worked with actors to train its models since 2020, and their doubles make up the 225 stock avatars that are available for customers to animate with their own scripts. But to train its latest generation of avatars, Synthesia needed more data; it has spent the past year working with around 1,000 professional actors in London and New York. (Synthesia says it does not sell the data it collects, although it does release some of it for academic research purposes.)

The actors previously got paid every time their avatar was used, but now the company pays them an up-front fee to train the AI model. Synthesia uses their avatars for three years, at which point actors are asked if they want to renew their contracts. If so, they come into the studio to make a new avatar. If not, the company will delete their data. Synthesia's enterprise customers can also generate their own custom avatars by sending someone into the studio to do much of what I'm doing.