Databricks Unity Catalog (“UC”) provides a single unified governance solution for all of a company’s data and AI assets across clouds and data platforms. This blog digs deeper into the prior Unity Catalog Governance Value Levers blog to show how the technology itself specifically enables positive business outcomes through comprehensive data and AI monitoring, reporting, and lineage.

Overall challenges with traditional non-unified governance

The Unity Catalog Governance Value Levers blog discussed the “why” of the organizational importance of governance for information security, access control, usage monitoring, enacting guardrails, and obtaining “single source of truth” insights from data assets. These challenges compound as a company grows, and without Databricks UC, traditional governance solutions no longer adequately meet its needs.

The major challenges discussed included weaker compliance and fractured data privacy managed across multiple vendors; uncontrolled and siloed data and AI swamps; exponentially rising costs; and loss of opportunities, revenue, and collaboration.

How Databricks Unity Catalog Supports a Unified View, Monitoring, and Observability

So, how does this all work from a technical standpoint? UC manages all registered assets across the Databricks Data Intelligence Platform. These assets can be anything within BI, DW, data engineering, data streaming, data science, and ML. This governance model provides access controls, lineage, discovery, monitoring, auditing, and sharing. It also provides metadata management of files, tables, ML models, notebooks, and dashboards. UC gives one single view of your entire end-to-end information through the Databricks asset catalog, feature store and model registry, lineage capabilities, and metadata tagging for data classifications, as discussed below:

Unified view of the entire data estate

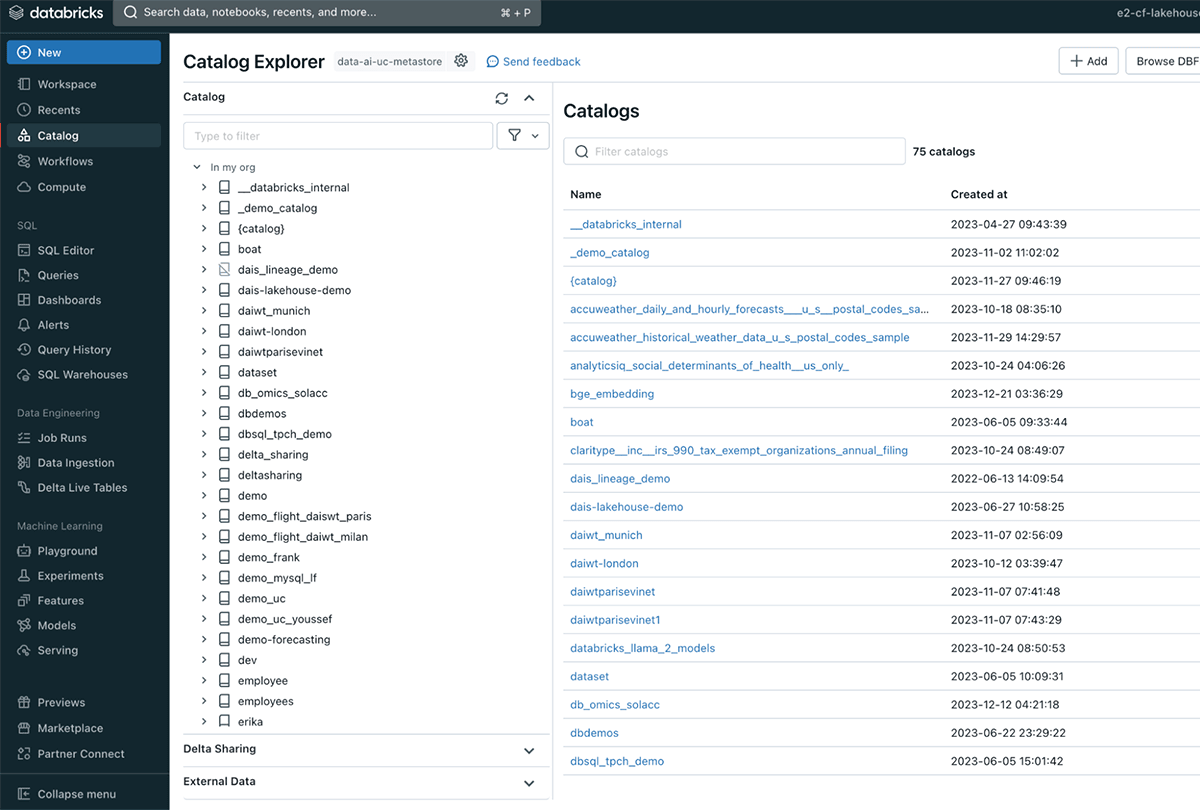

- Asset catalog: through system tables that contain metadata, you can see everything contained in your catalog, such as schemas, tables, columns, files, models, and more. If you are not familiar with volumes within Databricks, they are used for managing non-tabular datasets. Technically, they are logical volumes of storage to access files in any format: structured, semi-structured, and unstructured.

Catalog Explorer lets you discover and govern all your data and ML models

- Feature Store and Model Registry: define features used by data scientists in the centralized repository. This is helpful for consistent model training and inference across your entire AI workflow.

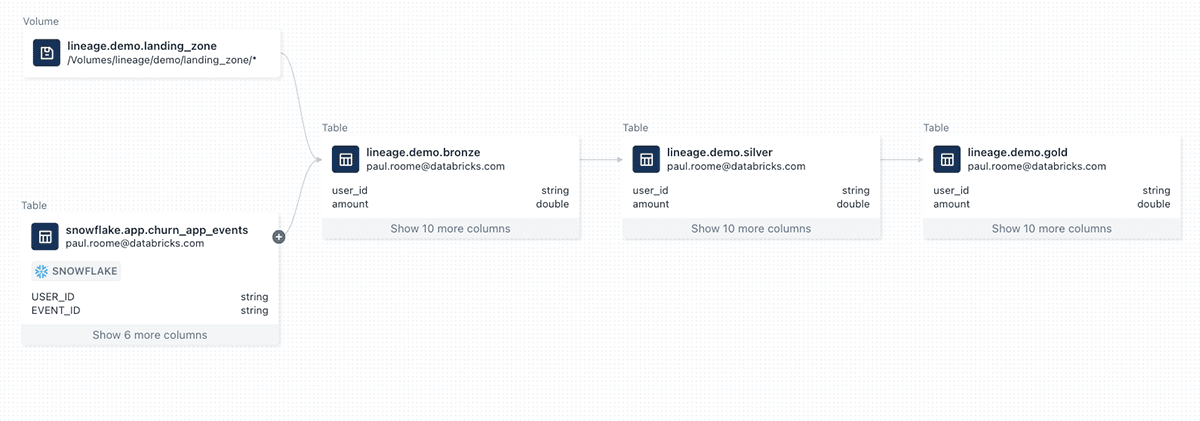

- Lineage capabilities: trust in your data is key for your business to take action in real life. End-to-end transparency into your data is needed for trust in your reports, models, and insights. UC makes this easy through lineage capabilities, providing insights on: What are the raw data sources? Who created the data and when? How was data merged and transformed? What is the traceability from the models back to the datasets they are trained on? Lineage shows end-to-end from data to model, at both table level and column level. You can even query across data sources such as Snowflake and benefit immediately:

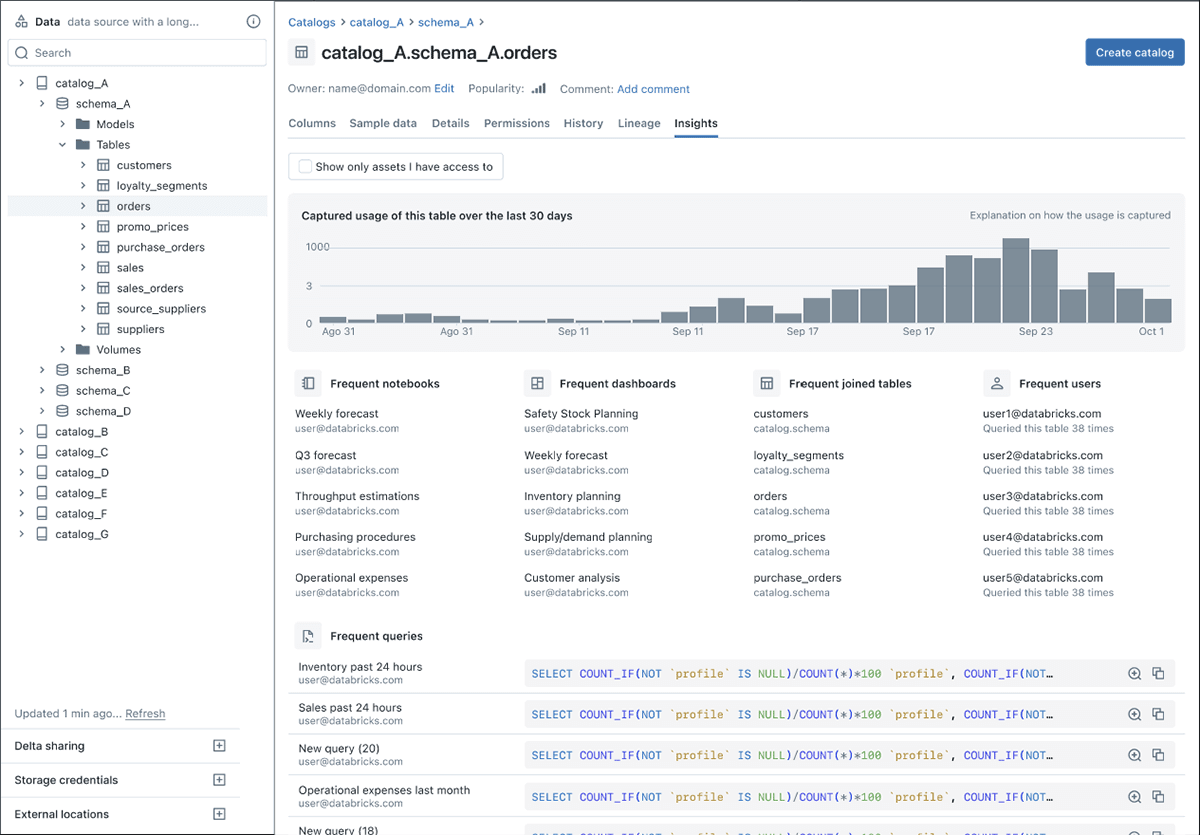

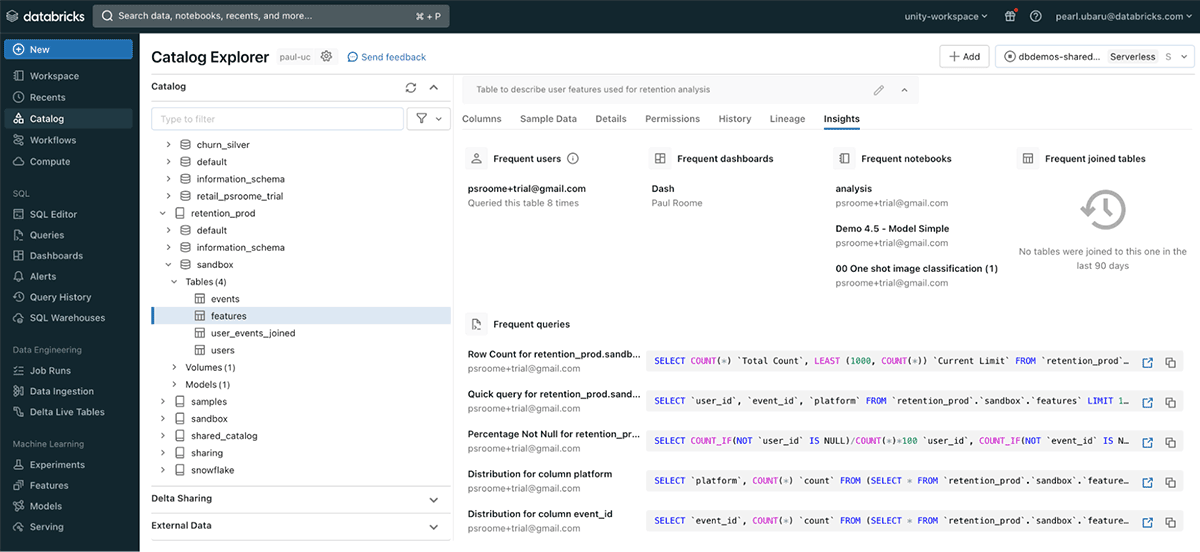

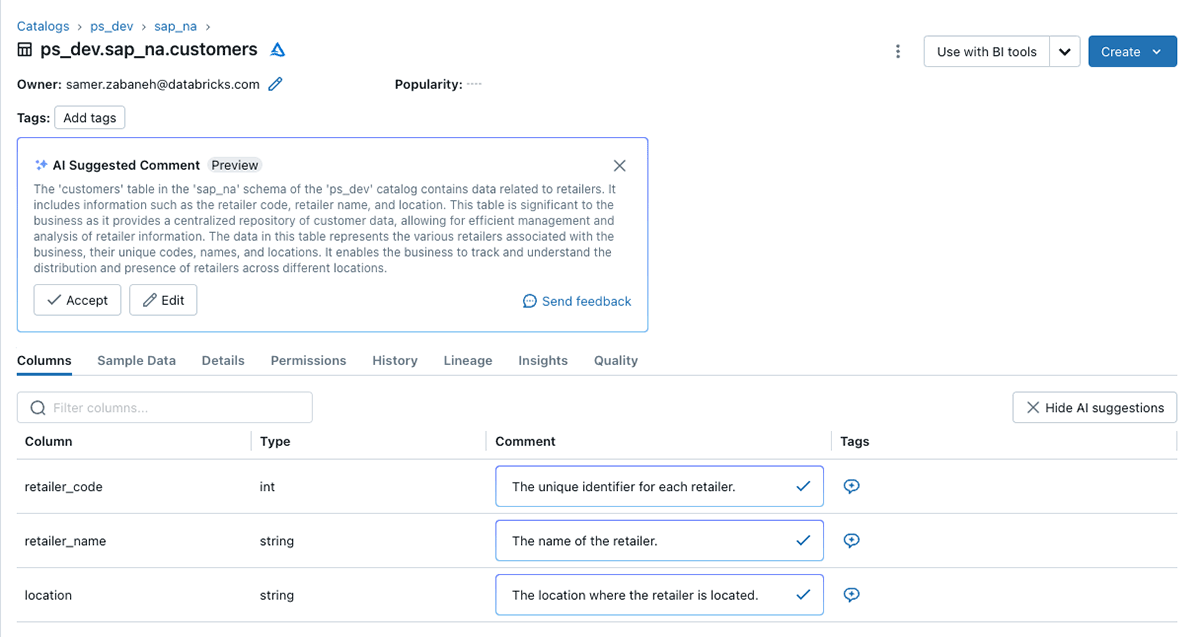

- Metadata tagging for data classifications: enrich your data and queries by providing contextual insights about your data assets. These descriptions at the column and table level can be manually entered, or automatically described with GenAI by Databricks Assistant. Below is an example of descriptions and quantifiable characteristics:

Having one unified view results in:

- Accelerated innovation: your insights are only as good as your data. Your analysis is only as good as the data you access. So, streamlining your data search drives faster and better generation of business insights, driving innovation.

- Cost reduction through centralized asset cataloging: lowers license costs (just one vendor solution versus needing many vendors), lowers usage fees, reduces time-to-market pains, and enables overall operational efficiencies.

- Easier discovery of and access to all data, by reducing data sprawl across multiple databases, data warehouses, object storage systems, and more.

Comprehensive data and AI monitoring and reporting

Databricks Lakehouse Monitoring allows teams to monitor their entire data pipelines, from data and features to ML models, without additional tools and complexity. Powered by Unity Catalog, it lets users ensure that their data and AI assets are high quality, accurate, and reliable through deep insight into the lineage of those assets. The single, unified approach to monitoring enabled by lakehouse architecture makes it simple to diagnose errors, perform root cause analysis, and find solutions.

How do you ensure trust in your data, ML models, and AI across your entire data pipeline in a single view regardless of where the data resides? Databricks Lakehouse Monitoring is the industry’s only comprehensive solution from data (regardless of where it resides) to insights. It accelerates the discovery of issues, helps determine root causes, and ultimately assists in recommending solutions.

UC provides Lakehouse Monitoring capabilities with both democratized dashboards and granular governance information that can be directly queried through system tables. The democratization of governance extends operational oversight and compliance to non-technical people, allowing a broad variety of teams to monitor all of their pipelines.

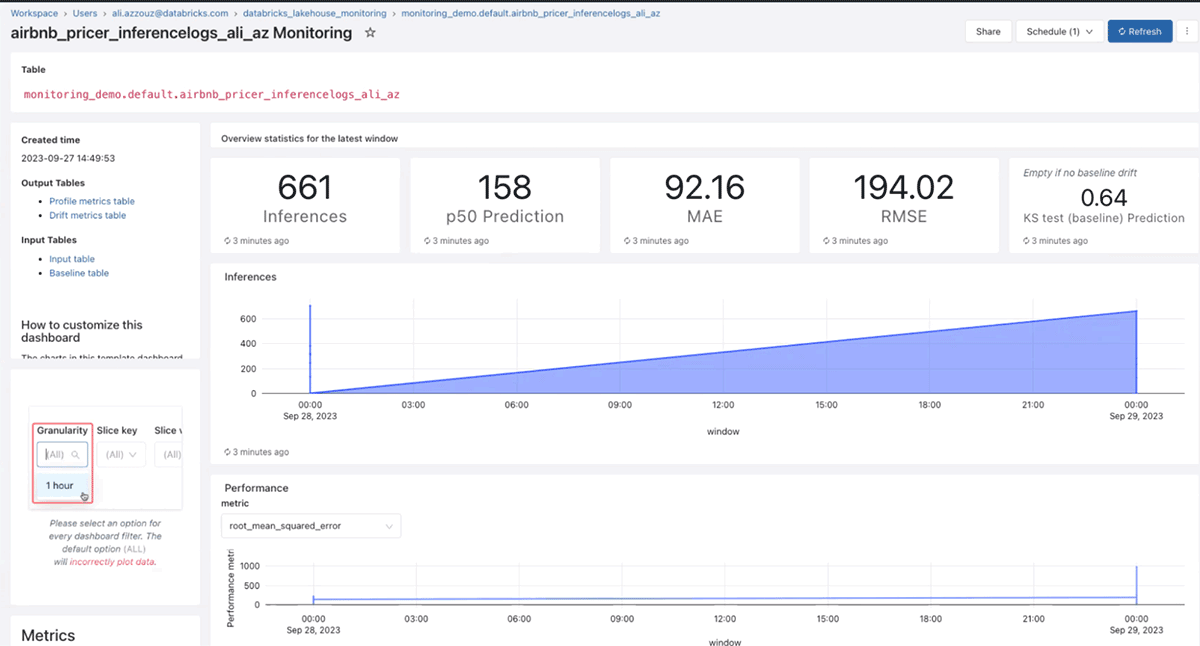

Below is a sample dashboard of the results of an ML model, including its accuracy over time:

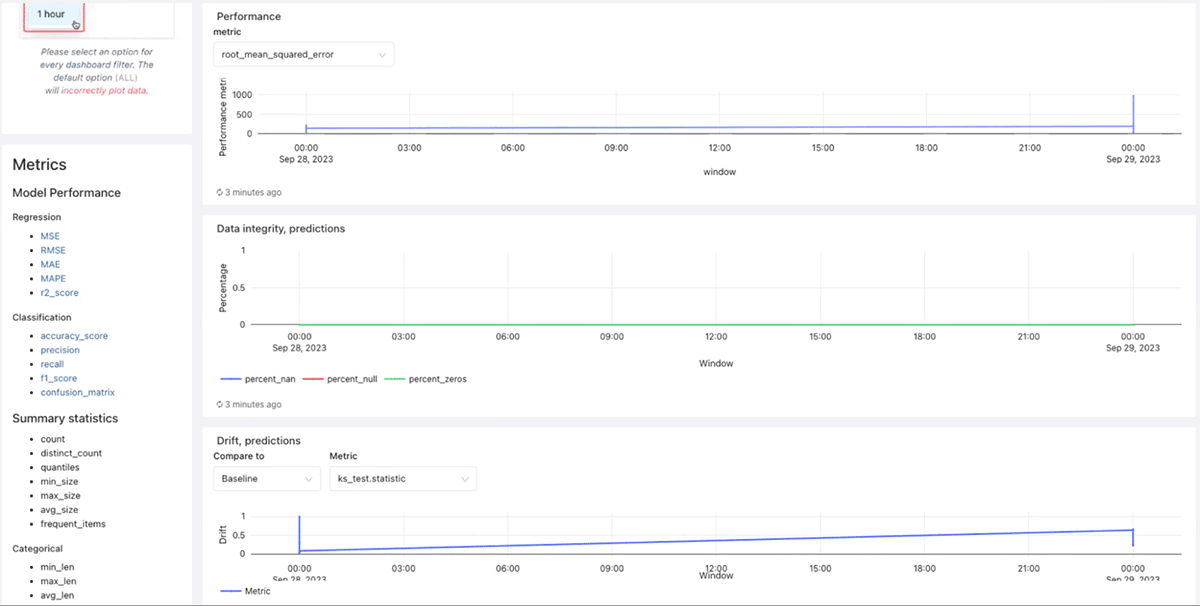

It further shows data integrity of predictions and data drift over time:

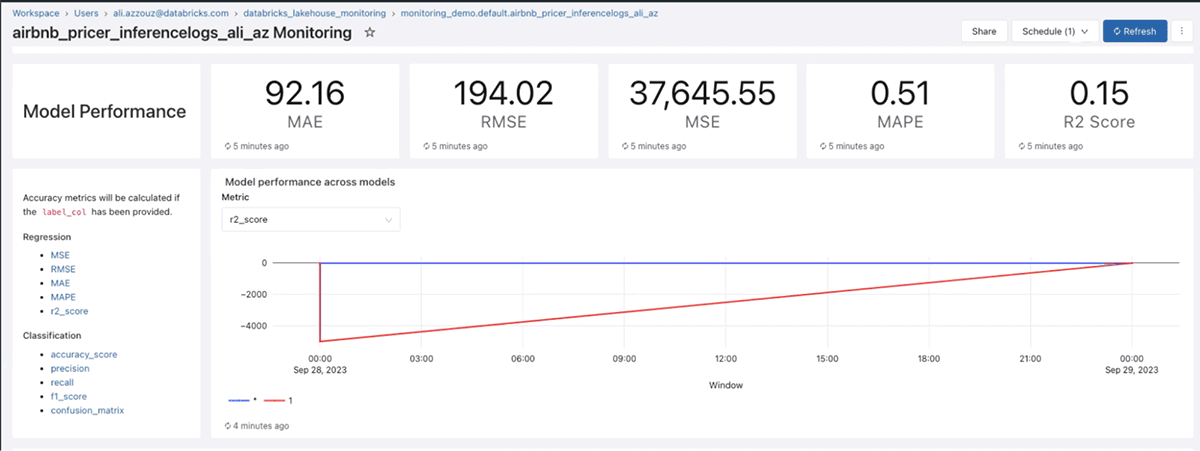

And model performance over time, according to a variety of ML metrics such as R2, RMSE, and MAPE:

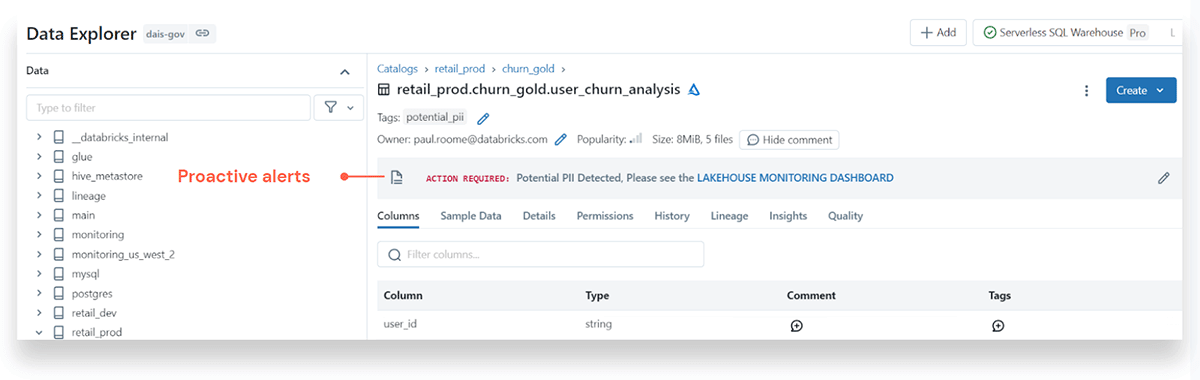
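
For readers less familiar with the three metrics named above, here is a minimal sketch of how R2, RMSE, and MAPE are conventionally computed from actual and predicted values. The function name `regression_metrics` and the sample numbers are illustrative assumptions; this is not how Lakehouse Monitoring computes its dashboard values internally.

```python
import math


def regression_metrics(actual, predicted):
    """Return R2, RMSE and MAPE (in percent) for two equal-length sequences.

    Illustrative helper only; the name and return shape are assumptions.
    """
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("actual and predicted must be non-empty and equal length")
    if any(a == 0 for a in actual):
        # MAPE divides by each actual value, so zeros make it undefined.
        raise ValueError("MAPE is undefined when an actual value is zero")

    n = len(actual)
    mean_actual = sum(actual) / n

    # Sum of squared residuals and total sum of squares.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all actual values are identical")

    return {
        "r2": 1 - ss_res / ss_tot,
        "rmse": math.sqrt(ss_res / n),
        "mape": 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n,
    }


if __name__ == "__main__":
    # Made-up values purely for demonstration.
    print(regression_metrics([100, 200, 300, 400], [110, 190, 310, 390]))
```

Tracking these values per time window is what lets a dashboard show whether model quality is holding steady or degrading.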

It is one thing to intentionally seek out ML model information when you are looking for answers, but it is a whole other level to get automated proactive alerts on errors, data drift, model failures, or quality issues. Below is an example alert for a potential PII (Personally Identifiable Information) data breach:

One more thing: you can assess the impact of issues, do a root cause analysis, and assess the downstream impact through Databricks’ powerful lineage capabilities, from table level to column level.
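
To make downstream impact assessment concrete, here is a minimal sketch that walks lineage edges breadth-first to list every asset fed by a given table. The edge list, the table names, and the helper `downstream_assets` are all hypothetical; in practice the source and target pairs would come from querying the lineage system tables rather than from a hard-coded list.

```python
from collections import deque

# Hypothetical lineage edges as (source table, target table) pairs.
LINEAGE_EDGES = [
    ("raw.sales.orders", "silver.sales.orders_clean"),
    ("silver.sales.orders_clean", "gold.sales.daily_revenue"),
    ("silver.sales.orders_clean", "ml.features.customer_spend"),
    ("ml.features.customer_spend", "ml.models.churn_training_set"),
    ("raw.crm.customers", "ml.features.customer_spend"),
]


def downstream_assets(edges, start):
    """Return every asset reachable from `start`, in breadth-first order."""
    # Build an adjacency map: source -> list of direct targets.
    children = {}
    for source, target in edges:
        children.setdefault(source, []).append(target)

    seen = {start}  # guards against cycles and duplicate visits
    ordered = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in seen:
                seen.add(child)
                ordered.append(child)
                queue.append(child)
    return ordered


if __name__ == "__main__":
    for asset in downstream_assets(LINEAGE_EDGES, "raw.sales.orders"):
        print(asset)
```

Reversing each edge before the walk gives the opposite direction, which is the root cause question: which upstream sources feed a table that looks wrong.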

System tables: metadata information for lakehouse observability and ensuring compliance

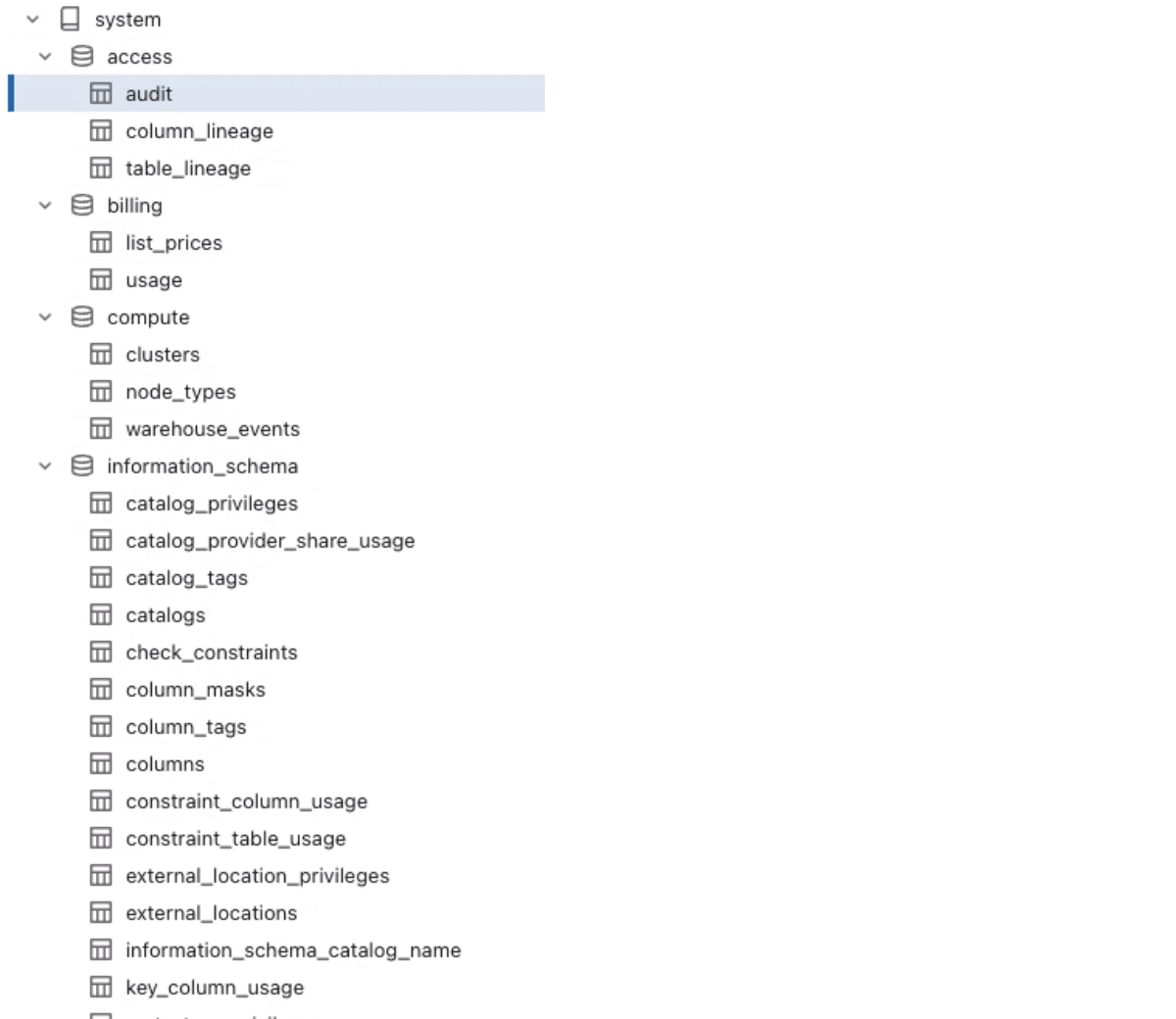

These underlying tables can be queried through SQL or activity dashboards to provide observability about every asset within the Databricks Data Intelligence Platform. Examples include which users have access to which data objects; billing tables that provide pricing and usage; compute tables that take cluster usage and warehouse events into consideration; and lineage information between columns and tables:

- Audit tables include information on a wide variety of UC events. UC captures an audit log of actions performed against the metastore, giving administrators access to details about who accessed a given dataset and the actions that they performed.

- Billing and historical pricing tables include records for all billable usage across the entire account; therefore you can view your account’s global usage from whichever region your workspace is in.

- Table lineage and column lineage tables are great because they allow you to programmatically query lineage data to fuel decision making and reports. Table lineage records each read-and-write event on a UC table or path, which might include job runs, notebook runs, and dashboards associated with the table. For column lineage, data is captured by reading the column.

- Node types tables capture the currently available node types with their basic hardware information, outlining the node type name, the number of vCPUs for the instance, and the number of GPUs and memory for the instance. Also in private preview are node_utilization metrics on how much usage each node is leveraging.

- Query history holds information on all SQL commands, I/O performance, and number of rows returned.

- Clusters table contains the full history of cluster configurations over time for all-purpose and job clusters.

- Predictive optimization tables are great because predictive optimization tunes your data layout for peak performance and cost efficiency. The tables track the operation history of optimized tables by providing the catalog name, schema name, table name, and operation metrics about compaction and vacuuming.
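
As a rough illustration of the kind of SQL question the audit tables answer (who accessed a given dataset, and what they did), the sketch below runs an aggregate query against a tiny in-memory SQLite table. SQLite is used here only so the example is runnable anywhere; the `audit` table, its four flattened columns, the action names, and the sample rows are simplified assumptions and do not mirror the real system table schema.

```python
import sqlite3

# Hypothetical, simplified audit events:
# (event_time, user_email, action_name, table_name)
SAMPLE_EVENTS = [
    ("2024-01-01T09:00:00", "ana@example.com", "READ", "main.sales.orders"),
    ("2024-01-01T09:05:00", "ana@example.com", "READ", "main.sales.orders"),
    ("2024-01-01T10:00:00", "ben@example.com", "READ", "main.sales.orders"),
    ("2024-01-02T11:00:00", "ben@example.com", "WRITE", "main.sales.orders"),
    ("2024-01-02T12:00:00", "cara@example.com", "READ", "main.sales.returns"),
]

# Who touched a given table, which action they took, and how often.
ACCESS_SUMMARY_SQL = """
SELECT user_email, action_name, COUNT(*) AS events
FROM audit
WHERE table_name = ?
GROUP BY user_email, action_name
ORDER BY events DESC, user_email, action_name
"""


def build_audit_db(events):
    """Create an in-memory database holding the stand-in audit table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE audit ("
        "event_time TEXT, user_email TEXT, action_name TEXT, table_name TEXT)"
    )
    conn.executemany("INSERT INTO audit VALUES (?, ?, ?, ?)", events)
    return conn


def access_summary(conn, table_name):
    """Return (user, action, count) rows for one table, most frequent first."""
    return conn.execute(ACCESS_SUMMARY_SQL, (table_name,)).fetchall()


if __name__ == "__main__":
    connection = build_audit_db(SAMPLE_EVENTS)
    for row in access_summary(connection, "main.sales.orders"):
        print(row)
```

The same shape of query (filter on an object, group by user and action) carries over to a real audit table once the column names are adjusted to its actual schema.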

From Catalog Explorer, here are just a few of the system tables, any of which can be viewed for more details:

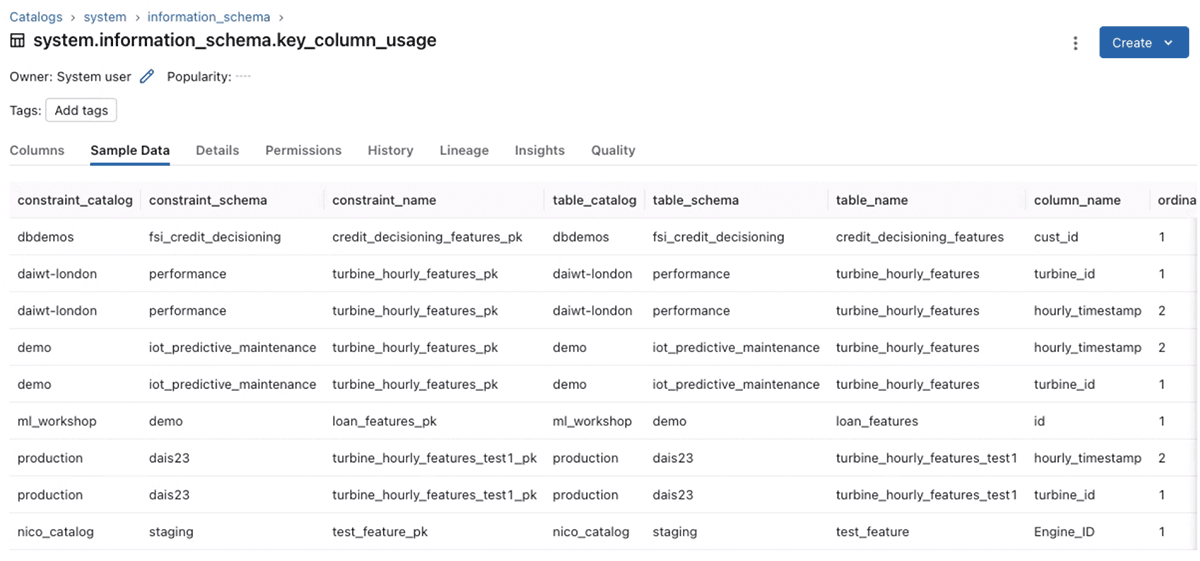

For example, drilling down on the “key_column_usage” table, you can see precisely how tables relate to each other via their primary key:

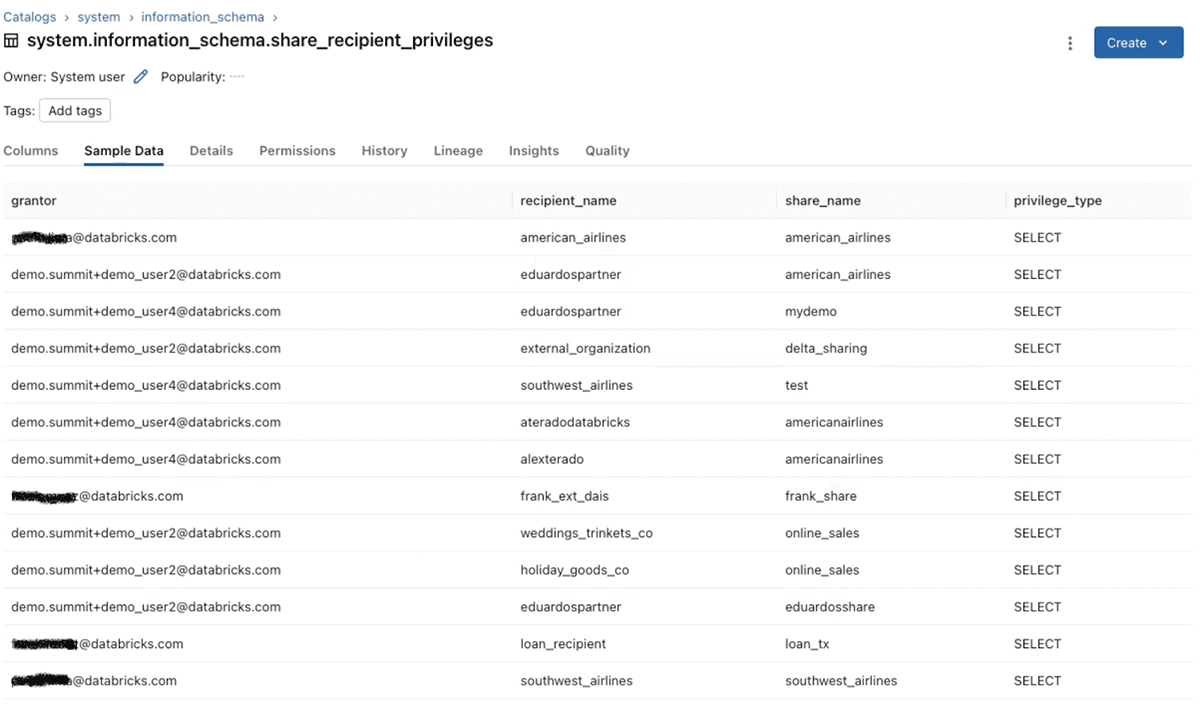

Another example is the “share_recipient_privileges” table, to see who granted which shares to whom:

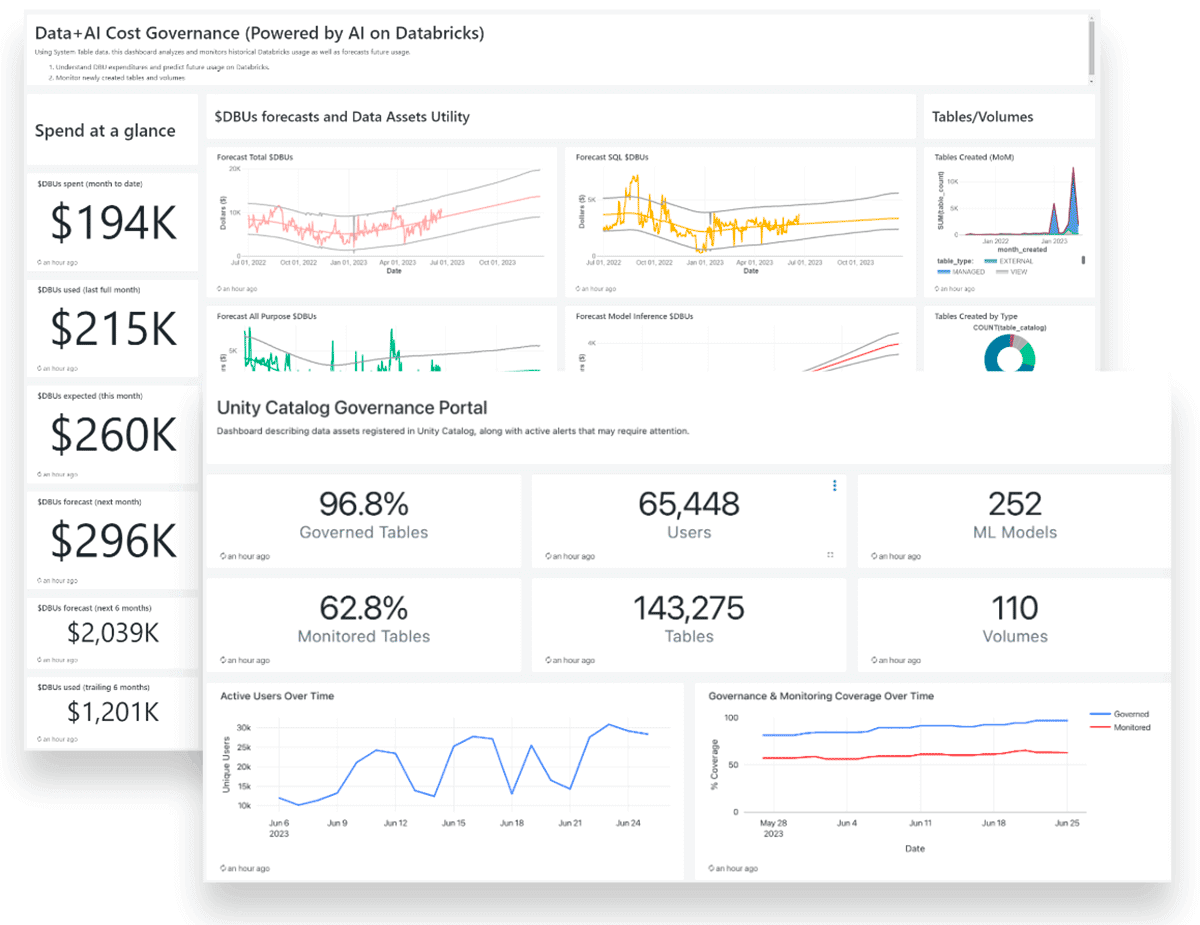

An example dashboard built on these tables can show the number of users, tables, and ML models, the percentage of tables that are monitored or not, dollars spent on Databricks DBUs over time, and much more.
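
One of those dashboard figures, dollars spent on DBUs over time, boils down to multiplying usage by price and summing per day. Here is a minimal sketch of that arithmetic. The SKU names, prices, and usage rows are made up, and the single fixed price per SKU is a simplifying assumption, since historical pricing means a real calculation would match each usage record to the price in effect at that time.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical list prices in dollars per DBU, keyed by SKU name.
LIST_PRICES = {
    "JOBS_COMPUTE": Decimal("0.15"),
    "SQL_COMPUTE": Decimal("0.22"),
}

# Hypothetical usage records: (usage_date, sku_name, dbus_consumed)
USAGE = [
    ("2024-03-01", "JOBS_COMPUTE", Decimal("100")),
    ("2024-03-01", "SQL_COMPUTE", Decimal("50")),
    ("2024-03-02", "JOBS_COMPUTE", Decimal("200")),
]


def daily_spend(usage, prices):
    """Return a dict of usage_date -> total dollars, sorted by date."""
    totals = defaultdict(Decimal)  # Decimal() starts each day at 0
    for usage_date, sku_name, dbus in usage:
        # A missing SKU raises KeyError rather than being silently priced at 0.
        totals[usage_date] += dbus * prices[sku_name]
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    for day, dollars in daily_spend(USAGE, LIST_PRICES).items():
        print(day, dollars)
```

Decimal is used instead of float so that currency totals do not pick up binary rounding noise.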

What does having a comprehensive data and AI monitoring and reporting tool result in?

- Reduced risk of non-compliance: better monitoring of internal policies and security breach potential results in a safeguarded reputation and improved data and AI trust from employees and partners.

- Improved integrity and trustworthiness of data and AI with “one source of truth”, anomaly detection, and reliability metrics.

Value Levers with Databricks Unity Catalog

If you are looking to learn more about the value Unity Catalog brings to businesses, the prior Unity Catalog Governance Value Levers blog went into detail: mitigating risk around compliance; reducing platform complexity and costs; accelerating innovation; facilitating better internal and external collaboration; and monetizing the value of data.

Conclusion

Governance is key to mitigating risks, ensuring compliance, accelerating innovation, and reducing costs. Databricks Unity Catalog is unique in the market, providing a single unified governance solution for all of a company’s data and AI across clouds and data platforms.

The Databricks UC architecture makes governance seamless: a unified view and discovery of all data assets, one tool for access management, and one tool for auditing for enhanced data and AI security, ultimately enabling platform-independent collaboration that unlocks new business value.

Getting started is easy: UC comes enabled by default with Databricks if you are a new customer! Also, if you are on premium or enterprise workspaces, there are no additional costs.