Foundation models are massive deep-learning models that have been pretrained on an enormous amount of general-purpose, unlabeled data. They can be applied to a variety of tasks, like generating images or answering customer questions.

But these models, which serve as the backbone for powerful artificial intelligence tools like ChatGPT and DALL-E, can offer up incorrect or misleading information. In a safety-critical situation, such as a pedestrian approaching a self-driving car, these mistakes could have serious consequences.

To help prevent such mistakes, researchers from MIT and the MIT-IBM Watson AI Lab developed a technique to estimate the reliability of foundation models before they are deployed to a specific task.

They do this by training a set of foundation models that are slightly different from one another. Then they use their algorithm to assess the consistency of the representations each model learns about the same test data point. If the representations are consistent, it means the model is reliable.

When they compared their technique to state-of-the-art baseline methods, it was better at capturing the reliability of foundation models on a variety of classification tasks.

Someone could use this technique to decide if a model should be applied in a certain setting, without the need to test it on a real-world dataset. This could be especially useful when datasets may not be accessible due to privacy concerns, like in health care settings. In addition, the technique could be used to rank models based on reliability scores, enabling a user to select the best one for their task.
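As a rough illustration of the ranking use case, the short Python sketch below averages per-input reliability scores for each candidate model and sorts the models by that average. The model names, the scores, and the choice of a simple mean are all hypothetical; the article does not say how the researchers aggregate scores across inputs.

```python
from statistics import mean


def rank_models(per_input_scores: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Rank candidate models by their mean reliability score.

    `per_input_scores` maps a (hypothetical) model name to one reliability
    score per input the user cares about; higher means more reliable.
    Aggregating with a plain mean is an illustrative assumption.
    """
    averaged = {name: mean(scores) for name, scores in per_input_scores.items()}
    # Sort from most to least reliable.
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only, not results from the paper.
    scores = {
        "model_a": [0.91, 0.85, 0.78],
        "model_b": [0.95, 0.60, 0.70],
        "model_c": [0.88, 0.90, 0.93],
    }
    for name, score in rank_models(scores):
        print(f"{name}: {score:.3f}")
```

Here `model_b` has the single best score on one input but ranks last on average, which is the kind of trade-off a user would weigh when picking a model for a whole task.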

“All models can be wrong, but models that know when they are wrong are more useful. The problem of quantifying uncertainty or reliability gets harder for these foundation models because their abstract representations are difficult to compare. Our method allows you to quantify how reliable a representation model is for any given input data,” says senior author Navid Azizan, the Esther and Harold E. Edgerton Assistant Professor in the MIT Department of Mechanical Engineering and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

He is joined on a paper about the work by lead author Young-Jin Park, a LIDS graduate student; Hao Wang, a research scientist at the MIT-IBM Watson AI Lab; and Shervin Ardeshir, a senior research scientist at Netflix. The paper will be presented at the Conference on Uncertainty in Artificial Intelligence.

Counting the consensus

Traditional machine-learning models are trained to perform a specific task. These models typically make a concrete prediction based on an input. For instance, the model might tell you whether a certain image contains a cat or a dog. In this case, assessing reliability could simply be a matter of looking at the final prediction to see if the model is right.

But foundation models are different. The model is pretrained using general data, in a setting where its creators don’t know all the downstream tasks it will be applied to. Users adapt it to their specific tasks after it has already been trained.

Unlike traditional machine-learning models, foundation models don’t give concrete outputs like “cat” or “dog” labels. Instead, they generate an abstract representation based on an input data point.

To assess the reliability of a foundation model, the researchers used an ensemble approach, training several models that share many properties but are slightly different from one another.

“Our idea is like counting the consensus. If all these foundation models are giving consistent representations for any data in our dataset, then we can say this model is reliable,” Park says.

But they ran into a problem: How could they compare abstract representations?

“These models just output a vector, comprised of some numbers, so we can’t compare them easily,” he adds.

They solved this problem using an idea called neighborhood consistency.

For their approach, the researchers prepare a set of reliable reference points to test on the ensemble of models. Then, for each model, they check the reference points located near that model’s representation of the test point.

By looking at the consistency of neighboring points, they can estimate the reliability of the models.
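A minimal sketch of the neighborhood idea, under simplifying assumptions: each ensemble member has already embedded the test point and the same set of reference points, neighbors are found by cosine similarity, and agreement is measured as the average pairwise Jaccard overlap of each model’s k nearest reference points. This scoring rule is an illustrative stand-in rather than the formula from the paper, and the function names are hypothetical.

```python
import math
from itertools import combinations

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def nearest_refs(test_vec: Vector, ref_vecs: list[Vector], k: int) -> set[int]:
    """Indices of the k reference points most similar to the test point."""
    ranked = sorted(
        range(len(ref_vecs)),
        key=lambda j: cosine(test_vec, ref_vecs[j]),
        reverse=True,
    )
    return set(ranked[:k])


def neighborhood_consistency(
    test_embs: list[Vector], ref_embs: list[list[Vector]], k: int = 3
) -> float:
    """Average pairwise Jaccard overlap of each model's k-nearest reference sets.

    test_embs[m] is model m's embedding of the test point and ref_embs[m][j] is
    model m's embedding of reference point j. Needs at least two models.
    Returns 1.0 when every model picks exactly the same neighbors.
    """
    if len(test_embs) < 2:
        raise ValueError("need at least two models to measure agreement")
    neighbor_sets = [nearest_refs(t, refs, k) for t, refs in zip(test_embs, ref_embs)]
    overlaps = [len(a & b) / len(a | b) for a, b in combinations(neighbor_sets, 2)]
    return sum(overlaps) / len(overlaps)


if __name__ == "__main__":
    # Made-up 2-D embeddings of four reference points (reused by all three models for brevity).
    refs = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    # Two models place the test point near references 0 and 1; the third disagrees.
    tests = [[1.0, 0.1], [0.9, 0.2], [-1.0, -0.1]]
    print(neighborhood_consistency(tests, [refs, refs, refs], k=2))
```

In the demo, only one of the three model pairs agrees on the neighbors, so the score is 1/3. Because the score compares which reference points surround the test point rather than the raw numbers in each vector, it does not require the models’ coordinates to mean the same thing.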

Aligning the representations

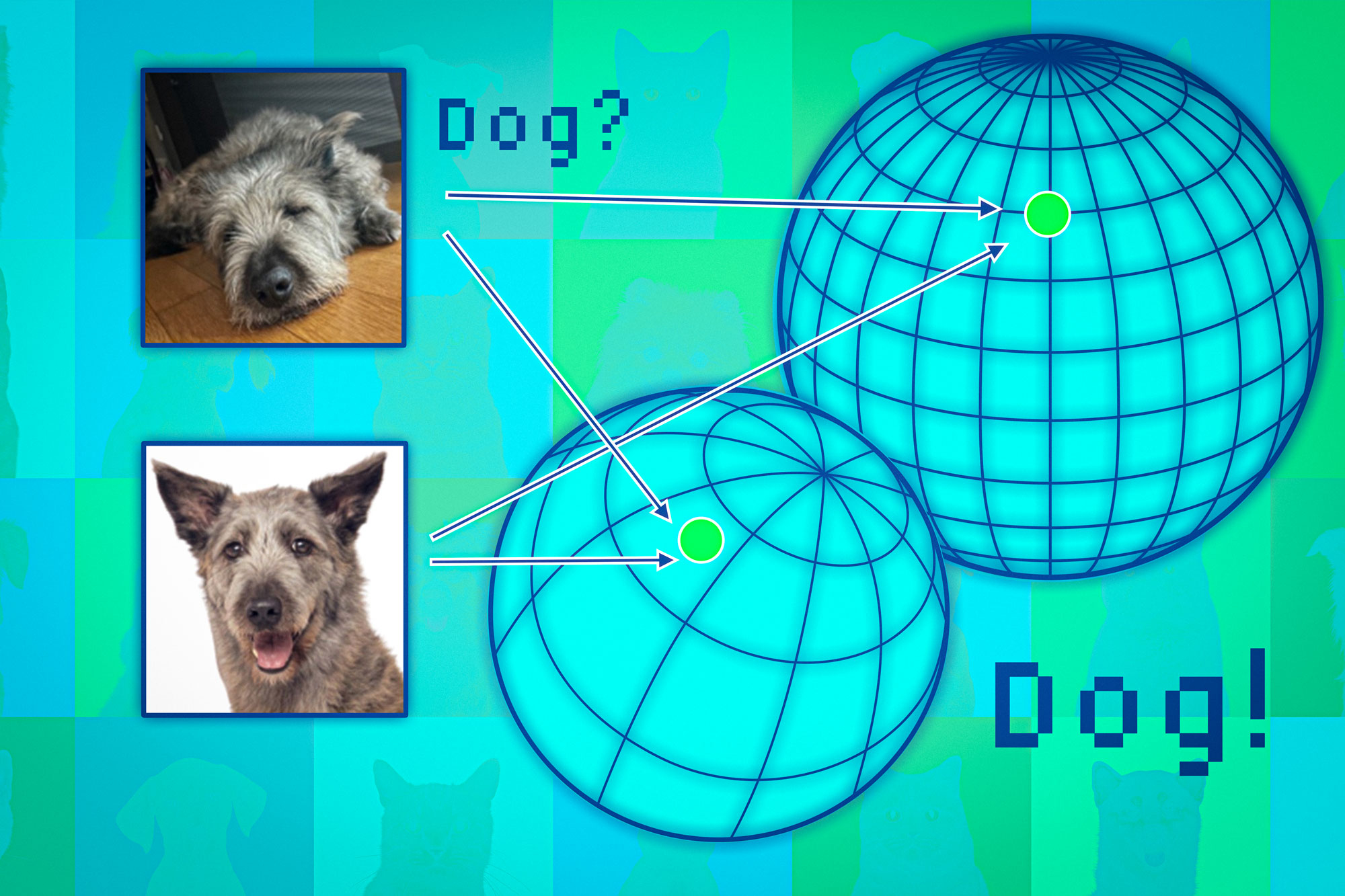

Foundation models map data points in what is known as a representation space. One way to think about this space is as a sphere. Each model maps similar data points to the same part of its sphere, so images of cats go in one place and images of dogs go in another.

But each model would map animals differently in its own sphere, so while cats may be grouped near the South Pole of one sphere, another model could map cats somewhere in the Northern Hemisphere.

The researchers use the neighboring points like anchors to align these spheres so they can make the representations comparable. If a data point’s neighbors are consistent across multiple representations, then one should be confident about the reliability of the model’s output for that point.
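The toy example below shows why anchors help. It assumes, purely for illustration, that two models’ 2-D representation spaces differ by a 180-degree rotation, so a cat image near the “South Pole” in one model lands in the north in the other. The raw vectors for the same image then point in opposite directions, yet the ordering of the shared anchor points around that image is identical in both models. Real foundation models differ in far more complicated ways; all values and names here are made up.

```python
import math

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def rotate(v: Vector, theta: float) -> Vector:
    """Rotate a 2-D vector counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]


def anchor_ranking(test_vec: Vector, anchors: list[Vector]) -> list[int]:
    """Anchor indices ordered from most to least similar to the test point."""
    return sorted(
        range(len(anchors)),
        key=lambda j: cosine(test_vec, anchors[j]),
        reverse=True,
    )


# Model A: made-up anchors for "cat", "dog", and "car", plus a cat image near the "South Pole".
anchors_a = [[0.0, -1.0], [1.0, 0.0], [0.0, 1.0]]
test_a = [0.1, -1.0]

# Model B (assumed): the same layout rotated 180 degrees, so cats land up north.
anchors_b = [rotate(a, math.pi) for a in anchors_a]
test_b = rotate(test_a, math.pi)

if __name__ == "__main__":
    print("raw cosine across models:", cosine(test_a, test_b))
    print("model A anchor order:", anchor_ranking(test_a, anchors_a))
    print("model B anchor order:", anchor_ranking(test_b, anchors_b))
```

Comparing the two models’ vectors directly suggests they completely disagree (a cosine near -1), while comparing each image’s position relative to its anchors shows they agree. That is the intuition behind using neighbors as a common frame of reference.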

When they tested this approach on a wide range of classification tasks, they found that it was much more consistent than baselines. Plus, it wasn’t tripped up by challenging test points that caused other methods to fail.

Moreover, their approach can be used to assess reliability for any input data, so one could evaluate how well a model works for a particular type of individual, such as a patient with certain characteristics.

“Even if the models all have average performance overall, from an individual point of view, you’d prefer the one that works best for that individual,” Wang says.

However, one limitation comes from the fact that they must train an ensemble of large foundation models, which is computationally expensive. In the future, they plan to find more efficient ways to build multiple models, perhaps by using small perturbations of a single model.
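One way to read “small perturbations of a single model” is to copy a trained model’s weights several times and add a little random noise to each copy. The sketch below does this for a toy linear encoder standing in for a foundation model; the encoder, the Gaussian noise, and the `noise_std` value are assumptions for illustration, and the article does not say which kind of perturbation the researchers intend to use.

```python
import random

Matrix = list[list[float]]


def encode(weights: Matrix, x: list[float]) -> list[float]:
    """Toy linear 'encoder' standing in for a foundation model: returns weights @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def perturbed_ensemble(
    weights: Matrix, n_members: int, noise_std: float, seed: int = 0
) -> list[Matrix]:
    """Make ensemble members by adding independent Gaussian noise to every weight.

    noise_std controls how "slightly different" the members are; its value is
    a hypothetical choice, not one taken from the research.
    """
    rng = random.Random(seed)  # seeded so the ensemble is reproducible
    return [
        [[w + rng.gauss(0.0, noise_std) for w in row] for row in weights]
        for _ in range(n_members)
    ]


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # made-up "trained" weights
    members = perturbed_ensemble(base, n_members=4, noise_std=0.01, seed=42)
    x = [2.0, 4.0]
    print("base embedding:", encode(base, x))
    for i, member in enumerate(members):
        print(f"member {i}:", [round(v, 3) for v in encode(member, x)])
```

Only one model has to be trained in this setup, which is where the computational savings would come from; each perturbed copy still produces its own embedding of every input, so the copies can be compared just like separately trained ensemble members.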

This work is funded, in part, by the MIT-IBM Watson AI Lab, MathWorks, and Amazon.